license: apache-2.0

tags:

- Text-to-Image

- ControlNet

- Diffusers

- Stable Diffusion

pipeline_tag: text-to-image

datasets:

- fka/awesome-chatgpt-prompts

language:

- as

metrics:

- character

base_model:

- openai/whisper-large-v3-turbo

new_version: stabilityai/stable-diffusion-3.5-large

library_name: asteroid

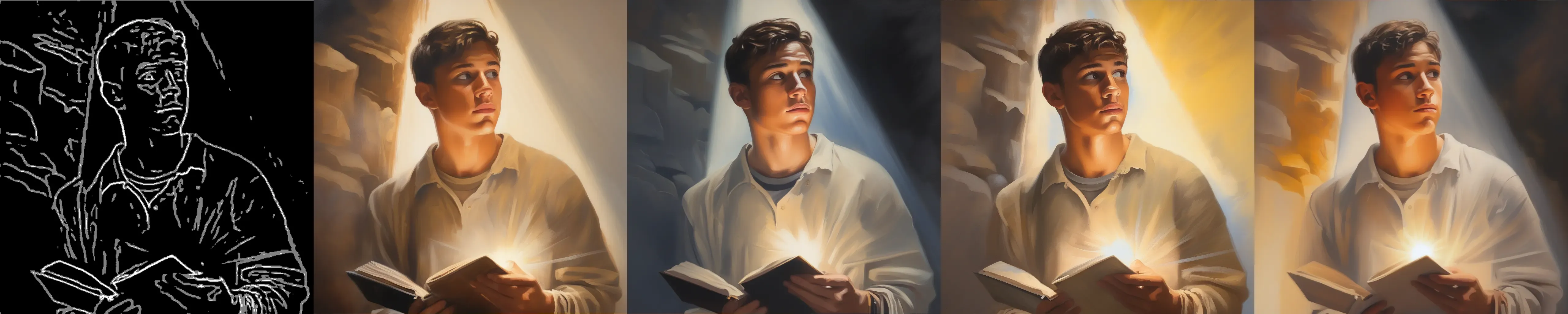

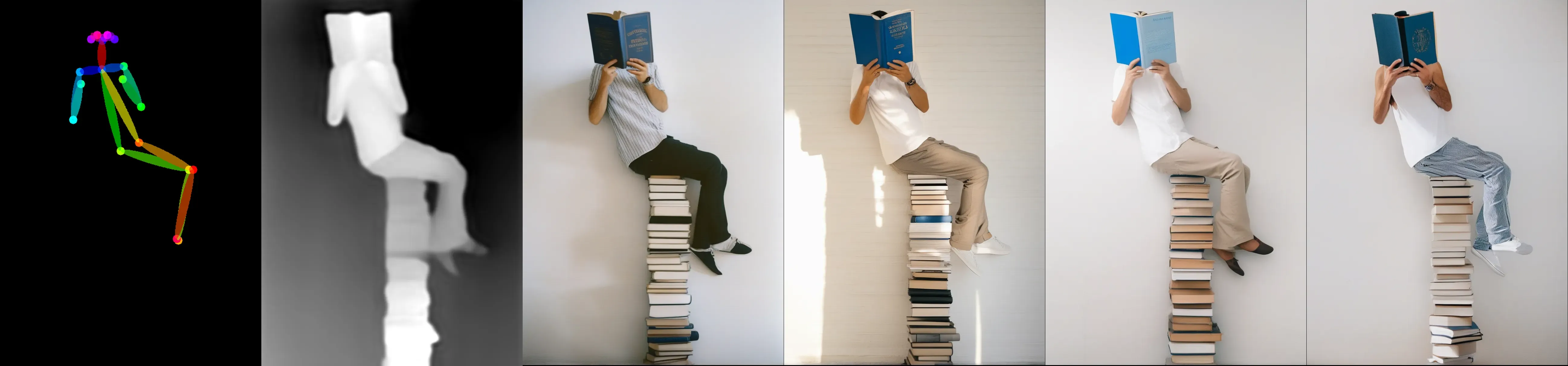

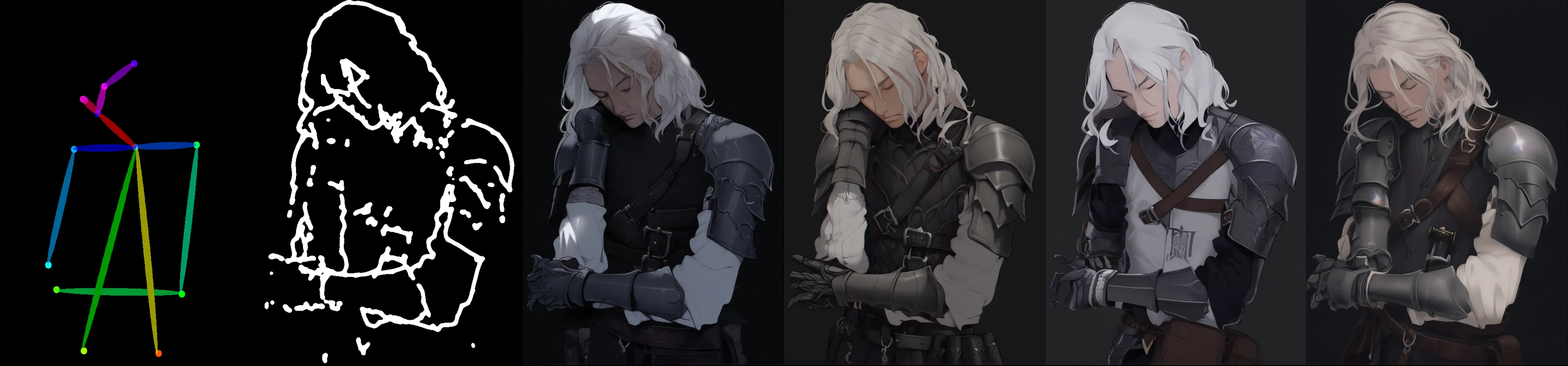

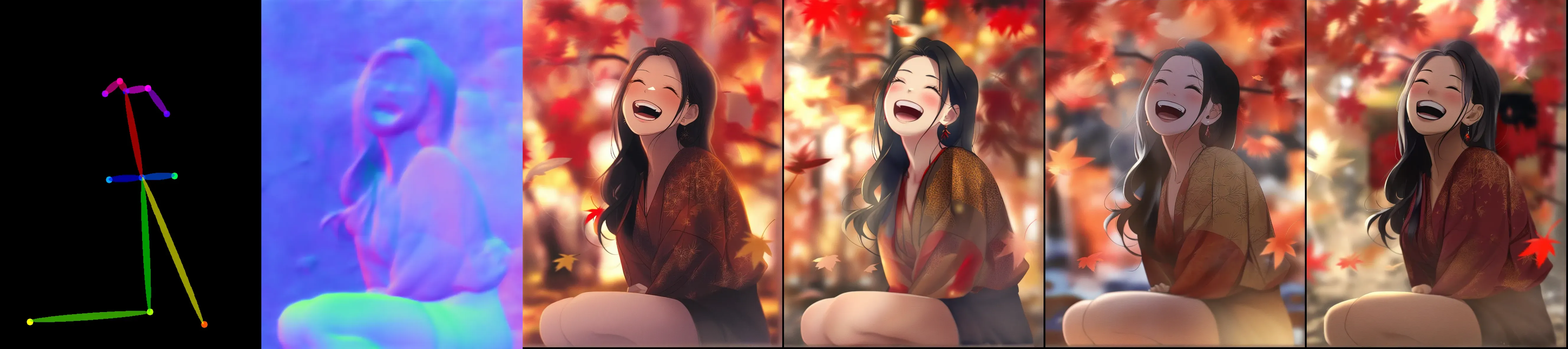

ControlNet++: All-in-one ControlNet for image generation and editing!

The ProMax model has been released! It offers 12 control types and 5 advanced editing features. Give it a try!

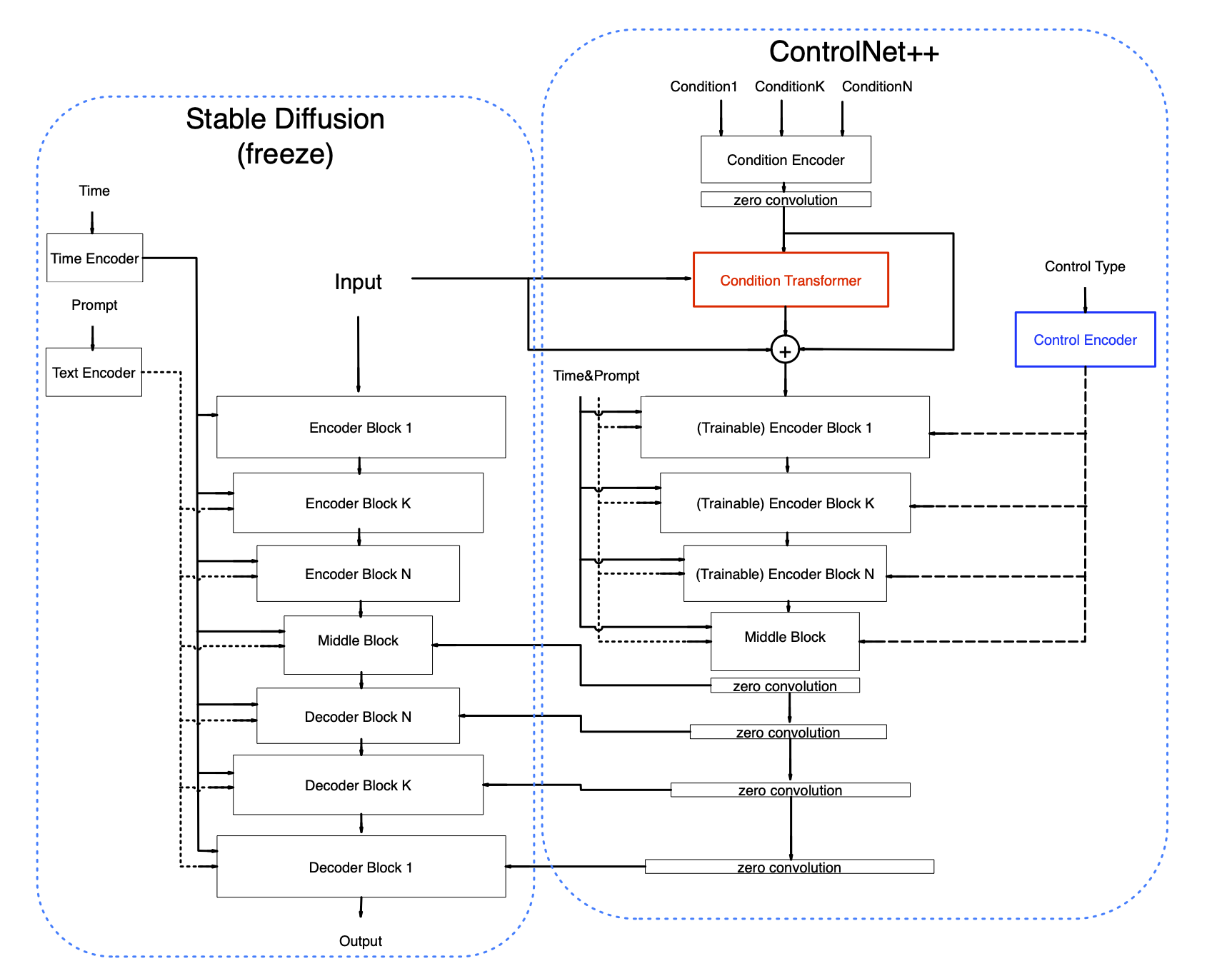

Network Architecture

Advantages of the model

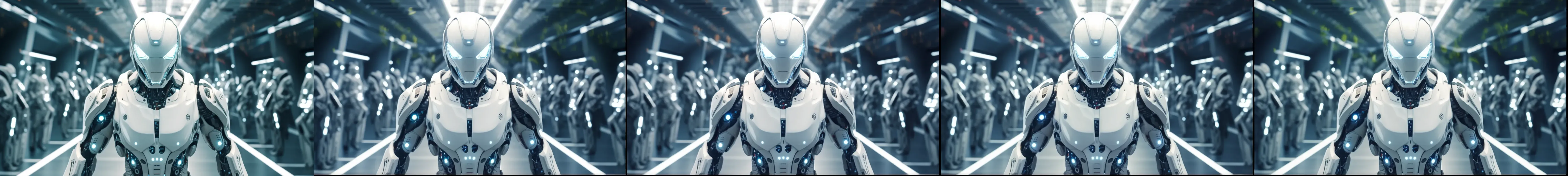

- Uses bucket training, as NovelAI does, so it can generate high-resolution images at any aspect ratio.

- Trained on a large amount of high-quality data (over 10,000,000 images) covering a diverse range of situations.

- Uses re-captioned prompts, as DALL-E 3 does: CogVLM generates detailed descriptions, which gives good prompt-following ability.

- Uses many useful training tricks, including but not limited to data augmentation, multiple losses, and multi-resolution training.

- Uses almost the same number of parameters as the original ControlNet, with no obvious increase in network parameters or computation.

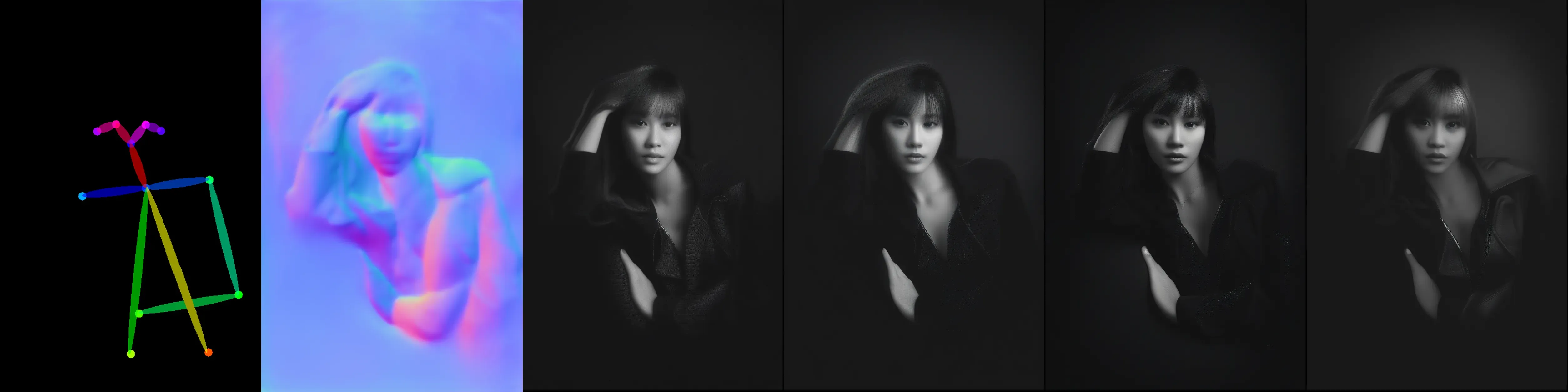

- Supports 10+ control conditions, with no obvious performance drop on any single condition compared with training it independently.

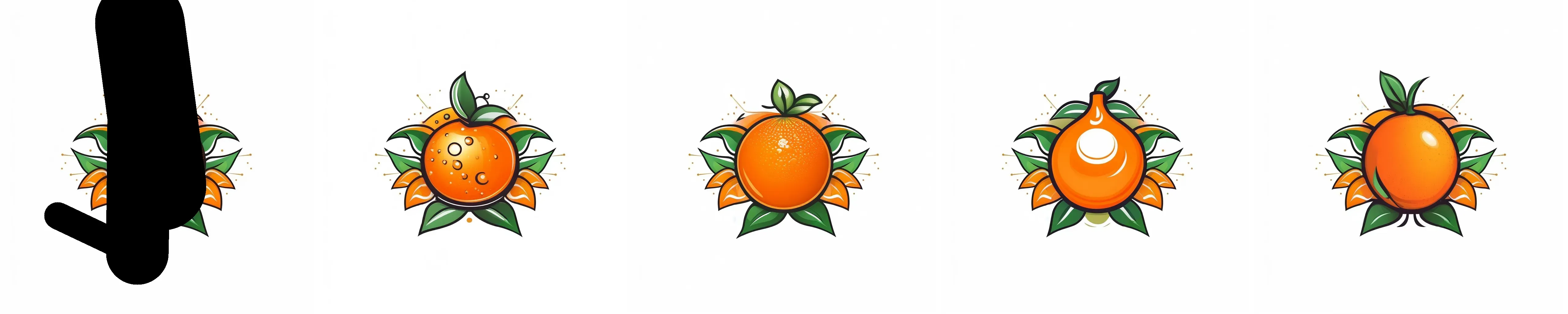

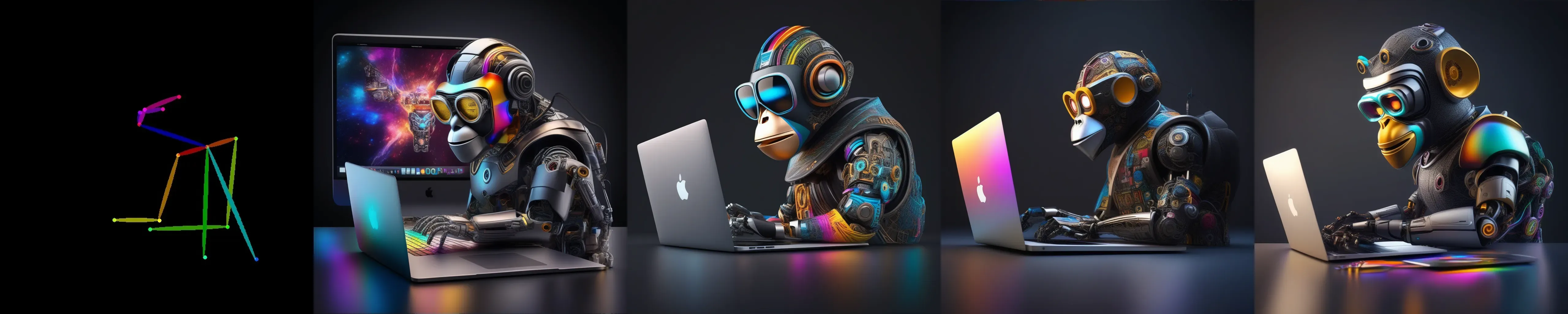

- Supports multi-condition generation. Condition fusion is learned during training, so there is no need to set hyperparameters or design prompts.

- Compatible with other open-source SDXL models, such as BluePencilXL and CounterfeitXL, and with other LoRA models.
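The bucket training mentioned in the list above can be illustrated with a small sketch. This is not the training code used for this model; it only shows the general idea of aspect-ratio bucketing, where each image is assigned to a predefined resolution whose aspect ratio is closest to its own, so that a batch can be drawn from one bucket. The function names, the 1024x1024 pixel budget, the 64-pixel step, and the side limits are all assumptions chosen for illustration.

```python
import math

def make_buckets(target_pixels=1024 * 1024, step=64, min_side=512, max_side=2048):
    """Enumerate (width, height) buckets whose area stays within target_pixels.

    All parameters are illustrative assumptions, not values from this model.
    """
    buckets = set()
    for width in range(min_side, max_side + 1, step):
        # Largest height (a multiple of `step`) that keeps the area within budget.
        height = (target_pixels // width) // step * step
        if min_side <= height <= max_side:
            buckets.add((width, height))
            buckets.add((height, width))  # also keep the transposed orientation
    return sorted(buckets)

def assign_bucket(width, height, buckets):
    """Pick the bucket whose aspect ratio is closest (in log space) to the image's."""
    log_ratio = math.log(width / height)
    return min(buckets, key=lambda b: abs(math.log(b[0] / b[1]) - log_ratio))

buckets = make_buckets()
print(assign_bucket(1920, 1080, buckets))  # (1344, 768)
```

Comparing ratios in log space treats a 2:1 and a 1:2 image symmetrically, which is a common choice for this kind of matching. A real pipeline would then resize and crop each image to its bucket resolution before batching.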

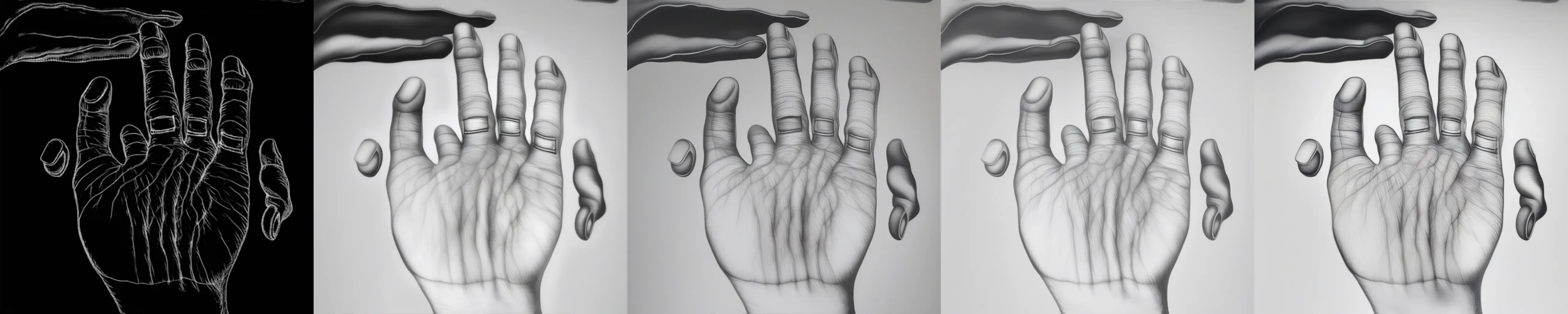

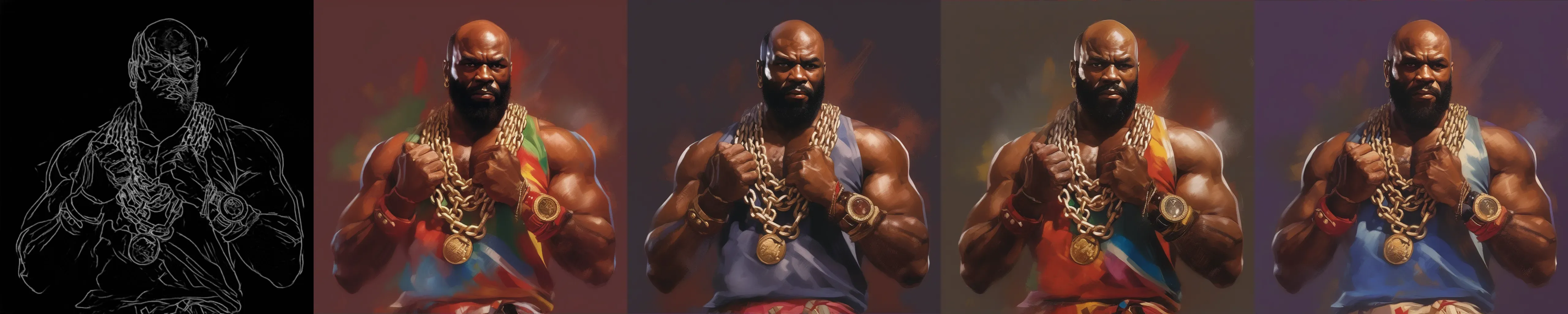

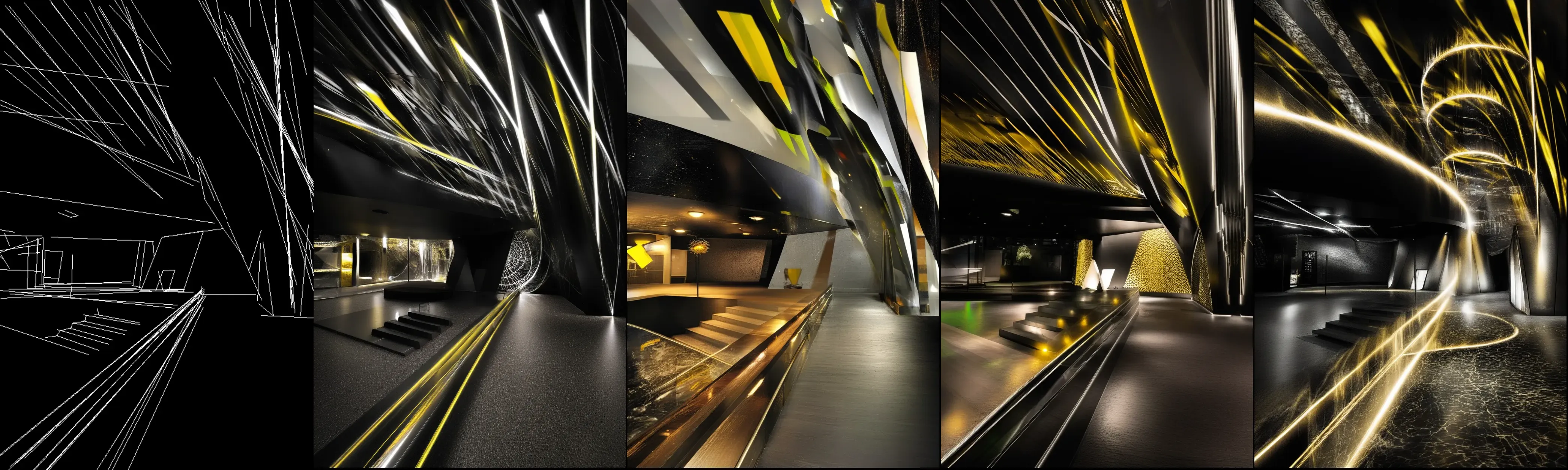

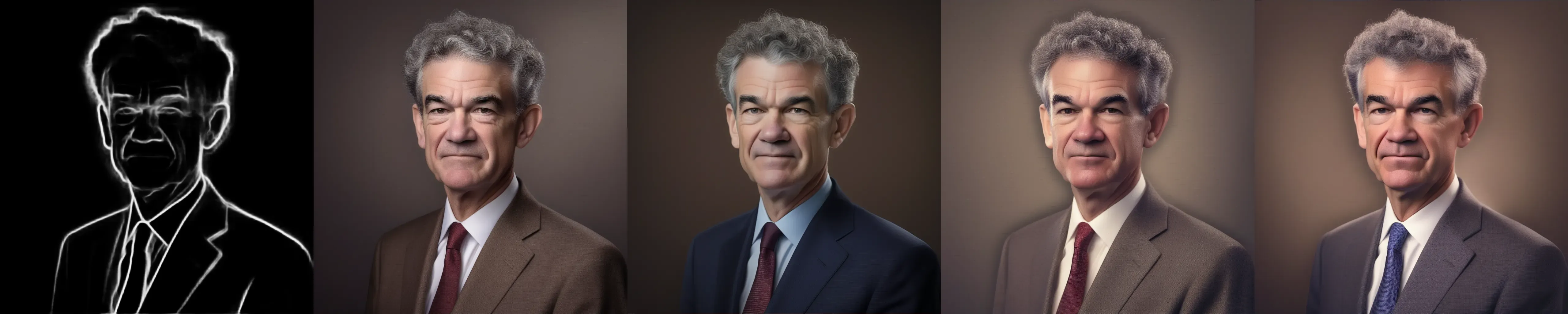

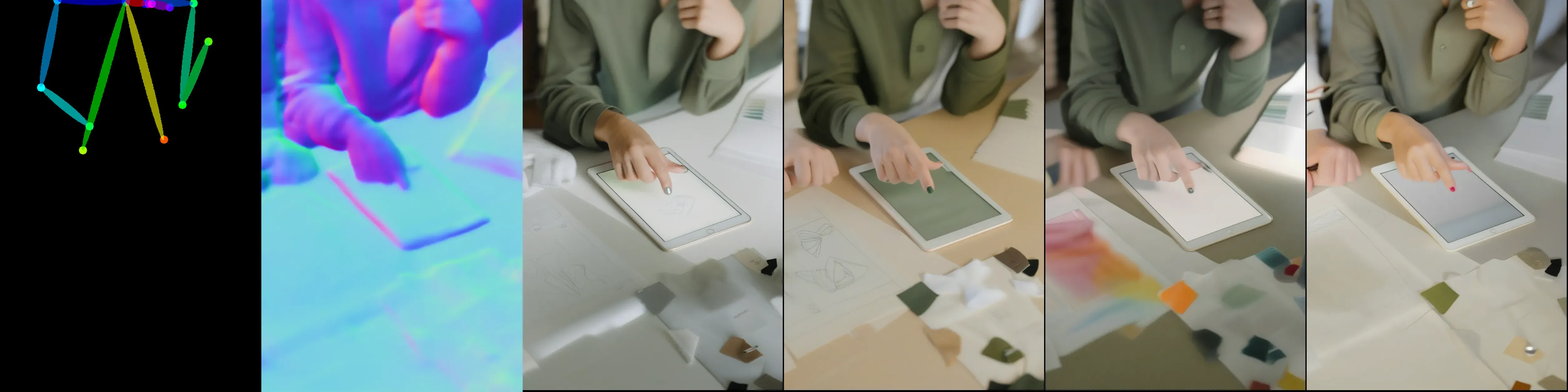

We design a new architecture that supports 10+ control types in conditional text-to-image generation and can generate high-resolution images visually comparable to Midjourney. The network is based on the original ControlNet architecture, and we propose two new modules that: (1) extend the original ControlNet to support different image conditions using the same network parameters; and (2) support multiple condition inputs without increasing the computational load, which is especially important for designers who want to edit images in detail. Different conditions use the same condition encoder, without adding extra computation or parameters. We conduct thorough experiments on SDXL and achieve superior performance in both control ability and aesthetic score. We release the method and the model to the open-source community so that everyone can enjoy them.
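To make the idea of one network serving several condition types more concrete, here is a minimal sketch of one possible way to tell a shared network which conditions are present: a multi-hot type vector with one slot per control type. This is an assumption made for illustration, not a description of the released implementation. The `CONTROL_TYPES` list, its order, and the `control_type_vector` helper are hypothetical; the actual types and their encoding are defined in the inference scripts.

```python
# Hypothetical control-type names and order, for illustration only.
CONTROL_TYPES = ["openpose", "depth", "softedge", "canny", "normal", "segment"]

def control_type_vector(active):
    """Return a multi-hot vector marking which condition types are supplied.

    A single condition gives a one-hot vector; several conditions set
    several slots, so the same network input format covers both cases.
    """
    unknown = set(active) - set(CONTROL_TYPES)
    if unknown:
        raise ValueError(f"unknown control types: {sorted(unknown)}")
    return [1.0 if name in active else 0.0 for name in CONTROL_TYPES]

print(control_type_vector(["canny"]))              # single condition
print(control_type_vector(["openpose", "depth"]))  # two conditions at once
```

Under this assumed scheme the vector length is fixed by the number of supported types, so adding a second or third condition image changes only which slots are set, not the size of the network.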

Inference scripts and more details can be found at: https://github.com/xinsir6/ControlNetPlus/tree/main

If you find it useful, please give me a star. Thank you very much.

The SDXL ProMax version has been released! Enjoy it!

I am sorry to say that, because the project's revenue and expenses are difficult to balance, its GPU resources have been reassigned to other projects that are more likely to be profitable. SD3 training is stopped until I find enough GPU support, and I will try my best to find GPUs to continue training. If this causes you any inconvenience, I sincerely apologize. I want to thank everyone who likes this project; your support is what keeps me going.

Note: we put the ProMax model, with a promax suffix, in the same Hugging Face model repo. Detailed instructions will be added later.

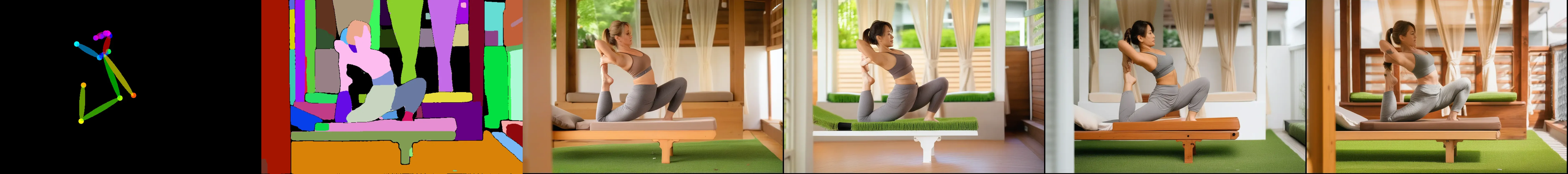

Advanced editing features in the ProMax model

Tile Deblur

Tile Variation

Tile Super Resolution

The following example goes from 1M resolution to 9M resolution.