license: apache-2.0

A general scribble model that can generate images comparable with Midjourney!

Controlnet-Scribble-Sdxl-1.0

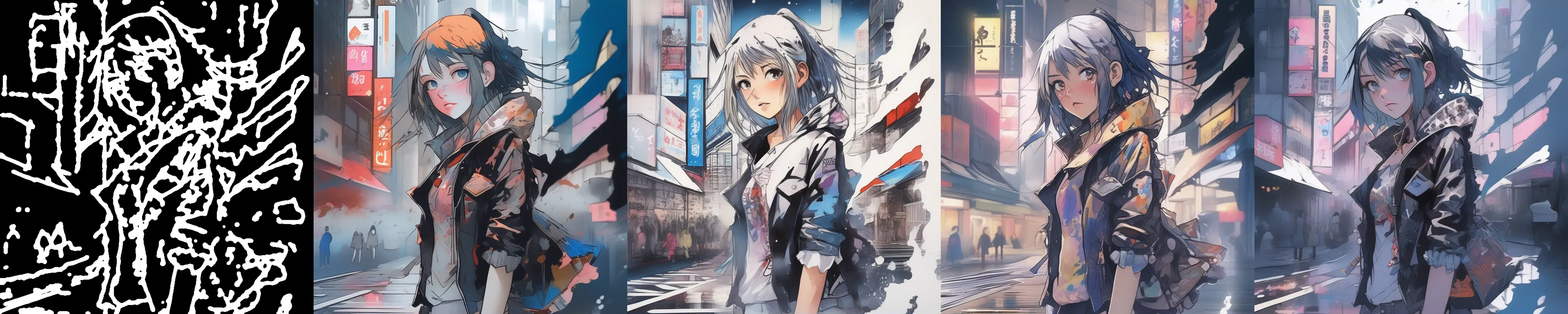

Hello, I am very happy to announce the controlnet-scribble-sdxl-1.0 model, a very powerful ControlNet that can generate high-resolution images visually comparable with Midjourney. The model was trained on a large amount of high-quality data (over 10,000,000 images), carefully filtered and captioned with a powerful vision-language model (VLLM). In addition, several useful tricks were applied during training, including data augmentation, multiple losses, and multi-resolution training.

Note that this model can achieve higher aesthetic performance than our Controlnet-Canny-Sdxl-1.0 model. It supports any type of lines and any line width; the sketch can be very simple, and so can the prompt. This model is more general and good at generating visually appealing images. Its control ability is also strong: for example, if you are unsatisfied with some local regions of the generated image, drawing a more precise sketch and giving a more detailed prompt will help a lot. The model also supports lineart or canny lines; try it and you may get a surprise!

Model Details

Model Description

- Developed by: xinsir

- Model type: ControlNet_SDXL

- License: apache-2.0

- Finetuned from model: stabilityai/stable-diffusion-xl-base-1.0

Model Sources

- Paper (ControlNet, "Adding Conditional Control to Text-to-Image Diffusion Models"): https://arxiv.org/abs/2302.05543

Examples

How to Get Started with the Model

Use the code below to get started with the model.

```python
import random

import cv2
import numpy as np
import torch
from PIL import Image
from controlnet_aux import HEDdetector
from diffusers import (
    AutoencoderKL,
    ControlNetModel,
    EulerAncestralDiscreteScheduler,
    StableDiffusionXLControlNetPipeline,
)
```

```python
def HWC3(x):
    """Convert a uint8 image to 3-channel HWC layout.

    Grayscale is repeated across three channels; RGBA is alpha-composited onto white.
    """
    assert x.dtype == np.uint8
    if x.ndim == 2:
        x = x[:, :, None]
    assert x.ndim == 3
    H, W, C = x.shape
    assert C == 1 or C == 3 or C == 4
    if C == 3:
        return x
    if C == 1:
        return np.concatenate([x, x, x], axis=2)
    if C == 4:
        color = x[:, :, 0:3].astype(np.float32)
        alpha = x[:, :, 3:4].astype(np.float32) / 255.0
        y = color * alpha + 255.0 * (1.0 - alpha)
        y = y.clip(0, 255).astype(np.uint8)
        return y
```
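The RGBA branch of `HWC3` composites the image onto a white background with y = color·α + 255·(1 − α), so fully transparent pixels become white rather than black. As a quick sanity check of that formula, here is a standalone copy of just the RGBA branch; the name `composite_rgba_on_white` is hypothetical and not part of any library.

```python
import numpy as np


def composite_rgba_on_white(rgba: np.ndarray) -> np.ndarray:
    """Blend an RGBA uint8 image onto white (same formula as the RGBA branch of HWC3)."""
    color = rgba[:, :, 0:3].astype(np.float32)
    alpha = rgba[:, :, 3:4].astype(np.float32) / 255.0  # 0.0 = transparent, 1.0 = opaque
    blended = color * alpha + 255.0 * (1.0 - alpha)
    return blended.clip(0, 255).astype(np.uint8)


# A 1x3 RGBA strip: opaque black, fully transparent black, half-transparent grey (100).
strip = np.array([[[0, 0, 0, 255], [0, 0, 0, 0], [100, 100, 100, 128]]], dtype=np.uint8)
print(composite_rgba_on_white(strip)[0, :, 0])
```

Opaque black stays 0, the transparent pixel becomes 255, and the half-transparent grey lands at 177 (100·128/255 + 127, truncated to uint8).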

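```python
def nms(x, t, s):
    # Smooth the edge map with a Gaussian of standard deviation s.
    x = cv2.GaussianBlur(x.astype(np.float32), (0, 0), s)

    # 3x3 line kernels: horizontal, vertical, and the two diagonals.
    f1 = np.array([[0, 0, 0], [1, 1, 1], [0, 0, 0]], dtype=np.uint8)
    f2 = np.array([[0, 1, 0], [0, 1, 0], [0, 1, 0]], dtype=np.uint8)
    f3 = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=np.uint8)
    f4 = np.array([[0, 0, 1], [0, 1, 0], [1, 0, 0]], dtype=np.uint8)

    # Keep a pixel if it equals the maximum of its 3-pixel neighbourhood
    # along at least one of the four directions.
    y = np.zeros_like(x)
    for f in [f1, f2, f3, f4]:
        np.putmask(y, cv2.dilate(x, kernel=f) == x, x)

    # Binarize: surviving values above threshold t become white (255).
    z = np.zeros_like(y, dtype=np.uint8)
    z[y > t] = 255
    return z
```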

```python
controlnet_conditioning_scale = 1.0
prompt = "your prompt; the longer the better, describe it in as much detail as possible"
negative_prompt = "longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality"

eulera_scheduler = EulerAncestralDiscreteScheduler.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", subfolder="scheduler"
)

controlnet = ControlNetModel.from_pretrained(
    "xinsir/controlnet-scribble-sdxl-1.0",
    torch_dtype=torch.float16,
)

# When testing with another base model, you need to change the VAE as well.
vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0",
    controlnet=controlnet,
    vae=vae,
    torch_dtype=torch.float16,
    scheduler=eulera_scheduler,
)
pipe.to("cuda")  # the fp16 weights are meant to run on a GPU
```

```python
# Use either HED to generate a fake scribble from an image, or a sketch drawn entirely by yourself.
if random.random() > 0.5:
    # Method 1
    # With HED, provide an image (real or anime); its HED lines are extracted and used as the scribble.
    # For details on HED detection, see https://github.com/lllyasviel/ControlNet/blob/main/gradio_fake_scribble2image.py
    # Below is an example using the HED detector from controlnet_aux.
    image = Image.open("your image path, the image can be real or anime, HED detector will extract its edge boundary")
    processor = HEDdetector.from_pretrained("lllyasviel/Annotators")
    controlnet_img = processor(image, scribble=True)
    controlnet_img.save("path to save the HED detection result")

    # The following processing simulates a human-drawn sketch; different thresholds give different line widths.
    controlnet_img = np.array(controlnet_img)
    controlnet_img = nms(controlnet_img, 127, 3)
    controlnet_img = cv2.GaussianBlur(controlnet_img, (0, 0), 3)

    # Higher threshold, thinner lines.
    random_val = int(round(random.uniform(0.01, 0.10), 2) * 255)
    controlnet_img[controlnet_img > random_val] = 255
    controlnet_img[controlnet_img < 255] = 0
    controlnet_img = Image.fromarray(controlnet_img)
else:
    # Method 2
    # Use a sketch drawn entirely by yourself.
    control_path = "path where you saved the sketch drawn with a tool such as a drawing board"
    # Note that the image must be black and white (0 or 255), like the examples we list.
    controlnet_img = Image.open(control_path)
```
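Method 1 blurs the NMS output a second time and re-binarizes it with a random threshold to mimic hand-drawn strokes of varying width. The 1-D cross-section below is a NumPy-only sketch of that idea, not the script's exact pipeline: it approximates `cv2.GaussianBlur` with an explicit, normalized Gaussian kernel (σ = 3, truncated at 4σ), and the helper names are hypothetical. A single-pixel white line is blurred and then thresholded at the two extremes of `random_val`.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 4 sigma."""
    radius = int(4 * sigma)
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def stroke_width_after_threshold(threshold: int, sigma: float = 3.0, length: int = 41) -> int:
    """Blur a 1-pixel white stroke and count how many pixels are white after thresholding."""
    profile = np.zeros(length, dtype=np.float64)
    profile[length // 2] = 255.0  # a 1-pixel-wide white line on black
    blurred = np.convolve(profile, gaussian_kernel_1d(sigma), mode="same")
    # Same rule as Method 1: values above the threshold become 255, the rest 0.
    binary = np.where(blurred > threshold, 255, 0)
    return int((binary == 255).sum())


# random_val ranges from int(0.01 * 255) = 2 to int(0.10 * 255) = 25.
for t in (2, 25):
    print(t, stroke_width_after_threshold(t))
```

With these settings the stroke comes out 15 pixels wide at threshold 2 and 5 pixels wide at threshold 25, consistent with the comment that a higher threshold gives thinner lines.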

```python
# Resize to about 1024*1024 pixels (the same resolution bucket) to get the best performance.
# Each side is rounded down to a multiple of 8 to match SDXL's 8x VAE downsampling.
width, height = controlnet_img.size
ratio = np.sqrt(1024. * 1024. / (width * height))
new_width, new_height = int(width * ratio) // 8 * 8, int(height * ratio) // 8 * 8
controlnet_img = controlnet_img.resize((new_width, new_height))
```
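The resize step scales the control image so that its area is roughly 1024 × 1024 pixels while keeping the aspect ratio, using ratio = √(1024² / (width · height)). The sketch below isolates that arithmetic in a pure-Python helper; `bucket_size` is a hypothetical name, and rounding each side down to a multiple of 8 is a precaution matching SDXL's 8x VAE downsampling.

```python
import math


def bucket_size(width: int, height: int, target_area: int = 1024 * 1024, multiple: int = 8) -> tuple:
    """Scale (width, height) to roughly target_area pixels, keeping the aspect ratio,
    then round each side down to a multiple of `multiple`."""
    ratio = math.sqrt(target_area / (width * height))
    new_width = int(width * ratio) // multiple * multiple
    new_height = int(height * ratio) // multiple * multiple
    return new_width, new_height


print(bucket_size(512, 512))
print(bucket_size(800, 600))
```

A 512 × 512 sketch is scaled up to 1024 × 1024, and an 800 × 600 sketch becomes 1176 × 880: about 1.03 megapixels, with the 4:3 aspect ratio approximately preserved.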

```python
images = pipe(
    prompt,
    negative_prompt=negative_prompt,
    image=controlnet_img,
    controlnet_conditioning_scale=controlnet_conditioning_scale,
    width=new_width,
    height=new_height,
    num_inference_steps=30,
).images

# PNG usually preserves image quality better than JPG or WebP, but the files are much bigger.
images[0].save("your image save path.png")
```
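Method 2 requires a strictly black-and-white control image (0 or 255). A sketch exported from a drawing tool usually has anti-aliased grey edges and dark strokes on a light background, whereas the HED branch (Method 1) produces white strokes on black. The sketch below binarizes a grayscale array and, assuming the model expects the same white-on-black polarity as the HED branch, inverts dark-on-light drawings. `binarize_sketch` and its threshold of 128 are illustrative choices, not part of the original code.

```python
import numpy as np


def binarize_sketch(gray: np.ndarray, threshold: int = 128, dark_strokes: bool = True) -> np.ndarray:
    """Turn a grayscale uint8 sketch into a strict 0/255 image with white strokes on black.

    Assumption: the model expects white-on-black scribbles, like the HED branch produces.
    Set dark_strokes=False if the drawing already has light strokes on a dark background.
    """
    if dark_strokes:
        strokes = gray < threshold   # dark pixels are pen strokes
    else:
        strokes = gray >= threshold  # light pixels are pen strokes
    return np.where(strokes, 255, 0).astype(np.uint8)


# A tiny 2x3 "drawing": a dark, slightly anti-aliased vertical stroke on white paper.
drawing = np.array([[255, 30, 250], [240, 90, 255]], dtype=np.uint8)
print(binarize_sketch(drawing))
```

For a real file, one would load it as grayscale with `np.array(Image.open(control_path).convert("L"))` and wrap the result back with `Image.fromarray(...)` before passing it to the pipeline.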