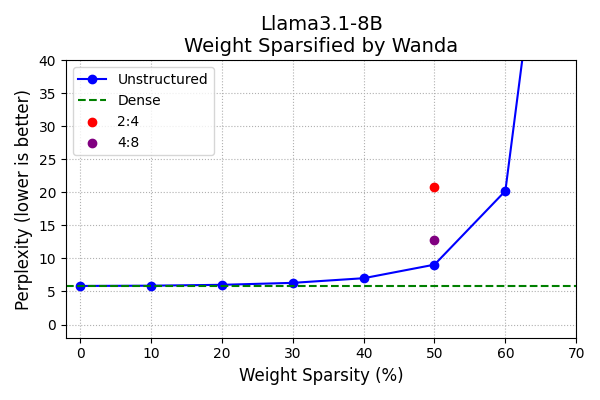

A set of Llama-3.1-8B models pruned to 50% weight sparsity with Wanda, plus the dense baseline. Model links are in the table below. The models can be loaded as-is with Hugging Face Transformers.
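Wanda (pruning by Weights and activations) scores each weight by its magnitude multiplied by the L2 norm of the matching input feature over a small calibration set, and compares weights only within the same output row. The following is a minimal pure-Python sketch of that criterion for the unstructured and N:M settings in the table. It is not the code that produced these checkpoints: the function names, the toy matrices, and the use of nested lists instead of tensors are illustrative assumptions.

```python
import math
from typing import List

# Illustrative sketch of the Wanda criterion; helper names and data are hypothetical.
Matrix = List[List[float]]


def wanda_scores(weight: Matrix, activations: Matrix) -> Matrix:
    """Score each weight as |W[i][j]| * ||X[:, j]||_2.

    weight:      out_features x in_features (one row per output neuron)
    activations: num_tokens x in_features (calibration inputs to this layer)
    """
    in_features = len(weight[0])
    # L2 norm of each input feature over all calibration tokens.
    col_norms = [
        math.sqrt(sum(tok[j] ** 2 for tok in activations)) for j in range(in_features)
    ]
    return [[abs(w) * col_norms[j] for j, w in enumerate(row)] for row in weight]


def prune_unstructured(weight: Matrix, scores: Matrix, sparsity: float = 0.5) -> Matrix:
    """Zero the lowest-scoring fraction of weights within each output row."""
    pruned = []
    for w_row, s_row in zip(weight, scores):
        k = int(len(w_row) * sparsity)  # number of weights removed from this row
        drop = set(sorted(range(len(w_row)), key=lambda j: s_row[j])[:k])
        pruned.append([0.0 if j in drop else w for j, w in enumerate(w_row)])
    return pruned


def prune_n_of_m(weight: Matrix, scores: Matrix, n: int = 2, m: int = 4) -> Matrix:
    """Keep the n highest-scoring weights in every block of m consecutive inputs."""
    pruned = []
    for w_row, s_row in zip(weight, scores):
        new_row = list(w_row)
        for start in range(0, len(w_row), m):
            block = range(start, min(start + m, len(w_row)))
            keep = set(sorted(block, key=lambda j: s_row[j], reverse=True)[:n])
            for j in block:
                if j not in keep:
                    new_row[j] = 0.0
        pruned.append(new_row)
    return pruned


W = [[1.0, -2.0, 0.5, 3.0], [0.1, 0.2, -0.3, 0.4]]
X = [[1.0, 0.0, 3.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
S = wanda_scores(W, X)
print(prune_unstructured(W, S))  # [[0.0, -2.0, 0.0, 3.0], [0.0, 0.0, -0.3, 0.4]]
```

Note that the input `0.5` in the first row survives scoring better than `1.0` would suggest on magnitude alone, because its input feature has a larger activation norm; this activation weighting is what distinguishes Wanda from plain magnitude pruning. For an 8-wide row, unstructured 50% pruning may keep four weights from the same region, while 4:8 or 2:4 forces survivors to be spread across every block.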

Perplexity

MMLU (5-shot)

| Sparsity pattern | MMLU accuracy (%) | Change vs. dense (percentage points) | Model link |
|---|---|---|---|
| Dense | 65.1 | baseline | Meta-Llama-3.1-8B-wanda-unstructured-0.0 |
| 50% unstructured | 50.0 | -15.1 | Meta-Llama-3.1-8B-wanda-unstructured-0.5 |
| 4:8 | 39.3 | -25.8 | Meta-Llama-3.1-8B-wanda-4of8 |
| 2:4 | 28.7 | -36.4 | Meta-Llama-3.1-8B-wanda-2of4 |
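To confirm that a downloaded checkpoint follows the pattern its name claims, one could pull a few rows of a linear layer's weight as nested lists, for example `model.model.layers[0].mlp.gate_proj.weight[:8].tolist()`, assuming the standard `LlamaForCausalLM` module layout in Transformers. The helpers below are a hypothetical sketch (the names are not from this card). N:M blocks run along the input dimension, which is the last dimension of an `nn.Linear` weight.

```python
from typing import List, Sequence

# Hypothetical helpers for checking sparsity of a weight matrix given as nested lists.


def sparsity(rows: List[Sequence[float]]) -> float:
    """Fraction of exactly-zero entries in a weight matrix."""
    total = sum(len(r) for r in rows)
    zeros = sum(1 for r in rows for w in r if w == 0.0)
    return zeros / total


def satisfies_n_of_m(rows: List[Sequence[float]], n: int, m: int) -> bool:
    """True if every block of m consecutive weights in each row has at most n non-zeros."""
    for row in rows:
        for start in range(0, len(row), m):
            nonzeros = sum(1 for w in row[start:start + m] if w != 0.0)
            if nonzeros > n:
                return False
    return True


# Four non-zeros packed into one half of an 8-wide row.
row = [[1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]]
print(sparsity(row))                                        # 0.5
print(satisfies_n_of_m(row, 4, 8), satisfies_n_of_m(row, 2, 4))  # True False
```

All three sparse variants remove half of the weights, but 2:4 is the strictest constraint: any 2:4 pattern is also a valid 4:8 pattern, while the row above is valid 4:8 and not 2:4. This nesting is consistent with the accuracy ordering in the table.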
