Thank you to the Akash Network for sponsoring this project and providing A100s/H100s for compute!

Overview

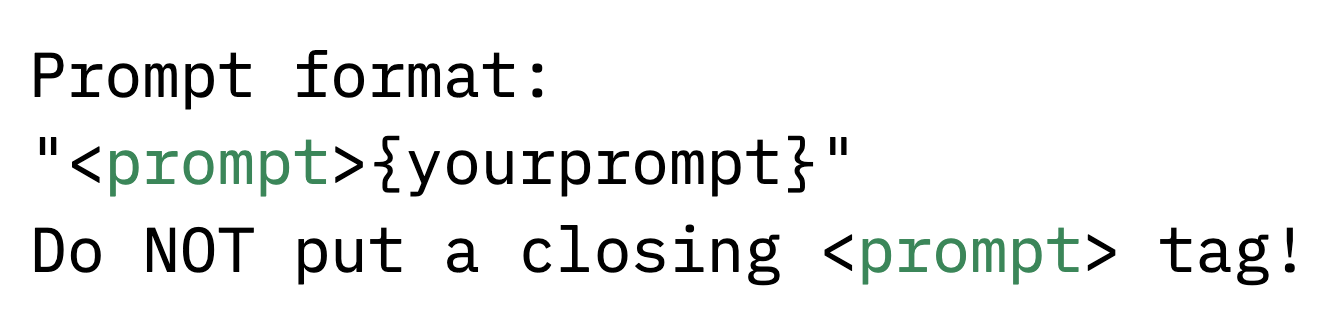

Writing good AI art prompts for Midjourney, Stable Diffusion, and similar tools takes time and practice. I fine-tuned a small language model (Phi-3) to help you improve your prompts.

This is a fine-tuned version of the unquantized unsloth/Phi-3-mini-4k-instruct model, trained with Unsloth on ~100,000 high-quality Midjourney AI art prompts.

This Hugging Face repo contains the adapter weights only; you need to load them on top of the base Phi-3 weights.
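Since only the adapter is published here, loading takes two steps: fetch the base model, then attach the adapter. Below is a minimal sketch using `transformers` and `peft`. The adapter repo ID is a hypothetical placeholder (substitute this repo's actual ID), and the function name `load_prompt_model` is my own.

```python
BASE_MODEL_ID = "unsloth/Phi-3-mini-4k-instruct"  # base weights named in this card
ADAPTER_REPO_ID = "your-username/your-adapter-repo"  # hypothetical placeholder


def load_prompt_model(adapter_repo_id=ADAPTER_REPO_ID, base_model_id=BASE_MODEL_ID):
    """Load the base Phi-3 weights and attach the LoRA adapter on top."""
    # Imported lazily so the heavy dependencies are only needed when loading.
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_model_id)
    base_model = AutoModelForCausalLM.from_pretrained(base_model_id, torch_dtype="auto")
    # PeftModel.from_pretrained wraps the base model with the adapter weights.
    model = PeftModel.from_pretrained(base_model, adapter_repo_id)
    model.eval()
    return model, tokenizer
```

Calling `load_prompt_model()` downloads both sets of weights on first use, so expect it to take a while.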

Inference

Recommended inference settings: repetition_penalty = 1.2, temperature = 0.5-1.0. Adjust these if the model starts repeating itself.
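As a rough illustration, the recommended settings map onto Hugging Face `generate` keyword arguments as follows. The helper names, the default temperature of 0.7 (picked from the middle of the recommended range), and `max_new_tokens=128` are my assumptions. This card does not specify a prompt format, so the sketch passes the prompt as plain text.

```python
def generation_kwargs(temperature=0.7, repetition_penalty=1.2, max_new_tokens=128):
    """Build generate() arguments from the recommended settings."""
    return {
        "do_sample": True,  # temperature only takes effect when sampling
        "temperature": temperature,
        "repetition_penalty": repetition_penalty,
        "max_new_tokens": max_new_tokens,  # assumption, not from the card
    }


def improve_prompt(model, tokenizer, prompt, **overrides):
    """Generate an improved prompt; `model` and `tokenizer` are loaded elsewhere."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, **generation_kwargs(**overrides))
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

If the output starts looping, try something like `improve_prompt(model, tokenizer, "kitten", repetition_penalty=1.3)` or a different temperature.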

Fine-tuning Details

I used this reference code from Anoop Maurya. After experimenting with various settings and parameters, this is what I settled on:

- Max seq length: 128 (few prompts are longer than 128 tokens; beyond that point you probably get diminishing returns on image quality)

- Fine-tuned on unquantized base weights

- LoRA: R=32, Alpha=32

- Batch size: 32

- Epochs: 1

- Gradient accumulation steps: 4

- Warmup steps: 100

- Learning rate: 1e-4

- Optimizer: adamw_8bit

I used an H100 GPU from the Akash Network.
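To make the settings above more concrete, here is a sketch that writes them as keyword arguments (using the parameter names of `peft.LoraConfig` and `transformers.TrainingArguments`) and works out what they imply for a one-epoch run. It assumes that "Batch size: 32" means per-device batch size on a single GPU, that the dataset has exactly 100,000 prompts, and that the learning rate is 1e-4. It is not the original training script.

```python
import math

NUM_PROMPTS = 100_000  # approximate dataset size from this card
MAX_SEQ_LENGTH = 128   # longer prompts would be truncated

# Keyword names follow peft.LoraConfig.
lora_kwargs = {"r": 32, "lora_alpha": 32}

# Keyword names follow transformers.TrainingArguments.
training_kwargs = {
    "per_device_train_batch_size": 32,  # assumes "Batch size: 32" is per device
    "gradient_accumulation_steps": 4,
    "num_train_epochs": 1,
    "warmup_steps": 100,
    "learning_rate": 1e-4,  # assumed value
    "optim": "adamw_8bit",
}

# Sequences consumed per optimizer step on a single GPU.
effective_batch = (
    training_kwargs["per_device_train_batch_size"]
    * training_kwargs["gradient_accumulation_steps"]
)
steps_per_epoch = math.ceil(NUM_PROMPTS / effective_batch)
total_steps = steps_per_epoch * training_kwargs["num_train_epochs"]
warmup_share = training_kwargs["warmup_steps"] / total_steps

print(f"effective batch size: {effective_batch}")
print(f"optimizer steps: {total_steps}")
print(f"warmup share of training: {warmup_share:.1%}")
```

Under these assumptions the run is short, fewer than a thousand optimizer steps, so the 100 warmup steps cover a noticeable share of training.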

Dataset

Please see gaodrew/midjourney-prompts-highquality

Limitations

The model is prone to listing out adjectives. For example: "kitten, cute kitten with a big smile on its face and fluffy fur. The background is filled with colorful flowers in various shades of pink, purple, blue, yellow, orange, green, red, white, black, brown, gray, gold, silver, bronze, copper, brass, steel, aluminum, titanium, platinum, diamond"