---

license: cc

---

# LLaVA: Compress model weights to int4 using NNCF

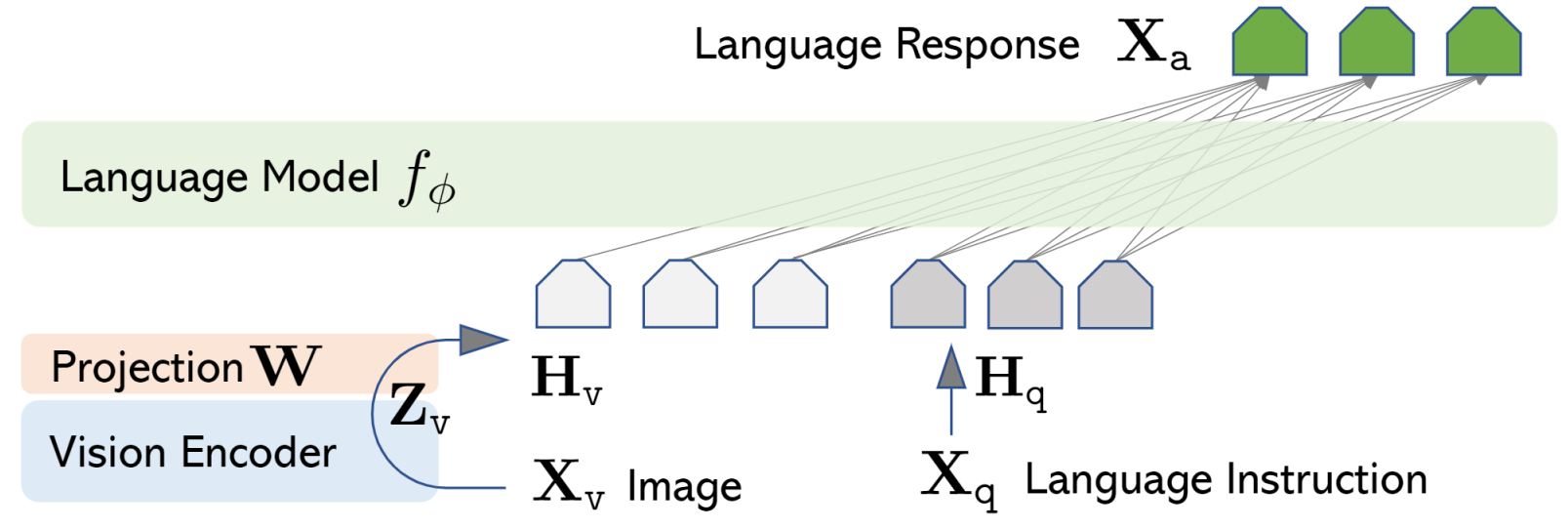

[LLaVA](https://llava-vl.github.io) (Large Language and Vision Assistant) is a large multimodal model designed as a general-purpose visual assistant that can follow both language and image instructions to complete a variety of real-world tasks.

LLaVA connects a pre-trained [CLIP ViT-L/14](https://openai.com/research/clip) visual encoder to a large language model, such as Vicuna, LLaMa v2, or MPT, through a simple projection matrix that maps image features into the language model's embedding space.
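The projection step can be illustrated with a minimal NumPy sketch. It assumes a single linear layer (weight `w` and bias `b`) as the "simple projection matrix". The sizes (256 patch features of width 1024, as produced by CLIP ViT-L/14 on a 224×224 image, and a 4096-wide embedding space as in a 7B language model), the random weights, and the helper names `project_visual_features` and `build_llm_input` are illustrative assumptions. This is not the actual LLaVA or OpenVINO code.

```python
import numpy as np


def project_visual_features(patch_features: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Map vision-encoder patch features (num_patches, d_vision) into the
    language model's embedding space (num_patches, d_llm) with one linear layer."""
    return patch_features @ w + b


def build_llm_input(visual_tokens: np.ndarray, text_embeddings: np.ndarray) -> np.ndarray:
    """Join projected visual tokens and text token embeddings into one sequence.
    Simplification: the visual tokens are simply prepended to the text."""
    return np.concatenate([visual_tokens, text_embeddings], axis=0)


# Illustrative sizes (assumptions, not read from a real checkpoint).
NUM_PATCHES, D_VISION, D_LLM = 256, 1024, 4096
NUM_TEXT_TOKENS = 12  # hypothetical prompt length

rng = np.random.default_rng(0)
# Stand-in for the CLIP encoder output for one image.
patch_features = rng.standard_normal((NUM_PATCHES, D_VISION), dtype=np.float32)
# Random stand-in for the trained projection weights.
w = rng.standard_normal((D_VISION, D_LLM), dtype=np.float32) * 0.02
b = np.zeros(D_LLM, dtype=np.float32)
# Stand-in for the language model's embeddings of the text prompt.
text_embeddings = rng.standard_normal((NUM_TEXT_TOKENS, D_LLM), dtype=np.float32)

visual_tokens = project_visual_features(patch_features, w, b)
llm_input = build_llm_input(visual_tokens, text_embeddings)
print(visual_tokens.shape, llm_input.shape)  # (256, 4096) (268, 4096)
```

After projection, every image patch becomes one extra "token" with the same width as a word embedding, so the language model can attend over image and text jointly. In LLaVA's own pipeline the visual tokens are typically spliced in at an image placeholder position inside the prompt rather than prepended; prepending here only keeps the sketch short.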
