LLaVA with model weights compressed to INT4 using NNCF

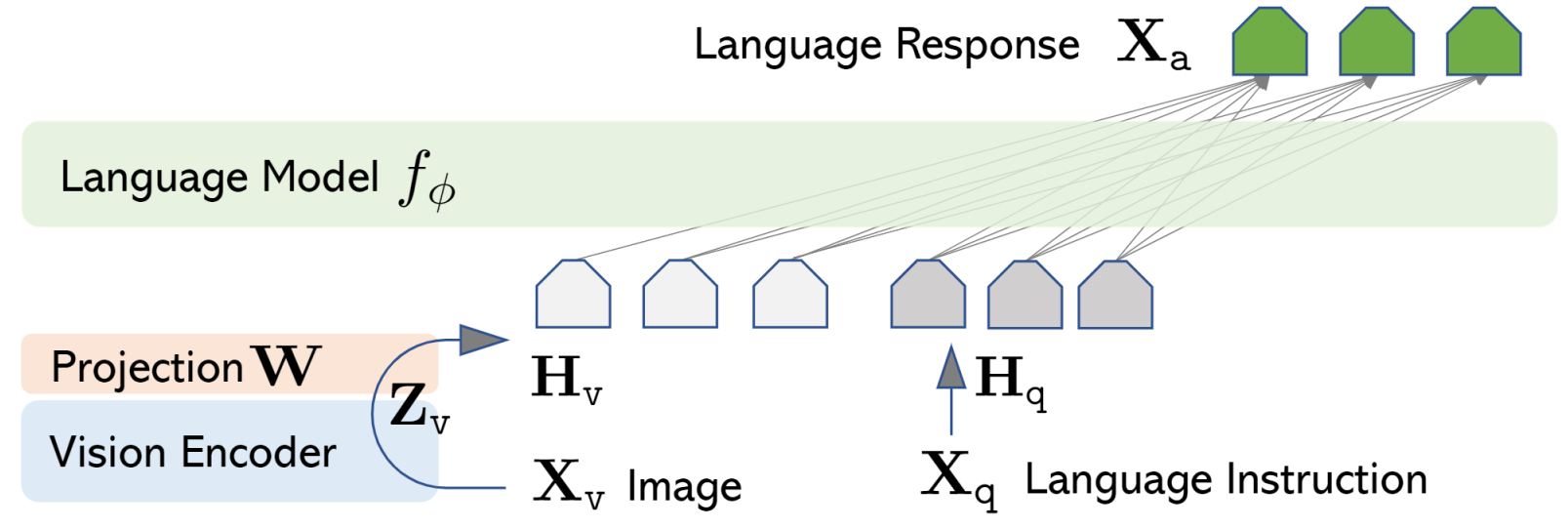

LLaVA (Large Language and Vision Assistant) is a large multimodal model that aims to serve as a general-purpose visual assistant, following both language and image instructions to complete a variety of real-world tasks.

LLaVA connects a pre-trained CLIP ViT-L/14 visual encoder to a large language model such as Vicuna, LLaMA v2, or MPT through a simple projection matrix.
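To make the role of the projection matrix concrete, here is a minimal sketch in plain Python. It is not the model's actual code: the function name `project_patches`, the toy dimensions, and the random values are all assumptions chosen for illustration. It only shows the idea that each visual feature vector is multiplied by one matrix so that it lands in the language model's embedding space, where it can be placed alongside text token embeddings.

```python
import random


def project_patches(patch_features, projection):
    """Map visual feature vectors into the language model's embedding space.

    patch_features: list of N vectors, each of length d_vision
    projection:     d_vision x d_text matrix, given as a list of rows
    returns:        list of N vectors, each of length d_text
    """
    d_text = len(projection[0])
    return [
        [sum(x * projection[i][j] for i, x in enumerate(patch)) for j in range(d_text)]
        for patch in patch_features
    ]


# Toy sizes (assumptions for illustration; real models use far larger dimensions).
random.seed(0)
N_PATCHES, D_VISION, D_TEXT, N_TEXT_TOKENS = 4, 3, 5, 2

# Stand-ins for the visual encoder output and the text token embeddings.
patch_features = [[random.gauss(0, 1) for _ in range(D_VISION)] for _ in range(N_PATCHES)]
text_embeddings = [[random.gauss(0, 1) for _ in range(D_TEXT)] for _ in range(N_TEXT_TOKENS)]

# Stand-in for the learned projection matrix.
projection = [[random.gauss(0, 1) for _ in range(D_TEXT)] for _ in range(D_VISION)]

# Projected image patches become "visual tokens" with the same width as text embeddings,
# so both can be joined into one input sequence for the language model.
visual_tokens = project_patches(patch_features, projection)
llm_input = visual_tokens + text_embeddings
```

Because the connector is a single matrix, the visual encoder and the language model can both be reused as pre-trained components, with only the mapping between their feature spaces left to learn.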
