Marigold Pipelines for Computer Vision Tasks

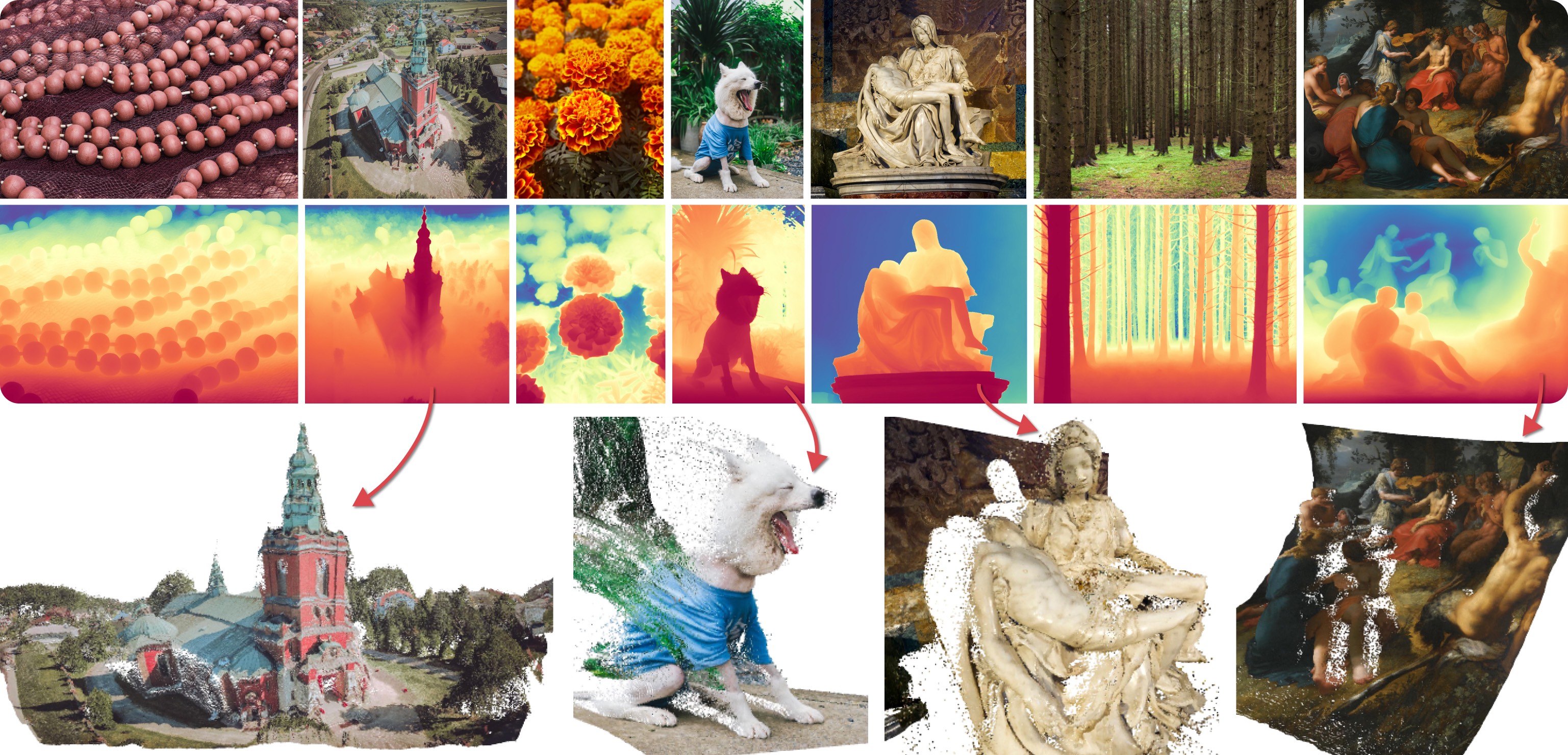

Marigold was proposed in Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation, a CVPR 2024 Oral paper by Bingxin Ke, Anton Obukhov, Shengyu Huang, Nando Metzger, Rodrigo Caye Daudt, and Konrad Schindler. The idea is to repurpose the rich generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional computer vision tasks. Initially, this idea was explored to fine-tune Stable Diffusion for Monocular Depth Estimation, as shown in the teaser above. Later,

- Tianfu Wang trained the first Latent Consistency Model (LCM) of Marigold, which unlocked fast single-step inference;

- Kevin Qu extended the approach to Surface Normals Estimation;

- Anton Obukhov contributed the pipelines and documentation into diffusers (enabled and supported by YiYi Xu and Sayak Paul).

The abstract from the paper is:

Monocular depth estimation is a fundamental computer vision task. Recovering 3D depth from a single image is geometrically ill-posed and requires scene understanding, so it is not surprising that the rise of deep learning has led to a breakthrough. The impressive progress of monocular depth estimators has mirrored the growth in model capacity, from relatively modest CNNs to large Transformer architectures. Still, monocular depth estimators tend to struggle when presented with images with unfamiliar content and layout, since their knowledge of the visual world is restricted by the data seen during training, and challenged by zero-shot generalization to new domains. This motivates us to explore whether the extensive priors captured in recent generative diffusion models can enable better, more generalizable depth estimation. We introduce Marigold, a method for affine-invariant monocular depth estimation that is derived from Stable Diffusion and retains its rich prior knowledge. The estimator can be fine-tuned in a couple of days on a single GPU using only synthetic training data. It delivers state-of-the-art performance across a wide range of datasets, including over 20% performance gains in specific cases. Project page: https://marigoldmonodepth.github.io.

Available Pipelines

Each pipeline supports one Computer Vision task: it takes an RGB image as input and produces a prediction of the modality of interest, such as a depth map of the input image. Currently, the following tasks are implemented:

| Pipeline | Predicted Modalities | Demos |
|---|---|---|
| MarigoldDepthPipeline | Depth, Disparity | Fast Demo (LCM), Slow Original Demo (DDIM) |
| MarigoldNormalsPipeline | Surface normals | Fast Demo (LCM) |

Available Checkpoints

The original checkpoints can be found under the PRS-ETH Hugging Face organization.

Make sure to check out the Schedulers guide to learn how to explore the tradeoff between scheduler speed and quality, and see the reuse components across pipelines section to learn how to efficiently load the same components into multiple pipelines. To learn more about reducing the memory usage of this pipeline, refer to the “Reduce memory usage” section.

Marigold pipelines were designed and tested only with DDIMScheduler and LCMScheduler.

Depending on the scheduler, the number of inference steps required to get reliable predictions varies, and there is no universal value that works best across schedulers.

Because of that, the default value of num_inference_steps in the __call__ method of the pipeline is set to None (see the API reference).

Unless set explicitly, its value will be taken from the checkpoint configuration model_index.json.

This is done to ensure high-quality predictions when calling the pipeline with just the image argument.
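The fallback rule described above can be sketched in a few lines of Python. This is only an illustration of the described behavior, not the library's code: the helper name `resolve_num_inference_steps` and its error messages are hypothetical, and `config_default` stands in for the value stored with the checkpoint.

```python
from typing import Optional


def resolve_num_inference_steps(requested: Optional[int], config_default: Optional[int]) -> int:
    """Hypothetical helper mirroring the fallback rule described above."""
    # An explicitly passed value always takes precedence.
    if requested is not None:
        if requested < 1:
            raise ValueError("num_inference_steps must be a positive integer")
        return requested
    # Otherwise fall back to the default stored with the checkpoint.
    if config_default is None:
        raise ValueError("no step count was passed and the checkpoint defines no default")
    return config_default


# An LCM-style checkpoint might store a small default, which an explicit value overrides.
steps_default = resolve_num_inference_steps(None, 4)
steps_explicit = resolve_num_inference_steps(10, 4)
```

With a rule like this, calling the pipeline with just the image picks a step count suited to the checkpoint, while an explicit value remains available for experiments.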

See also Marigold usage examples.

MarigoldDepthPipeline

class diffusers.MarigoldDepthPipeline

( unet: UNet2DConditionModel, vae: AutoencoderKL, scheduler: typing.Union[diffusers.schedulers.scheduling_ddim.DDIMScheduler, diffusers.schedulers.scheduling_lcm.LCMScheduler], text_encoder: CLIPTextModel, tokenizer: CLIPTokenizer, prediction_type: typing.Optional[str] = None, scale_invariant: typing.Optional[bool] = True, shift_invariant: typing.Optional[bool] = True, default_denoising_steps: typing.Optional[int] = None, default_processing_resolution: typing.Optional[int] = None )

Parameters

- unet (UNet2DConditionModel) — Conditional U-Net to denoise the depth latent, conditioned on image latent.

- vae (AutoencoderKL) — Variational Auto-Encoder (VAE) Model to encode and decode images and predictions to and from latent representations.

- scheduler (DDIMScheduler or LCMScheduler) — A scheduler to be used in combination with unet to denoise the encoded image latents.

- text_encoder (CLIPTextModel) — Text-encoder, for empty text embedding.

- tokenizer (CLIPTokenizer) — CLIP tokenizer.

- prediction_type (str, optional) — Type of predictions made by the model.

- scale_invariant (bool, optional) — A model property specifying whether the predicted depth maps are scale-invariant. This value must be set in the model config. When used together with the shift_invariant=True flag, the model is also called “affine-invariant”. NB: overriding this value is not supported.

- shift_invariant (bool, optional) — A model property specifying whether the predicted depth maps are shift-invariant. This value must be set in the model config. When used together with the scale_invariant=True flag, the model is also called “affine-invariant”. NB: overriding this value is not supported.

- default_denoising_steps (int, optional) — The minimum number of denoising diffusion steps that are required to produce a prediction of reasonable quality with the given model. This value must be set in the model config. When the pipeline is called without explicitly setting num_inference_steps, the default value is used. This is required to ensure reasonable results with various model flavors compatible with the pipeline, such as those relying on very short denoising schedules (LCMScheduler) and those with full diffusion schedules (DDIMScheduler).

- default_processing_resolution (int, optional) — The recommended value of the processing_resolution parameter of the pipeline. This value must be set in the model config. When the pipeline is called without explicitly setting processing_resolution, the default value is used. This is required to ensure reasonable results with various model flavors trained with varying optimal processing resolution values.

Pipeline for monocular depth estimation using the Marigold method: https://marigoldmonodepth.github.io.

This model inherits from DiffusionPipeline. Check the superclass documentation for the generic methods the library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.).

__call__

( image: typing.Union[PIL.Image.Image, numpy.ndarray, torch.Tensor, typing.List[PIL.Image.Image], typing.List[numpy.ndarray], typing.List[torch.Tensor]], num_inference_steps: typing.Optional[int] = None, ensemble_size: int = 1, processing_resolution: typing.Optional[int] = None, match_input_resolution: bool = True, resample_method_input: str = 'bilinear', resample_method_output: str = 'bilinear', batch_size: int = 1, ensembling_kwargs: typing.Optional[typing.Dict[str, typing.Any]] = None, latents: typing.Union[torch.Tensor, typing.List[torch.Tensor], NoneType] = None, generator: typing.Union[torch._C.Generator, typing.List[torch._C.Generator], NoneType] = None, output_type: str = 'np', output_uncertainty: bool = False, output_latent: bool = False, return_dict: bool = True ) → MarigoldDepthOutput or tuple

Parameters

- image (PIL.Image.Image, np.ndarray, torch.Tensor, List[PIL.Image.Image], List[np.ndarray], or List[torch.Tensor]) — An input image or images used as an input for the depth estimation task. For arrays and tensors, the expected value range is between [0, 1]. Passing a batch of images is possible by providing a four-dimensional array or a tensor. Additionally, a list of images of two- or three-dimensional arrays or tensors can be passed. In the latter case, all list elements must have the same width and height.

- num_inference_steps (int, optional, defaults to None) — Number of denoising diffusion steps during inference. The default value None results in automatic selection. The number of steps should be at least 10 with the full Marigold models, and between 1 and 4 for Marigold-LCM models.

- ensemble_size (int, defaults to 1) — Number of ensemble predictions. Recommended values are 5 and higher for better precision, or 1 for faster inference.

- processing_resolution (int, optional, defaults to None) — Effective processing resolution. When set to 0, matches the larger input image dimension. This produces crisper predictions, but may also lead to the overall loss of global context. The default value None resolves to the optimal value from the model config.

- match_input_resolution (bool, optional, defaults to True) — When enabled, the output prediction is resized to match the input dimensions. When disabled, the longer side of the output will be equal to processing_resolution.

- resample_method_input (str, optional, defaults to "bilinear") — Resampling method used to resize input images to processing_resolution. The accepted values are: "nearest", "nearest-exact", "bilinear", "bicubic", or "area".

- resample_method_output (str, optional, defaults to "bilinear") — Resampling method used to resize output predictions to match the input resolution. The accepted values are: "nearest", "nearest-exact", "bilinear", "bicubic", or "area".

- batch_size (int, optional, defaults to 1) — Batch size; only matters when setting ensemble_size or passing a tensor of images.

- ensembling_kwargs (dict, optional, defaults to None) — Extra dictionary with arguments for precise ensembling control. The following options are available:

  - reduction (str, optional, defaults to "median"): Defines the ensembling function applied in every pixel location, can be either "median" or "mean".

  - regularizer_strength (float, optional, defaults to 0.02): Strength of the regularizer that pulls the aligned predictions to the unit range from 0 to 1.

  - max_iter (int, optional, defaults to 2): Maximum number of the alignment solver steps. Refer to the scipy.optimize.minimize function, options argument.

  - tol (float, optional, defaults to 1e-3): Alignment solver tolerance. The solver stops when the tolerance is reached.

  - max_res (int, optional, defaults to None): Resolution at which the alignment is performed; None matches the processing_resolution.

- latents (torch.Tensor or List[torch.Tensor], optional, defaults to None) — Latent noise tensors to replace the random initialization. These can be taken from the previous function call’s output.

- generator (torch.Generator or List[torch.Generator], optional, defaults to None) — Random number generator object to ensure reproducibility.

- output_type (str, optional, defaults to "np") — Preferred format of the output’s prediction and the optional uncertainty fields. The accepted values are: "np" (numpy array) or "pt" (torch tensor).

- output_uncertainty (bool, optional, defaults to False) — When enabled, the output’s uncertainty field contains the predictive uncertainty map, provided that the ensemble_size argument is set to a value above 2.

- output_latent (bool, optional, defaults to False) — When enabled, the output’s latent field contains the latent codes corresponding to the predictions within the ensemble. These codes can be saved, modified, and used for subsequent calls with the latents argument.

- return_dict (bool, optional, defaults to True) — Whether or not to return a MarigoldDepthOutput instead of a plain tuple.

Returns

MarigoldDepthOutput or tuple

If return_dict is True, MarigoldDepthOutput is returned, otherwise a tuple is returned where the first element is the prediction, the second element is the uncertainty (or None), and the third is the latent (or None).

Function invoked when calling the pipeline.
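To make the processing_resolution semantics more concrete, here is a minimal sketch of how the working size of an image could be derived. The helper `processing_size` is hypothetical and is not part of diffusers; it assumes aspect-preserving resizing with simple rounding, whereas the pipeline itself may also pad or round differently.

```python
from typing import Tuple


def processing_size(width: int, height: int, processing_resolution: int) -> Tuple[int, int]:
    """Hypothetical helper: size at which an image would be processed."""
    if processing_resolution < 0:
        raise ValueError("processing_resolution must be non-negative")
    # 0 means "keep the native resolution of the input".
    if processing_resolution == 0:
        return width, height
    # Otherwise scale so that the longer side equals processing_resolution.
    scale = processing_resolution / max(width, height)
    new_width = max(1, round(width * scale))
    new_height = max(1, round(height * scale))
    return new_width, new_height


resized = processing_size(1024, 512, 768)  # longer side becomes 768
native = processing_size(640, 480, 0)      # native resolution is kept
```

When match_input_resolution is enabled, the prediction computed at this working size is resized back to the original width and height.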

Examples:

    >>> import diffusers
    >>> import torch

    >>> pipe = diffusers.MarigoldDepthPipeline.from_pretrained(
    ...     "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16
    ... ).to("cuda")

    >>> image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")
    >>> depth = pipe(image)

    >>> vis = pipe.image_processor.visualize_depth(depth.prediction)
    >>> vis[0].save("einstein_depth.png")

    >>> depth_16bit = pipe.image_processor.export_depth_to_16bit_png(depth.prediction)
    >>> depth_16bit[0].save("einstein_depth_16bit.png")

ensemble_depth

( depth: Tensor, scale_invariant: bool = True, shift_invariant: bool = True, output_uncertainty: bool = False, reduction: str = 'median', regularizer_strength: float = 0.02, max_iter: int = 2, tol: float = 0.001, max_res: int = 1024 ) → A tensor of aligned and ensembled depth maps and optionally a tensor of uncertainties of the same shape

Parameters

- depth (torch.Tensor) — Input ensemble depth maps.

- scale_invariant (bool, optional, defaults to True) — Whether to treat predictions as scale-invariant.

- shift_invariant (bool, optional, defaults to True) — Whether to treat predictions as shift-invariant.

- output_uncertainty (bool, optional, defaults to False) — Whether to output uncertainty map.

- reduction (str, optional, defaults to "median") — Reduction method used to ensemble aligned predictions. The accepted values are: "mean" and "median".

- regularizer_strength (float, optional, defaults to 0.02) — Strength of the regularizer that pulls the aligned predictions to the unit range from 0 to 1.

- max_iter (int, optional, defaults to 2) — Maximum number of the alignment solver steps. Refer to the scipy.optimize.minimize function, options argument.

- tol (float, optional, defaults to 1e-3) — Alignment solver tolerance. The solver stops when the tolerance is reached.

- max_res (int, optional, defaults to 1024) — Resolution at which the alignment is performed; None matches the processing_resolution.

Returns

A tensor of aligned and ensembled depth maps and optionally a tensor of uncertainties of the same shape (1, 1, H, W).

Ensembles the depth maps represented by the depth tensor with expected shape (B, 1, H, W), where B is the number of ensemble members for a given prediction of size (H x W). Even though the function is designed for depth maps, it can also be used with disparity maps as long as the input tensor values are non-negative. The alignment happens when the predictions have one or more degrees of freedom, that is, when they are either affine-invariant (scale_invariant=True and shift_invariant=True) or just scale-invariant (only scale_invariant=True). For absolute predictions (scale_invariant=False and shift_invariant=False), alignment is skipped and only ensembling is performed.
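The align-then-reduce idea behind ensemble_depth can be illustrated with a simplified NumPy sketch. It is not the library's implementation: instead of the regularized solver based on scipy.optimize.minimize, it assumes that normalizing each ensemble member to the unit range independently is a good enough stand-in for affine alignment. The function name `ensemble_depth_sketch` and the choice of uncertainty measures are assumptions made for illustration.

```python
import numpy as np


def ensemble_depth_sketch(depth: np.ndarray, reduction: str = "median"):
    """Simplified stand-in for affine-invariant ensembling of (B, 1, H, W) maps."""
    if depth.ndim != 4 or depth.shape[1] != 1:
        raise ValueError("expected shape (B, 1, H, W)")
    num_members = depth.shape[0]
    flat = depth.reshape(num_members, -1)
    # Assumption: per-member min-max normalization replaces the joint solver.
    lo = flat.min(axis=1).reshape(num_members, 1, 1, 1)
    hi = flat.max(axis=1).reshape(num_members, 1, 1, 1)
    aligned = (depth - lo) / np.maximum(hi - lo, 1e-6)
    if reduction == "median":
        prediction = np.median(aligned, axis=0, keepdims=True)
        # Median absolute deviation as a per-pixel spread measure.
        uncertainty = np.median(np.abs(aligned - prediction), axis=0, keepdims=True)
    elif reduction == "mean":
        prediction = aligned.mean(axis=0, keepdims=True)
        uncertainty = aligned.std(axis=0, keepdims=True)
    else:
        raise ValueError(f"unknown reduction: {reduction}")
    return prediction, uncertainty


# Three members that differ only by scale and shift collapse to one map.
rng = np.random.default_rng(0)
base = rng.random((1, 1, 4, 6))
members = np.concatenate([2.0 * base + 1.0, 0.5 * base - 3.0, 4.0 * base], axis=0)
prediction, uncertainty = ensemble_depth_sketch(members)
```

Because the three members are affine transforms of the same map, they agree after alignment and the uncertainty is numerically zero; real ensemble members differ in content as well, which is what the uncertainty map captures.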

MarigoldNormalsPipeline

class diffusers.MarigoldNormalsPipeline

( unet: UNet2DConditionModel, vae: AutoencoderKL, scheduler: typing.Union[diffusers.schedulers.scheduling_ddim.DDIMScheduler, diffusers.schedulers.scheduling_lcm.LCMScheduler], text_encoder: CLIPTextModel, tokenizer: CLIPTokenizer, prediction_type: typing.Optional[str] = None, use_full_z_range: typing.Optional[bool] = True, default_denoising_steps: typing.Optional[int] = None, default_processing_resolution: typing.Optional[int] = None )

Parameters

- unet (UNet2DConditionModel) — Conditional U-Net to denoise the normals latent, conditioned on image latent.

- vae (AutoencoderKL) — Variational Auto-Encoder (VAE) Model to encode and decode images and predictions to and from latent representations.

- scheduler (DDIMScheduler or LCMScheduler) — A scheduler to be used in combination with unet to denoise the encoded image latents.

- text_encoder (CLIPTextModel) — Text-encoder, for empty text embedding.

- tokenizer (CLIPTokenizer) — CLIP tokenizer.

- prediction_type (str, optional) — Type of predictions made by the model.

- use_full_z_range (bool, optional) — Whether the normals predicted by this model utilize the full range of the Z dimension, or only its positive half.

- default_denoising_steps (int, optional) — The minimum number of denoising diffusion steps that are required to produce a prediction of reasonable quality with the given model. This value must be set in the model config. When the pipeline is called without explicitly setting num_inference_steps, the default value is used. This is required to ensure reasonable results with various model flavors compatible with the pipeline, such as those relying on very short denoising schedules (LCMScheduler) and those with full diffusion schedules (DDIMScheduler).

- default_processing_resolution (int, optional) — The recommended value of the processing_resolution parameter of the pipeline. This value must be set in the model config. When the pipeline is called without explicitly setting processing_resolution, the default value is used. This is required to ensure reasonable results with various model flavors trained with varying optimal processing resolution values.

Pipeline for monocular normals estimation using the Marigold method: https://marigoldmonodepth.github.io.

This model inherits from DiffusionPipeline. Check the superclass documentation for the generic methods the library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.).

__call__

( image: typing.Union[PIL.Image.Image, numpy.ndarray, torch.Tensor, typing.List[PIL.Image.Image], typing.List[numpy.ndarray], typing.List[torch.Tensor]], num_inference_steps: typing.Optional[int] = None, ensemble_size: int = 1, processing_resolution: typing.Optional[int] = None, match_input_resolution: bool = True, resample_method_input: str = 'bilinear', resample_method_output: str = 'bilinear', batch_size: int = 1, ensembling_kwargs: typing.Optional[typing.Dict[str, typing.Any]] = None, latents: typing.Union[torch.Tensor, typing.List[torch.Tensor], NoneType] = None, generator: typing.Union[torch._C.Generator, typing.List[torch._C.Generator], NoneType] = None, output_type: str = 'np', output_uncertainty: bool = False, output_latent: bool = False, return_dict: bool = True ) → MarigoldNormalsOutput or tuple

Parameters

- image (PIL.Image.Image, np.ndarray, torch.Tensor, List[PIL.Image.Image], List[np.ndarray], or List[torch.Tensor]) — An input image or images used as an input for the normals estimation task. For arrays and tensors, the expected value range is between [0, 1]. Passing a batch of images is possible by providing a four-dimensional array or a tensor. Additionally, a list of images of two- or three-dimensional arrays or tensors can be passed. In the latter case, all list elements must have the same width and height.

- num_inference_steps (int, optional, defaults to None) — Number of denoising diffusion steps during inference. The default value None results in automatic selection. The number of steps should be at least 10 with the full Marigold models, and between 1 and 4 for Marigold-LCM models.

- ensemble_size (int, defaults to 1) — Number of ensemble predictions. Recommended values are 5 and higher for better precision, or 1 for faster inference.

- processing_resolution (int, optional, defaults to None) — Effective processing resolution. When set to 0, matches the larger input image dimension. This produces crisper predictions, but may also lead to the overall loss of global context. The default value None resolves to the optimal value from the model config.

- match_input_resolution (bool, optional, defaults to True) — When enabled, the output prediction is resized to match the input dimensions. When disabled, the longer side of the output will be equal to processing_resolution.

- resample_method_input (str, optional, defaults to "bilinear") — Resampling method used to resize input images to processing_resolution. The accepted values are: "nearest", "nearest-exact", "bilinear", "bicubic", or "area".

- resample_method_output (str, optional, defaults to "bilinear") — Resampling method used to resize output predictions to match the input resolution. The accepted values are: "nearest", "nearest-exact", "bilinear", "bicubic", or "area".

- batch_size (int, optional, defaults to 1) — Batch size; only matters when setting ensemble_size or passing a tensor of images.

- ensembling_kwargs (dict, optional, defaults to None) — Extra dictionary with arguments for precise ensembling control. The following options are available:

  - reduction (str, optional, defaults to "closest"): Defines the ensembling function applied in every pixel location, can be either "closest" or "mean".

- latents (torch.Tensor or List[torch.Tensor], optional, defaults to None) — Latent noise tensors to replace the random initialization. These can be taken from the previous function call’s output.

- generator (torch.Generator or List[torch.Generator], optional, defaults to None) — Random number generator object to ensure reproducibility.

- output_type (str, optional, defaults to "np") — Preferred format of the output’s prediction and the optional uncertainty fields. The accepted values are: "np" (numpy array) or "pt" (torch tensor).

- output_uncertainty (bool, optional, defaults to False) — When enabled, the output’s uncertainty field contains the predictive uncertainty map, provided that the ensemble_size argument is set to a value above 2.

- output_latent (bool, optional, defaults to False) — When enabled, the output’s latent field contains the latent codes corresponding to the predictions within the ensemble. These codes can be saved, modified, and used for subsequent calls with the latents argument.

- return_dict (bool, optional, defaults to True) — Whether or not to return a MarigoldNormalsOutput instead of a plain tuple.

Returns

MarigoldNormalsOutput or tuple

If return_dict is True, MarigoldNormalsOutput is returned, otherwise a tuple is returned where the first element is the prediction, the second element is the uncertainty (or None), and the third is the latent (or None).

Function invoked when calling the pipeline.
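The interplay of ensemble_size and batch_size can be estimated with a small calculation. The sketch below assumes that every input image is denoised ensemble_size times and that these runs are processed in chunks of batch_size; the helper `count_denoising_batches` is hypothetical and only serves to reason about cost.

```python
import math


def count_denoising_batches(num_images: int, ensemble_size: int, batch_size: int) -> int:
    """Hypothetical helper: number of batched denoising passes per step."""
    if min(num_images, ensemble_size, batch_size) < 1:
        raise ValueError("all arguments must be positive integers")
    # Every image contributes ensemble_size independent predictions.
    total_predictions = num_images * ensemble_size
    # Predictions are grouped into chunks of at most batch_size.
    return math.ceil(total_predictions / batch_size)


single = count_denoising_batches(num_images=1, ensemble_size=1, batch_size=1)
ensemble = count_denoising_batches(num_images=1, ensemble_size=10, batch_size=4)
```

Under this assumption, a larger batch_size reduces the number of passes at the cost of memory, and it has no effect when a single image is processed with ensemble_size set to 1.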

Examples:

    >>> import diffusers
    >>> import torch

    >>> pipe = diffusers.MarigoldNormalsPipeline.from_pretrained(
    ...     "prs-eth/marigold-normals-lcm-v0-1", variant="fp16", torch_dtype=torch.float16
    ... ).to("cuda")

    >>> image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")
    >>> normals = pipe(image)

    >>> vis = pipe.image_processor.visualize_normals(normals.prediction)
    >>> vis[0].save("einstein_normals.png")

ensemble_normals

( normals: Tensor, output_uncertainty: bool, reduction: str = 'closest' )

Ensembles the normals maps represented by the normals tensor with expected shape (B, 3, H, W), where B is the number of ensemble members for a given prediction of size (H x W).
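One plausible reading of the "closest" reduction is: average the ensemble members, renormalize the average to unit length, and then pick, per pixel, the member whose normal has the highest cosine similarity to that average. The NumPy sketch below follows this reading; it is an illustration under that assumption, and the function name `ensemble_normals_sketch` is hypothetical.

```python
import numpy as np


def ensemble_normals_sketch(normals: np.ndarray, reduction: str = "closest") -> np.ndarray:
    """Fuse unit normals of shape (B, 3, H, W) into one map of shape (1, 3, H, W)."""
    if normals.ndim != 4 or normals.shape[1] != 3:
        raise ValueError("expected shape (B, 3, H, W)")
    # Mean direction per pixel, renormalized to unit length.
    mean = normals.mean(axis=0, keepdims=True)
    mean = mean / np.maximum(np.linalg.norm(mean, axis=1, keepdims=True), 1e-6)
    if reduction == "mean":
        return mean
    if reduction != "closest":
        raise ValueError(f"unknown reduction: {reduction}")
    # Cosine similarity of every member to the mean direction: (B, H, W).
    similarity = (normals * mean).sum(axis=1)
    # Index of the best-matching member per pixel: (H, W).
    closest = similarity.argmax(axis=0)
    index = np.broadcast_to(closest[None, None], (1, 3) + closest.shape)
    return np.take_along_axis(normals, index, axis=0)


# Two members point along +Z, one along +X; the fused normal stays on +Z.
up = np.array([0.0, 0.0, 1.0]).reshape(1, 3, 1, 1)
side = np.array([1.0, 0.0, 0.0]).reshape(1, 3, 1, 1)
members = np.concatenate([up, up, side], axis=0).repeat(2, axis=3)
fused = ensemble_normals_sketch(members)
```

Unlike the "mean" reduction, picking the closest member always returns a normal that one of the ensemble members actually predicted, so the result is a unit vector without any extra renormalization.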

MarigoldDepthOutput

class diffusers.pipelines.marigold.MarigoldDepthOutput

( prediction: typing.Union[numpy.ndarray, torch.Tensor], uncertainty: typing.Union[NoneType, numpy.ndarray, torch.Tensor], latent: typing.Optional[torch.Tensor] )

Parameters

- prediction (np.ndarray, torch.Tensor) — Predicted depth maps with values in the range [0, 1]. The shape is always $numimages \times 1 \times height \times width$, regardless of whether the images were passed as a 4D array or a list.

- uncertainty (None, np.ndarray, torch.Tensor) — Uncertainty maps computed from the ensemble, with values in the range [0, 1]. The shape is $numimages \times 1 \times height \times width$.

- latent (None, torch.Tensor) — Latent features corresponding to the predictions, compatible with the latents argument of the pipeline. The shape is $numimages * numensemble \times 4 \times latentheight \times latentwidth$.

Output class for Marigold monocular depth prediction pipeline.
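The documented shapes can be checked with a short calculation. The sketch below computes the expected latent shape for a given number of images and ensemble members; it assumes a VAE downscaling factor of 8, which is typical for Stable Diffusion but is not stated on this page, and that height and width refer to the size at which the images were processed. The helper `expected_latent_shape` is hypothetical.

```python
from typing import Tuple


def expected_latent_shape(
    num_images: int,
    ensemble_size: int,
    height: int,
    width: int,
    vae_scale_factor: int = 8,  # assumption: typical Stable Diffusion VAE factor
) -> Tuple[int, int, int, int]:
    """Hypothetical helper: shape of the latent field for the given inputs."""
    # One latent per (image, ensemble member) pair, each with 4 channels.
    return (
        num_images * ensemble_size,
        4,
        height // vae_scale_factor,
        width // vae_scale_factor,
    )


shape = expected_latent_shape(num_images=2, ensemble_size=5, height=512, width=768)
```

Latents saved from one call can only be reused through the latents argument when the next call produces the same shape, that is, the same number of images, ensemble size, and processing size.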

MarigoldNormalsOutput

class diffusers.pipelines.marigold.MarigoldNormalsOutput

( prediction: typing.Union[numpy.ndarray, torch.Tensor], uncertainty: typing.Union[NoneType, numpy.ndarray, torch.Tensor], latent: typing.Optional[torch.Tensor] )

Parameters

- prediction (np.ndarray, torch.Tensor) — Predicted normals with values in the range [-1, 1]. The shape is always $numimages \times 3 \times height \times width$, regardless of whether the images were passed as a 4D array or a list.

- uncertainty (None, np.ndarray, torch.Tensor) — Uncertainty maps computed from the ensemble, with values in the range [0, 1]. The shape is $numimages \times 1 \times height \times width$.

- latent (None, torch.Tensor) — Latent features corresponding to the predictions, compatible with the latents argument of the pipeline. The shape is $numimages * numensemble \times 4 \times latentheight \times latentwidth$.

Output class for Marigold monocular normals prediction pipeline.
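Since predicted normals lie in the range [-1, 1], a common way to inspect them is to map each component linearly to an 8-bit color channel. The NumPy sketch below shows this convention; it is not necessarily what visualize_normals does (which may, for example, flip axes), and the helper `normals_to_rgb` is hypothetical.

```python
import numpy as np


def normals_to_rgb(normals: np.ndarray) -> np.ndarray:
    """Map normals of shape (N, 3, H, W) in [-1, 1] to uint8 images (N, H, W, 3)."""
    if normals.ndim != 4 or normals.shape[1] != 3:
        raise ValueError("expected shape (N, 3, H, W)")
    # Linear map from [-1, 1] to [0, 255]; values outside the range are clipped.
    rgb = (np.clip(normals, -1.0, 1.0) + 1.0) * 0.5 * 255.0
    # Move channels last, as expected by most image libraries.
    return np.transpose(rgb, (0, 2, 3, 1)).astype(np.uint8)


# A surface facing the camera (+Z) maps to the familiar bluish color.
flat = np.zeros((1, 3, 2, 2))
flat[:, 2] = 1.0
image = normals_to_rgb(flat)
```

With this mapping, the X, Y, and Z components drive the red, green, and blue channels, so a normal of (0, 0, 1) is rendered as (127, 127, 255).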