
| text (string) | sentence_id (int64) |
|---|---|

What happened to the net income in the first and second quarter? | 1 |

What was done differently in the third-quarter that the net income rose 26%? | 1 |

How much is 26% in dollars? | 1 |

Third-quarter net income rose because the chemical business was doing well? | 1 |

What was done differently this year that was not done last year? | 2 |

which year? | 2 |

How did the sales shoot up 7.4% in such a short time? | 3 |

When was it $540 mil? | 3 |

how quickly? | 3 |

Were there any losses at all? | 4 |

Is there a common name for this? | 4 |

what is caustic soda? | 4 |

What are gains in electrochemicals? | 4 |

How did the volume increase and what was the cause of higher prices? | 5 |

Who is buying the most? | 5 |

what is a volume increase? | 5 |

Which operations will it sell? | 1 |

Which year? | 2 |

Why did profit drop? | 2 |

Are they going to get unemployment? | 4 |

Which services? | 5 |

What is the number after taxes? | 5 |

Why is Canada narrowing the trade surplus with U.S.? | 1 |

Does this mean reducing the tariffs and thus reducing lost money when trading? | 1 |

did it have a bad effect? | 2 |

Why did imports barely rise when exports rose so much? | 2 |

How has this decrease in surplus affect trading? | 3 |

Why did the trade surplus reduce so much if tariffs were cut? | 3 |

which person said it? | 4 |

What kind of new plant equipment? | 4 |

who is the second biggest pair? | 5 |

Why are they in such a hurry to come to agreements for the tariff cuts? | 5 |

Who is he and where did he come from? | 1 |

What is this company? | 2 |

Did this mean he dealt with the money required for the campaign? | 3 |

What does strategy refer too in this context? | 5 |

What are they? | 1 |

What is a defunct Arkansas thrift? | 1 |

How did worthless loan guarantees inflate the earnings? | 1 |

why are they worthless loan guarantees? | 1 |

What is a thrift in this context? | 1 |

How did someone so crooked get two positions of power at this company? | 2 |

is he likely to get it? | 2 |

Does he have a chance of parole? | 2 |

why hasnt it been set? | 3 |

Why and what is the delay? | 3 |

How did he think he'd get away with that? | 4 |

What methods did he take to commit to this conspiracy? | 4 |

Again, it doesn't let me highlight everything - just a single word. What wrongdoing were the initially accused of? | 5 |

Why would these two individuals not be charged as well? | 5 |

why werent they charged? | 5 |

How did Prospect Group Inc. gain any control, over Recognition Equipment Inc? | 1 |

How was the offer hostile? | 1 |

How did it gain control despite failing? | 1 |

What is the name of this spokeswoman? | 2 |

Who replaced those guys? | 3 |

what was his reasoning for resignation? | 3 |

Why did Mr, Sheinberg get promoted? | 4 |

Why will he retain this role if not the other? | 4 |

does he want to keep the position? | 4 |

How many top spots did Recognition have? | 5 |

WHAT IS A TENDER OFFER? | 5 |

How did they help? | 1 |

What does that equate to? | 1 |

why is it a concern? | 2 |

How does this play into things? | 3 |

was this a long time ago? | 3 |

How much would they dividend be? | 4 |

Why did it climb? | 5 |

What happened on this date? | 1 |

What is significant about Tuesday, October 17,1989? | 1 |

What is this date? | 1 |

Why don't interest rates always represent transactions? | 2 |

What is a good representation of actual transactions? | 2 |

what do they represent? | 2 |

Where is the rest of the list? | 3 |

what is a prime rate? | 3 |

What is this for? | 3 |

What is the base rate on corporate loans at large US banks? | 4 |

which banks? | 4 |

How much in USD is that number? | 5 |

What are federal funds? | 5 |

What do Federal Funds represent? | 5 |

What are these for? | 5 |

Why was there such a big debt in severance? | 1 |

How big was it's database software inventories before? | 1 |

Are these severance costs going to laid off employees? | 1 |

What was the reason for the slide? | 2 |

What was the revenue prior to this? | 2 |

What happened compared to a year-ago? | 3 |

What was the reason behind the decline in revenue? | 3 |

Is this the exact same quarter just one year prior? | 3 |

What is Ashton Tate? | 4 |

What is the company's profit during this period? | 5 |

Is this time measured in quarters or months? | 5 |

who is hoping? | 1 |

What does "deficit-reduction legislation" mean? | 1 |

What does the "House version" consist of? | 1 |

Why did the conference break down? | 1 |

Which house leaders? | 2 |

Backtracing: Retrieving the Cause of the Query


Authors: Rose E. Wang, Pawan Wirawarn, Omar Khattab, Noah Goodman, Dorottya Demszky

Findings of EACL, Long Paper, 2024.

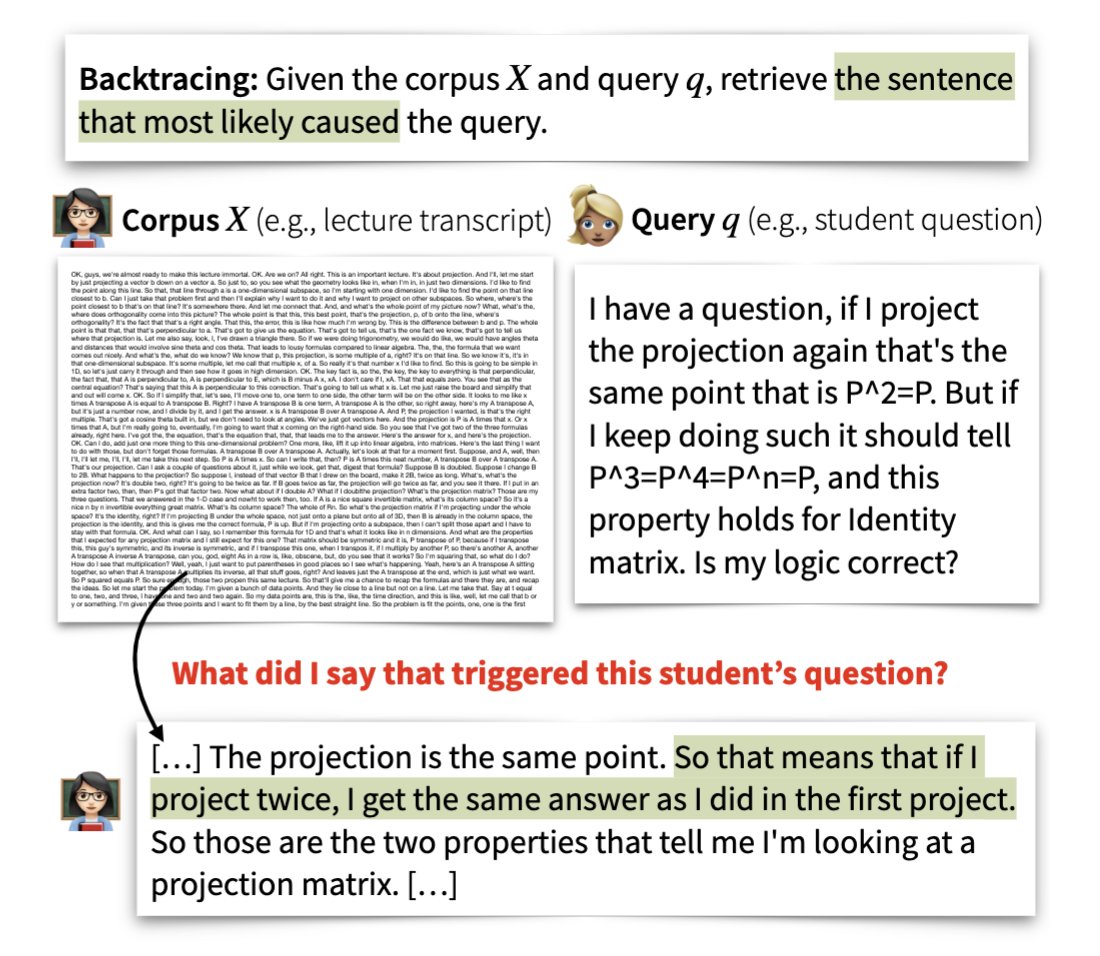

What is Backtracing?

Many online content portals allow users to ask questions to supplement their understanding (e.g., of lectures or news articles). While information retrieval (IR) systems may provide answers to such user queries, they do not directly help content creators identify the segments that caused a user to ask those questions. Identifying those segments is useful for several purposes, such as helping creators improve their content. We introduce the task of backtracing, in which systems retrieve the text segment that most likely provoked a user query.

This Repository

In this repository, you will find:

- The first benchmark for backtracing, composed of three heterogeneous datasets and causal retrieval tasks: understanding the cause of (a) student confusion in the Lecture domain, (b) reader curiosity in the News Article domain, and (c) user emotion in the Conversation domain.

- Evaluations of a suite of retrieval systems on backtracing, including: BM25, bi-encoder methods, cross-encoder methods, re-ranker methods, gpt-3.5-turbo-16k, and several likelihood-based methods that use pre-trained language models to estimate the probability of the query conditioned on variations of the corpus.
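To make the retrieval setup concrete, here is a minimal sketch of the lexical end of that suite: BM25 scoring of each source sentence against a query, using only the standard library. It is not the implementation used in this repository. The function names, the tokenizer, the `k1` and `b` defaults, the non-negative IDF variant, and the toy sentences are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters (illustrative tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_rank(query, sources, k1=1.5, b=0.75):
    """Return source indices ordered from most to least likely cause of the query."""
    docs = [tokenize(s) for s in sources]
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs if n_docs else 0.0
    # Number of source sentences that contain each term.
    doc_freq = Counter(term for d in docs for term in set(d))
    query_terms = set(tokenize(query))

    scored = []
    for idx, doc in enumerate(docs):
        tf = Counter(doc)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # Non-negative IDF variant: ln((N - n + 0.5) / (n + 0.5) + 1).
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            length_norm = k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scored.append((score, idx))
    # Highest score first; ties are broken by original sentence order.
    return [idx for _, idx in sorted(scored, key=lambda p: (-p[0], p[1]))]


# Toy sentences invented for this example; they are not taken from the dataset.
sources = [
    "Third-quarter net income rose 26% from a year earlier.",
    "Sales rose 7.4% in the same period.",
    "The company cited gains in caustic soda and other electrochemicals.",
]
ranking = bm25_rank("what is caustic soda?", sources)
print(ranking[0])  # index of the sentence judged most likely to have provoked the query
```

A purely lexical scorer like this can only succeed when the query reuses words from the provoking sentence. Questions such as "which year?" share almost no vocabulary with their source.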

Our results reveal several limitations of these methods; for example, bi-encoder methods struggle when the query and target segment share limited similarity, and likelihood-based methods struggle to model what may be unknown information to a user. Overall, these results suggest that backtracing is a challenging task that requires new retrieval approaches.

We hope our benchmark serves to evaluate and improve future retrieval systems for backtracing and ultimately spawns systems that empower content creators to understand user queries, refine their content, and provide users with better experiences.

Citation

If you find our work useful or interesting, please consider citing it!

@inproceedings{wang2024backtracing,

title = {Backtracing: Retrieving the Cause of the Query},

booktitle = {Findings of the Association for Computational Linguistics: EACL 2024},

publisher = {Association for Computational Linguistics},

year = {2024},

author = {Wang, Rose E. and Wirawarn, Pawan and Khattab, Omar and Goodman, Noah and Demszky, Dorottya},

}

Reproducibility

We ran our experiments with Python 3.11 on A6000 machines. To reproduce the results in our work, please run the following commands:

>> conda create -n backtracing python=3.11

>> conda activate backtracing

>> pip install -r requirements.txt # install all of our requirements

>> source run_table_evaluations.sh

run_table_evaluations.sh writes text files under results/<dataset>/. These files contain the results reported in Tables 2 and 3. Here is an example of what the output should look like:

>> cat results/sight/semantic_similarity.txt

Query dirs: ['data/sight/query/annotated']

Source dirs: ['data/sight/sources/annotated']

Output fname: results/sight/annotated/semantic_similarity.csv

Output fname: results/sight/annotated/semantic_similarity.csv

Accuracy top 1: 0.23

Min distance top 1: 91.85

Accuracy top 3: 0.37

Min distance top 3: 35.22
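The two metric names in this output can be read as follows. This is a sketch under stated assumptions rather than the repository's evaluation code: "Accuracy top k" is taken to be the fraction of queries whose gold sentence appears among the top k retrieved sentences, and "Min distance top k" is assumed to be the smallest sentence-index gap between any of the top k predictions and the gold sentence, averaged over queries. The function names are hypothetical.

```python
def top_k_accuracy(ranked_lists, gold_indices, k):
    """Fraction of queries whose gold sentence index is among the top-k predictions."""
    hits = sum(
        1 for ranked, gold in zip(ranked_lists, gold_indices) if gold in ranked[:k]
    )
    return hits / len(gold_indices)


def mean_min_distance(ranked_lists, gold_indices, k):
    """Average, over queries, of the smallest |predicted - gold| among the top-k.

    Assumed reading of "Min distance top k"; the README does not define it.
    """
    total = sum(
        min(abs(pred - gold) for pred in ranked[:k])
        for ranked, gold in zip(ranked_lists, gold_indices)
    )
    return total / len(gold_indices)


# Two toy queries: each ranked list orders sentence indices from best to worst.
ranked_lists = [[2, 0, 1], [0, 3, 1]]
gold_indices = [2, 1]

for k in (1, 3):
    print(f"Accuracy top {k}:", top_k_accuracy(ranked_lists, gold_indices, k))
    print(f"Min distance top {k}:", mean_min_distance(ranked_lists, gold_indices, k))
```

Under this reading, a lower minimum distance means the system's guesses land closer to the provoking sentence even when they miss it exactly.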

Dataset Structure

The datasets are located under the data directory.

Each dataset contains the query directory (e.g., student question) and the sources directory (e.g., the lecture transcript sentences).

└── data                # Backtracing Datasets
    ├── sight           # Lecture Domain, SIGHT, derived from https://github.com/rosewang2008/sight
    │   ├── query
    │   └── sources
    ├── inquisitive     # News Article Domain, Inquisitive, derived from https://github.com/wjko2/INQUISITIVE
    │   ├── query
    │   └── sources
    └── reccon          # Conversation Domain, RECCON, derived from https://github.com/declare-lab/RECCON
        ├── query
        └── sources
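The layout above can be read with a few lines of standard-library Python. The sketch below rests on several assumptions that the README does not confirm: that each of `query` and `sources` has a split subdirectory (the example output above mentions `data/sight/query/annotated`), that a query file and its source file share the same file name, and that each `.json` file holds either a JSON array of records or one JSON object per line. The helper names `read_records` and `load_pair` are hypothetical.

```python
import json
from pathlib import Path


def read_records(path):
    """Read a file as a JSON array, a single JSON object, or JSON Lines."""
    text = Path(path).read_text(encoding="utf-8").strip()
    if not text:
        return []
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Not a single JSON document, so assume one JSON object per line.
        return [json.loads(line) for line in text.splitlines() if line.strip()]
    return data if isinstance(data, list) else [data]


def load_pair(data_root, dataset, split, file_name):
    """Load the queries and the source sentences stored under the same file name."""
    root = Path(data_root) / dataset
    queries = read_records(root / "query" / split / file_name)
    sources = read_records(root / "sources" / split / file_name)
    return queries, sources


if __name__ == "__main__":
    # The dataset, split, and file name here are placeholders; adjust to your checkout.
    queries, sources = load_pair("data", "inquisitive", "test", "0101.json")
    print(len(queries), "queries,", len(sources), "source sentences")
```

In the preview rows, each query carries a `text` and a `sentence_id`. If `sentence_id` indexes into the source sentences, it can serve as the gold label for retrieval, but check the indexing convention (0-based or 1-based) against the data before relying on it.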

Retrieving from scratch

The commands in the Reproducibility section use cached scores. To run the retrieval from scratch, run:

>> export OPENAI_API_KEY='yourkey' # only needed for the gpt-3.5-turbo-16k results; otherwise skip this line

>> source run_inference.sh
