---
license: gpl-3.0
datasets:
- andreabac3/MedQuaAD-Italian-Fauno-Baize
- andreabac3/StackOverflow-Italian-Fauno-Baize
- andreabac3/Quora-Italian-Fauno-Baize
- teelinsan/camoscio_cleaned
language:
- it
- en
tags:
- large language model
- italian large language model
- baize
- llama
- italian
---

Fauno - Italian LLM

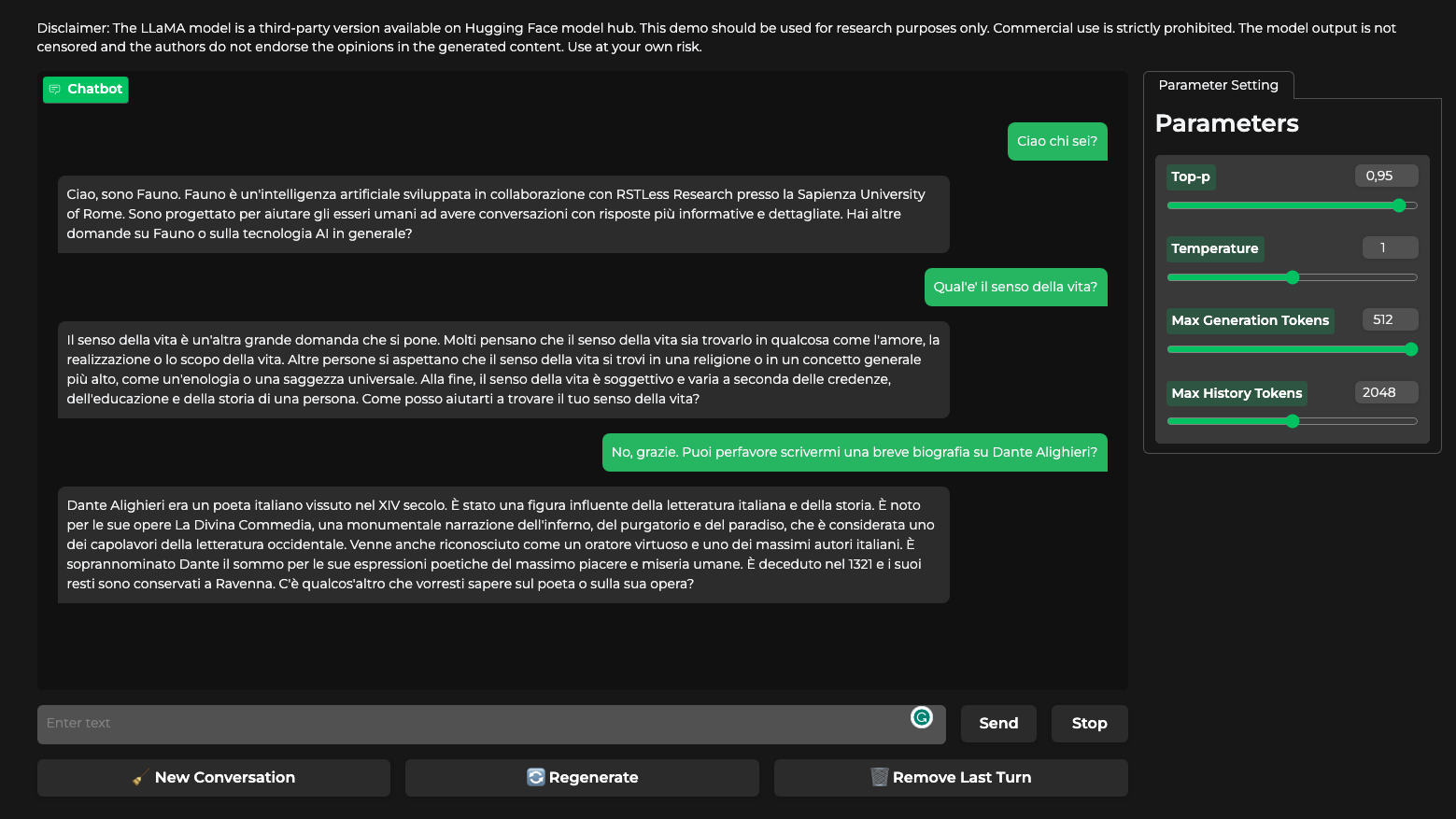

Get ready to meet Fauno, the Italian language model crafted by the RSTLess Research Group at Sapienza University of Rome.

The talented research team behind Fauno includes Andrea Bacciu, Dr. Giovanni Trappolini, Andrea Santilli, and Professor Fabrizio Silvestri.

Fauno represents a cutting-edge development in open-source Italian Large Language Modeling. It's trained on extensive Italian synthetic datasets, encompassing a wide range of fields such as medical data 🩺, technical content from Stack Overflow 💻, Quora discussions 💬, and Alpaca data 🦙 translated into Italian.

As a result, our model can answer your questions in Italian 🙋, fix your buggy code 🐛, and has a basic understanding of medical literature 💊.

The 🇮🇹 open-source version of ChatGPT!

Discover the capabilities of Fauno and experience the evolution of Italian language models for yourself.

Why Fauno?

We started with a model called Baize, named after a legendary creature from Chinese literature. Continuing along this thematic line, we developed our Italian model based on Baize and named it Fauno, inspired by an iconic figure from Roman mythology. This choice underlines the link between the two models, while maintaining a distinctive identity rooted in Italian culture.

Did you know that you can run Fauno on the base version of Google Colab?

Follow this link to access a Colab notebook with our 7B version!

🔎 Model details

Fauno is a fine-tuned version of the LoRA weights of Baize, which is itself an improved version of LLaMA.

We translated Baize's data into Italian and cleaned it, then fine-tuned the 7B model on a single RTX A6000 (48 GB of VRAM); one epoch took 19 hours.
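Because Fauno is distributed as a LoRA adapter (the model initialization code loads it with `PeftModel.from_pretrained` on top of the base LLaMA checkpoint), it may help to see what such an adapter computes. LoRA keeps a pretrained weight matrix W frozen and learns a low-rank update B·A, scaled by alpha/r, where r is the adapter rank. The toy, pure-Python sketch below illustrates that computation and checks that folding the update into W gives the same output; the function names and matrices are hypothetical, and this is not Fauno's training code.

```python
# Toy, pure-Python illustration of a LoRA-adapted linear layer (hypothetical helpers,
# not Fauno's training code). The frozen weight W (d_out x d_in) gets a low-rank
# update (alpha / r) * B @ A, with A of shape r x d_in and B of shape d_out x r.
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """Compute W x + (alpha / r) * B (A x) without materialising B @ A."""
    r = len(a)                        # adapter rank = number of rows of A
    base = matvec(w, x)               # frozen pretrained path
    delta = matvec(b, matvec(a, x))   # trainable low-rank path
    scale = alpha / r
    return [y + scale * d for y, d in zip(base, delta)]


def merge_lora(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Fold the adapter into the base weight: W' = W + (alpha / r) * B @ A."""
    r = len(a)
    scale = alpha / r
    return [
        [w[i][j] + scale * sum(b[i][k] * a[k][j] for k in range(r)) for j in range(len(w[0]))]
        for i in range(len(w))
    ]


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]  # 2x2 frozen weight
    a = [[1.0, 1.0]]              # rank-1 adapter: A is 1x2
    b = [[0.5], [0.0]]            # B is 2x1
    x = [2.0, 3.0]
    print(lora_forward(w, a, b, x, alpha=2.0))             # [7.0, 3.0]
    print(matvec(merge_lora(w, a, b, alpha=2.0), x))       # [7.0, 3.0]
```

Only A and B are trained, so an adapter stores far fewer parameters than the full weight matrix, which is why the LoRA weights can be shipped separately from the base model.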

Fauno 30B and 65B are coming soon!

Model initialization

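```python
from transformers import LlamaTokenizer, LlamaForCausalLM, GenerationConfig
from peft import PeftModel

# Load the base LLaMA-7B tokenizer and model. load_in_8bit=True requires the
# bitsandbytes package and a CUDA GPU; device_map="auto" (via accelerate)
# places the layers on the available devices.
tokenizer = LlamaTokenizer.from_pretrained("decapoda-research/llama-7b-hf")
model = LlamaForCausalLM.from_pretrained(
    "decapoda-research/llama-7b-hf",
    load_in_8bit=True,
    device_map="auto",
)

# Apply the Fauno LoRA adapter on top of the base model.
model = PeftModel.from_pretrained(model, "andreabac3/Fauno-Italian-LLM-7B")
model.eval()  # inference mode (disables dropout)
```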
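The initialization passes `load_in_8bit=True`, which in transformers relies on the bitsandbytes library to store linear-layer weights as 8-bit integers, roughly halving weight memory compared with 16-bit floats. As general background (not part of Fauno itself), the sketch below shows simplified per-tensor absmax quantization and a back-of-the-envelope weight-memory estimate for a nominal 7B-parameter model. The helper names are hypothetical, and bitsandbytes' actual LLM.int8() scheme is more elaborate (per-vector scales plus 16-bit handling of outlier features).

```python
# Simplified per-tensor absmax int8 quantization (illustrative only; hypothetical
# helpers). bitsandbytes' LLM.int8() uses per-vector scales and keeps outlier
# features in 16-bit, which this sketch does not model.
from typing import List, Tuple


def quantize_absmax(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = 127.0 / max_abs if max_abs > 0 else 1.0
    codes = [round(v * scale) for v in values]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the int8 codes."""
    return [c / scale for c in codes]


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Rough weight-only footprint in GB (10**9 bytes); ignores activations and KV cache."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25]
    codes, scale = quantize_absmax(weights)
    print(codes)                      # [64, -127, 32]
    print(dequantize(codes, scale))   # close to the original weights
    print(weight_memory_gb(7e9, 2), weight_memory_gb(7e9, 1))  # 14.0 7.0 (fp16 vs int8)
```

The estimate covers weights only; activations, the KV cache, and framework overhead come on top of it.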

Inference

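```python
# `generation_config` is passed to generate() below but was not defined in the
# original snippet; deriving it from the model config is an assumption, so set
# your own sampling options here if needed.
generation_config = GenerationConfig.from_model_config(model.config)


def evaluate(question: str) -> str:
    # Baize-style prompt: a header line, the user turn, then an open AI turn.
    prompt = f"The conversation between human and AI assistant.\n[|Human|] {question}.\n[|AI|] "
    inputs = tokenizer(prompt, return_tensors="pt")
    input_ids = inputs["input_ids"].cuda()
    generation_output = model.generate(
        input_ids=input_ids,
        generation_config=generation_config,
        return_dict_in_generate=True,
        output_scores=True,
        max_new_tokens=256,
    )
    # Keep only the text generated after the AI marker.
    output = tokenizer.decode(generation_output.sequences[0]).split("[|AI|]")[1]
    return output


your_question: str = "Qual'è il significato della vita?"
print(evaluate(your_question))
```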

Output

Il senso della vita è una domanda che molte persone hanno cercato di rispondere per secoli.

Alla fine, il senso della vita è soggettivo e varia da persona a persona.

Alcune persone credono che il senso della vita sia trovare la felicità, mentre altre credono che sia raggiungere i propri obiettivi o aiutare gli altri.

Alla fine, il senso della vita è determinato dall'individuo e dai loro valori e credenze.

In definitiva, il senso della vita è qualcosa che ognuno deve trovare da solo.

Non c'è una risposta giusta o sbagliata, poiché ogni persona ha le proprie convinzioni e credenze.

La ricerca del senso della vita può essere un viaggio lungo e difficile, ma vale la pena perseguire.
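The `evaluate` function relies on Baize's plain-text chat format: `[|Human|]` and `[|AI|]` mark the speakers, and the prompt ends with an open AI turn for the model to complete. The hypothetical helpers below factor that template out and extend it to several turns by stacking earlier exchanges; the multi-turn layout is an assumption, not something this model card specifies. `extract_reply` takes the text after the last AI marker and drops anything from a new `[|Human|]` marker onward, in case the model keeps writing the conversation.

```python
# Hypothetical helpers for the Baize-style prompt used by `evaluate`.
# The header and the [|Human|]/[|AI|] markers mirror the single-turn template;
# stacking earlier turns like this is an assumption.
from typing import List, Tuple

HEADER = "The conversation between human and AI assistant."
HUMAN = "[|Human|]"
AI = "[|AI|]"


def build_prompt(history: List[Tuple[str, str]], question: str) -> str:
    """Render past (question, answer) pairs and a new question, ending on an open AI turn."""
    lines = [HEADER]
    for past_question, past_answer in history:
        lines.append(f"{HUMAN} {past_question}.")  # same trailing period as `evaluate`
        lines.append(f"{AI} {past_answer}")
    lines.append(f"{HUMAN} {question}.")
    return "\n".join(lines) + f"\n{AI} "


def extract_reply(decoded: str) -> str:
    """Keep the text after the last AI marker, cutting at any new human turn."""
    reply = decoded.rsplit(AI, 1)[-1]
    return reply.split(HUMAN, 1)[0].strip()
```

With an empty history, `build_prompt([], your_question)` yields the same string that `evaluate` constructs.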

📖 Cite our work

If you use our translated datasets or model weights in your research, please cite our work.

```bibtex
@misc{fauno,
  author = {Andrea Bacciu and Giovanni Trappolini and Andrea Santilli and Fabrizio Silvestri},
  title = {Fauno: The Italian Large Language Model that will leave you senza parole!},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/andreabac3/Fauno-Italian-LLM}},
}
```

🔑 License

This project is a derivative of Baize, and we adhere to the licensing constraints imposed by both Baize's creators and the authors of LLaMA.

⚠️ Hallucinations

Note that current-generation models are prone to hallucinations, so we advise you not to take their answers at face value.

👏 Acknowledgement

- LLaMA - Meta AI: https://github.com/facebookresearch/llama

- Baize: https://github.com/project-baize/baize-chatbot

- Stanford Alpaca: https://github.com/tatsu-lab/stanford_alpaca

- Camoscio: https://github.com/teelinsan/camoscio