license: creativeml-openrail-m

license: creativeml-openrail-m

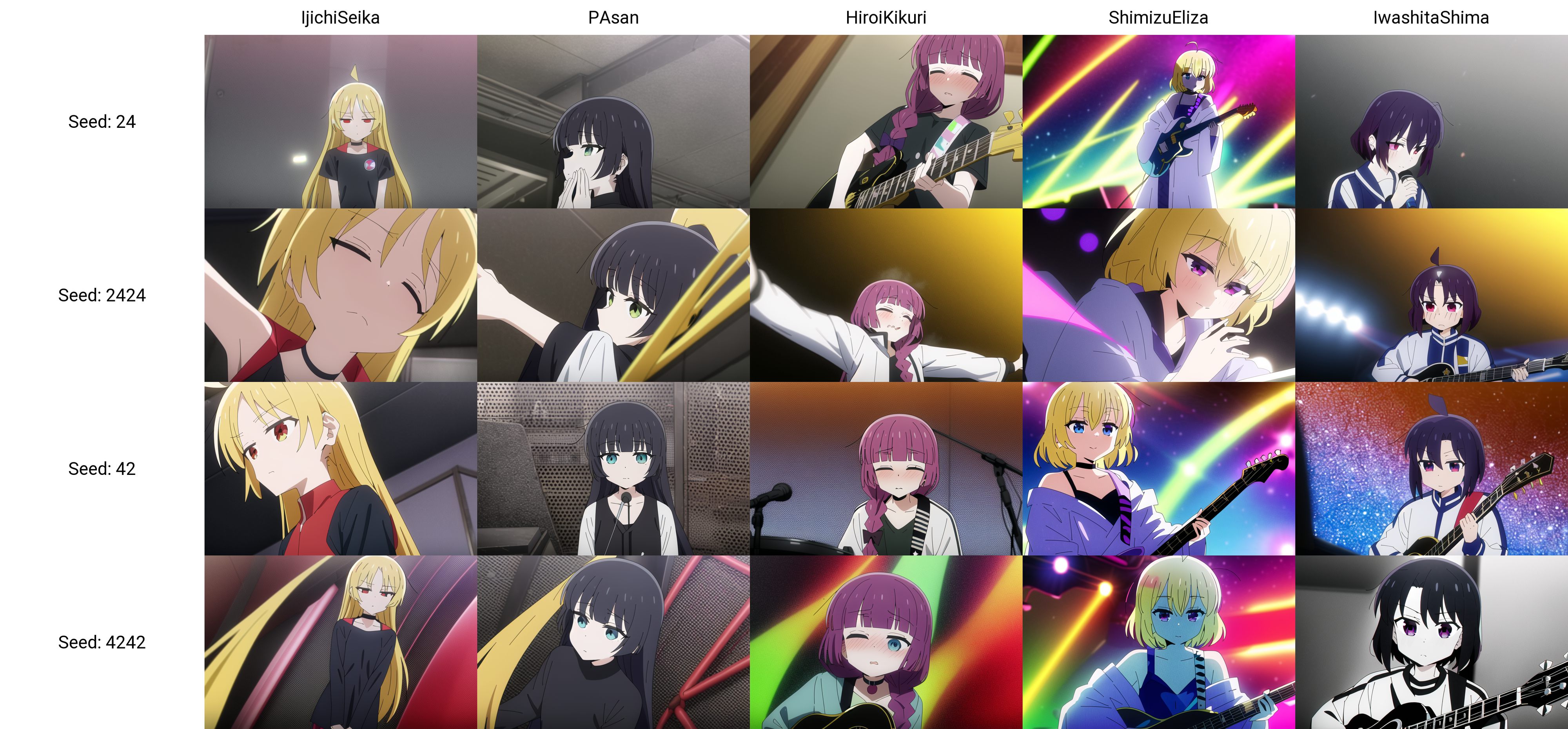

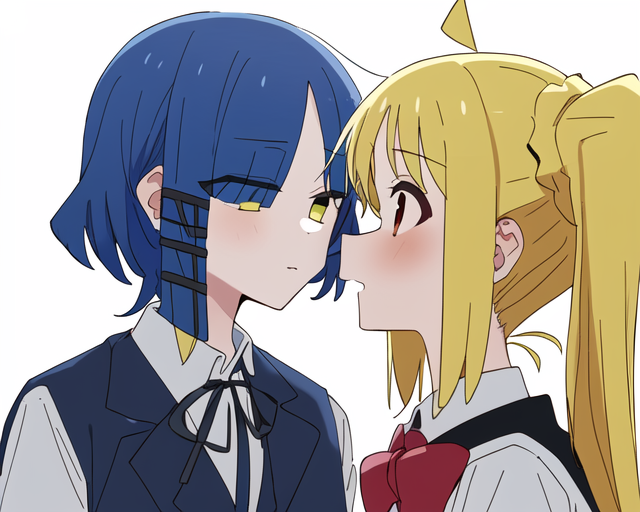

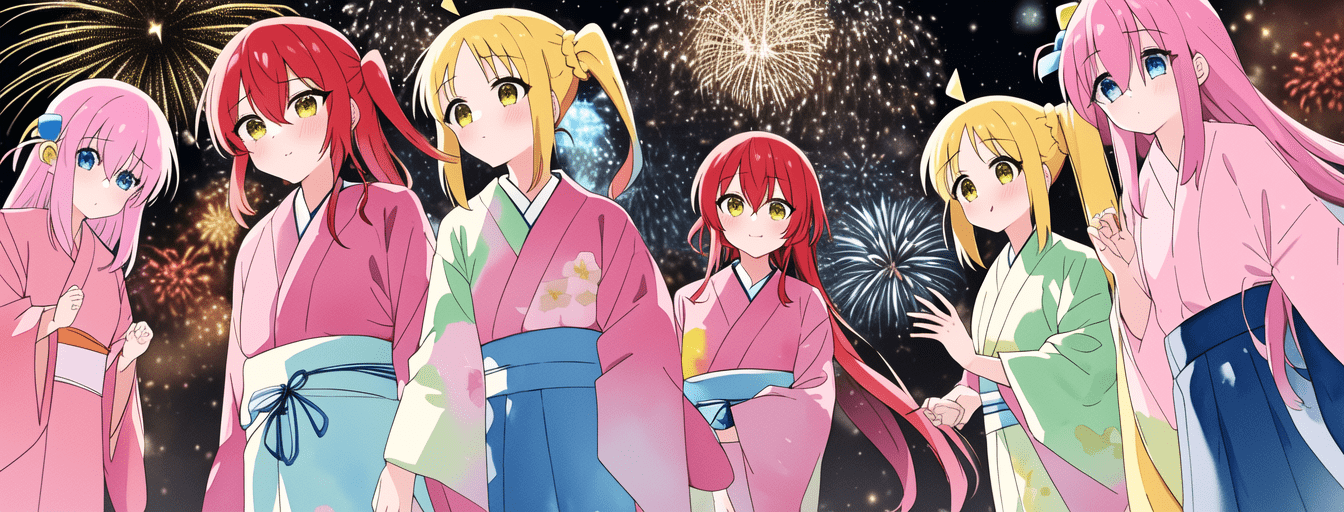

This is a low-quality bocchi-the-rock (ぼっち・ざ・ろっく!) character model. Similar to my yama-no-susume model, this model is capable of generating multi-character scenes beyond images of a single character. Of course, the result is still hit-or-miss, but I with some chance you can get the entire Kessoku Band right in one shot, and otherwise, you can always rely on inpainting. Here are two examples:

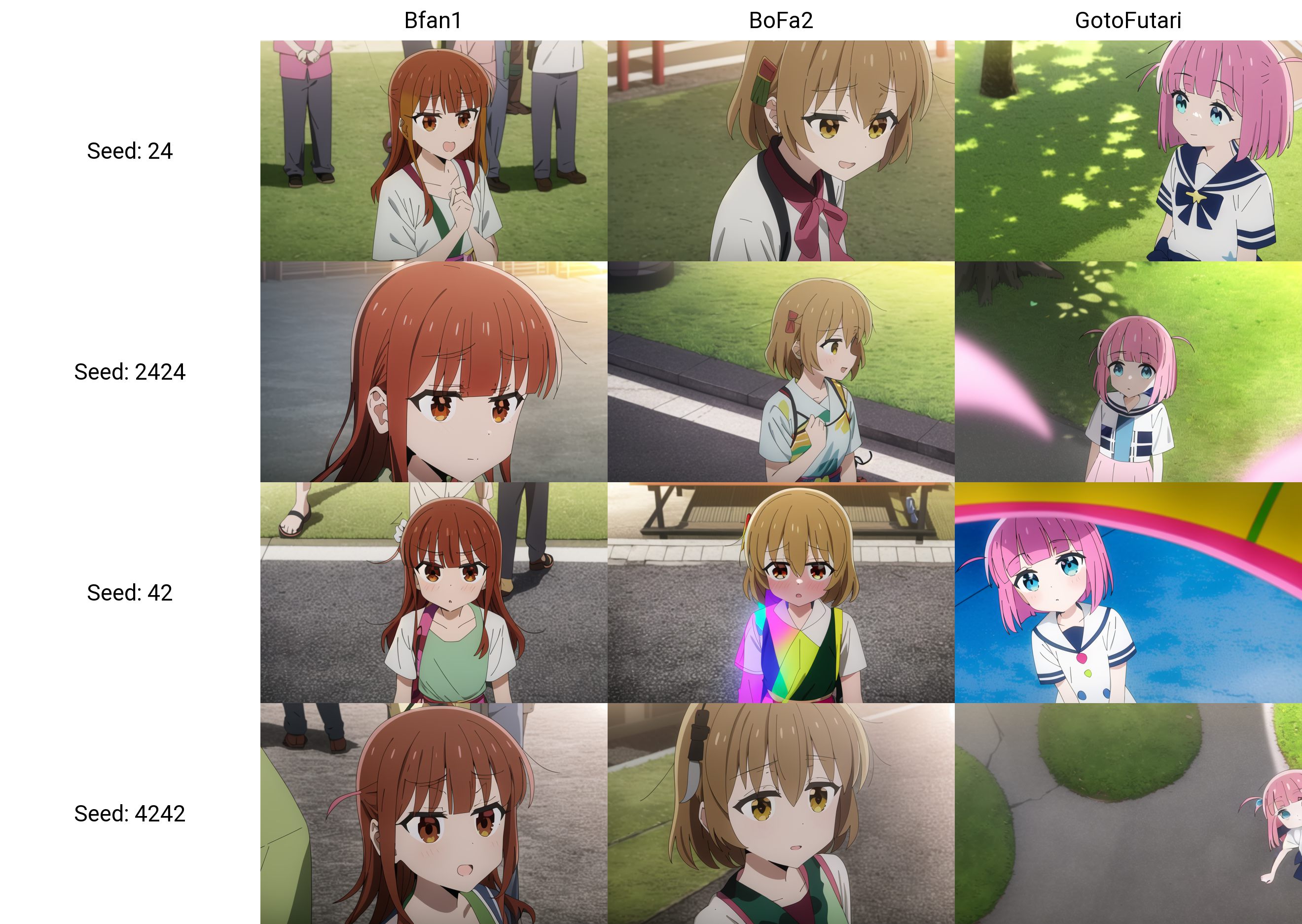

Characters

The model knows 12 characters from bocchi the rock. The ressemblance with a character can be improved by a better description of their appearance (for example by adding long wavy hair to ShimizuEliza).

Dataset description

The dataset contains around 27K images with the following composition

- 7024 anime screenshots

- 1630 fan arts

- 18519 customized regularization images

The model is trained with a specific weighting scheme to balance between different concepts. For example, the above three categories have weights respectively 0.3, 0.25, and 0.45. Each category is itself split into many sub-categories in a hierarchical way. For more details on the data preparation process please refer to https://github.com/cyber-meow/anime_screenshot_pipeline

Training Details

Trainer

The model is trained using EveryDream1 as

EveryDream seems to be the only trainer out there that supports sample weighting (through the use of multiply.txt).

Note that for future training it makes sense to migrate to EveryDream2.

Hardware and cost

The model is trained on runpod using 3090 and cost me around 15 dollors.

Hyperparameter specification

The model is trained for 48000 steps, at batch size 4, lr 1e-6, resolution 512, and conditional dropping rate of 10%.

Note that as a consequence of the weighting scheme which translates into a number of different multiply for each image, the count of repeat and epoch has a quite different meaning here. For example, depending on the weighting, I have around 300K images (some images are used multiple times) in an epoch, and therefore I did not even finish an entire epoch with the 48000 steps at batch size 4.

Failures

- For the first 24000 steps I use the trigger words

Bfan1andBfan2for the two fans of Bocchi. However, these two words are too similar and the model fails to different characters for these. Therefore I changed Bfan2 to Bofa2 at step 24000. This seemed to solve the problem. - Character blending is always an issue.

- When prompting the four characters of Kessoku Band we often get side shots. I think this is because of some overfitting to a particular image.