Model description

A character-level LSTM trained on Andrej Karpathy's tiny_shakespeare dataset, from his blog post "The Unreasonable Effectiveness of Recurrent Neural Networks".

Made to experiment with Hugging Face and W&B.

Intended uses & limitations

The model predicts the next character from a variable-length input sequence. After 18 epochs of training, it generates somewhat coherent text, although the greedy decoding used below tends to fall into repeated phrases.

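```python
import tensorflow as tf


def generate_text(model, encoder, text, n):
    # Vocabulary list used to map predicted indices back to characters.
    vocab = encoder.get_vocabulary()
    generated_text = text
    for _ in range(n):
        # Encode everything generated so far and predict the next character.
        encoded = encoder([generated_text])
        pred = model.predict(encoded, verbose=0)
        # Greedy decoding: pick the index of the most likely character.
        pred = tf.squeeze(tf.argmax(pred, axis=-1)).numpy()
        generated_text += vocab[pred]
    return generated_text


# `model` and `encoder` are assumed to be loaded already.
sample = "M"
print(generate_text(model, encoder, sample, 100))
```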

```
MQLUS:
I will be so that the street of the state,
And then the street of the street of the state,
And
```

Training and evaluation data

Training procedure

The dataset consists of various works of William Shakespeare concatenated into a single file. The resulting file consists of individual speeches separated by \n\n.

The tokenizer is a Keras TextVectorization preprocessor that uses a simple character-based vocabulary.
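As a rough illustration of what a character-based vocabulary does, the sketch below builds one in plain Python instead of using the Keras layer itself. The function names `build_char_vocab` and `encode` are hypothetical. Reserving index 0 for padding and index 1 for unknown characters is an assumption, modeled on the convention such layers commonly use for integer output.

```python
from collections import Counter


def build_char_vocab(text):
    """Return a list of characters; a character's position is its integer id."""
    counts = Counter(text)
    # Most frequent characters first; ties broken alphabetically for determinism.
    ordered = sorted(counts, key=lambda ch: (-counts[ch], ch))
    # Assumption: ids 0 and 1 are reserved for padding and unknown characters.
    return ["", "[UNK]"] + ordered


def encode(text, vocab):
    """Map each character to its id, falling back to the unknown id (1)."""
    index = {ch: i for i, ch in enumerate(vocab)}
    return [index.get(ch, 1) for ch in text]


vocab = build_char_vocab("ROMEO:\nO Romeo, Romeo!")
ids = encode("Romeo?", vocab)
# Decoding is a lookup in the same list, which is what `vocab[pred]` does above.
decoded = "".join(vocab[i] for i in ids)
```

Here `?` never appears in the text used to build the vocabulary, so it maps to the unknown id and decodes back as `[UNK]`.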

To construct the training set, each example takes a window of 100 consecutive characters as input and the character that follows it as the target. The window is slid forward one character at a time across the whole text, which yields 1,115,294 training examples; these are then shuffled.
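The windowing step can be sketched in plain Python as follows. The original input pipeline is not shown in this card, so the function name `make_examples` and the `seed` parameter are hypothetical. A text of N characters produces N - 100 examples this way, which matches the reported count if the source file holds 1,115,394 characters.

```python
import random


def make_examples(text, seq_len=100, seed=0):
    """Pair every seq_len-character window with the character that follows it."""
    examples = [
        (text[i:i + seq_len], text[i + seq_len])
        for i in range(len(text) - seq_len)
    ]
    # Shuffle so that consecutive batches do not come from adjacent positions.
    random.Random(seed).shuffle(examples)
    return examples


# Toy text and a short window, only to keep the demonstration small.
toy_text = "First Citizen:\nBefore we proceed any further, hear me speak."
examples = make_examples(toy_text, seq_len=10)
```

Each element of `examples` is an `(input, target)` pair of strings; in practice both would be passed through the encoder before training.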

TODO: upload encoder

Training hyperparameters

| Hyperparameters | Value |
|---|---|
| epochs | 18 |
| batch_size | 1024 |
| optimizer | AdamW |
| weight_decay | 0.001 |
| learning_rate | 0.00025 |
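For a sense of scale, the number of optimizer steps implied by these values can be worked out from the example count given above. This is a back-of-the-envelope sketch; it assumes the final, smaller batch of each epoch is kept, which the card does not state.

```python
import math

num_examples = 1_115_294
batch_size = 1024
epochs = 18

# Assumption: the last, smaller batch of each epoch is kept rather than dropped.
steps_per_epoch = math.ceil(num_examples / batch_size)
total_steps = steps_per_epoch * epochs
print(steps_per_epoch, total_steps)  # 1090 19620
```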

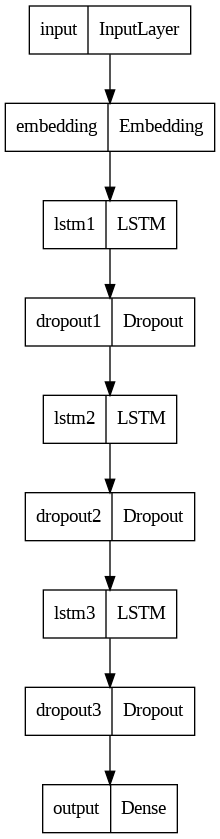

Model Plot
