Ziya2-13B-Chat

- Main Page: Fengshenbang

- GitHub: Fengshenbang-LM

Ziya Series Models

- Ziya-LLaMA-13B-v1.1

- Ziya-LLaMA-7B-Reward

- Ziya-LLaMA-13B-Pretrain-v1

- Ziya-Writing-LLaMa-13B-v1

- Ziya-BLIP2-14B-Visual-v1

- Ziya-Coding-15B-v1

- Ziya-Coding-34B-v1.0

- Ziya2-13B-Base

- Ziya-Reader-13B-v1.0

Brief Introduction

Ziya2-13B-Chat is the chat version of Ziya2-13B-Base. It was fine-tuned with supervision on 300,000 high-quality general instruction samples and 400,000 knowledge-enhanced instruction samples, and then trained with full-parameter RLHF against a reward model that was itself trained on tens of thousands of high-quality human preference samples.

Model Taxonomy

| Demand | Task | Series | Model | Parameter | Extra |
|---|---|---|---|---|---|
| General | AGI model | Ziya | LLaMA2 | 13B | English & Chinese |

Model Information

Continual Pretraining

Meta released the Llama2 series of large models in July 2023. Llama2 was pre-trained on 2 trillion tokens, compared with 1.4 trillion tokens for LLaMA1, and clearly outperforms LLaMA1 on various leaderboards.

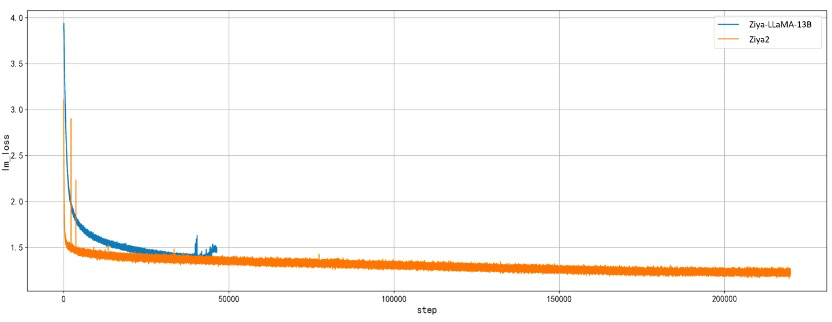

Ziya2-13B-Base retains the efficient Chinese encoding and decoding scheme of Ziya-LLaMA-13B, but uses a better initialization algorithm that yields a lower initial training loss. We also optimized the Fengshen-PT continual-training framework. For efficiency, we integrated FlashAttention2, Apex RMS norm, and related techniques, which increased training speed by 38% over Ziya-LLaMA-13B (to 163 TFLOPS per GPU). For stability, we trained in BF16 and fixed bugs in the underlying distributed framework so that the model could train continuously and stably, which resolved the late-stage instability encountered when training Ziya-LLaMA-13B. We live-streamed the training on July 25 and ultimately completed continual training on all of the data. Model performance was still trending upward, so we plan to keep optimizing Ziya2-13B-Base.

Supervised Fine-tuning

Building on the strong base capability of Ziya2-13B-Base, we optimized the training strategy for the supervised fine-tuning (SFT) phase.

We found that high-quality, diverse task instruction data does the most to elicit the knowledge learned during pre-training. We therefore used the Evol-Instruct method to augment the instruction dataset we had collected, and used a reward model to select high-quality samples. From a dataset of 20 million instructions, we constructed 300,000 high-quality general instruction fine-tuning samples covering a wide range of tasks, including question answering, reasoning, coding, common sense, dialogue, writing, natural language understanding, and safety.

In addition, we found that introducing knowledge-enhanced training during supervised fine-tuning further improves the model. We used a retrieval module to fetch knowledge related to each instruction and explicitly concatenated it into the context before training. For this part, we constructed about 100,000 knowledge-enhanced instruction samples.

Finally, starting from the Ziya2-13B-Base model, which had been pre-trained on 300B tokens, we obtained the SFT model by training for two epochs on about 400,000 instruction samples with an 8k context window.
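The knowledge-enhanced step can be pictured with a minimal sketch. It assumes a toy word-overlap retriever and a made-up prompt layout; the names `retrieve` and `build_knowledge_sample` and the `Reference:` label are hypothetical and are not taken from the Ziya2 training code.

```python
def retrieve(instruction, corpus, top_k=2):
    """Toy retriever (assumption): rank passages by word overlap with the instruction."""
    query = set(instruction.lower().split())
    ranked = sorted(
        corpus,
        key=lambda passage: len(query & set(passage.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_knowledge_sample(instruction, answer, corpus, top_k=2):
    """Concatenate retrieved passages into the context of one training sample."""
    passages = retrieve(instruction, corpus, top_k)
    # Hypothetical layout: retrieved knowledge first, then the instruction.
    knowledge = "\n".join(f"Reference: {p}" for p in passages)
    return {"prompt": f"{knowledge}\n{instruction}", "response": answer}


corpus = [
    "Llama2 was released by Meta in July 2023.",
    "FlashAttention2 speeds up attention computation.",
    "BF16 is a 16-bit floating point format.",
]
sample = build_knowledge_sample("When was Llama2 released?", "In July 2023.", corpus, top_k=1)
print(sample["prompt"])
```

A real pipeline would replace the overlap score with a proper retriever; only the idea of placing retrieved text in the training context comes from the description above.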

Reinforcement Learning from Human Feedback

Starting from the SFT model, Ziya2-13B-Chat was aligned with human preferences on a variety of Q&A, writing, and safety tasks. We collected tens of thousands of high-quality human preference samples ourselves and used Ziya2-13B-Base to train a human preference feedback (reward) model, which reaches at least 72% accuracy on the preference data of every task.

| Task type | Preference recognition accuracy (Acc) |
|---|---|
| Daily QA | 76.8% |
| Knowledge Quizzing | 76.7% |
| Daily Writing | 82.3% |
| Task-based Writing | 72.7% |
| Story Writing | 75.1% |
| Role-playing | 77.6% |
| Safety & Harmlessness | 72.0% |
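Preference accuracy of a reward model is commonly measured as the fraction of comparison pairs in which the human-preferred response receives the higher score. The sketch below assumes that definition; the card does not spell out its exact metric, and `pairwise_accuracy` and the scores are illustrative only.

```python
def pairwise_accuracy(score_pairs):
    """Fraction of (chosen_score, rejected_score) pairs ranked correctly."""
    if not score_pairs:
        raise ValueError("score_pairs must not be empty")
    correct = sum(1 for chosen, rejected in score_pairs if chosen > rejected)
    return correct / len(score_pairs)


# Made-up reward scores for four preference pairs: (preferred, rejected).
scores = [(1.2, 0.3), (0.1, 0.4), (2.0, -1.0), (0.7, 0.2)]
print(f"{pairwise_accuracy(scores):.1%}")  # 3 of 4 pairs ranked correctly
```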

Using the Fengshen-RLHF framework, Ziya2-13B-Chat was trained with reinforcement learning against the human preference feedback model above, so that its outputs align more closely with human preferences while also being safer.

Performance

We conducted human evaluation of Ziya2-13B-Chat on a general-capability test set covering common-sense question answering, writing, mathematical reasoning, natural language understanding, safety, and other tasks. In side-by-side comparison, Ziya2-13B-Chat achieved a 66.5% win rate against Ziya-LLaMA-13B-v1.1 and a 58.4% win rate against the version before RLHF.

| | Better | Worse | Same | Win Rate |
|---|---|---|---|---|
| vs. Ziya-LLaMA-13B-v1.1 | 53.2% | 20.3% | 26.5% | 66.5% |
| vs. w/o RLHF | 37.5% | 20.8% | 41.7% | 58.4% |
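The reported win rates are consistent with counting a tie as half a win, that is, win rate = Better + Same / 2. This formula is inferred from the numbers in the table rather than stated in the card; the sketch below checks it, with `win_rate` as an illustrative helper name.

```python
def win_rate(better, same):
    """Win rate in percent, counting each tie as half a win (assumed formula)."""
    return better + same / 2


# (Better %, Same %, reported Win Rate %) taken from the table above.
rows = {
    "vs. Ziya-LLaMA-13B-v1.1": (53.2, 26.5, 66.5),
    "vs. w/o RLHF": (37.5, 41.7, 58.4),
}
for name, (better, same, reported) in rows.items():
    computed = win_rate(better, same)
    # The reported figures are rounded to one decimal place.
    print(f"{name}: computed {computed:.2f}%, reported {reported}%")
```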

Usage

Ziya2-13B-Chat uses "<human>:" and "<bot>:" as the role prompts for the user and the model, and uses "\n" to separate the turns of different roles. At inference time, prefix each user question with "<human>:" and each model reply with "<bot>:", then join the turns with "\n".

The following example shows how to use the model:

from transformers import AutoTokenizer, AutoModelForCausalLM
import torch

device = torch.device("cuda")

# Example question: "If a phone has an anti-fingerprint tempered-glass screen
# protector applied, does in-screen fingerprint unlock still work?"
messages = [{"role": "user", "content": "手机如果贴膜贴了一张防指纹的钢化膜,那屏幕指纹解锁还有效吗?"}]
user_prefix = "<human>:"
assistant_prefix = "<bot>:"
separator = "\n"

# Prefix each turn with its role prompt, then end with an open "<bot>:" turn.
prompt = []
for item in messages:
    prefix = user_prefix if item["role"] == "user" else assistant_prefix
    prompt.append(f"{prefix}{item['content']}")
prompt.append(assistant_prefix)
prompt = separator.join(prompt)

model_path = "IDEA-CCNL/Ziya2-13B-Chat"
model = AutoModelForCausalLM.from_pretrained(model_path, torch_dtype=torch.bfloat16).to(device)
tokenizer = AutoTokenizer.from_pretrained(model_path, use_fast=False)
input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

generate_ids = model.generate(
    input_ids,
    max_new_tokens=512,
    do_sample=True,
    top_p=0.9,
    temperature=0.85,
    repetition_penalty=1.05,
    # Stop at the "</s>" end-of-sequence token.
    eos_token_id=tokenizer.convert_tokens_to_ids("</s>"),
)
output = tokenizer.batch_decode(generate_ids)[0]
print(output)

The above is a simple question-answering example. To explore other prompts and ways of using the model, download it and experiment.
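For multi-turn conversations the same formatting rule applies to every turn. The helper below is a small sketch of the prompt formatting only (no model is loaded); `build_prompt` is a hypothetical name, and the `role` and `content` keys follow the `messages` convention used in the usage example.

```python
def build_prompt(messages, user_prefix="<human>:", assistant_prefix="<bot>:", separator="\n"):
    """Format a chat history as a Ziya2-13B-Chat prompt ending with an open bot turn."""
    turns = []
    for item in messages:
        prefix = user_prefix if item["role"] == "user" else assistant_prefix
        turns.append(f"{prefix}{item['content']}")
    # A trailing bare "<bot>:" asks the model to generate the next reply.
    turns.append(assistant_prefix)
    return separator.join(turns)


history = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "How are you?"},
]
print(repr(build_prompt(history)))
# '<human>:Hi\n<bot>:Hello!\n<human>:How are you?\n<bot>:'
```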

Citation

If you use our model in your work, please cite our paper:

@article{fengshenbang,
  author  = {Jiaxing Zhang and Ruyi Gan and Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen},
  title   = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},
  journal = {CoRR},
  volume  = {abs/2209.02970},
  year    = {2022}
}

You can also cite our website:

@misc{Fengshenbang-LM,
  title        = {Fengshenbang-LM},
  author       = {IDEA-CCNL},
  year         = {2021},
  howpublished = {\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},
}
