YOLOv5 Model for Face and Hands Detection

Overview

This repository contains a YOLOv5 model trained for detecting faces and hands. The model is based on the YOLOv5 architecture and has been fine-tuned on a custom dataset.

Model Information

- Model Name: yolov5-face-hands

- Framework: PyTorch

- Version: 1.0.0

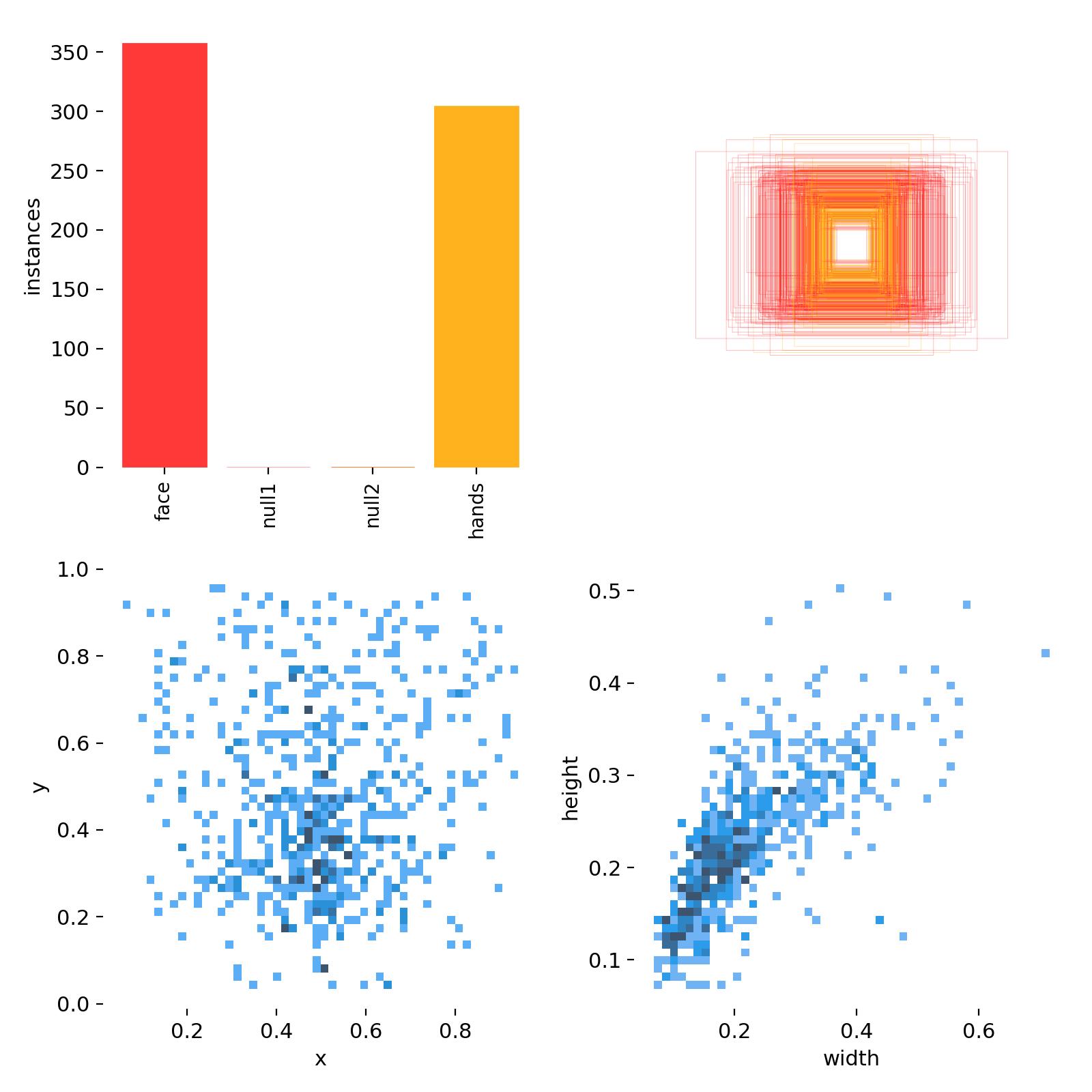

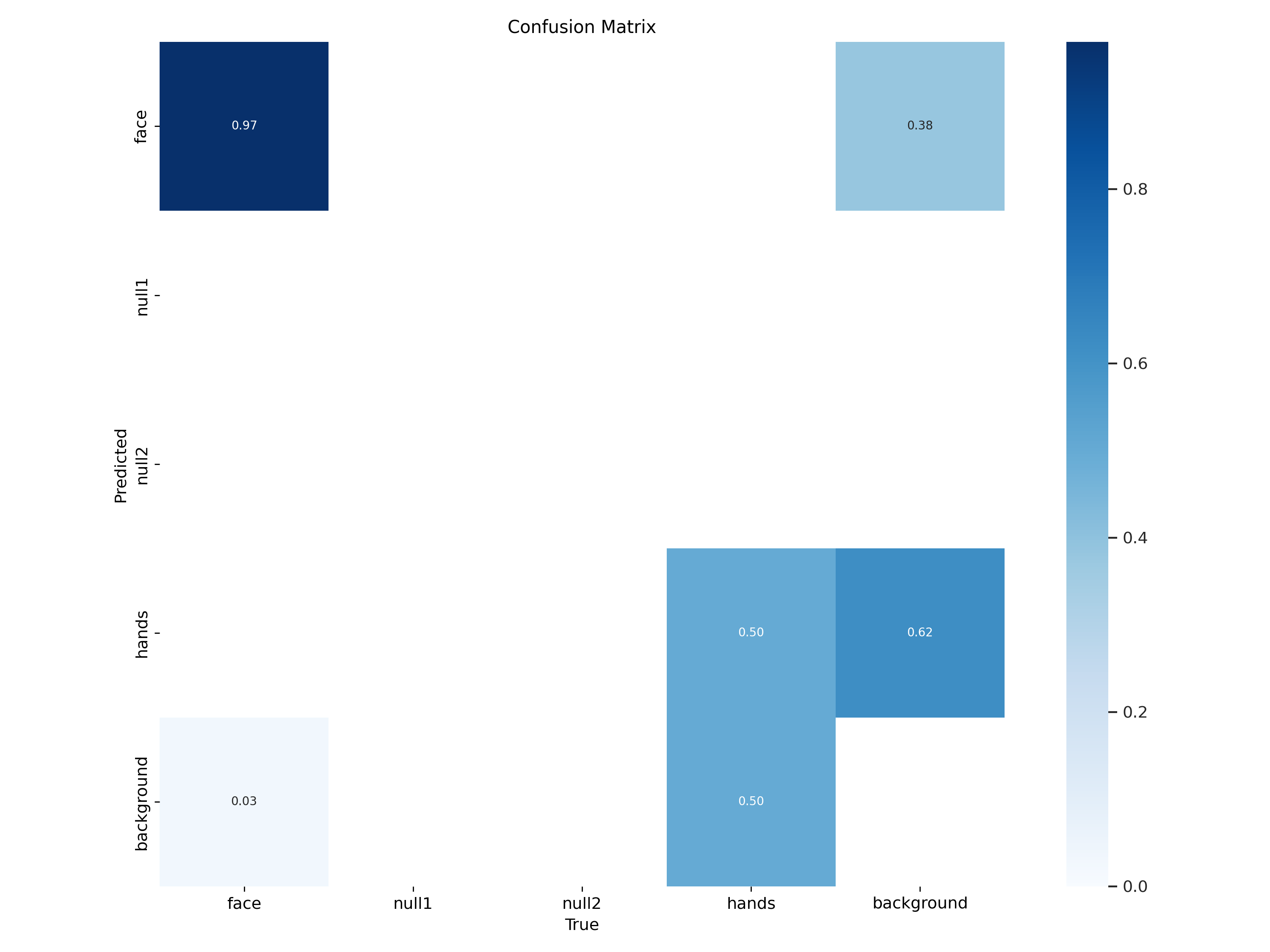

- Class list: ["face", "null1", "null2", "hands"]

- Only class indices 0 (`face`) and 3 (`hands`) are used; indices 1 and 2 (`null1`, `null2`) are unused placeholders.
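Because two of the four class slots are placeholders, downstream code should map indices to names and ignore slots 1 and 2. The sketch below shows one way to do that. `CLASS_NAMES`, `USED_CLASS_IDS`, `class_name` and `used_only` are hypothetical helpers for illustration, not part of YOLOv5 or this repository.

```python
# Hypothetical helpers: class table for yolov5-face-hands (names taken from this card)
CLASS_NAMES = ["face", "null1", "null2", "hands"]

# Only indices 0 ("face") and 3 ("hands") are real classes;
# 1 and 2 are placeholder slots that should be ignored
USED_CLASS_IDS = {0, 3}


def class_name(index: int) -> str:
    """Return the label for a class index, rejecting placeholder or unknown ids."""
    if index not in USED_CLASS_IDS:
        raise ValueError(f"class index {index} is not a used class")
    return CLASS_NAMES[index]


def used_only(class_ids):
    """Keep only the class indices the model actually uses, preserving order."""
    return [i for i in class_ids if i in USED_CLASS_IDS]


print(class_name(0), class_name(3))  # face hands
print(used_only([3, 1, 0, 2, 3]))    # [3, 0, 3]
```

According to the YOLOv5 PyTorch Hub tutorial, the hub wrapper also exposes a `classes` attribute, so setting `model.classes = [0, 3]` after loading should filter out the placeholder classes during non-maximum suppression.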

Usage

Installation

```shell
pip install torch torchvision
pip install yolov5
```

Load Model

```python
import torch

# Load the YOLOv5 model
model = torch.hub.load('ultralytics/yolov5', 'custom', path='path/to/your/model.pt', force_reload=True)

# Set device (GPU or CPU)
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)

# Set model to evaluation mode
model.eval()
```

Inference

```python
import cv2

# Load an image; OpenCV returns BGR, while YOLOv5 expects RGB for NumPy input
image_path = 'path/to/your/image.jpg'
image = cv2.imread(image_path)
if image is None:
    raise FileNotFoundError(f"Could not read image: {image_path}")
image = image[..., ::-1]  # BGR -> RGB

# Run inference
results = model(image)

# Display results (customize based on your needs)
results.show()

# Extract bounding boxes: each row is [x1, y1, x2, y2, confidence, class]
label_mapping = ["face", "null1", "null2", "hands"]
bboxes = results.xyxy[0].cpu().numpy()

for bbox in bboxes:
    label_index = int(bbox[5])
    label = label_mapping[label_index]
    confidence = bbox[4]
    print(f"Detected {label} with confidence {confidence:.2f}")
```
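Each row of `results.xyxy[0]` holds `[x1, y1, x2, y2, confidence, class]` in pixel coordinates. The sketch below turns such rows into named records, drops placeholder classes and low-confidence boxes, and sorts by confidence. It runs on plain lists so it can be tried without a model. The `Detection` class, the `parse_detections` function and the 0.5 default threshold are hypothetical choices, not part of YOLOv5.

```python
from dataclasses import dataclass

# Hypothetical class table mirroring this card: only 0 and 3 are real classes
CLASS_NAMES = ["face", "null1", "null2", "hands"]
USED_CLASS_IDS = {0, 3}


@dataclass
class Detection:
    label: str
    confidence: float
    box: tuple  # (x1, y1, x2, y2) in pixels

    @property
    def area(self) -> float:
        """Box area in square pixels (zero for degenerate boxes)."""
        x1, y1, x2, y2 = self.box
        return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def parse_detections(rows, min_conf=0.5):
    """Convert [x1, y1, x2, y2, conf, cls] rows to Detections, best first.

    Rows below min_conf or with placeholder classes (1, 2) are dropped.
    """
    out = []
    for x1, y1, x2, y2, conf, cls in rows:
        cls = int(cls)
        if conf < min_conf or cls not in USED_CLASS_IDS:
            continue
        out.append(Detection(CLASS_NAMES[cls], float(conf),
                             (float(x1), float(y1), float(x2), float(y2))))
    return sorted(out, key=lambda d: d.confidence, reverse=True)


# Example rows in the same layout as results.xyxy[0]
rows = [
    [10, 20, 110, 140, 0.91, 0],
    [200, 50, 260, 120, 0.42, 3],
    [300, 60, 380, 150, 0.77, 3],
]
for det in parse_detections(rows):
    print(det.label, round(det.confidence, 2), det.area)
```

To apply it to real output, pass `results.xyxy[0].cpu().numpy().tolist()` from the inference step. Filtering in Python keeps the threshold explicit; the hub wrapper's `model.conf` attribute is an alternative that applies the threshold inside the model.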

License

This model is released under the MIT License. See LICENSE for more details.

Citation

If you find this model useful, please consider citing it:

```bibtex
@misc{jati2023customyolov5,
  author = {Damar Jati},
  title = {Custom YOLOv5 Model for Face and Hands Detection},
  year = {2023},
  orcid = {\url{https://orcid.org/0009-0002-0758-2712}},
  publisher = {Hugging Face Model Hub},
  howpublished = {\url{https://huggingface.co/DamarJati/face-hand-YOLOv5}}
}
```