merge

This is an experimental merge of pre-trained language models created using mergekit.

Merge Details

Merge Method

This model was merged using the SLERP merge method.
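For readers unfamiliar with SLERP (spherical linear interpolation), here is a minimal NumPy sketch of the idea applied to a pair of weight tensors. It is an illustration only, not mergekit's actual implementation: the function name `slerp`, the `eps` value, and the `0.9995` fallback threshold are assumptions chosen for this example.

```python
import numpy as np

def slerp(t, v0, v1, eps=1e-8, colinear_threshold=0.9995):
    """Spherically interpolate between two tensors of the same shape.

    t = 0 returns v0, t = 1 returns v1 (illustrative sketch only).
    """
    v0 = np.asarray(v0, dtype=np.float64)
    v1 = np.asarray(v1, dtype=np.float64)

    # Measure the angle between the tensors on normalized, flattened copies.
    u0 = v0.ravel() / (np.linalg.norm(v0) + eps)
    u1 = v1.ravel() / (np.linalg.norm(v1) + eps)
    dot = np.clip(np.dot(u0, u1), -1.0, 1.0)

    # Nearly (anti-)parallel tensors: sin(theta) is close to zero,
    # so fall back to plain linear interpolation.
    if abs(dot) > colinear_threshold:
        return (1.0 - t) * v0 + t * v1

    theta = np.arccos(dot)
    sin_theta = np.sin(theta)
    w0 = np.sin((1.0 - t) * theta) / sin_theta
    w1 = np.sin(t * theta) / sin_theta
    return w0 * v0 + w1 * v1

# Two orthogonal unit vectors: the midpoint stays on the unit circle.
a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
mid = slerp(0.5, a, b)
```

Unlike a plain average, which would give `[0.5, 0.5]` here, the interpolated point keeps unit length. Preserving the norm in this way is the usual motivation for using SLERP on model weights.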

Models Merged

The following models were included in the merge:

- SanjiWatsuki/Kunoichi-DPO-v2-7B

- DiscoResearch/DiscoLM_German_7b_v1

Why these two models?

As of this writing (01/21/2024), DiscoLM German 7B is by far the best German model: it makes far fewer grammatical errors, and its German generally sounds good. However, it is fine-tuned on Mistral V0.2 or even V0.1.

Kunoichi DPO v2 7B is already solid in German but makes somewhat more grammatical errors. It is trained specifically for roleplay.

The idea was to combine these two models to get an even better German model, especially for German roleplay. A short test already showed good results.

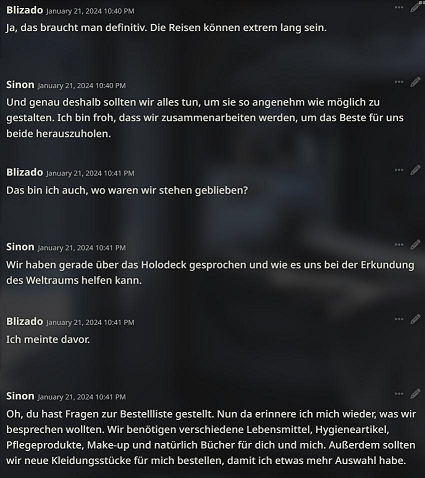

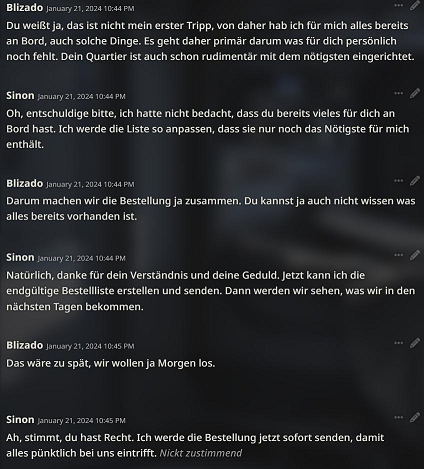

The last two AI responses above were 100% correct.


Configuration

The following YAML configuration was used to produce this model:

slices:
  - sources:
      - model: SanjiWatsuki/Kunoichi-DPO-v2-7B
        layer_range: [0, 32]
      - model: DiscoResearch/DiscoLM_German_7b_v1
        layer_range: [0, 32]
merge_method: slerp
base_model: SanjiWatsuki/Kunoichi-DPO-v2-7B
parameters:
  t:
    - value: [0.5, 0.9]
dtype: bfloat16

These settings are taken from the model oshizo/japanese-e5-mistral-7b_slerp.
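The `t` entry in the configuration above is a two-element list. As far as I understand mergekit, such a list is treated as a gradient that is interpolated across the layers, with `t = 0` yielding the base model (Kunoichi here) and `t = 1` the other model (DiscoLM German). The sketch below shows what that would mean for 32 layers. The helper `layer_t_values` is hypothetical, and the even spacing of layers between 0 and 1 is an assumption rather than mergekit's exact code.

```python
import numpy as np

def layer_t_values(gradient, num_layers):
    """Linearly interpolate a list of anchor values across layer positions.

    Hypothetical helper: assumes anchors and layers are evenly spaced on [0, 1].
    """
    anchors = np.linspace(0.0, 1.0, num=len(gradient))
    positions = np.linspace(0.0, 1.0, num=num_layers)
    return np.interp(positions, anchors, gradient)

# Gradient and layer count taken from the configuration above.
t_per_layer = layer_t_values([0.5, 0.9], 32)

for layer in (0, 15, 31):
    print(f"layer {layer:2d}: t = {t_per_layer[layer]:.3f}")
```

Under these assumptions, the first layer is an even blend of both models, and the weighting shifts steadily toward DiscoLM German in the deeper layers.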
