Spaces:

Runtime error

Runtime error

Commit

•

f0533a5

1

Parent(s):

a3f1b31

Upload 33 files

Browse files- LICENSE +21 -0

- README.md +165 -12

- assets/motivation.jpg +0 -0

- assets/the_great_wall.jpg +0 -0

- assets/user_study.jpg +0 -0

- assets/vbench.jpg +0 -0

- diffusion_schedulers/__init__.py +2 -0

- diffusion_schedulers/scheduling_cosine_ddpm.py +137 -0

- diffusion_schedulers/scheduling_flow_matching.py +298 -0

- pyramid_dit/__init__.py +3 -0

- pyramid_dit/modeling_embedding.py +390 -0

- pyramid_dit/modeling_mmdit_block.py +672 -0

- pyramid_dit/modeling_normalization.py +179 -0

- pyramid_dit/modeling_pyramid_mmdit.py +487 -0

- pyramid_dit/modeling_text_encoder.py +140 -0

- pyramid_dit/pyramid_dit_for_video_gen_pipeline.py +672 -0

- requirements.txt +32 -0

- trainer_misc/__init__.py +25 -0

- trainer_misc/communicate.py +58 -0

- trainer_misc/sp_utils.py +98 -0

- trainer_misc/utils.py +382 -0

- utils.py +457 -0

- video_generation_demo.ipynb +181 -0

- video_vae/__init__.py +2 -0

- video_vae/context_parallel_ops.py +172 -0

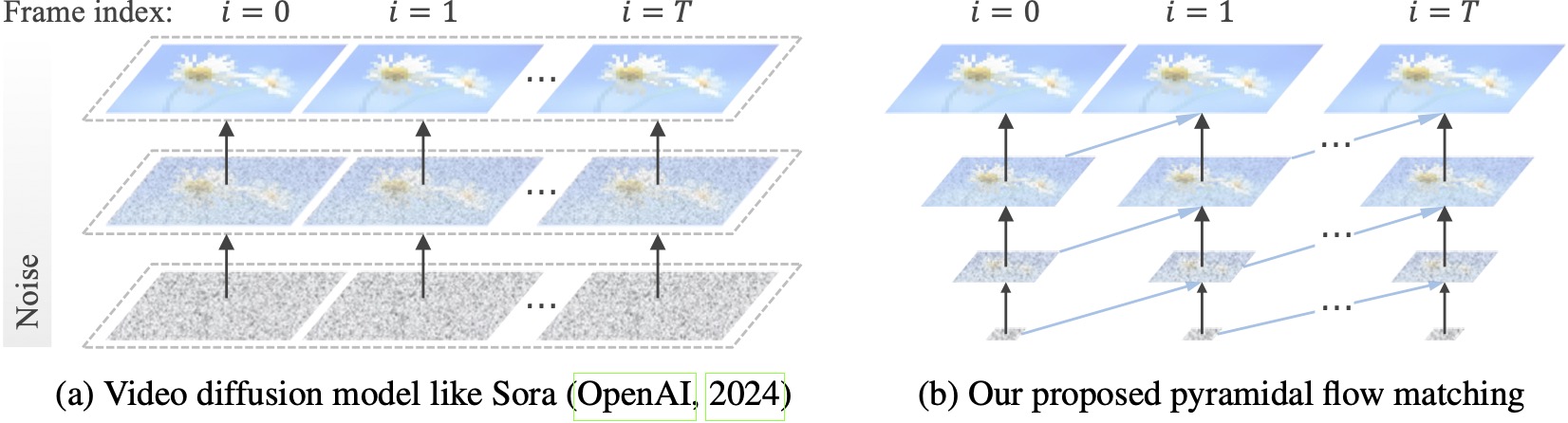

- video_vae/modeling_block.py +760 -0

- video_vae/modeling_causal_conv.py +139 -0

- video_vae/modeling_causal_vae.py +625 -0

- video_vae/modeling_discriminator.py +122 -0

- video_vae/modeling_enc_dec.py +422 -0

- video_vae/modeling_loss.py +192 -0

- video_vae/modeling_lpips.py +120 -0

- video_vae/modeling_resnet.py +729 -0

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 Yang Jin

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

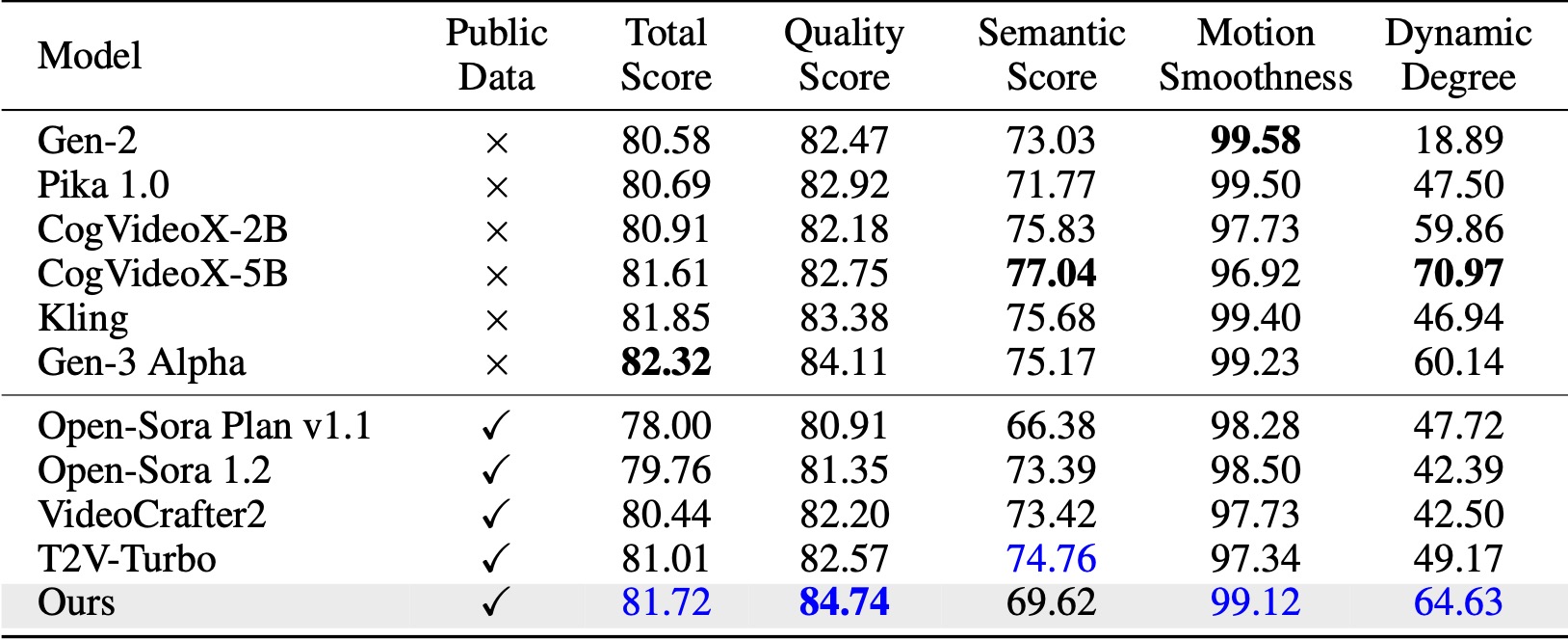

+

in the Software without restriction, including without limitation the rights

|

| 8 |

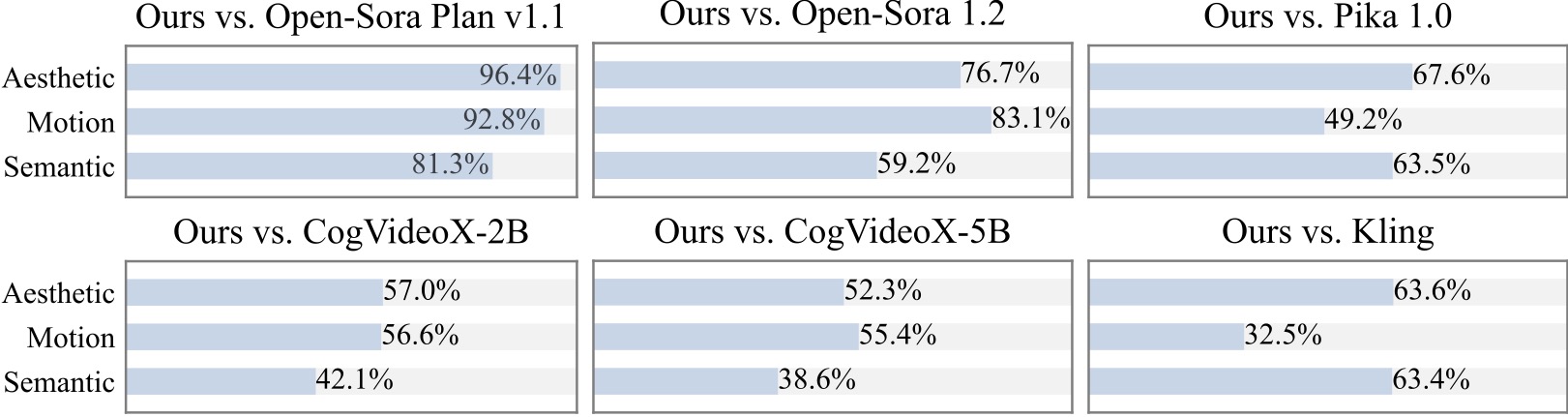

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,12 +1,165 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<div align="center">

|

| 2 |

+

|

| 3 |

+

# ⚡️Pyramid Flow⚡️

|

| 4 |

+

|

| 5 |

+

[[Paper]](https://arxiv.org/abs/2410.05954) [[Project Page ✨]](https://pyramid-flow.github.io) [[Model 🤗]](https://huggingface.co/rain1011/pyramid-flow-sd3)

|

| 6 |

+

|

| 7 |

+

</div>

|

| 8 |

+

|

| 9 |

+

This is the official repository for Pyramid Flow, a training-efficient **Autoregressive Video Generation** method based on **Flow Matching**. By training only on **open-source datasets**, it can generate high-quality 10-second videos at 768p resolution and 24 FPS, and naturally supports image-to-video generation.

|

| 10 |

+

|

| 11 |

+

<table class="center" border="0" style="width: 100%; text-align: left;">

|

| 12 |

+

<tr>

|

| 13 |

+

<th>10s, 768p, 24fps</th>

|

| 14 |

+

<th>5s, 768p, 24fps</th>

|

| 15 |

+

<th>Image-to-video</th>

|

| 16 |

+

</tr>

|

| 17 |

+

<tr>

|

| 18 |

+

<td><video src="https://github.com/user-attachments/assets/9935da83-ae56-4672-8747-0f46e90f7b2b" autoplay muted loop playsinline></video></td>

|

| 19 |

+

<td><video src="https://github.com/user-attachments/assets/3412848b-64db-4d9e-8dbf-11403f6d02c5" autoplay muted loop playsinline></video></td>

|

| 20 |

+

<td><video src="https://github.com/user-attachments/assets/3bd7251f-7b2c-4bee-951d-656fdb45f427" autoplay muted loop playsinline></video></td>

|

| 21 |

+

</tr>

|

| 22 |

+

</table>

|

| 23 |

+

|

| 24 |

+

## News

|

| 25 |

+

|

| 26 |

+

* `COMING SOON` ⚡️⚡️⚡️ Training code for both the Video VAE and DiT; New model checkpoints trained from scratch.

|

| 27 |

+

|

| 28 |

+

> We are training Pyramid Flow from scratch to fix human structure issues related to the currently adopted SD3 initialization and hope to release it in the next few days.

|

| 29 |

+

* `2024.10.10` 🚀🚀🚀 We release the [technical report](https://arxiv.org/abs/2410.05954), [project page](https://pyramid-flow.github.io) and [model checkpoint](https://huggingface.co/rain1011/pyramid-flow-sd3) of Pyramid Flow.

|

| 30 |

+

|

| 31 |

+

## Introduction

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

Existing video diffusion models operate at full resolution, spending a lot of computation on very noisy latents. By contrast, our method harnesses the flexibility of flow matching ([Lipman et al., 2023](https://openreview.net/forum?id=PqvMRDCJT9t); [Liu et al., 2023](https://openreview.net/forum?id=XVjTT1nw5z); [Albergo & Vanden-Eijnden, 2023](https://openreview.net/forum?id=li7qeBbCR1t)) to interpolate between latents of different resolutions and noise levels, allowing for simultaneous generation and decompression of visual content with better computational efficiency. The entire framework is end-to-end optimized with a single DiT ([Peebles & Xie, 2023](http://openaccess.thecvf.com/content/ICCV2023/html/Peebles_Scalable_Diffusion_Models_with_Transformers_ICCV_2023_paper.html)), generating high-quality 10-second videos at 768p resolution and 24 FPS within 20.7k A100 GPU training hours.

|

| 36 |

+

|

| 37 |

+

## Usage

|

| 38 |

+

|

| 39 |

+

You can directly download the model from [Huggingface](https://huggingface.co/rain1011/pyramid-flow-sd3). We provide both model checkpoints for 768p and 384p video generation. The 384p checkpoint supports 5-second video generation at 24FPS, while the 768p checkpoint supports up to 10-second video generation at 24FPS.

|

| 40 |

+

|

| 41 |

+

```python

|

| 42 |

+

from huggingface_hub import snapshot_download

|

| 43 |

+

|

| 44 |

+

model_path = 'PATH' # The local directory to save downloaded checkpoint

|

| 45 |

+

snapshot_download("rain1011/pyramid-flow-sd3", local_dir=model_path, local_dir_use_symlinks=False, repo_type='model')

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

To use our model, please follow the inference code in `video_generation_demo.ipynb` at [this link](https://github.com/jy0205/Pyramid-Flow/blob/main/video_generation_demo.ipynb). We further simplify it into the following two-step procedure. First, load the downloaded model:

|

| 50 |

+

|

| 51 |

+

```python

|

| 52 |

+

import torch

|

| 53 |

+

from PIL import Image

|

| 54 |

+

from pyramid_dit import PyramidDiTForVideoGeneration

|

| 55 |

+

from diffusers.utils import load_image, export_to_video

|

| 56 |

+

|

| 57 |

+

torch.cuda.set_device(0)

|

| 58 |

+

model_dtype, torch_dtype = 'bf16', torch.bfloat16 # Use bf16, fp16 or fp32

|

| 59 |

+

|

| 60 |

+

model = PyramidDiTForVideoGeneration(

|

| 61 |

+

'PATH', # The downloaded checkpoint dir

|

| 62 |

+

model_dtype,

|

| 63 |

+

model_variant='diffusion_transformer_768p', # 'diffusion_transformer_384p'

|

| 64 |

+

)

|

| 65 |

+

|

| 66 |

+

model.vae.to("cuda")

|

| 67 |

+

model.dit.to("cuda")

|

| 68 |

+

model.text_encoder.to("cuda")

|

| 69 |

+

model.vae.enable_tiling()

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

Then, you can try text-to-video generation on your own prompts:

|

| 73 |

+

|

| 74 |

+

```python

|

| 75 |

+

prompt = "A movie trailer featuring the adventures of the 30 year old space man wearing a red wool knitted motorcycle helmet, blue sky, salt desert, cinematic style, shot on 35mm film, vivid colors"

|

| 76 |

+

|

| 77 |

+

with torch.no_grad(), torch.cuda.amp.autocast(enabled=True, dtype=torch_dtype):

|

| 78 |

+

frames = model.generate(

|

| 79 |

+

prompt=prompt,

|

| 80 |

+

num_inference_steps=[20, 20, 20],

|

| 81 |

+

video_num_inference_steps=[10, 10, 10],

|

| 82 |

+

height=768,

|

| 83 |

+

width=1280,

|

| 84 |

+

temp=16, # temp=16: 5s, temp=31: 10s

|

| 85 |

+

guidance_scale=9.0, # The guidance for the first frame

|

| 86 |

+

video_guidance_scale=5.0, # The guidance for the other video latent

|

| 87 |

+

output_type="pil",

|

| 88 |

+

save_memory=True, # If you have enough GPU memory, set it to `False` to improve vae decoding speed

|

| 89 |

+

)

|

| 90 |

+

|

| 91 |

+

export_to_video(frames, "./text_to_video_sample.mp4", fps=24)

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

As an autoregressive model, our model also supports (text conditioned) image-to-video generation:

|

| 95 |

+

|

| 96 |

+

```python

|

| 97 |

+

image = Image.open('assets/the_great_wall.jpg').convert("RGB").resize((1280, 768))

|

| 98 |

+

prompt = "FPV flying over the Great Wall"

|

| 99 |

+

|

| 100 |

+

with torch.no_grad(), torch.cuda.amp.autocast(enabled=True, dtype=torch_dtype):

|

| 101 |

+

frames = model.generate_i2v(

|

| 102 |

+

prompt=prompt,

|

| 103 |

+

input_image=image,

|

| 104 |

+

num_inference_steps=[10, 10, 10],

|

| 105 |

+

temp=16,

|

| 106 |

+

video_guidance_scale=4.0,

|

| 107 |

+

output_type="pil",

|

| 108 |

+

save_memory=True, # If you have enough GPU memory, set it to `False` to improve vae decoding speed

|

| 109 |

+

)

|

| 110 |

+

|

| 111 |

+

export_to_video(frames, "./image_to_video_sample.mp4", fps=24)

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

Usage tips:

|

| 115 |

+

|

| 116 |

+

* The `guidance_scale` parameter controls the visual quality. We suggest using a guidance within [7, 9] for the 768p checkpoint during text-to-video generation, and 7 for the 384p checkpoint.

|

| 117 |

+

* The `video_guidance_scale` parameter controls the motion. A larger value increases the dynamic degree and mitigates the autoregressive generation degradation, while a smaller value stabilizes the video.

|

| 118 |

+

* For 10-second video generation, we recommend using a guidance scale of 7 and a video guidance scale of 5.

|

| 119 |

+

|

| 120 |

+

## Gallery

|

| 121 |

+

|

| 122 |

+

The following video examples are generated at 5s, 768p, 24fps. For more results, please visit our [project page](https://pyramid-flow.github.io).

|

| 123 |

+

|

| 124 |

+

<table class="center" border="0" style="width: 100%; text-align: left;">

|

| 125 |

+

<tr>

|

| 126 |

+

<td><video src="https://github.com/user-attachments/assets/5b44a57e-fa08-4554-84a2-2c7a99f2b343" autoplay muted loop playsinline></video></td>

|

| 127 |

+

<td><video src="https://github.com/user-attachments/assets/5afd5970-de72-40e2-900d-a20d18308e8e" autoplay muted loop playsinline></video></td>

|

| 128 |

+

</tr>

|

| 129 |

+

<tr>

|

| 130 |

+

<td><video src="https://github.com/user-attachments/assets/1d44daf8-017f-40e9-bf18-1e19c0a8983b" autoplay muted loop playsinline></video></td>

|

| 131 |

+

<td><video src="https://github.com/user-attachments/assets/7f5dd901-b7d7-48cc-b67a-3c5f9e1546d2" autoplay muted loop playsinline></video></td>

|

| 132 |

+

</tr>

|

| 133 |

+

</table>

|

| 134 |

+

|

| 135 |

+

## Comparison

|

| 136 |

+

|

| 137 |

+

On VBench ([Huang et al., 2024](https://huggingface.co/spaces/Vchitect/VBench_Leaderboard)), our method surpasses all the compared open-source baselines. Even with only public video data, it achieves comparable performance to commercial models like Kling ([Kuaishou, 2024](https://kling.kuaishou.com/en)) and Gen-3 Alpha ([Runway, 2024](https://runwayml.com/research/introducing-gen-3-alpha)), especially in the quality score (84.74 vs. 84.11 of Gen-3) and motion smoothness.

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

We conduct an additional user study with 20+ participants. As can be seen, our method is preferred over open-source models such as [Open-Sora](https://github.com/hpcaitech/Open-Sora) and [CogVideoX-2B](https://github.com/THUDM/CogVideo) especially in terms of motion smoothness.

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

## Acknowledgement

|

| 146 |

+

|

| 147 |

+

We are grateful for the following awesome projects when implementing Pyramid Flow:

|

| 148 |

+

|

| 149 |

+

* [SD3 Medium](https://huggingface.co/stabilityai/stable-diffusion-3-medium) and [Flux 1.0](https://huggingface.co/black-forest-labs/FLUX.1-dev): State-of-the-art image generation models based on flow matching.

|

| 150 |

+

* [Diffusion Forcing](https://boyuan.space/diffusion-forcing) and [GameNGen](https://gamengen.github.io): Next-token prediction meets full-sequence diffusion.

|

| 151 |

+

* [WebVid-10M](https://github.com/m-bain/webvid), [OpenVid-1M](https://github.com/NJU-PCALab/OpenVid-1M) and [Open-Sora Plan](https://github.com/PKU-YuanGroup/Open-Sora-Plan): Large-scale datasets for text-to-video generation.

|

| 152 |

+

* [CogVideoX](https://github.com/THUDM/CogVideo): An open-source text-to-video generation model that shares many training details.

|

| 153 |

+

* [Video-LLaMA2](https://github.com/DAMO-NLP-SG/VideoLLaMA2): An open-source video LLM for our video recaptioning.

|

| 154 |

+

|

| 155 |

+

## Citation

|

| 156 |

+

|

| 157 |

+

Consider giving this repository a star and cite Pyramid Flow in your publications if it helps your research.

|

| 158 |

+

```

|

| 159 |

+

@article{jin2024pyramidal,

|

| 160 |

+

title={Pyramidal Flow Matching for Efficient Video Generative Modeling},

|

| 161 |

+

author={Jin, Yang and Sun, Zhicheng and Li, Ningyuan and Xu, Kun and Xu, Kun and Jiang, Hao and Zhuang, Nan and Huang, Quzhe and Song, Yang and Mu, Yadong and Lin, Zhouchen},

|

| 162 |

+

jounal={arXiv preprint arXiv:2410.05954},

|

| 163 |

+

year={2024}

|

| 164 |

+

}

|

| 165 |

+

```

|

assets/motivation.jpg

ADDED

|

assets/the_great_wall.jpg

ADDED

|

assets/user_study.jpg

ADDED

|

assets/vbench.jpg

ADDED

|

diffusion_schedulers/__init__.py

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .scheduling_cosine_ddpm import DDPMCosineScheduler

|

| 2 |

+

from .scheduling_flow_matching import PyramidFlowMatchEulerDiscreteScheduler

|

diffusion_schedulers/scheduling_cosine_ddpm.py

ADDED

|

@@ -0,0 +1,137 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import math

|

| 2 |

+

from dataclasses import dataclass

|

| 3 |

+

from typing import List, Optional, Tuple, Union

|

| 4 |

+

|

| 5 |

+

import torch

|

| 6 |

+

|

| 7 |

+

from diffusers.configuration_utils import ConfigMixin, register_to_config

|

| 8 |

+

from diffusers.utils import BaseOutput

|

| 9 |

+

from diffusers.utils.torch_utils import randn_tensor

|

| 10 |

+

from diffusers.schedulers.scheduling_utils import SchedulerMixin

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

@dataclass

|

| 14 |

+

class DDPMSchedulerOutput(BaseOutput):

|

| 15 |

+

"""

|

| 16 |

+

Output class for the scheduler's step function output.

|

| 17 |

+

|

| 18 |

+

Args:

|

| 19 |

+

prev_sample (`torch.Tensor` of shape `(batch_size, num_channels, height, width)` for images):

|

| 20 |

+

Computed sample (x_{t-1}) of previous timestep. `prev_sample` should be used as next model input in the

|

| 21 |

+

denoising loop.

|

| 22 |

+

"""

|

| 23 |

+

|

| 24 |

+

prev_sample: torch.Tensor

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

class DDPMCosineScheduler(SchedulerMixin, ConfigMixin):

|

| 28 |

+

|

| 29 |

+

@register_to_config

|

| 30 |

+

def __init__(

|

| 31 |

+

self,

|

| 32 |

+

scaler: float = 1.0,

|

| 33 |

+

s: float = 0.008,

|

| 34 |

+

):

|

| 35 |

+

self.scaler = scaler

|

| 36 |

+

self.s = torch.tensor([s])

|

| 37 |

+

self._init_alpha_cumprod = torch.cos(self.s / (1 + self.s) * torch.pi * 0.5) ** 2

|

| 38 |

+

|

| 39 |

+

# standard deviation of the initial noise distribution

|

| 40 |

+

self.init_noise_sigma = 1.0

|

| 41 |

+

|

| 42 |

+

def _alpha_cumprod(self, t, device):

|

| 43 |

+

if self.scaler > 1:

|

| 44 |

+

t = 1 - (1 - t) ** self.scaler

|

| 45 |

+

elif self.scaler < 1:

|

| 46 |

+

t = t**self.scaler

|

| 47 |

+

alpha_cumprod = torch.cos(

|

| 48 |

+

(t + self.s.to(device)) / (1 + self.s.to(device)) * torch.pi * 0.5

|

| 49 |

+

) ** 2 / self._init_alpha_cumprod.to(device)

|

| 50 |

+

return alpha_cumprod.clamp(0.0001, 0.9999)

|

| 51 |

+

|

| 52 |

+

def scale_model_input(self, sample: torch.Tensor, timestep: Optional[int] = None) -> torch.Tensor:

|

| 53 |

+

"""

|

| 54 |

+

Ensures interchangeability with schedulers that need to scale the denoising model input depending on the

|

| 55 |

+

current timestep.

|

| 56 |

+

|

| 57 |

+

Args:

|

| 58 |

+

sample (`torch.Tensor`): input sample

|

| 59 |

+

timestep (`int`, optional): current timestep

|

| 60 |

+

|

| 61 |

+

Returns:

|

| 62 |

+

`torch.Tensor`: scaled input sample

|

| 63 |

+

"""

|

| 64 |

+

return sample

|

| 65 |

+

|

| 66 |

+

def set_timesteps(

|

| 67 |

+

self,

|

| 68 |

+

num_inference_steps: int = None,

|

| 69 |

+

timesteps: Optional[List[int]] = None,

|

| 70 |

+

device: Union[str, torch.device] = None,

|

| 71 |

+

):

|

| 72 |

+

"""

|

| 73 |

+

Sets the discrete timesteps used for the diffusion chain. Supporting function to be run before inference.

|

| 74 |

+

|

| 75 |

+

Args:

|

| 76 |

+

num_inference_steps (`Dict[float, int]`):

|

| 77 |

+

the number of diffusion steps used when generating samples with a pre-trained model. If passed, then

|

| 78 |

+

`timesteps` must be `None`.

|

| 79 |

+

device (`str` or `torch.device`, optional):

|

| 80 |

+

the device to which the timesteps are moved to. {2 / 3: 20, 0.0: 10}

|

| 81 |

+

"""

|

| 82 |

+

if timesteps is None:

|

| 83 |

+

timesteps = torch.linspace(1.0, 0.0, num_inference_steps + 1, device=device)

|

| 84 |

+

if not isinstance(timesteps, torch.Tensor):

|

| 85 |

+

timesteps = torch.Tensor(timesteps).to(device)

|

| 86 |

+

self.timesteps = timesteps

|

| 87 |

+

|

| 88 |

+

def step(

|

| 89 |

+

self,

|

| 90 |

+

model_output: torch.Tensor,

|

| 91 |

+

timestep: int,

|

| 92 |

+

sample: torch.Tensor,

|

| 93 |

+

generator=None,

|

| 94 |

+

return_dict: bool = True,

|

| 95 |

+

) -> Union[DDPMSchedulerOutput, Tuple]:

|

| 96 |

+

dtype = model_output.dtype

|

| 97 |

+

device = model_output.device

|

| 98 |

+

t = timestep

|

| 99 |

+

|

| 100 |

+

prev_t = self.previous_timestep(t)

|

| 101 |

+

|

| 102 |

+

alpha_cumprod = self._alpha_cumprod(t, device).view(t.size(0), *[1 for _ in sample.shape[1:]])

|

| 103 |

+

alpha_cumprod_prev = self._alpha_cumprod(prev_t, device).view(prev_t.size(0), *[1 for _ in sample.shape[1:]])

|

| 104 |

+

alpha = alpha_cumprod / alpha_cumprod_prev

|

| 105 |

+

|

| 106 |

+

mu = (1.0 / alpha).sqrt() * (sample - (1 - alpha) * model_output / (1 - alpha_cumprod).sqrt())

|

| 107 |

+

|

| 108 |

+

std_noise = randn_tensor(mu.shape, generator=generator, device=model_output.device, dtype=model_output.dtype)

|

| 109 |

+

std = ((1 - alpha) * (1.0 - alpha_cumprod_prev) / (1.0 - alpha_cumprod)).sqrt() * std_noise

|

| 110 |

+

pred = mu + std * (prev_t != 0).float().view(prev_t.size(0), *[1 for _ in sample.shape[1:]])

|

| 111 |

+

|

| 112 |

+

if not return_dict:

|

| 113 |

+

return (pred.to(dtype),)

|

| 114 |

+

|

| 115 |

+

return DDPMSchedulerOutput(prev_sample=pred.to(dtype))

|

| 116 |

+

|

| 117 |

+

def add_noise(

|

| 118 |

+

self,

|

| 119 |

+

original_samples: torch.Tensor,

|

| 120 |

+

noise: torch.Tensor,

|

| 121 |

+

timesteps: torch.Tensor,

|

| 122 |

+

) -> torch.Tensor:

|

| 123 |

+

device = original_samples.device

|

| 124 |

+

dtype = original_samples.dtype

|

| 125 |

+

alpha_cumprod = self._alpha_cumprod(timesteps, device=device).view(

|

| 126 |

+

timesteps.size(0), *[1 for _ in original_samples.shape[1:]]

|

| 127 |

+

)

|

| 128 |

+

noisy_samples = alpha_cumprod.sqrt() * original_samples + (1 - alpha_cumprod).sqrt() * noise

|

| 129 |

+

return noisy_samples.to(dtype=dtype)

|

| 130 |

+

|

| 131 |

+

def __len__(self):

|

| 132 |

+

return self.config.num_train_timesteps

|

| 133 |

+

|

| 134 |

+

def previous_timestep(self, timestep):

|

| 135 |

+

index = (self.timesteps - timestep[0]).abs().argmin().item()

|

| 136 |

+

prev_t = self.timesteps[index + 1][None].expand(timestep.shape[0])

|

| 137 |

+

return prev_t

|

diffusion_schedulers/scheduling_flow_matching.py

ADDED

|

@@ -0,0 +1,298 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from dataclasses import dataclass

|

| 2 |

+

from typing import Optional, Tuple, Union, List

|

| 3 |

+

import math

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

|

| 7 |

+

from diffusers.configuration_utils import ConfigMixin, register_to_config

|

| 8 |

+

from diffusers.utils import BaseOutput, logging

|

| 9 |

+

from diffusers.utils.torch_utils import randn_tensor

|

| 10 |

+

from diffusers.schedulers.scheduling_utils import SchedulerMixin

|

| 11 |

+

from IPython import embed

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

@dataclass

|

| 15 |

+

class FlowMatchEulerDiscreteSchedulerOutput(BaseOutput):

|

| 16 |

+

"""

|

| 17 |

+

Output class for the scheduler's `step` function output.

|

| 18 |

+

|

| 19 |

+

Args:

|

| 20 |

+

prev_sample (`torch.FloatTensor` of shape `(batch_size, num_channels, height, width)` for images):

|

| 21 |

+

Computed sample `(x_{t-1})` of previous timestep. `prev_sample` should be used as next model input in the

|

| 22 |

+

denoising loop.

|

| 23 |

+

"""

|

| 24 |

+

|

| 25 |

+

prev_sample: torch.FloatTensor

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

class PyramidFlowMatchEulerDiscreteScheduler(SchedulerMixin, ConfigMixin):

|

| 29 |

+

"""

|

| 30 |

+

Euler scheduler.

|

| 31 |

+

|

| 32 |

+

This model inherits from [`SchedulerMixin`] and [`ConfigMixin`]. Check the superclass documentation for the generic

|

| 33 |

+

methods the library implements for all schedulers such as loading and saving.

|

| 34 |

+

|

| 35 |

+

Args:

|

| 36 |

+

num_train_timesteps (`int`, defaults to 1000):

|

| 37 |

+

The number of diffusion steps to train the model.

|

| 38 |

+

timestep_spacing (`str`, defaults to `"linspace"`):

|

| 39 |

+

The way the timesteps should be scaled. Refer to Table 2 of the [Common Diffusion Noise Schedules and

|

| 40 |

+

Sample Steps are Flawed](https://huggingface.co/papers/2305.08891) for more information.

|

| 41 |

+

shift (`float`, defaults to 1.0):

|

| 42 |

+

The shift value for the timestep schedule.

|

| 43 |

+

"""

|

| 44 |

+

|

| 45 |

+

_compatibles = []

|

| 46 |

+

order = 1

|

| 47 |

+

|

| 48 |

+

@register_to_config

|

| 49 |

+

def __init__(

|

| 50 |

+

self,

|

| 51 |

+

num_train_timesteps: int = 1000,

|

| 52 |

+

shift: float = 1.0, # Following Stable diffusion 3,

|

| 53 |

+

stages: int = 3,

|

| 54 |

+

stage_range: List = [0, 1/3, 2/3, 1],

|

| 55 |

+

gamma: float = 1/3,

|

| 56 |

+

):

|

| 57 |

+

|

| 58 |

+

self.timestep_ratios = {} # The timestep ratio for each stage

|

| 59 |

+

self.timesteps_per_stage = {} # The detailed timesteps per stage

|

| 60 |

+

self.sigmas_per_stage = {}

|

| 61 |

+

self.start_sigmas = {}

|

| 62 |

+

self.end_sigmas = {}

|

| 63 |

+

self.ori_start_sigmas = {}

|

| 64 |

+

|

| 65 |

+

# self.init_sigmas()

|

| 66 |

+

self.init_sigmas_for_each_stage()

|

| 67 |

+

self.sigma_min = self.sigmas[-1].item()

|

| 68 |

+

self.sigma_max = self.sigmas[0].item()

|

| 69 |

+

self.gamma = gamma

|

| 70 |

+

|

| 71 |

+

def init_sigmas(self):

|

| 72 |

+

"""

|

| 73 |

+

initialize the global timesteps and sigmas

|

| 74 |

+

"""

|

| 75 |

+

num_train_timesteps = self.config.num_train_timesteps

|

| 76 |

+

shift = self.config.shift

|

| 77 |

+

|

| 78 |

+

timesteps = np.linspace(1, num_train_timesteps, num_train_timesteps, dtype=np.float32)[::-1].copy()

|

| 79 |

+

timesteps = torch.from_numpy(timesteps).to(dtype=torch.float32)

|

| 80 |

+

|

| 81 |

+

sigmas = timesteps / num_train_timesteps

|

| 82 |

+

sigmas = shift * sigmas / (1 + (shift - 1) * sigmas)

|

| 83 |

+

|

| 84 |

+

self.timesteps = sigmas * num_train_timesteps

|

| 85 |

+

|

| 86 |

+

self._step_index = None

|

| 87 |

+

self._begin_index = None

|

| 88 |

+

|

| 89 |

+

self.sigmas = sigmas.to("cpu") # to avoid too much CPU/GPU communication

|

| 90 |

+

|

| 91 |

+

def init_sigmas_for_each_stage(self):

|

| 92 |

+

"""

|

| 93 |

+

Init the timesteps for each stage

|

| 94 |

+

"""

|

| 95 |

+

self.init_sigmas()

|

| 96 |

+

|

| 97 |

+

stage_distance = []

|

| 98 |

+

stages = self.config.stages

|

| 99 |

+

training_steps = self.config.num_train_timesteps

|

| 100 |

+

stage_range = self.config.stage_range

|

| 101 |

+

|

| 102 |

+

# Init the start and end point of each stage

|

| 103 |

+

for i_s in range(stages):

|

| 104 |

+

# To decide the start and ends point

|

| 105 |

+

start_indice = int(stage_range[i_s] * training_steps)

|

| 106 |

+

start_indice = max(start_indice, 0)

|

| 107 |

+

end_indice = int(stage_range[i_s+1] * training_steps)

|

| 108 |

+

end_indice = min(end_indice, training_steps)

|

| 109 |

+

start_sigma = self.sigmas[start_indice].item()

|

| 110 |

+

end_sigma = self.sigmas[end_indice].item() if end_indice < training_steps else 0.0

|

| 111 |

+

self.ori_start_sigmas[i_s] = start_sigma

|

| 112 |

+

|

| 113 |

+

if i_s != 0:

|

| 114 |

+

ori_sigma = 1 - start_sigma

|

| 115 |

+

gamma = self.config.gamma

|

| 116 |

+

corrected_sigma = (1 / (math.sqrt(1 + (1 / gamma)) * (1 - ori_sigma) + ori_sigma)) * ori_sigma

|

| 117 |

+

# corrected_sigma = 1 / (2 - ori_sigma) * ori_sigma

|

| 118 |

+

start_sigma = 1 - corrected_sigma

|

| 119 |

+

|

| 120 |

+

stage_distance.append(start_sigma - end_sigma)

|

| 121 |

+

self.start_sigmas[i_s] = start_sigma

|

| 122 |

+

self.end_sigmas[i_s] = end_sigma

|

| 123 |

+

|

| 124 |

+

# Determine the ratio of each stage according to flow length

|

| 125 |

+

tot_distance = sum(stage_distance)

|

| 126 |

+

for i_s in range(stages):

|

| 127 |

+

if i_s == 0:

|

| 128 |

+

start_ratio = 0.0

|

| 129 |

+

else:

|

| 130 |

+

start_ratio = sum(stage_distance[:i_s]) / tot_distance

|

| 131 |

+

if i_s == stages - 1:

|

| 132 |

+

end_ratio = 1.0

|

| 133 |

+

else:

|

| 134 |

+

end_ratio = sum(stage_distance[:i_s+1]) / tot_distance

|

| 135 |

+

|

| 136 |

+

self.timestep_ratios[i_s] = (start_ratio, end_ratio)

|

| 137 |

+

|

| 138 |

+

# Determine the timesteps and sigmas for each stage

|

| 139 |

+

for i_s in range(stages):

|

| 140 |

+

timestep_ratio = self.timestep_ratios[i_s]

|

| 141 |

+

timestep_max = self.timesteps[int(timestep_ratio[0] * training_steps)]

|

| 142 |

+

timestep_min = self.timesteps[min(int(timestep_ratio[1] * training_steps), training_steps - 1)]

|

| 143 |

+

timesteps = np.linspace(

|

| 144 |

+

timestep_max, timestep_min, training_steps + 1,

|

| 145 |

+

)

|

| 146 |

+

self.timesteps_per_stage[i_s] = torch.from_numpy(timesteps[:-1])

|

| 147 |

+

stage_sigmas = np.linspace(

|

| 148 |

+

1, 0, training_steps + 1,

|

| 149 |

+

)

|

| 150 |

+

self.sigmas_per_stage[i_s] = torch.from_numpy(stage_sigmas[:-1])

|

| 151 |

+

|

| 152 |

+

@property

|

| 153 |

+

def step_index(self):

|

| 154 |

+

"""

|

| 155 |

+

The index counter for current timestep. It will increase 1 after each scheduler step.

|

| 156 |

+

"""

|

| 157 |

+

return self._step_index

|

| 158 |

+

|

| 159 |

+

@property

|

| 160 |

+

def begin_index(self):

|

| 161 |

+

"""

|

| 162 |

+

The index for the first timestep. It should be set from pipeline with `set_begin_index` method.

|

| 163 |

+

"""

|

| 164 |

+

return self._begin_index

|

| 165 |

+

|

| 166 |

+

# Copied from diffusers.schedulers.scheduling_dpmsolver_multistep.DPMSolverMultistepScheduler.set_begin_index

|

| 167 |

+

def set_begin_index(self, begin_index: int = 0):

|

| 168 |

+

"""

|

| 169 |

+

Sets the begin index for the scheduler. This function should be run from pipeline before the inference.

|

| 170 |

+

|

| 171 |

+

Args:

|

| 172 |

+

begin_index (`int`):

|

| 173 |

+

The begin index for the scheduler.

|

| 174 |

+

"""

|

| 175 |

+

self._begin_index = begin_index

|

| 176 |

+

|

| 177 |

+

def _sigma_to_t(self, sigma):

|

| 178 |

+

return sigma * self.config.num_train_timesteps

|

| 179 |

+

|

| 180 |

+

def set_timesteps(self, num_inference_steps: int, stage_index: int, device: Union[str, torch.device] = None):

|

| 181 |

+

"""

|

| 182 |

+

Setting the timesteps and sigmas for each stage

|

| 183 |

+

"""

|

| 184 |

+

self.num_inference_steps = num_inference_steps

|

| 185 |

+

training_steps = self.config.num_train_timesteps

|

| 186 |

+

self.init_sigmas()

|

| 187 |

+

|

| 188 |

+

stage_timesteps = self.timesteps_per_stage[stage_index]

|

| 189 |

+

timestep_max = stage_timesteps[0].item()

|

| 190 |

+

timestep_min = stage_timesteps[-1].item()

|

| 191 |

+

|

| 192 |

+

timesteps = np.linspace(

|

| 193 |

+

timestep_max, timestep_min, num_inference_steps,

|

| 194 |

+

)

|

| 195 |

+

self.timesteps = torch.from_numpy(timesteps).to(device=device)

|

| 196 |

+

|

| 197 |

+

stage_sigmas = self.sigmas_per_stage[stage_index]

|

| 198 |

+

sigma_max = stage_sigmas[0].item()

|

| 199 |

+

sigma_min = stage_sigmas[-1].item()

|

| 200 |

+

|

| 201 |

+

ratios = np.linspace(

|

| 202 |

+

sigma_max, sigma_min, num_inference_steps

|

| 203 |

+

)

|

| 204 |

+

sigmas = torch.from_numpy(ratios).to(device=device)

|

| 205 |

+

self.sigmas = torch.cat([sigmas, torch.zeros(1, device=sigmas.device)])

|

| 206 |

+

|

| 207 |

+

self._step_index = None

|

| 208 |

+

|

| 209 |

+

def index_for_timestep(self, timestep, schedule_timesteps=None):

|

| 210 |

+

if schedule_timesteps is None:

|

| 211 |

+

schedule_timesteps = self.timesteps

|

| 212 |

+

|

| 213 |

+

indices = (schedule_timesteps == timestep).nonzero()

|

| 214 |

+

|

| 215 |

+

# The sigma index that is taken for the **very** first `step`

|

| 216 |

+

# is always the second index (or the last index if there is only 1)

|

| 217 |

+

# This way we can ensure we don't accidentally skip a sigma in

|

| 218 |

+

# case we start in the middle of the denoising schedule (e.g. for image-to-image)

|

| 219 |

+

pos = 1 if len(indices) > 1 else 0

|

| 220 |

+

|

| 221 |

+

return indices[pos].item()

|

| 222 |

+

|

| 223 |

+

def _init_step_index(self, timestep):

|

| 224 |

+

if self.begin_index is None:

|

| 225 |

+

if isinstance(timestep, torch.Tensor):

|

| 226 |

+

timestep = timestep.to(self.timesteps.device)

|

| 227 |

+

self._step_index = self.index_for_timestep(timestep)

|

| 228 |

+

else:

|

| 229 |

+

self._step_index = self._begin_index

|

| 230 |

+

|

| 231 |

+

def step(

|

| 232 |

+

self,

|

| 233 |

+

model_output: torch.FloatTensor,

|

| 234 |

+

timestep: Union[float, torch.FloatTensor],

|

| 235 |

+

sample: torch.FloatTensor,

|

| 236 |

+

generator: Optional[torch.Generator] = None,

|

| 237 |

+

return_dict: bool = True,

|

| 238 |

+

) -> Union[FlowMatchEulerDiscreteSchedulerOutput, Tuple]:

|

| 239 |

+

"""

|

| 240 |

+

Predict the sample from the previous timestep by reversing the SDE. This function propagates the diffusion

|

| 241 |

+

process from the learned model outputs (most often the predicted noise).

|

| 242 |

+

|

| 243 |

+

Args:

|

| 244 |

+

model_output (`torch.FloatTensor`):

|

| 245 |

+

The direct output from learned diffusion model.

|

| 246 |

+

timestep (`float`):

|

| 247 |

+

The current discrete timestep in the diffusion chain.

|

| 248 |

+

sample (`torch.FloatTensor`):

|

| 249 |

+

A current instance of a sample created by the diffusion process.

|

| 250 |

+

generator (`torch.Generator`, *optional*):

|

| 251 |

+

A random number generator.

|

| 252 |

+

return_dict (`bool`):

|

| 253 |

+

Whether or not to return a [`~schedulers.scheduling_euler_discrete.EulerDiscreteSchedulerOutput`] or

|

| 254 |

+

tuple.

|

| 255 |

+

|

| 256 |

+

Returns:

|

| 257 |

+

[`~schedulers.scheduling_euler_discrete.EulerDiscreteSchedulerOutput`] or `tuple`:

|

| 258 |

+

If return_dict is `True`, [`~schedulers.scheduling_euler_discrete.EulerDiscreteSchedulerOutput`] is

|

| 259 |

+

returned, otherwise a tuple is returned where the first element is the sample tensor.

|

| 260 |

+

"""

|

| 261 |

+

|

| 262 |

+

if (

|

| 263 |

+

isinstance(timestep, int)

|

| 264 |

+

or isinstance(timestep, torch.IntTensor)

|

| 265 |

+

or isinstance(timestep, torch.LongTensor)

|

| 266 |

+

):

|

| 267 |

+

raise ValueError(

|

| 268 |

+

(

|

| 269 |

+

"Passing integer indices (e.g. from `enumerate(timesteps)`) as timesteps to"

|

| 270 |

+

" `EulerDiscreteScheduler.step()` is not supported. Make sure to pass"

|

| 271 |

+

" one of the `scheduler.timesteps` as a timestep."

|

| 272 |

+

),

|

| 273 |

+

)

|

| 274 |

+

|

| 275 |

+

if self.step_index is None:

|

| 276 |

+

self._step_index = 0

|

| 277 |

+

|

| 278 |

+

# Upcast to avoid precision issues when computing prev_sample

|

| 279 |

+

sample = sample.to(torch.float32)

|

| 280 |

+

|

| 281 |

+

sigma = self.sigmas[self.step_index]

|

| 282 |

+

sigma_next = self.sigmas[self.step_index + 1]

|

| 283 |

+

|

| 284 |

+

prev_sample = sample + (sigma_next - sigma) * model_output

|

| 285 |

+

|

| 286 |

+

# Cast sample back to model compatible dtype

|

| 287 |

+

prev_sample = prev_sample.to(model_output.dtype)

|

| 288 |

+

|

| 289 |

+

# upon completion increase step index by one

|

| 290 |

+

self._step_index += 1

|

| 291 |

+

|

| 292 |

+

if not return_dict:

|

| 293 |

+

return (prev_sample,)

|

| 294 |

+

|

| 295 |

+

return FlowMatchEulerDiscreteSchedulerOutput(prev_sample=prev_sample)

|

| 296 |

+

|

| 297 |

+

def __len__(self):

|

| 298 |

+

return self.config.num_train_timesteps

|

pyramid_dit/__init__.py

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .modeling_pyramid_mmdit import PyramidDiffusionMMDiT

|

| 2 |

+

from .pyramid_dit_for_video_gen_pipeline import PyramidDiTForVideoGeneration

|

| 3 |

+

from .modeling_text_encoder import SD3TextEncoderWithMask

|

pyramid_dit/modeling_embedding.py

ADDED

|

@@ -0,0 +1,390 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import Any, Dict, Optional, Union

|

| 2 |

+

|

| 3 |

+

import torch

|

| 4 |

+

import torch.nn as nn

|

| 5 |

+

import numpy as np

|

| 6 |

+

import math

|

| 7 |

+

|

| 8 |

+

from diffusers.models.activations import get_activation

|

| 9 |

+

from einops import rearrange

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

def get_1d_sincos_pos_embed(

|

| 13 |

+

embed_dim, num_frames, cls_token=False, extra_tokens=0,

|

| 14 |

+

):

|

| 15 |

+

t = np.arange(num_frames, dtype=np.float32)

|

| 16 |

+

pos_embed = get_1d_sincos_pos_embed_from_grid(embed_dim, t) # (T, D)

|

| 17 |

+

if cls_token and extra_tokens > 0:

|

| 18 |

+

pos_embed = np.concatenate([np.zeros([extra_tokens, embed_dim]), pos_embed], axis=0)

|

| 19 |

+

return pos_embed

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def get_2d_sincos_pos_embed(

|

| 23 |

+

embed_dim, grid_size, cls_token=False, extra_tokens=0, interpolation_scale=1.0, base_size=16

|

| 24 |

+

):

|

| 25 |

+

"""

|

| 26 |

+

grid_size: int of the grid height and width return: pos_embed: [grid_size*grid_size, embed_dim] or

|

| 27 |

+

[1+grid_size*grid_size, embed_dim] (w/ or w/o cls_token)

|

| 28 |

+

"""

|

| 29 |

+

if isinstance(grid_size, int):

|

| 30 |

+

grid_size = (grid_size, grid_size)

|

| 31 |

+

|

| 32 |

+

grid_h = np.arange(grid_size[0], dtype=np.float32) / (grid_size[0] / base_size) / interpolation_scale

|

| 33 |

+

grid_w = np.arange(grid_size[1], dtype=np.float32) / (grid_size[1] / base_size) / interpolation_scale

|

| 34 |

+

grid = np.meshgrid(grid_w, grid_h) # here w goes first

|

| 35 |

+

grid = np.stack(grid, axis=0)

|

| 36 |

+

|

| 37 |

+

grid = grid.reshape([2, 1, grid_size[1], grid_size[0]])

|

| 38 |

+

pos_embed = get_2d_sincos_pos_embed_from_grid(embed_dim, grid)

|

| 39 |

+

if cls_token and extra_tokens > 0:

|

| 40 |

+

pos_embed = np.concatenate([np.zeros([extra_tokens, embed_dim]), pos_embed], axis=0)

|

| 41 |

+

return pos_embed

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

def get_2d_sincos_pos_embed_from_grid(embed_dim, grid):

|

| 45 |

+

if embed_dim % 2 != 0:

|

| 46 |

+

raise ValueError("embed_dim must be divisible by 2")

|

| 47 |

+

|

| 48 |

+

# use half of dimensions to encode grid_h

|

| 49 |

+