Sofian Hadiwijaya

committed on

Commit 7e9d3a4

Parent(s): 42b94b0

add files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +5 -0

- Dockerfile +89 -0

- LICENSE +21 -0

- README.md +8 -8

- app.py +294 -0

- assets/BBOX_SHIFT.md +26 -0

- assets/demo/man/man.png +3 -0

- assets/demo/monalisa/monalisa.png +0 -0

- assets/demo/musk/musk.png +0 -0

- assets/demo/sit/sit.jpeg +0 -0

- assets/demo/sun1/sun.png +0 -0

- assets/demo/sun2/sun.png +0 -0

- assets/demo/video1/video1.png +0 -0

- assets/demo/yongen/yongen.jpeg +0 -0

- assets/figs/landmark_ref.png +0 -0

- assets/figs/musetalk_arc.jpg +0 -0

- configs/inference/test.yaml +10 -0

- data/audio/sun.wav +3 -0

- data/audio/yongen.wav +3 -0

- data/video/sun.mp4 +3 -0

- data/video/yongen.mp4 +3 -0

- entrypoint.sh +11 -0

- install_ffmpeg.sh +70 -0

- musetalk/models/unet.py +47 -0

- musetalk/models/vae.py +148 -0

- musetalk/utils/__init__.py +5 -0

- musetalk/utils/blending.py +59 -0

- musetalk/utils/dwpose/default_runtime.py +54 -0

- musetalk/utils/dwpose/rtmpose-l_8xb32-270e_coco-ubody-wholebody-384x288.py +257 -0

- musetalk/utils/face_detection/README.md +1 -0

- musetalk/utils/face_detection/__init__.py +7 -0

- musetalk/utils/face_detection/api.py +240 -0

- musetalk/utils/face_detection/detection/__init__.py +1 -0

- musetalk/utils/face_detection/detection/core.py +130 -0

- musetalk/utils/face_detection/detection/sfd/__init__.py +1 -0

- musetalk/utils/face_detection/detection/sfd/bbox.py +129 -0

- musetalk/utils/face_detection/detection/sfd/detect.py +114 -0

- musetalk/utils/face_detection/detection/sfd/net_s3fd.py +129 -0

- musetalk/utils/face_detection/detection/sfd/sfd_detector.py +59 -0

- musetalk/utils/face_detection/models.py +261 -0

- musetalk/utils/face_detection/utils.py +313 -0

- musetalk/utils/face_parsing/__init__.py +56 -0

- musetalk/utils/face_parsing/model.py +283 -0

- musetalk/utils/face_parsing/resnet.py +109 -0

- musetalk/utils/preprocessing.py +113 -0

- musetalk/utils/utils.py +61 -0

- musetalk/whisper/audio2feature.py +124 -0

- musetalk/whisper/whisper/__init__.py +116 -0

- musetalk/whisper/whisper/__main__.py +4 -0

- musetalk/whisper/whisper/assets/gpt2/merges.txt +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/demo/man/man.png filter=lfs diff=lfs merge=lfs -text
+data/audio/sun.wav filter=lfs diff=lfs merge=lfs -text
+data/audio/yongen.wav filter=lfs diff=lfs merge=lfs -text
+data/video/sun.mp4 filter=lfs diff=lfs merge=lfs -text
+data/video/yongen.mp4 filter=lfs diff=lfs merge=lfs -text
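The hunk adds five exact paths to the LFS-tracked list, next to existing wildcard patterns such as `*.zip` and `*tfevents*`. The sketch below roughly illustrates how such patterns select files. It assumes Python's `fnmatch` is an acceptable stand-in for git's own matcher, which it is not exactly: git's `*` does not cross `/`. `LFS_PATTERNS` and `is_lfs_tracked` are hypothetical names, and the pattern list covers only the lines visible in this hunk.

```python
import fnmatch
import posixpath

# Patterns visible in the hunk above; the hunk starts at line 33, so the full file has more.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "assets/demo/man/man.png",
    "data/audio/sun.wav",
    "data/audio/yongen.wav",
    "data/video/sun.mp4",
    "data/video/yongen.mp4",
]


def is_lfs_tracked(path: str, patterns=tuple(LFS_PATTERNS)) -> bool:
    """Approximate check of whether a repo-relative POSIX path hits an LFS pattern.

    Patterns without '/' are compared with the basename, so they match at any depth.
    Patterns containing '/' are compared with the whole path, so they are anchored at
    the directory holding .gitattributes (here the repository root).
    Unlike git, fnmatch's '*' also matches '/'.
    """
    name = posixpath.basename(path)
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatch.fnmatchcase(target, pattern):
            return True
    return False


for p in ["data/audio/sun.wav", "assets/demo/monalisa/monalisa.png", "logs/events.out.tfevents.1"]:
    print(p, is_lfs_tracked(p))
```

For an authoritative answer, `git check-attr filter -- data/audio/sun.wav` asks git itself. It should report `filter: lfs` for each path added here.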

Dockerfile

ADDED

@@ -0,0 +1,89 @@
+FROM anchorxia/musev:latest
+
+#MAINTAINER maintainer information
+LABEL MAINTAINER="zkangchen"
+LABEL Email="[email protected]"
+LABEL Description="musev gradio image, from docker pull anchorxia/musev:latest"
+
+SHELL ["/bin/bash", "--login", "-c"]
+
+# Set up a new user named "user" with user ID 1000
+RUN useradd -m -u 1000 user
+
+# Switch to the "user" user
+USER user
+
+# Set home to the user's home directory
+ENV HOME=/home/user \
+    PATH=/home/user/.local/bin:$PATH
+
+# Set the working directory to the user's home directory
+WORKDIR $HOME/app
+
+
+################################################# INSTALLING FFMPEG ##################################################
+# RUN apt-get update ; apt-get install -y git build-essential gcc make yasm autoconf automake cmake libtool checkinstall libmp3lame-dev pkg-config libunwind-dev zlib1g-dev libssl-dev
+
+# RUN apt-get update \
+#     && apt-get clean \
+#     && apt-get install -y --no-install-recommends libc6-dev libgdiplus wget software-properties-common
+
+#RUN apt-add-repository ppa:git-core/ppa && apt-get update && apt-get install -y git
+
+# RUN wget https://www.ffmpeg.org/releases/ffmpeg-4.0.2.tar.gz
+# RUN tar -xzf ffmpeg-4.0.2.tar.gz; rm -r ffmpeg-4.0.2.tar.gz
+# RUN cd ./ffmpeg-4.0.2; ./configure --enable-gpl --enable-libmp3lame --enable-decoder=mjpeg,png --enable-encoder=png --enable-openssl --enable-nonfree
+
+
+# RUN cd ./ffmpeg-4.0.2; make
+# RUN cd ./ffmpeg-4.0.2; make install
+######################################################################################################################
+
+RUN echo "docker start"\
+    && whoami \
+    && which python \
+    && pwd
+
+RUN git clone -b main --recursive https://github.com/TMElyralab/MuseTalk.git
+
+RUN chmod -R 777 /home/user/app/MuseTalk
+
+
+
+RUN . /opt/conda/etc/profile.d/conda.sh \
+    && echo "source activate musev" >> ~/.bashrc \
+    && conda activate musev \
+    && conda env list
+# && conda install ffmpeg
+
+RUN ffmpeg -codecs
+
+
+
+
+
+WORKDIR /home/user/app/MuseTalk/
+
+RUN pip install -r requirements.txt \
+    && pip install --no-cache-dir -U openmim \
+    && mim install mmengine \
+    && mim install "mmcv>=2.0.1" \
+    && mim install "mmdet>=3.1.0" \
+    && mim install "mmpose>=1.1.0"
+
+
+# Add entrypoint script
+#RUN chmod 777 ./entrypoint.sh
+RUN ls -l ./
+
+EXPOSE 7860
+
+# CMD ["/bin/bash", "-c", "python app.py"]
+CMD ["./install_ffmpeg.sh"]
+CMD ["./entrypoint.sh"]
+
+
+
+
+
+

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2024 TMElyralab
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

CHANGED

@@ -1,13 +1,13 @@
 ---
-title:
-emoji:
-colorFrom:
+title: MuseTalkDemo
+emoji: 🌍
+colorFrom: gray
 colorTo: purple
-sdk:
-sdk_version: 4.44.1
-app_file: app.py
+sdk: docker
 pinned: false
-license:
+license: creativeml-openrail-m
+app_file: app.py
+app_port: 7860
 ---
 
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
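After this change the Space metadata selects `sdk: docker` and sets `app_port: 7860`. That matches `EXPOSE 7860` in the Dockerfile and `port_number = 7860` in `app.py`. The Hugging Face Spaces configuration reference describes `app_port` as the port a Docker Space's app listens on.

The sketch below reads these keys. It assumes the front matter contains only flat `key: value` lines; a real tool should use a proper YAML parser. `parse_front_matter` is a hypothetical helper.

```python
def parse_front_matter(text: str) -> dict:
    """Parse a flat `key: value` front-matter block delimited by '---' lines.

    Sketch only: nested mappings, lists, and quoting are not handled,
    and every value is returned as a string.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the front-matter block
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


readme = """---
title: MuseTalkDemo
emoji: 🌍
colorFrom: gray
colorTo: purple
sdk: docker
pinned: false
license: creativeml-openrail-m
app_file: app.py
app_port: 7860
---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
"""

meta = parse_front_matter(readme)
print(meta["sdk"], int(meta["app_port"]))  # docker 7860
```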

app.py

ADDED

@@ -0,0 +1,294 @@
+import os
+import time
+import pdb
+
+import gradio as gr
+import spaces
+import numpy as np
+import sys
+import subprocess
+
+from huggingface_hub import snapshot_download
+import requests
+
+import argparse
+import os
+from omegaconf import OmegaConf
+import numpy as np
+import cv2
+import torch
+import glob
+import pickle
+from tqdm import tqdm
+import copy
+from argparse import Namespace
+import shutil
+import gdown
+
+ProjectDir = os.path.abspath(os.path.dirname(__file__))
+CheckpointsDir = os.path.join(ProjectDir, "checkpoints")
+
+
+def download_model():
+    if not os.path.exists(CheckpointsDir):
+        os.makedirs(CheckpointsDir)
+        print("Checkpoint Not Downloaded, start downloading...")
+        tic = time.time()
+        snapshot_download(
+            repo_id="TMElyralab/MuseTalk",
+            local_dir=CheckpointsDir,
+            max_workers=8,
+            local_dir_use_symlinks=True,
+        )
+        #vae
+        snapshot_download(
+            repo_id="stabilityai/sd-vae-ft-mse",
+            local_dir=CheckpointsDir,
+            max_workers=8,
+            local_dir_use_symlinks=True,
+        )
+        #dwpose
+        snapshot_download(
+            repo_id="yzd-v/DWPose",
+            local_dir=CheckpointsDir,
+            max_workers=8,
+            local_dir_use_symlinks=True,
+        )
+        #whisper
+        url = "https://openaipublic.azureedge.net/main/whisper/models/65147644a518d12f04e32d6f3b26facc3f8dd46e5390956a9424a650c0ce22b9/tiny.pt"
+        response = requests.get(url)
+        # Make sure the request succeeded
+        if response.status_code == 200:
+            # Path where the file will be saved
+            file_path = f"{CheckpointsDir}/whisper/tiny.pt"
+            os.makedirs(f"{CheckpointsDir}/whisper/")
+            # Write the downloaded content to that path
+            with open(file_path, "wb") as f:
+                f.write(response.content)
+        else:
+            print(f"Request failed, status code: {response.status_code}")
+        #gdown face parse
+        url = "https://drive.google.com/uc?id=154JgKpzCPW82qINcVieuPH3fZ2e0P812"
+        os.makedirs(f"{CheckpointsDir}/face-parse-bisent/")
+        file_path = f"{CheckpointsDir}/face-parse-bisent/79999_iter.pth"
+        gdown.download(url, file_path, quiet=False)
+        #resnet
+        url = "https://download.pytorch.org/models/resnet18-5c106cde.pth"
+        response = requests.get(url)
+        # Make sure the request succeeded
+        if response.status_code == 200:
+            # Path where the file will be saved
+            file_path = f"{CheckpointsDir}/face-parse-bisent/resnet18-5c106cde.pth"
+            # Write the downloaded content to that path
+            with open(file_path, "wb") as f:
+                f.write(response.content)
+        else:
+            print(f"Request failed, status code: {response.status_code}")
+
+
+        toc = time.time()
+
+        print(f"download cost {toc-tic} seconds")
+    else:
+        print("Already download the model.")
+
+
+
+download_model() # for huggingface deployment.
+
+
+from musetalk.utils.utils import get_file_type,get_video_fps,datagen
+from musetalk.utils.preprocessing import get_landmark_and_bbox,read_imgs,coord_placeholder
+from musetalk.utils.blending import get_image
+from musetalk.utils.utils import load_all_model
+
+
+
+
+@spaces.GPU(duration=600)
+@torch.no_grad()
+def inference(audio_path,video_path,bbox_shift,progress=gr.Progress(track_tqdm=True)):
+    args_dict={"result_dir":'./results', "fps":25, "batch_size":8, "output_vid_name":'', "use_saved_coord":False}# same as the inference script
+    args = Namespace(**args_dict)
+
+    input_basename = os.path.basename(video_path).split('.')[0]
+    audio_basename = os.path.basename(audio_path).split('.')[0]
+    output_basename = f"{input_basename}_{audio_basename}"
+    result_img_save_path = os.path.join(args.result_dir, output_basename) # related to video & audio inputs
+    crop_coord_save_path = os.path.join(result_img_save_path, input_basename+".pkl") # only related to video input
+    os.makedirs(result_img_save_path,exist_ok =True)
+
+    if args.output_vid_name=="":
+        output_vid_name = os.path.join(args.result_dir, output_basename+".mp4")
+    else:
+        output_vid_name = os.path.join(args.result_dir, args.output_vid_name)
+    ############################################## extract frames from source video ##############################################
+    if get_file_type(video_path)=="video":
+        save_dir_full = os.path.join(args.result_dir, input_basename)
+        os.makedirs(save_dir_full,exist_ok = True)
+        cmd = f"ffmpeg -v fatal -i {video_path} -start_number 0 {save_dir_full}/%08d.png"
+        os.system(cmd)
+        input_img_list = sorted(glob.glob(os.path.join(save_dir_full, '*.[jpJP][pnPN]*[gG]')))
+        fps = get_video_fps(video_path)
+    else: # input img folder
+        input_img_list = glob.glob(os.path.join(video_path, '*.[jpJP][pnPN]*[gG]'))
+        input_img_list = sorted(input_img_list, key=lambda x: int(os.path.splitext(os.path.basename(x))[0]))
+        fps = args.fps
+    #print(input_img_list)
+    ############################################## extract audio feature ##############################################
+    whisper_feature = audio_processor.audio2feat(audio_path)
+    whisper_chunks = audio_processor.feature2chunks(feature_array=whisper_feature,fps=fps)
+    ############################################## preprocess input image ##############################################
+    if os.path.exists(crop_coord_save_path) and args.use_saved_coord:
+        print("using extracted coordinates")
+        with open(crop_coord_save_path,'rb') as f:
+            coord_list = pickle.load(f)
+        frame_list = read_imgs(input_img_list)
+    else:
+        print("extracting landmarks...time consuming")
+        coord_list, frame_list = get_landmark_and_bbox(input_img_list, bbox_shift)
+        with open(crop_coord_save_path, 'wb') as f:
+            pickle.dump(coord_list, f)
+
+    i = 0
+    input_latent_list = []
+    for bbox, frame in zip(coord_list, frame_list):
+        if bbox == coord_placeholder:
+            continue
+        x1, y1, x2, y2 = bbox
+        crop_frame = frame[y1:y2, x1:x2]
+        crop_frame = cv2.resize(crop_frame,(256,256),interpolation = cv2.INTER_LANCZOS4)
+        latents = vae.get_latents_for_unet(crop_frame)
+        input_latent_list.append(latents)
+
+    # to smooth the first and the last frame
+    frame_list_cycle = frame_list + frame_list[::-1]
+    coord_list_cycle = coord_list + coord_list[::-1]
+    input_latent_list_cycle = input_latent_list + input_latent_list[::-1]
+    ############################################## inference batch by batch ##############################################
+    print("start inference")
+    video_num = len(whisper_chunks)
+    batch_size = args.batch_size
+    gen = datagen(whisper_chunks,input_latent_list_cycle,batch_size)
+    res_frame_list = []
+    for i, (whisper_batch,latent_batch) in enumerate(tqdm(gen,total=int(np.ceil(float(video_num)/batch_size)))):
+
+        tensor_list = [torch.FloatTensor(arr) for arr in whisper_batch]
+        audio_feature_batch = torch.stack(tensor_list).to(unet.device) # torch, B, 5*N,384
+        audio_feature_batch = pe(audio_feature_batch)
+
+        pred_latents = unet.model(latent_batch, timesteps, encoder_hidden_states=audio_feature_batch).sample
+        recon = vae.decode_latents(pred_latents)
+        for res_frame in recon:
+            res_frame_list.append(res_frame)
+
+    ############################################## pad to full image ##############################################
+    print("pad talking image to original video")
+    for i, res_frame in enumerate(tqdm(res_frame_list)):
+        bbox = coord_list_cycle[i%(len(coord_list_cycle))]
+        ori_frame = copy.deepcopy(frame_list_cycle[i%(len(frame_list_cycle))])
+        x1, y1, x2, y2 = bbox
+        try:
+            res_frame = cv2.resize(res_frame.astype(np.uint8),(x2-x1,y2-y1))
+        except:
+            # print(bbox)
+            continue
+
+        combine_frame = get_image(ori_frame,res_frame,bbox)
+        cv2.imwrite(f"{result_img_save_path}/{str(i).zfill(8)}.png",combine_frame)
+
+    cmd_img2video = f"ffmpeg -y -v fatal -r {fps} -f image2 -i {result_img_save_path}/%08d.png -vcodec libx264 -vf format=rgb24,scale=out_color_matrix=bt709,format=yuv420p -crf 18 temp.mp4"
+    print(cmd_img2video)
+    os.system(cmd_img2video)
+
+    cmd_combine_audio = f"ffmpeg -y -v fatal -i {audio_path} -i temp.mp4 {output_vid_name}"
+    print(cmd_combine_audio)
+    os.system(cmd_combine_audio)
+
+    os.remove("temp.mp4")
+    shutil.rmtree(result_img_save_path)
+    print(f"result is save to {output_vid_name}")
+    return output_vid_name
+
+
+
+# load model weights
+audio_processor,vae,unet,pe = load_all_model()
+device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
+timesteps = torch.tensor([0], device=device)
+
+
+
+
+def check_video(video):
+    # Define the output video file name
+    dir_path, file_name = os.path.split(video)
+    if file_name.startswith("outputxxx_"):
+        return video
+    # Add the output prefix to the file name
+    output_file_name = "outputxxx_" + file_name
+
+    # Combine the directory path and the new file name
+    output_video = os.path.join(dir_path, output_file_name)
+
+
+    # Run the ffmpeg command to change the frame rate to 25fps
+    command = f"ffmpeg -i {video} -r 25 {output_video} -y"
+    subprocess.run(command, shell=True, check=True)
+    return output_video
+
+
+
+
+css = """#input_img {max-width: 1024px !important} #output_vid {max-width: 1024px; max-height: 576px}"""
+
+with gr.Blocks(css=css) as demo:
+    gr.Markdown(
+        "<div align='center'> <h1>MuseTalk: Real-Time High Quality Lip Synchronization with Latent Space Inpainting </span> </h1> \
+        <h2 style='font-weight: 450; font-size: 1rem; margin: 0rem'>\
+        </br>\
+        Yue Zhang <sup>\*</sup>,\
+        Minhao Liu<sup>\*</sup>,\
+        Zhaokang Chen,\
+        Bin Wu<sup>†</sup>,\
+        Yingjie He,\
+        Chao Zhan,\
+        Wenjiang Zhou\
+        (<sup>*</sup>Equal Contribution, <sup>†</sup>Corresponding Author, [email protected])\
+        Lyra Lab, Tencent Music Entertainment\
+        </h2> \
+        <a style='font-size:18px;color: #000000' href='https://github.com/TMElyralab/MuseTalk'>[Github Repo]</a>\
+        <a style='font-size:18px;color: #000000' href='https://github.com/TMElyralab/MuseTalk'>[Huggingface]</a>\
+        <a style='font-size:18px;color: #000000' href=''> [Technical report(Coming Soon)] </a>\
+        <a style='font-size:18px;color: #000000' href=''> [Project Page(Coming Soon)] </a> </div>"
+    )
+
+    with gr.Row():
+        with gr.Column():
+            audio = gr.Audio(label="Driven Audio",type="filepath")
+            video = gr.Video(label="Reference Video")
+            bbox_shift = gr.Number(label="BBox_shift,[-9,9]", value=-1)
+            btn = gr.Button("Generate")
+        out1 = gr.Video()
+
+    video.change(
+        fn=check_video, inputs=[video], outputs=[video]
+    )
+    btn.click(
+        fn=inference,
+        inputs=[
+            audio,
+            video,
+            bbox_shift,
+        ],
+        outputs=out1,
+    )
+
+# Set the IP and port
+ip_address = "0.0.0.0" # Replace with your desired IP address
+port_number = 7860 # Replace with your desired port number
+
+
+demo.queue().launch(
+    share=False , debug=True, server_name=ip_address, server_port=port_number
+)
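`inference()` appends a reversed copy of the frame, coordinate, and latent lists (`frame_list + frame_list[::-1]`). It then picks the frame for output `i` with `i % len(...)`. So when the audio needs more frames than the reference video has, playback runs backward and then forward again rather than jumping from the last frame straight back to the first.

The sketch below shows that index pattern, plus the batch count used as the `tqdm` total. The helper names are hypothetical, and the sketch ignores frames that `inference()` skips because their bbox equals `coord_placeholder`. `datagen` itself is not shown in this view, so its batching is not reproduced here.

```python
import math
from typing import List, TypeVar

T = TypeVar("T")


def pingpong_cycle(items: List[T]) -> List[T]:
    """Forward pass followed by a reversed pass, like `frame_list + frame_list[::-1]`."""
    return items + items[::-1]


def source_index(i: int, num_frames: int) -> int:
    """Index of the source frame reused for output frame i (hypothetical helper)."""
    cycle = pingpong_cycle(list(range(num_frames)))
    return cycle[i % len(cycle)]


def num_batches(num_chunks: int, batch_size: int) -> int:
    """Batch count used as the tqdm total: ceil(video_num / batch_size)."""
    return math.ceil(num_chunks / batch_size)


# 4 source frames but 10 audio chunks: the index bounces back instead of jumping to frame 0.
print([source_index(i, 4) for i in range(10)])  # [0, 1, 2, 3, 3, 2, 1, 0, 0, 1]
print(num_batches(10, 8))                       # 2
```

One side effect of concatenating the reversed list is that each turning point shows the same source frame twice in a row (here `3, 3` and `0, 0`).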

|

assets/BBOX_SHIFT.md

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

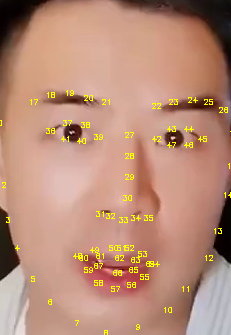

| 1 |

+

## Why is there a "bbox_shift" parameter?

|

| 2 |

+

When processing training data, we utilize the combination of face detection results (bbox) and facial landmarks to determine the region of the head segmentation box. Specifically, we use the upper bound of the bbox as the upper boundary of the segmentation box, the maximum y value of the facial landmarks coordinates as the lower boundary of the segmentation box, and the minimum and maximum x values of the landmarks coordinates as the left and right boundaries of the segmentation box. By processing the dataset in this way, we can ensure the integrity of the face.

|

| 3 |

+

|

| 4 |

+

However, we have observed that the masked ratio on the face varies across different images due to the varying face shapes of subjects. Furthermore, we found that the upper-bound of the mask mainly lies close to the landmark28, landmark29 and landmark30 landmark points (as shown in Fig.1), which correspond to proportions of 15%, 63%, and 22% in the dataset, respectively.

|

| 5 |

+

|

| 6 |

+

During the inference process, we discover that as the upper-bound of the mask gets closer to the mouth (near landmark30), the audio features contribute more to lip movements. Conversely, as the upper-bound of the mask moves away from the mouth (near landmark28), the audio features contribute more to generating details of facial appearance. Hence, we define this characteristic as a parameter that can adjust the contribution of audio features to generating lip movements, which users can modify according to their specific needs in practical scenarios.

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

Fig.1. Facial landmarks

|

| 11 |

+

### Step 0.

|

| 12 |

+

Running with the default configuration to obtain the adjustable value range.

|

| 13 |

+

```

|

| 14 |

+

python -m scripts.inference --inference_config configs/inference/test.yaml

|

| 15 |

+

```

|

| 16 |

+

```

|

| 17 |

+

********************************************bbox_shift parameter adjustment**********************************************************

|

| 18 |

+

Total frame:「838」 Manually adjust range : [ -9~9 ] , the current value: 0

|

| 19 |

+

*************************************************************************************************************************************

|

| 20 |

+

```

|

| 21 |

+

### Step 1.

|

| 22 |

+

Re-run the script within the above range.

|

| 23 |

+

```

|

| 24 |

+

python -m scripts.inference --inference_config configs/inference/test.yaml --bbox_shift xx # where xx is in [-9, 9].

|

| 25 |

+

```

|

| 26 |

+

In our experimental observations, we found that positive values (moving towards the lower half) generally increase mouth openness, while negative values (moving towards the upper half) generally decrease mouth openness. However, it's important to note that this is not an absolute rule, and users may need to adjust the parameter according to their specific needs and the desired effect.
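The boundary rules in the first paragraph of `BBOX_SHIFT.md` are sketched below. The sketch assumes boxes are `(x1, y1, x2, y2)` in pixel coordinates with y growing downward, and that landmarks are `(x, y)` pairs.

How `bbox_shift` is applied is an assumption: it is modeled as a plain pixel offset on the top edge. That matches the stated direction (positive values move the boundary toward the mouth). The actual preprocessing code is not shown in this view and may compute the shift differently, for example relative to specific landmarks. `segmentation_box` is a hypothetical name.

```python
from typing import Sequence, Tuple

# (x1, y1, x2, y2) in pixels; y grows downward as in image arrays.
Box = Tuple[int, int, int, int]


def segmentation_box(face_bbox: Box, landmarks: Sequence[Tuple[int, int]], bbox_shift: int = 0) -> Box:
    """Head segmentation box built from a face bbox and facial landmarks (sketch).

    top:        upper edge of the face-detection bbox, offset by bbox_shift (assumed pixel offset)
    bottom:     largest landmark y
    left/right: smallest / largest landmark x
    """
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    top = face_bbox[1] + bbox_shift  # positive values move the edge down, toward the mouth
    return (min(xs), top, max(xs), max(ys))


face_bbox = (40, 30, 160, 190)
landmarks = [(50, 90), (150, 95), (100, 140), (70, 175), (130, 180)]
print(segmentation_box(face_bbox, landmarks))                # (50, 30, 150, 180)
print(segmentation_box(face_bbox, landmarks, bbox_shift=7))  # (50, 37, 150, 180)
```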

assets/demo/man/man.png

ADDED

assets/demo/monalisa/monalisa.png

ADDED

assets/demo/musk/musk.png

ADDED

assets/demo/sit/sit.jpeg

ADDED

assets/demo/sun1/sun.png

ADDED

assets/demo/sun2/sun.png

ADDED

assets/demo/video1/video1.png

ADDED

assets/demo/yongen/yongen.jpeg

ADDED

assets/figs/landmark_ref.png

ADDED

assets/figs/musetalk_arc.jpg

ADDED

configs/inference/test.yaml

ADDED

@@ -0,0 +1,10 @@
+task_0:
+  video_path: "data/video/yongen.mp4"
+  audio_path: "data/audio/yongen.wav"
+
+task_1:
+  video_path: "data/video/sun.mp4"
+  audio_path: "data/audio/sun.wav"
+  bbox_shift: -7
+
+
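`test.yaml` defines two tasks, and only `task_1` sets `bbox_shift`. The sketch below flattens the file into per-task tuples under two assumptions. First, the file has already been loaded into a plain dict, as a YAML loader or OmegaConf's `to_container` would produce. Second, a task without `bbox_shift` falls back to 0, the value the Step 0 log in `BBOX_SHIFT.md` reports for the default run.

How `scripts.inference` actually resolves the value, including any `--bbox_shift` override, is not shown in this view. `iter_tasks` is a hypothetical helper.

```python
from typing import Any, Dict, Iterator, Tuple

# configs/inference/test.yaml as the plain mapping a YAML loader would return for it.
TEST_CONFIG: Dict[str, Dict[str, Any]] = {
    "task_0": {"video_path": "data/video/yongen.mp4", "audio_path": "data/audio/yongen.wav"},
    "task_1": {"video_path": "data/video/sun.mp4", "audio_path": "data/audio/sun.wav", "bbox_shift": -7},
}


def iter_tasks(config: Dict[str, Dict[str, Any]], default_shift: int = 0) -> Iterator[Tuple[str, str, str, int]]:
    """Yield (task name, video path, audio path, bbox_shift), using default_shift when absent."""
    for name, task in config.items():
        yield name, task["video_path"], task["audio_path"], int(task.get("bbox_shift", default_shift))


for row in iter_tasks(TEST_CONFIG):
    print(row)
# ('task_0', 'data/video/yongen.mp4', 'data/audio/yongen.wav', 0)
# ('task_1', 'data/video/sun.mp4', 'data/audio/sun.wav', -7)
```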

data/audio/sun.wav

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:3f163b0fe2f278504c15cab74cd37b879652749e2a8a69f7848ad32c847d8007
+size 1983572

data/audio/yongen.wav

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:2b775c363c968428d1d6df4456495e4c11f00e3204d3082e51caff415ec0e2ba
+size 1536078

data/video/sun.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:9f240982090f4255a7589e3cd67b4219be7820f9eb9a7461fc915eb5f0c8e075
+size 2217973

data/video/yongen.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1effa976d410571cd185554779d6d43a6ba636e0e3401385db1d607daa46441f
+size 1870923

|
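The audio and video samples above are committed as Git LFS pointer files rather than raw binaries. Each pointer records the spec version, the SHA-256 object ID, and the object size in bytes, one `key value` pair per line. Below is a small parser following that line format. The function name `parse_lfs_pointer` is illustrative, not part of any Git LFS tooling.

```python
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, object]:
    """Parse a Git LFS pointer file into its version, oid digest, and size."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>", separated by a single space.
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    algo, _, digest = fields.get("oid", "").partition(":")
    if algo != "sha256" or len(digest) != 64:
        raise ValueError("unexpected oid format")
    return {"version": fields["version"], "oid": digest, "size": int(fields["size"])}


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:3f163b0fe2f278504c15cab74cd37b879652749e2a8a69f7848ad32c847d8007
size 1983572
"""
info = parse_lfs_pointer(pointer)
print(info["size"])  # size of data/audio/sun.wav in bytes
```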

entrypoint.sh

ADDED

@@ -0,0 +1,11 @@

```bash
#!/bin/bash

echo "entrypoint.sh"
whoami
which python
echo "pythonpath" $PYTHONPATH

source /opt/conda/etc/profile.d/conda.sh
conda activate musev
which python
python app.py
```

install_ffmpeg.sh

ADDED

@@ -0,0 +1,70 @@

```bash
FFMPEG_PREFIX="$(echo $HOME/local)"
FFMPEG_SOURCES="$(echo $HOME/ffmpeg_sources)"
FFMPEG_BINDIR="$(echo $FFMPEG_PREFIX/bin)"
PATH=$FFMPEG_BINDIR:$PATH

mkdir -p $FFMPEG_PREFIX
mkdir -p $FFMPEG_SOURCES

cd $FFMPEG_SOURCES
wget http://www.tortall.net/projects/yasm/releases/yasm-1.2.0.tar.gz
tar xzvf yasm-1.2.0.tar.gz
cd yasm-1.2.0
./configure --prefix="$FFMPEG_PREFIX" --bindir="$FFMPEG_BINDIR"
make
make install
make distclean

cd $FFMPEG_SOURCES
wget http://download.videolan.org/pub/x264/snapshots/last_x264.tar.bz2
tar xjvf last_x264.tar.bz2
cd x264-snapshot*
./configure --prefix="$FFMPEG_PREFIX" --bindir="$FFMPEG_BINDIR" --enable-static
make
make install
make distclean

cd $FFMPEG_SOURCES
wget -O fdk-aac.tar.gz https://github.com/mstorsjo/fdk-aac/tarball/master
tar xzvf fdk-aac.tar.gz
cd mstorsjo-fdk-aac*
autoreconf -fiv
./configure --prefix="$FFMPEG_PREFIX" --disable-shared
make
make install
make distclean

cd $FFMPEG_SOURCES
wget http://webm.googlecode.com/files/libvpx-v1.3.0.tar.bz2
tar xjvf libvpx-v1.3.0.tar.bz2
cd libvpx-v1.3.0
./configure --prefix="$FFMPEG_PREFIX" --disable-examples
make
make install
make clean

cd $FFMPEG_SOURCES
wget https://github.com/FFmpeg/FFmpeg/tarball/master -O ffmpeg.tar.gz
rm -rf FFmpeg-FFmpeg*
tar -zxvf ffmpeg.tar.gz
cd FFmpeg-FFmpeg*
PKG_CONFIG_PATH="$FFMPEG_PREFIX/lib/pkgconfig"
export PKG_CONFIG_PATH
./configure --prefix="$FFMPEG_PREFIX" --extra-cflags="-I$FFMPEG_PREFIX/include" \
  --extra-ldflags="-L$FFMPEG_PREFIX/lib" --bindir="$FFMPEG_BINDIR" --extra-libs="-ldl" --enable-gpl \
  --enable-libass --enable-libfdk-aac --enable-libmp3lame --enable-libtheora \
  --enable-libvorbis --enable-libvpx --enable-libx264 --enable-nonfree \
  --enable-libopencore-amrnb --enable-libopencore-amrwb --enable-version3 --enable-libvo-amrwbenc
make
make install
make distclean
hash -r
```
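The script installs the binary into `$HOME/local/bin`. You can confirm that the build picked up the optional libraries enabled above, such as `libx264` or `libfdk_aac`, by asking the binary for its encoder list. The sketch below does this. It assumes the output of `ffmpeg -hide_banner -encoders` lists each encoder name as a separate whitespace-delimited token. The helper name `has_encoder` is hypothetical.

```python
import os
import shutil
import subprocess


def has_encoder(name: str, ffmpeg: str = "ffmpeg") -> bool:
    """Return True if the given ffmpeg binary lists `name` among its encoders.

    Returns False when the binary cannot be found or fails to run.
    """
    exe = shutil.which(ffmpeg)
    if exe is None:
        return False
    try:
        result = subprocess.run(
            [exe, "-hide_banner", "-encoders"],
            capture_output=True, text=True, check=True, timeout=30,
        )
    except (OSError, subprocess.SubprocessError):
        return False
    # Encoder lines look like " V..... libx264  ...": the name is its own token.
    return any(name in line.split() for line in result.stdout.splitlines())


if __name__ == "__main__":
    # Prefer the binary installed by install_ffmpeg.sh, if it exists.
    local_ffmpeg = os.path.expanduser("~/local/bin/ffmpeg")
    binary = local_ffmpeg if os.path.exists(local_ffmpeg) else "ffmpeg"
    for enc in ("libx264", "libfdk_aac", "libvpx"):
        print(enc, has_encoder(enc, binary))
```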

musetalk/models/unet.py

ADDED

@@ -0,0 +1,47 @@

```python
import torch
import torch.nn as nn
import math
import json

from diffusers import UNet2DConditionModel
import sys
import time
import numpy as np
import os

class PositionalEncoding(nn.Module):
    def __init__(self, d_model=384, max_len=5000):
        super(PositionalEncoding, self).__init__()
        pe = torch.zeros(max_len, d_model)
        position = torch.arange(0, max_len, dtype=torch.float).unsqueeze(1)
        div_term = torch.exp(torch.arange(0, d_model, 2).float() * (-math.log(10000.0) / d_model))
        pe[:, 0::2] = torch.sin(position * div_term)
        pe[:, 1::2] = torch.cos(position * div_term)
        pe = pe.unsqueeze(0)
        self.register_buffer('pe', pe)

    def forward(self, x):
        b, seq_len, d_model = x.size()
        pe = self.pe[:, :seq_len, :]
        x = x + pe.to(x.device)
        return x

class UNet():
    def __init__(self,
                 unet_config,
                 model_path,
                 use_float16=False,
                 ):
        with open(unet_config, 'r') as f:
            unet_config = json.load(f)
        self.model = UNet2DConditionModel(**unet_config)
        self.pe = PositionalEncoding(d_model=384)
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        self.weights = torch.load(model_path) if torch.cuda.is_available() else torch.load(model_path, map_location=self.device)
        self.model.load_state_dict(self.weights)
        if use_float16:
            self.model = self.model.half()
        self.model.to(self.device)

if __name__ == "__main__":
    unet = UNet()
```
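`PositionalEncoding` builds the standard sinusoidal table. For position p and channel pair (2i, 2i+1), PE[p, 2i] = sin(p / 10000^(2i/d_model)) and PE[p, 2i+1] = cos(p / 10000^(2i/d_model)). `div_term` is exactly 10000^(-2i/d_model), computed in log space.

Below is a NumPy re-implementation of the same table, useful for checking shapes and values without PyTorch. It is a sketch, not code from the repository, and it assumes `d_model` is even.

```python
import math

import numpy as np


def sinusoidal_table(max_len: int, d_model: int) -> np.ndarray:
    """Return a (max_len, d_model) sinusoidal positional-encoding table.

    Mirrors PositionalEncoding.__init__ above, without the leading batch axis.
    """
    position = np.arange(max_len, dtype=np.float64)[:, None]  # (max_len, 1)
    div_term = np.exp(np.arange(0, d_model, 2) * (-math.log(10000.0) / d_model))
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(position * div_term)  # even channels
    pe[:, 1::2] = np.cos(position * div_term)  # odd channels
    return pe


pe = sinusoidal_table(max_len=50, d_model=384)
# forward() adds the first seq_len rows to a (batch, seq_len, 384) input by broadcasting.
x = np.zeros((2, 50, 384))
out = x + pe[None, :50, :]
print(out.shape)
```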

musetalk/models/vae.py

ADDED

@@ -0,0 +1,148 @@

```python
from diffusers import AutoencoderKL
import torch
import torchvision.transforms as transforms
import torch.nn.functional as F
import cv2
import numpy as np
from PIL import Image
import os

class VAE():
    """
    VAE (Variational Autoencoder) class for image processing.
    """

    def __init__(self, model_path="./models/sd-vae-ft-mse/", resized_img=256, use_float16=False):
        """
        Initialize the VAE instance.

        :param model_path: Path to the trained model.
        :param resized_img: The size to which images are resized.
        :param use_float16: Whether to use float16 precision.
        """
        self.model_path = model_path
        self.vae = AutoencoderKL.from_pretrained(self.model_path)

        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        self.vae.to(self.device)

        if use_float16:
            self.vae = self.vae.half()
            self._use_float16 = True
        else:
            self._use_float16 = False

        self.scaling_factor = self.vae.config.scaling_factor
        self.transform = transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
        self._resized_img = resized_img
        self._mask_tensor = self.get_mask_tensor()

    def get_mask_tensor(self):
        """
        Creates a mask tensor for image processing.
        :return: A mask tensor that is 1 on the upper half of the rows and 0 on the lower half.
        """
        mask_tensor = torch.zeros((self._resized_img,self._resized_img))
        mask_tensor[:self._resized_img//2,:] = 1
        mask_tensor[mask_tensor< 0.5] = 0
        mask_tensor[mask_tensor>= 0.5] = 1
        return mask_tensor

    def preprocess_img(self,img_name,half_mask=False):
        """
        Preprocess an image for the VAE.

        :param img_name: An image file path, or an already-loaded BGR image array
            (arrays are not resized here).
        :param half_mask: Whether to zero out the lower half of the image.
        :return: A preprocessed image tensor.
        """
        window = []
        if isinstance(img_name, str):
            window_fnames = [img_name]
            for fname in window_fnames:
                img = cv2.imread(fname)
                img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
                img = cv2.resize(img, (self._resized_img, self._resized_img),
                                 interpolation=cv2.INTER_LANCZOS4)
                window.append(img)
        else:
            img = cv2.cvtColor(img_name, cv2.COLOR_BGR2RGB)
            window.append(img)

        x = np.asarray(window) / 255.
        x = np.transpose(x, (3, 0, 1, 2))
        x = torch.squeeze(torch.FloatTensor(x))
        if half_mask:
            x = x * (self._mask_tensor>0.5)
        x = self.transform(x)

        x = x.unsqueeze(0) # [1, 3, 256, 256] torch tensor
        x = x.to(self.vae.device)

        return x

    def encode_latents(self,image):
        """
        Encode an image into latent variables.

        :param image: The image tensor to encode.
        :return: The encoded latent variables.
        """
        with torch.no_grad():
            init_latent_dist = self.vae.encode(image.to(self.vae.dtype)).latent_dist
        init_latents = self.scaling_factor * init_latent_dist.sample()
        return init_latents

    def decode_latents(self, latents):
        """
        Decode latent variables back into an image.
        :param latents: The latent variables to decode.
        :return: A NumPy array representing the decoded image.
        """
        latents = (1/ self.scaling_factor) * latents
        image = self.vae.decode(latents.to(self.vae.dtype)).sample
        image = (image / 2 + 0.5).clamp(0, 1)
        image = image.detach().cpu().permute(0, 2, 3, 1).float().numpy()
        image = (image * 255).round().astype("uint8")
        image = image[...,::-1] # RGB to BGR
        return image

    def get_latents_for_unet(self,img):
        """
        Prepare latent variables for a U-Net model.
        :param img: The image to process.
        :return: A concatenated tensor of latents for U-Net input.
        """

        ref_image = self.preprocess_img(img,half_mask=True) # [1, 3, 256, 256] RGB, torch tensor
        masked_latents = self.encode_latents(ref_image) # [1, 4, 32, 32], torch tensor
        ref_image = self.preprocess_img(img,half_mask=False) # [1, 3, 256, 256] RGB, torch tensor
        ref_latents = self.encode_latents(ref_image) # [1, 4, 32, 32], torch tensor
        latent_model_input = torch.cat([masked_latents, ref_latents], dim=1) # [1, 8, 32, 32]
        return latent_model_input

if __name__ == "__main__":
    vae_mode_path = "./models/sd-vae-ft-mse/"
    vae = VAE(model_path = vae_mode_path,use_float16=False)
    img_path = "./results/sun001_crop/00000.png"

    crop_imgs_path = "./results/sun001_crop/"
    latents_out_path = "./results/latents/"
    if not os.path.exists(latents_out_path):
        os.mkdir(latents_out_path)

    files = os.listdir(crop_imgs_path)
    files.sort()
    files = [file for file in files if file.split(".")[-1] == "png"]

    for file in files:
        index = file.split(".")[0]
        img_path = crop_imgs_path + file
        latents = vae.get_latents_for_unet(img_path)
        print(img_path,"latents",latents.size())
        #torch.save(latents,os.path.join(latents_out_path,index+".pt"))
        #reload_tensor = torch.load('tensor.pt')
        #print(reload_tensor.size())
```
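The value-range conversions in `preprocess_img` and `decode_latents` are inverses of each other:

* Preprocessing scales pixels from [0, 255] to [0, 1], then `Normalize(mean=0.5, std=0.5)` maps them to [-1, 1].
* Decoding applies `image / 2 + 0.5`, rescales to uint8, and flips RGB to BGR.
* The half mask is applied before normalization, so the masked lower half ends up at exactly -1.

The NumPy sketch below reproduces these steps on a synthetic image. It leaves out the resize and the VAE itself, and the helper names are illustrative.

```python
import numpy as np


def half_mask(size: int) -> np.ndarray:
    """1.0 on the upper half of the rows, 0.0 on the lower half (as in get_mask_tensor)."""
    mask = np.zeros((size, size))
    mask[: size // 2, :] = 1.0
    return mask


def preprocess(rgb_uint8: np.ndarray, use_half_mask: bool = False) -> np.ndarray:
    """HWC uint8 RGB image -> CHW float in [-1, 1], optionally half-masked."""
    x = rgb_uint8.astype(np.float64) / 255.0   # [0, 1]
    x = np.transpose(x, (2, 0, 1))             # HWC -> CHW
    if use_half_mask:
        x = x * half_mask(x.shape[1])          # zero the lower half
    return (x - 0.5) / 0.5                     # Normalize(mean=0.5, std=0.5)


def postprocess(x: np.ndarray) -> np.ndarray:
    """CHW float in [-1, 1] -> HWC uint8 BGR, mirroring decode_latents."""
    img = np.clip(x / 2 + 0.5, 0.0, 1.0)
    img = np.transpose(img, (1, 2, 0))
    img = np.round(img * 255).astype(np.uint8)
    return img[..., ::-1]                      # RGB -> BGR


rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
restored_bgr = postprocess(preprocess(rgb))
masked = preprocess(rgb, use_half_mask=True)
print(masked[:, 128:, :].min(), masked[:, 128:, :].max())  # both -1.0 for the masked rows
```

The latent shapes follow from the code's own comments: each 256x256 input encodes to a [1, 4, 32, 32] latent. `get_latents_for_unet` concatenates the masked and reference latents on the channel axis, giving [1, 8, 32, 32].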

musetalk/utils/__init__.py

ADDED

@@ -0,0 +1,5 @@

```python
import sys
from os.path import abspath, dirname
current_dir = dirname(abspath(__file__))
parent_dir = dirname(current_dir)
sys.path.append(parent_dir+'/utils')
```
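Because `parent_dir` is the `musetalk/` package directory, `parent_dir + '/utils'` resolves to this same `utils/` directory. Importing `musetalk.utils` therefore puts its subpackages, such as `face_detection` and `face_parsing`, on `sys.path` as top-level modules. The path arithmetic can be checked with `posixpath` against a hypothetical checkout location:

```python
import posixpath


def utils_path_for(init_file: str) -> str:
    """Reproduce the path that musetalk/utils/__init__.py appends to sys.path."""
    current_dir = posixpath.dirname(posixpath.abspath(init_file))
    parent_dir = posixpath.dirname(current_dir)
    return parent_dir + "/utils"


# Hypothetical install location, used only to show the arithmetic.
init_file = "/workspace/MuseTalk/musetalk/utils/__init__.py"
print(utils_path_for(init_file))  # /workspace/MuseTalk/musetalk/utils
```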

musetalk/utils/blending.py

ADDED

@@ -0,0 +1,59 @@

from PIL import Image
import numpy as np