Upload 9 files

- app.py +203 -0

- examples/1.jpg +0 -0

- examples/2.jpg +0 -0

- examples/3.jpg +0 -0

- examples/4.jpg +0 -0

- examples/5.jpg +0 -0

- examples/6.jpg +0 -0

- examples/7.jpg +0 -0

- examples/8.jpg +0 -0

app.py

ADDED

@@ -0,0 +1,203 @@

+import os
+import gradio as gr
+import requests
+import json
+from PIL import Image
+
+def get_attributes(json):
+    liveness = "GENUINE" if json.get('liveness') >= 0.5 else "FAKE"
+    attr = json.get('attribute')
+    age = attr.get('age')
+    gender = attr.get('gender')
+    emotion = attr.get('emotion')
+    ethnicity = attr.get('ethnicity')
+
+    mask = [attr.get('face_mask')]
+    if attr.get('glasses') == 'USUAL':
+        mask.append('GLASSES')
+    if attr.get('glasses') == 'DARK':
+        mask.append('SUNGLASSES')
+
+    eye = []
+    if attr.get('eye_left') >= 0.3:
+        eye.append('LEFT')
+    if attr.get('eye_right') >= 0.3:
+        eye.append('RIGHT')
+    facehair = attr.get('facial_hair')
+    haircolor = attr.get('hair_color')
+    hairtype = attr.get('hair_type')
+    headwear = attr.get('headwear')
+
+    activity = []
+    if attr.get('food_consumption') >= 0.5:
+        activity.append('EATING')
+    if attr.get('phone_recording') >= 0.5:
+        activity.append('PHONE_RECORDING')
+    if attr.get('phone_use') >= 0.5:
+        activity.append('PHONE_USE')
+    if attr.get('seatbelt') >= 0.5:
+        activity.append('SEATBELT')
+    if attr.get('smoking') >= 0.5:
+        activity.append('SMOKING')
+
+    pitch = attr.get('pitch')
+    roll = attr.get('roll')
+    yaw = attr.get('yaw')
+    quality = attr.get('quality')
+    return liveness, age, gender, emotion, ethnicity, mask, eye, facehair, haircolor, hairtype, headwear, activity, pitch, roll, yaw, quality
+

+def compare_face(frame1, frame2):
+    url = "https://recognito.p.rapidapi.com/api/face"
+    try:
+        files = {'image1': open(frame1, 'rb'), 'image2': open(frame2, 'rb')}
+        headers = {"X-RapidAPI-Key": os.environ.get("API_KEY")}
+
+        r = requests.post(url=url, files=files, headers=headers)
+    except Exception:
+        raise gr.Error("Please select image files!")
+
+    faces = None
+
+    try:
+        image1 = Image.open(frame1)
+        image2 = Image.open(frame2)
+
+        face1 = Image.new('RGBA',(150, 150), (80,80,80,0))
+        face2 = Image.new('RGBA',(150, 150), (80,80,80,0))
+
+        liveness1, age1, gender1, emotion1, ethnicity1, mask1, eye1, facehair1, haircolor1, hairtype1, headwear1, activity1, pitch1, roll1, yaw1, quality1 = [None] * 16
+        liveness2, age2, gender2, emotion2, ethnicity2, mask2, eye2, facehair2, haircolor2, hairtype2, headwear2, activity2, pitch2, roll2, yaw2, quality2 = [None] * 16
+        res1 = r.json().get('image1')
+
+        if res1 is not None and res1:
+            face = res1.get('detection')
+            x1 = face.get('x')
+            y1 = face.get('y')
+            x2 = x1 + face.get('w')
+            y2 = y1 + face.get('h')
+            if x1 < 0:
+                x1 = 0
+            if y1 < 0:
+                y1 = 0
+            if x2 >= image1.width:
+                x2 = image1.width - 1
+            if y2 >= image1.height:
+                y2 = image1.height - 1
+
+            face1 = image1.crop((x1, y1, x2, y2))
+            face_image_ratio = face1.width / float(face1.height)
+            resized_w = int(face_image_ratio * 150)
+            resized_h = 150
+
+            face1 = face1.resize((int(resized_w), int(resized_h)))
+            liveness1, age1, gender1, emotion1, ethnicity1, mask1, eye1, facehair1, haircolor1, hairtype1, headwear1, activity1, pitch1, roll1, yaw1, quality1 = get_attributes(res1)
+
+        res2 = r.json().get('image2')
+        if res2 is not None and res2:
+            face = res2.get('detection')
+            x1 = face.get('x')
+            y1 = face.get('y')
+            x2 = x1 + face.get('w')
+            y2 = y1 + face.get('h')
+
+            if x1 < 0:
+                x1 = 0
+            if y1 < 0:
+                y1 = 0
+            if x2 >= image2.width:
+                x2 = image2.width - 1
+            if y2 >= image2.height:
+                y2 = image2.height - 1
+
+            face2 = image2.crop((x1, y1, x2, y2))
+            face_image_ratio = face2.width / float(face2.height)
+            resized_w = int(face_image_ratio * 150)
+            resized_h = 150
+
+            face2 = face2.resize((int(resized_w), int(resized_h)))
+            liveness2, age2, gender2, emotion2, ethnicity2, mask2, eye2, facehair2, haircolor2, hairtype2, headwear2, activity2, pitch2, roll2, yaw2, quality2 = get_attributes(res2)
+    except Exception:
+        pass
+
+    matching_result = ""
+    if face1 is not None and face2 is not None:
+        matching_score = r.json().get('matching_score')
+        if matching_score is not None:
+            matching_result = """<br/><br/><br/><h1 style="text-align: center;color: #05ee3c;">SAME<br/>PERSON</h1>""" if matching_score >= 0.7 else """<br/><br/><br/><h1 style="text-align: center;color: red;">DIFFERENT<br/>PERSON</h1>"""
+
+    return [r.json(), [face1, face2], matching_result,
+            liveness1, age1, gender1, emotion1, ethnicity1, mask1, eye1, facehair1, haircolor1, hairtype1, headwear1, activity1, pitch1, roll1, yaw1, quality1,
+            liveness2, age2, gender2, emotion2, ethnicity2, mask2, eye2, facehair2, haircolor2, hairtype2, headwear2, activity2, pitch2, roll2, yaw2, quality2]
+

| 132 |

+

with gr.Blocks() as demo:

|

| 133 |

+

gr.Markdown(

|

| 134 |

+

"""

|

| 135 |

+

# Recognito Face Analysis

|

| 136 |

+

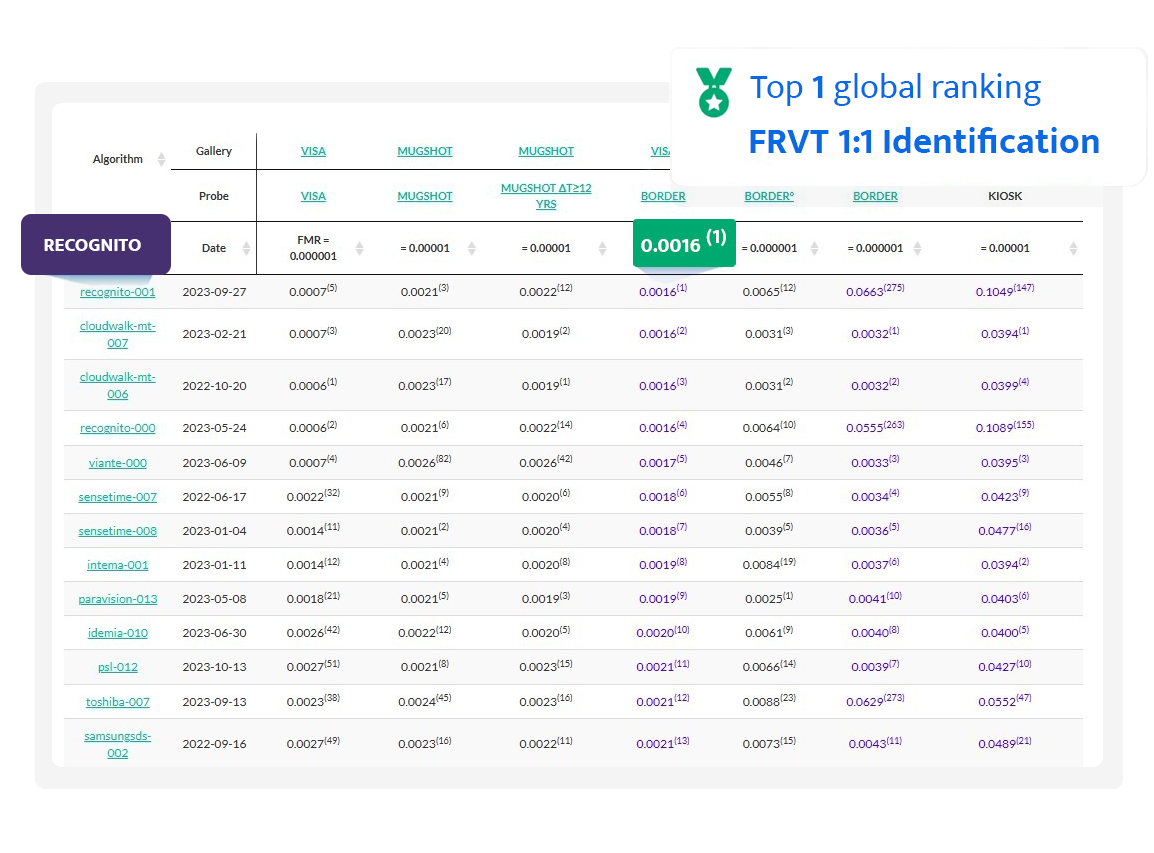

NIST FRVT Top #1 Face Recognition Algorithm Developer<br/>

|

| 137 |

+

|

| 138 |

+

Contact us at https://recognito.vision<br/>

|

| 139 |

+

"""

|

| 140 |

+

)

|

| 141 |

+

with gr.Row():

|

| 142 |

+

with gr.Column(scale=1):

|

| 143 |

+

compare_face_input1 = gr.Image(label="Image1", type='filepath', height=270)

|

| 144 |

+

gr.Examples(['examples/1.jpg', 'examples/2.jpg', 'examples/3.jpg', 'examples/4.jpg'],

|

| 145 |

+

inputs=compare_face_input1)

|

| 146 |

+

compare_face_input2 = gr.Image(label="Image2", type='filepath', height=270)

|

| 147 |

+

gr.Examples(['examples/5.jpg', 'examples/6.jpg', 'examples/7.jpg', 'examples/8.jpg'],

|

| 148 |

+

inputs=compare_face_input2)

|

| 149 |

+

compare_face_button = gr.Button("Face Analysis & Verification", variant="primary", size="lg")

|

| 150 |

+

|

| 151 |

+

with gr.Column(scale=2):

|

| 152 |

+

with gr.Row():

|

| 153 |

+

compare_face_output = gr.Gallery(label="Faces", height=230, columns=[2], rows=[1])

|

| 154 |

+

with gr.Column(variant="panel"):

|

| 155 |

+

compare_result = gr.Markdown("")

|

| 156 |

+

|

| 157 |

+

with gr.Row():

|

| 158 |

+

with gr.Column(variant="panel"):

|

| 159 |

+

gr.Markdown("<b>Image 1<b/>")

|

| 160 |

+

liveness1 = gr.CheckboxGroup(["GENUINE", "FAKE"], label="Liveness")

|

| 161 |

+

age1 = gr.Number(0, label="Age")

|

| 162 |

+

gender1 = gr.CheckboxGroup(["MALE", "FEMALE"], label="Gender")

|

| 163 |

+

emotion1 = gr.CheckboxGroup(["HAPPINESS", "ANGER", "FEAR", "NEUTRAL", "SADNESS", "SURPRISE"], label="Emotion")

|

| 164 |

+

ethnicity1 = gr.CheckboxGroup(["ASIAN", "BLACK", "CAUCASIAN", "EAST_INDIAN"], label="Ethnicity")

|

| 165 |

+

mask1 = gr.CheckboxGroup(["LOWER_FACE_MASK", "FULL_FACE_MASK", "OTHER_MASK", "GLASSES", "SUNGLASSES"], label="Mask & Glasses")

|

| 166 |

+

eye1 = gr.CheckboxGroup(["LEFT", "RIGHT"], label="Eye Open")

|

| 167 |

+

facehair1 = gr.CheckboxGroup(["BEARD", "BRISTLE", "MUSTACHE", "SHAVED"], label="Facial Hair")

|

| 168 |

+

haircolor1 = gr.CheckboxGroup(["BLACK", "BLOND", "BROWN"], label="Hair Color")

|

| 169 |

+

hairtype1 = gr.CheckboxGroup(["BALD", "SHORT", "MEDIUM", "LONG"], label="Hair Type")

|

| 170 |

+

headwear1 = gr.CheckboxGroup(["B_CAP", "CAP", "HAT", "HELMET", "HOOD"], label="Head Wear")

|

| 171 |

+

activity1 = gr.CheckboxGroup(["EATING", "PHONE_RECORDING", "PHONE_USE", "SMOKING", "SEATBELT"], label="Activity")

|

| 172 |

+

with gr.Row():

|

| 173 |

+

pitch1 = gr.Number(0, label="Pitch")

|

| 174 |

+

roll1 = gr.Number(0, label="Roll")

|

| 175 |

+

yaw1 = gr.Number(0, label="Yaw")

|

| 176 |

+

quality1 = gr.Number(0, label="Quality")

|

| 177 |

+

with gr.Column(variant="panel"):

|

| 178 |

+

gr.Markdown("<b>Image 2<b/>")

|

| 179 |

+

liveness2 = gr.CheckboxGroup(["GENUINE", "FAKE"], label="Liveness")

|

| 180 |

+

age2 = gr.Number(0, label="Age")

|

| 181 |

+

gender2 = gr.CheckboxGroup(["MALE", "FEMALE"], label="Gender")

|

| 182 |

+

emotion2 = gr.CheckboxGroup(["HAPPINESS", "ANGER", "FEAR", "NEUTRAL", "SADNESS", "SURPRISE"], label="Emotion")

|

| 183 |

+

ethnicity2 = gr.CheckboxGroup(["ASIAN", "BLACK", "CAUCASIAN", "EAST_INDIAN"], label="Ethnicity")

|

| 184 |

+

mask2 = gr.CheckboxGroup(["LOWER_FACE_MASK", "FULL_FACE_MASK", "OTHER_MASK", "GLASSES", "SUNGLASSES"], label="Mask & Glasses")

|

| 185 |

+

eye2 = gr.CheckboxGroup(["LEFT", "RIGHT"], label="Eye Open")

|

| 186 |

+

facehair2 = gr.CheckboxGroup(["BEARD", "BRISTLE", "MUSTACHE", "SHAVED"], label="Facial Hair")

|

| 187 |

+

haircolor2 = gr.CheckboxGroup(["BLACK", "BLOND", "BROWN"], label="Hair Color")

|

| 188 |

+

hairtype2 = gr.CheckboxGroup(["BALD", "SHORT", "MEDIUM", "LONG"], label="Hair Type")

|

| 189 |

+

headwear2 = gr.CheckboxGroup(["B_CAP", "CAP", "HAT", "HELMET", "HOOD"], label="Head Wear")

|

| 190 |

+

activity2 = gr.CheckboxGroup(["EATING", "PHONE_RECORDING", "PHONE_USE", "SMOKING", "SEATBELT"], label="Activity")

|

| 191 |

+

with gr.Row():

|

| 192 |

+

pitch2 = gr.Number(0, label="Pitch")

|

| 193 |

+

roll2 = gr.Number(0, label="Roll")

|

| 194 |

+

yaw2 = gr.Number(0, label="Yaw")

|

| 195 |

+

quality2 = gr.Number(0, label="Quality")

|

| 196 |

+

|

| 197 |

+

compare_result_output = gr.JSON(label='Result', visible=False)

|

| 198 |

+

|

| 199 |

+

compare_face_button.click(compare_face, inputs=[compare_face_input1, compare_face_input2], outputs=[compare_result_output, compare_face_output, compare_result,

|

| 200 |

+

liveness1, age1, gender1, emotion1, ethnicity1, mask1, eye1, facehair1, haircolor1, hairtype1, headwear1, activity1, pitch1, roll1, yaw1, quality1,

|

| 201 |

+

liveness2, age2, gender2, emotion2, ethnicity2, mask2, eye2, facehair2, haircolor2, hairtype2, headwear2, activity2, pitch2, roll2, yaw2, quality2])

|

| 202 |

+

|

| 203 |

+

demo.launch(server_name="0.0.0.0", server_port=7860, show_api=False)

|

examples/1.jpg

ADDED

examples/2.jpg

ADDED

examples/3.jpg

ADDED

examples/4.jpg

ADDED

examples/5.jpg

ADDED

examples/6.jpg

ADDED

examples/7.jpg

ADDED

examples/8.jpg

ADDED