adding examples

Browse files- .gitattributes +1 -0

- README.md +5 -13

- app.py +77 -68

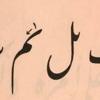

- images/Farsi_1.jpg +0 -0

- images/Farsi_2.jpg +0 -0

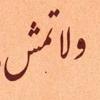

- images/Ruqaa_1.jpg +0 -0

- images/Ruqaa_2.jpg +0 -0

- images/Ruqaa_3.jpg +0 -0

.gitattributes

CHANGED

```diff
@@ -26,3 +26,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zstandard filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+weights.h5 filter=lfs diff=lfs merge=lfs -text
```
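With this rule, `weights.h5` is stored through Git LFS. If the repository is cloned without Git LFS installed, the working copy holds a small text pointer instead of the HDF5 file, and `model.load_weights('weights.h5')` is likely to fail. The sketch below tells the two apart by their first bytes: LFS pointer files begin with the line `version https://git-lfs.github.com/spec/v1`, and HDF5 files written by h5py normally begin with the 8-byte HDF5 signature. The `weights_status` helper is hypothetical and not part of this commit.

```python
from pathlib import Path

# Git LFS pointer files are short text files whose first line is this spec URL.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"
# 8-byte signature at the start of an HDF5 file (the format behind Keras .h5 weights).
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def weights_status(path="weights.h5"):
    """Return 'missing', 'lfs-pointer', 'hdf5', or 'unknown' for a weights file."""
    p = Path(path)
    if not p.is_file():
        return "missing"
    with p.open("rb") as f:
        head = f.read(len(LFS_POINTER_PREFIX))  # long enough for both prefixes
    if head.startswith(LFS_POINTER_PREFIX):
        return "lfs-pointer"  # run `git lfs pull` to fetch the real file
    if head.startswith(HDF5_SIGNATURE):
        return "hdf5"
    return "unknown"


if __name__ == "__main__":
    print(weights_status())
```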

README.md

CHANGED

```diff
@@ -1,13 +1,5 @@
-
-
-
-
-
-sdk: gradio
-sdk_version: 2.9.1
-app_file: app.py
-pinned: false
-license: mit
----
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces#reference
+The weights of the model aren't here, download them first and put them in the same directory as `acsr.py`
+
+```bash
+$ wget 'https://raw.githubusercontent.com/mhmoodlan/arabic-font-classification/master/codebase/code/font_classifier/weights/FontModel_RuFaDataset_cnn_weights(4).h5' -O weights.h5
+```
```
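The README's `wget` step can also be done from Python with `urllib.request.urlretrieve`, which helps when no shell is available. This is a minimal sketch, not part of the commit: `ensure_weights` and its `fetch` parameter are hypothetical names, and the URL is copied from the README. Note that `app.py` opens `weights.h5` by a relative path, so it is resolved against the current working directory when the app starts.

```python
import os
import urllib.request

# URL copied verbatim from the README's wget command.
WEIGHTS_URL = (
    "https://raw.githubusercontent.com/mhmoodlan/arabic-font-classification/"
    "master/codebase/code/font_classifier/weights/"
    "FontModel_RuFaDataset_cnn_weights(4).h5"
)


def ensure_weights(path="weights.h5", url=WEIGHTS_URL, fetch=urllib.request.urlretrieve):
    """Download the weights to `path` unless a file already exists there; return `path`."""
    if not os.path.isfile(path):
        fetch(url, path)  # urlretrieve(url, filename) saves the response body to filename
    return path
```

Calling `ensure_weights()` before `model.load_weights('weights.h5')` would skip the download whenever the file is already present.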

app.py

CHANGED

```diff
@@ -1,68 +1,77 @@
-
-# %%
-import gradio as gr
-import numpy as np
-# import random as rn
-# import os
-import tensorflow as tf
-import cv2
-
-
-
-
-#%%
-def parse_image(image):
-    image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
-    image = cv2.resize(image, (100, 100))
-    image = image.astype(np.float32)
-    image = image / 255.0
-    image = np.expand_dims(image, axis=0)
-    image = np.expand_dims(image, axis=-1)
-    return image
-
-#%%
-
-def cnn(input_shape, output_shape):
-    num_classes = output_shape[0]
-    dropout_seed = 708090
-    kernel_seed = 42
-
-    model = tf.keras.models.Sequential([
-        tf.keras.layers.Conv2D(16, 3, activation='relu', input_shape=input_shape, kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
-        tf.keras.layers.MaxPooling2D(),
-        tf.keras.layers.Dropout(0.1, seed=dropout_seed),
-        tf.keras.layers.Conv2D(32, 5, activation='relu', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
-        tf.keras.layers.MaxPooling2D(),
-        tf.keras.layers.Dropout(0.1, seed=dropout_seed),
-        tf.keras.layers.Conv2D(64, 10, activation='relu', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
-        tf.keras.layers.MaxPooling2D(),
-        tf.keras.layers.Dropout(0.1, seed=dropout_seed),
-        tf.keras.layers.Flatten(),
-        tf.keras.layers.Dense(128, activation='relu', kernel_regularizer='l2', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
-        tf.keras.layers.Dropout(0.2, seed=dropout_seed),
-        tf.keras.layers.Dense(16, activation='relu', kernel_regularizer='l2', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
-        tf.keras.layers.Dropout(0.2, seed=dropout_seed),
-        tf.keras.layers.Dense(num_classes, activation='sigmoid', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed))
-    ])
-
-    return model
-
-#%%
-model = cnn((100, 100, 1), (1,))
-model.compile(loss=tf.keras.losses.BinaryCrossentropy(from_logits=False), optimizer='Adam', metrics='accuracy')
-
-model.load_weights('weights.h5')
-
-#%%
-def segment(image):
-    image = parse_image(image)
-    # print(image.shape)
-    output = model.predict(image)
-    # print(output)
-    labels = {
-        "farsi" : 1-float(output),
-        "ruqaa" : float(output)
-    }
-    return labels
-
-iface = gr.Interface(fn=segment,
+
+# %%
+import gradio as gr
+import numpy as np
+# import random as rn
+# import os
+import tensorflow as tf
+import cv2
+
+tf.config.experimental.set_visible_devices([], 'GPU')
+
+
+#%%
+def parse_image(image):
+    image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
+    image = cv2.resize(image, (100, 100))
+    image = image.astype(np.float32)
+    image = image / 255.0
+    image = np.expand_dims(image, axis=0)
+    image = np.expand_dims(image, axis=-1)
+    return image
+
+#%%
+
+def cnn(input_shape, output_shape):
+    num_classes = output_shape[0]
+    dropout_seed = 708090
+    kernel_seed = 42
+
+    model = tf.keras.models.Sequential([
+        tf.keras.layers.Conv2D(16, 3, activation='relu', input_shape=input_shape, kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
+        tf.keras.layers.MaxPooling2D(),
+        tf.keras.layers.Dropout(0.1, seed=dropout_seed),
+        tf.keras.layers.Conv2D(32, 5, activation='relu', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
+        tf.keras.layers.MaxPooling2D(),
+        tf.keras.layers.Dropout(0.1, seed=dropout_seed),
+        tf.keras.layers.Conv2D(64, 10, activation='relu', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
+        tf.keras.layers.MaxPooling2D(),
+        tf.keras.layers.Dropout(0.1, seed=dropout_seed),
+        tf.keras.layers.Flatten(),
+        tf.keras.layers.Dense(128, activation='relu', kernel_regularizer='l2', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
+        tf.keras.layers.Dropout(0.2, seed=dropout_seed),
+        tf.keras.layers.Dense(16, activation='relu', kernel_regularizer='l2', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed)),
+        tf.keras.layers.Dropout(0.2, seed=dropout_seed),
+        tf.keras.layers.Dense(num_classes, activation='sigmoid', kernel_initializer=tf.keras.initializers.GlorotUniform(seed=kernel_seed))
+    ])
+
+    return model
+
+#%%
+model = cnn((100, 100, 1), (1,))
+model.compile(loss=tf.keras.losses.BinaryCrossentropy(from_logits=False), optimizer='Adam', metrics='accuracy')
+
+model.load_weights('weights.h5')
+
+#%%
+def segment(image):
+    image = parse_image(image)
+    # print(image.shape)
+    output = model.predict(image)
+    # print(output)
+    labels = {
+        "farsi" : 1-float(output),
+        "ruqaa" : float(output)
+    }
+    return labels
+
+iface = gr.Interface(fn=segment,
+                     inputs="image",
+                     outputs="label",
+                     examples=[["images/Farsi_1.jpg"],
+                               ["images/Farsi_2.jpg"],
+                               ["images/Ruqaa_1.jpg"],
+                               ["images/Ruqaa_2.jpg"],
+                               ["images/Ruqaa_3.jpg"],
+                     ]).launch()
+# %%
```
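`parse_image` turns the RGB array from Gradio's `image` input (a NumPy array by default) into the `(1, 100, 100, 1)` float tensor the network expects: grayscale, 100×100, scaled to [0, 1], then a batch axis and a channel axis. The NumPy-only sketch below mirrors those steps so the shapes can be checked without OpenCV. It is an approximation, not the app's code: the grayscale step uses the BT.601 weights OpenCV documents for `COLOR_RGB2GRAY`, but the resize is nearest-neighbour, whereas `cv2.resize` defaults to bilinear interpolation, so pixel values will differ slightly. `parse_image_np` and its helpers are hypothetical names.

```python
import numpy as np


def rgb_to_gray(image):
    """BT.601 luma, the weighting OpenCV documents for COLOR_RGB2GRAY."""
    r, g, b = image[..., 0], image[..., 1], image[..., 2]
    return 0.299 * r + 0.587 * g + 0.114 * b


def resize_nearest(image, size=(100, 100)):
    """Nearest-neighbour resize; `size` is (width, height) like cv2.resize's dsize."""
    width, height = size
    rows = np.arange(height) * image.shape[0] // height  # source row per output row
    cols = np.arange(width) * image.shape[1] // width    # source column per output column
    return image[rows[:, None], cols[None, :]]


def parse_image_np(image):
    """Approximate parse_image: gray, 100x100, float32 in [0, 1], shape (1, 100, 100, 1)."""
    gray = rgb_to_gray(image.astype(np.float32))
    small = resize_nearest(gray, (100, 100)) / 255.0
    return small[np.newaxis, :, :, np.newaxis].astype(np.float32)  # add batch and channel axes
```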
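The `cnn` stack relies on Keras defaults for convolution padding (`'valid'`, stride 1) and for `MaxPooling2D` (`pool_size=2`, `strides=2`), so the feature-map sizes can be worked out by hand: 100 → 98 → 49 after the first block, 49 → 45 → 22 after the second, and 22 → 13 → 6 after the third, giving a flattened vector of 6 × 6 × 64 = 2304 values. The first `Dense` layer's weight matrix is sized from that number, which is why `load_weights` only lines up when the input really is 100×100×1. The hypothetical `summarize` helper below repeats this arithmetic and also counts parameters (Flatten and Dropout add none); its total should agree with `model.summary()`.

```python
def conv_out(n, kernel):
    """Spatial size after Conv2D with stride 1 and padding='valid'."""
    return n - kernel + 1


def pool_out(n, pool=2):
    """Spatial size after MaxPooling2D with pool_size=strides=pool and padding='valid'."""
    return (n - pool) // pool + 1


def summarize(side=100, channels=1):
    """Walk the cnn() layer stack from app.py; return (flatten_size, total_params)."""
    params = 0
    for filters, kernel in [(16, 3), (32, 5), (64, 10)]:
        params += kernel * kernel * channels * filters + filters  # kernel weights + biases
        side = pool_out(conv_out(side, kernel))
        channels = filters
    flat = side * side * channels
    units_in = flat
    for units in [128, 16, 1]:  # the three Dense layers
        params += units_in * units + units
        units_in = units
    return flat, params


if __name__ == "__main__":
    print(summarize())  # (2304, 514977)
```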
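The model ends in a single sigmoid unit, and `segment` reads it as the probability of Ruqaa, giving Farsi the complement; the `label` output then displays both confidences. `model.predict` returns an array of shape `(1, 1)` here, and calling `float()` on an array with more than zero dimensions is deprecated in NumPy 1.25 and later. A minimal sketch of the same mapping that extracts the scalar explicitly (`to_labels` is a hypothetical name, not part of the commit):

```python
import numpy as np


def to_labels(output):
    """Map the single sigmoid output to the two-class dict shown by the `label` output.

    The sigmoid value is treated as P(ruqaa), as in segment(); Farsi gets 1 - p.
    """
    p = float(np.asarray(output).reshape(-1)[0])  # (1, 1) batch -> Python float
    return {"farsi": 1.0 - p, "ruqaa": p}
```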

images/Farsi_1.jpg

ADDED

images/Farsi_2.jpg

ADDED

images/Ruqaa_1.jpg

ADDED

images/Ruqaa_2.jpg

ADDED

images/Ruqaa_3.jpg

ADDED
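`gr.Interface`'s `examples` argument takes a list of rows, each row holding one value per input component; with the single `image` input, every row is a one-element list containing a file path. Instead of listing the five added files by hand, the list could be built from the `images/` folder. This is a sketch, not the committed code: `build_examples` is hypothetical, and a relative folder is resolved against the working directory of the running app.

```python
from pathlib import Path


def build_examples(folder="images", pattern="*.jpg"):
    """Return [[path], ...]: one row per example, one entry per input component."""
    return [[p.as_posix()] for p in sorted(Path(folder).glob(pattern))]
```

For example, `gr.Interface(fn=segment, inputs="image", outputs="label", examples=build_examples())` would also pick up any `.jpg` added to `images/` later.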