jeevan committed a1bd2bd (parent: 0c1fe46)

Mid term assignment complete pending url and video

This view is limited to 50 files because it contains too many changes.

- .chainlit/config.toml +84 -0

- .gitignore +9 -0

- Dockerfile +11 -0

- README.md +73 -11

- Roles.md +16 -0

- Tasks/Task 1/Task1.md +243 -0

- Tasks/Task 1/pre-processing.ipynb +1299 -0

- Tasks/Task 1/settingup-vectorstore-chunking-strategy.ipynb +0 -0

- Tasks/Task 2/Qdrant_cloud.png +0 -0

- Tasks/Task 2/Task2.md +58 -0

- Tasks/Task 3/Colab-task3-generate-dataset-ragas-eval.ipynb +0 -0

- Tasks/Task 3/RAGAS_Key_Metrics_Summary.csv +6 -0

- Tasks/Task 3/SDG-Generation-logs +0 -0

- Tasks/Task 3/Task3.md +38 -0

- Tasks/Task 3/ai-safety-ragas-evaluation-result.csv +0 -0

- Tasks/Task 3/ai-safety-sdg.csv +0 -0

- Tasks/Task 3/task3-del1.png +0 -0

- Tasks/Task 3/task3-del11.png +0 -0

- Tasks/Task 3/task3-generate-dataset-ragas-eval.ipynb +590 -0

- Tasks/Task 4/MtebRanking.png +0 -0

- Tasks/Task 4/colab-task4-finetuning-os-embed.ipynb +0 -0

- Tasks/Task 4/task4-finetuning-os-embed.ipynb +0 -0

- Tasks/Task 4/test_dataset (2).jsonl +1 -0

- Tasks/Task 4/test_questions.json +1 -0

- Tasks/Task 4/training_dataset (2).jsonl +0 -0

- Tasks/Task 4/training_questions.json +0 -0

- Tasks/Task 4/val_dataset (2).jsonl +1 -0

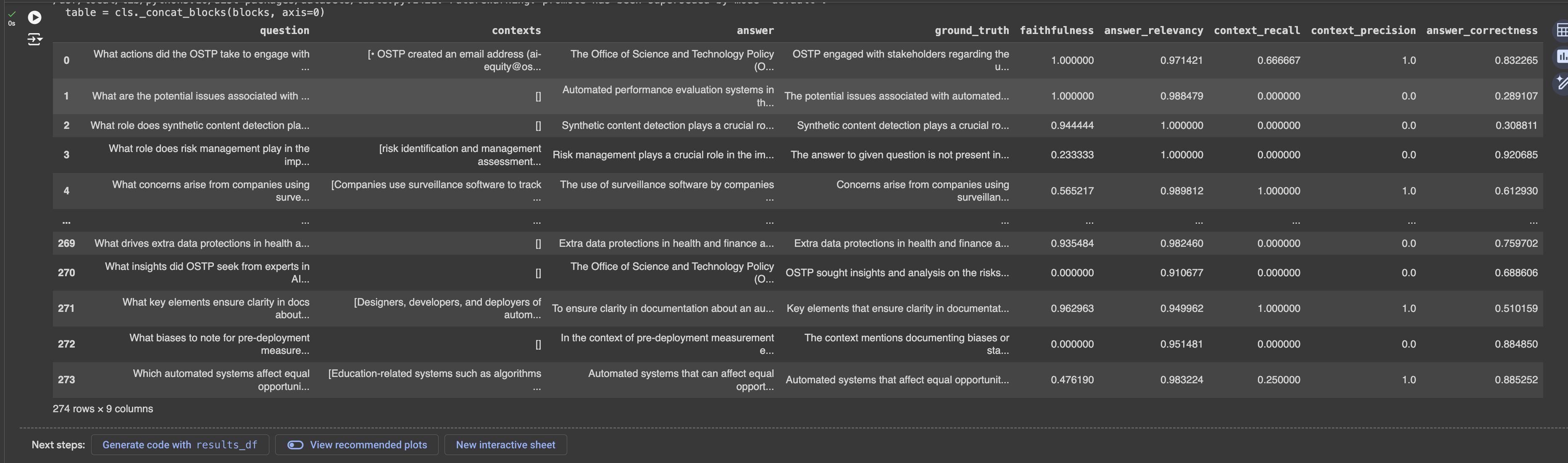

- Tasks/Task 4/val_questions.json +1 -0

- Tasks/Task 5/Colab-task5-assessing-performance.ipynb +0 -0

- Tasks/Task 5/Task5-ChunkingImprovement.png +0 -0

- Tasks/Task 5/Task5-ChunkingTableComparison.png +0 -0

- Tasks/Task 5/Task5-ComparisonBaseFineTuned.png +0 -0

- Tasks/Task 5/Task5-ComparisonBaseFineTunedImprovemant.png +0 -0

- Tasks/Task 5/Task5-SemanticVsRecurstive.png +0 -0

- Tasks/Task 5/Task5-Table.png +0 -0

- Tasks/Task 5/Task5-graph-comparision.png +0 -0

- Tasks/Task 5/Task5-graph-comparision2.png +0 -0

- Tasks/Task 5/base_chain_eval_results_df (1).csv +0 -0

- Tasks/Task 5/base_chain_eval_results_df.csv +0 -0

- Tasks/Task 5/ft_chain_eval_results_df (1).csv +0 -0

- Tasks/Task 5/ft_chain_eval_results_df.csv +0 -0

- Tasks/Task 5/task5-ai-safety-sdg.csv +0 -0

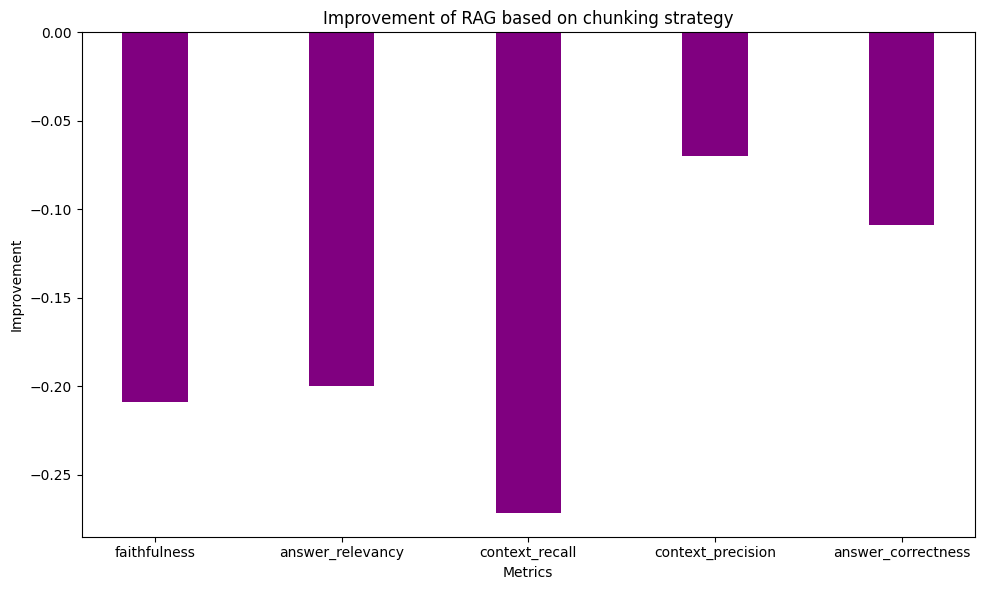

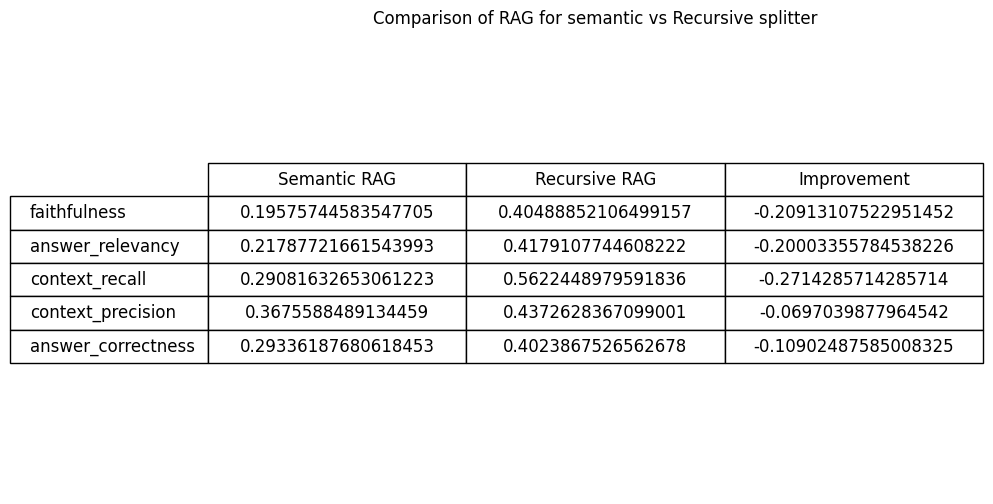

- Tasks/Task 5/task5-ai-safety-sdg2.csv +0 -0

- Tasks/Task 5/task5-assessing-performance.ipynb +709 -0

- Tasks/Task 5/task5-table-improvement.png +0 -0

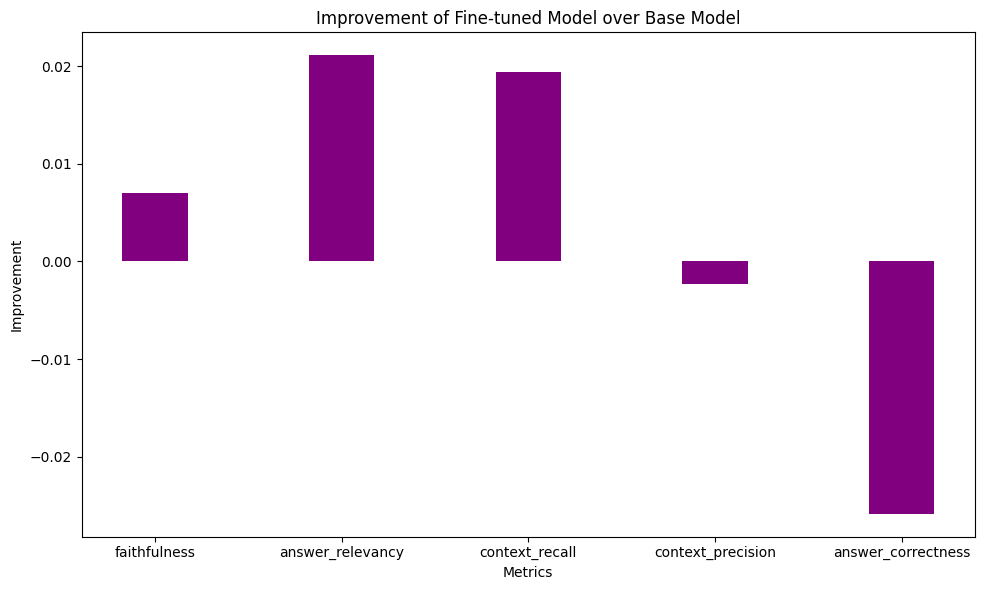

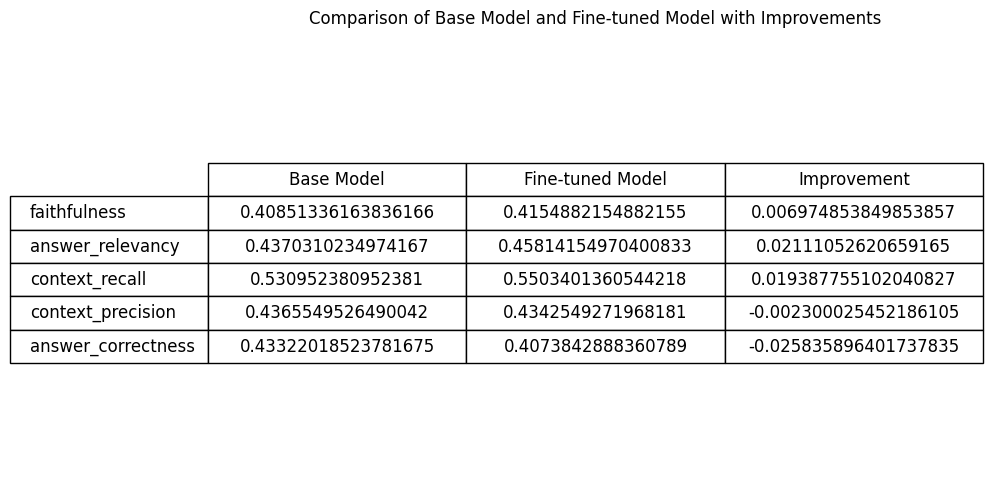

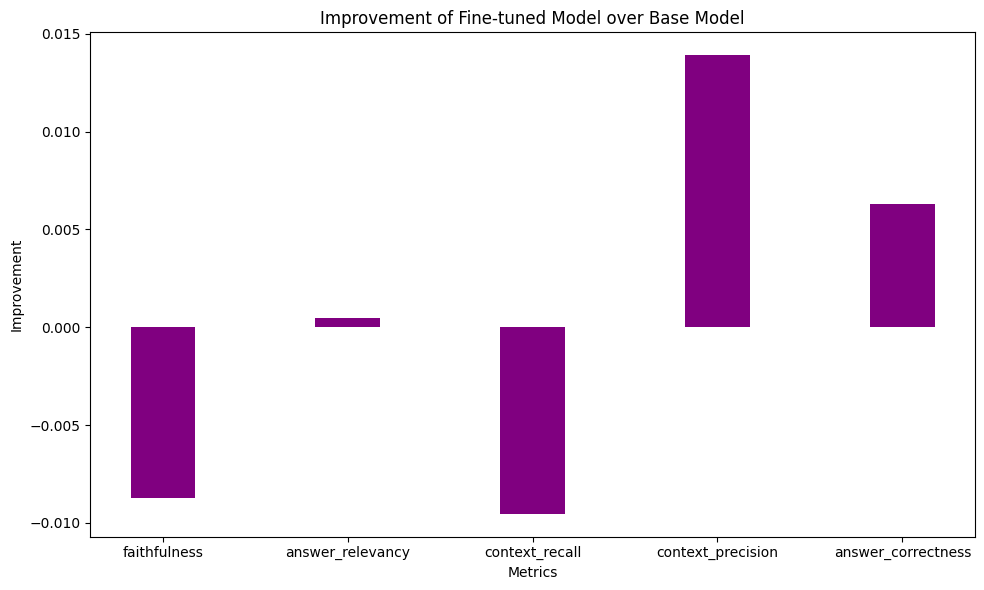

- Tasks/Task 6/task6.md +25 -0

- Tasks/deliverables.md +153 -0

- app.py +249 -0

- chainlit.md +3 -0

- embedding_model.py +58 -0

.chainlit/config.toml

ADDED

|

@@ -0,0 +1,84 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

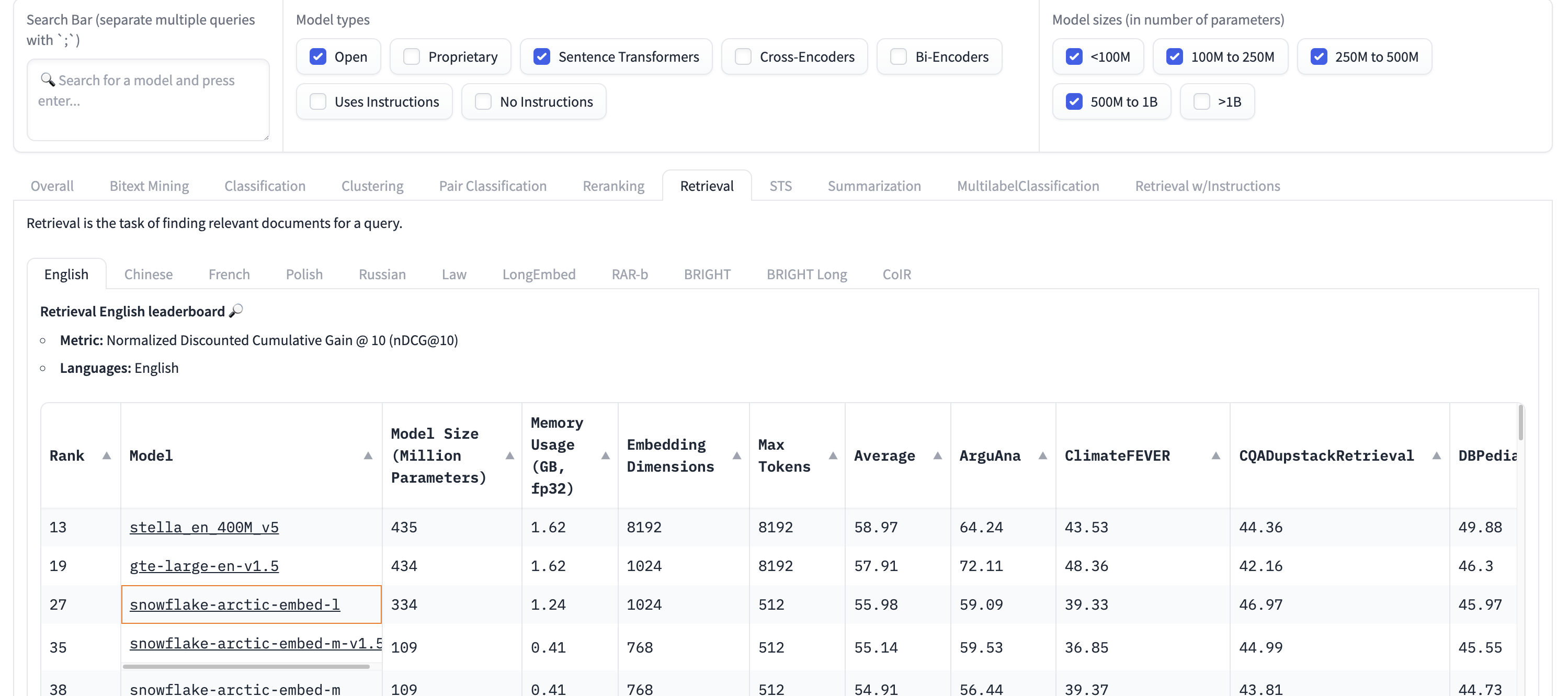

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[project]

|

| 2 |

+

# Whether to enable telemetry (default: true). No personal data is collected.

|

| 3 |

+

enable_telemetry = true

|

| 4 |

+

|

| 5 |

+

# List of environment variables to be provided by each user to use the app.

|

| 6 |

+

user_env = []

|

| 7 |

+

|

| 8 |

+

# Duration (in seconds) during which the session is saved when the connection is lost

|

| 9 |

+

session_timeout = 3600

|

| 10 |

+

|

| 11 |

+

# Enable third parties caching (e.g LangChain cache)

|

| 12 |

+

cache = false

|

| 13 |

+

|

| 14 |

+

# Follow symlink for asset mount (see https://github.com/Chainlit/chainlit/issues/317)

|

| 15 |

+

# follow_symlink = false

|

| 16 |

+

|

| 17 |

+

[features]

|

| 18 |

+

# Show the prompt playground

|

| 19 |

+

prompt_playground = true

|

| 20 |

+

|

| 21 |

+

# Process and display HTML in messages. This can be a security risk (see https://stackoverflow.com/questions/19603097/why-is-it-dangerous-to-render-user-generated-html-or-javascript)

|

| 22 |

+

unsafe_allow_html = false

|

| 23 |

+

|

| 24 |

+

# Process and display mathematical expressions. This can clash with "$" characters in messages.

|

| 25 |

+

latex = false

|

| 26 |

+

|

| 27 |

+

# Authorize users to upload files with messages

|

| 28 |

+

multi_modal = true

|

| 29 |

+

|

| 30 |

+

# Allows user to use speech to text

|

| 31 |

+

[features.speech_to_text]

|

| 32 |

+

enabled = false

|

| 33 |

+

# See all languages here https://github.com/JamesBrill/react-speech-recognition/blob/HEAD/docs/API.md#language-string

|

| 34 |

+

# language = "en-US"

|

| 35 |

+

|

| 36 |

+

[UI]

|

| 37 |

+

# Name of the app and chatbot.

|

| 38 |

+

name = "Chatbot"

|

| 39 |

+

|

| 40 |

+

# Show the readme while the conversation is empty.

|

| 41 |

+

show_readme_as_default = true

|

| 42 |

+

|

| 43 |

+

# Description of the app and chatbot. This is used for HTML tags.

|

| 44 |

+

# description = ""

|

| 45 |

+

|

| 46 |

+

# Large size content are by default collapsed for a cleaner ui

|

| 47 |

+

default_collapse_content = true

|

| 48 |

+

|

| 49 |

+

# The default value for the expand messages settings.

|

| 50 |

+

default_expand_messages = false

|

| 51 |

+

|

| 52 |

+

# Hide the chain of thought details from the user in the UI.

|

| 53 |

+

hide_cot = false

|

| 54 |

+

|

| 55 |

+

# Link to your github repo. This will add a github button in the UI's header.

|

| 56 |

+

# github = ""

|

| 57 |

+

|

| 58 |

+

# Specify a CSS file that can be used to customize the user interface.

|

| 59 |

+

# The CSS file can be served from the public directory or via an external link.

|

| 60 |

+

# custom_css = "/public/test.css"

|

| 61 |

+

|

| 62 |

+

# Override default MUI light theme. (Check theme.ts)

|

| 63 |

+

[UI.theme.light]

|

| 64 |

+

#background = "#FAFAFA"

|

| 65 |

+

#paper = "#FFFFFF"

|

| 66 |

+

|

| 67 |

+

[UI.theme.light.primary]

|

| 68 |

+

#main = "#F80061"

|

| 69 |

+

#dark = "#980039"

|

| 70 |

+

#light = "#FFE7EB"

|

| 71 |

+

|

| 72 |

+

# Override default MUI dark theme. (Check theme.ts)

|

| 73 |

+

[UI.theme.dark]

|

| 74 |

+

#background = "#FAFAFA"

|

| 75 |

+

#paper = "#FFFFFF"

|

| 76 |

+

|

| 77 |

+

[UI.theme.dark.primary]

|

| 78 |

+

#main = "#F80061"

|

| 79 |

+

#dark = "#980039"

|

| 80 |

+

#light = "#FFE7EB"

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

[meta]

|

| 84 |

+

generated_by = "0.7.700"

|

`.gitignore` (ADDED, @@ -0,0 +1,9 @@)

```gitignore
venv/
__pycache__/*
.env
download-hf-model.ipynb
temp
start_qdrant_services.sh
requirements copy.txt
Dockerfile copy
.venv/
```

`Dockerfile` (ADDED, @@ -0,0 +1,11 @@)

```dockerfile
FROM python:3.11.9
RUN useradd -m -u 1000 user
USER user
ENV HOME=/home/user \
    PATH=/home/user/.local/bin:$PATH
WORKDIR $HOME/app
COPY --chown=user . $HOME/app
COPY ./requirements.txt ~/app/requirements.txt
RUN pip install -r requirements.txt
COPY . .
CMD ["chainlit", "run", "app.py", "--port", "7860"]
```

`README.md` (CHANGED, @@ -1,11 +1,73 @@): original lines 1-11 removed, 73 lines added:

# Midterm

## Background and Context

The CEO and corporate, with permission of the board, have assembled a crack data science and engineering team to take advantage of RAG, agents, and all of the latest open-source technologies emerging in the industry. This time it's for real, though. This time, the company is aiming squarely at some Return On Investment (ROI) on its research and development dollars.

## The Problem

You are an AI Solutions Engineer. You've worked directly with internal stakeholders to identify a problem: `people are concerned about the implications of AI, and no one seems to understand the right way to think about building ethical and useful AI applications for enterprises.`

This is a big problem and one that is rapidly changing. Several people you interviewed said that *they could benefit from a chatbot that helped them understand how the AI industry is evolving, especially as it relates to politics.* Many are interested due to the current election cycle, but others feel that some of the best guidance is likely to come from the government.

## Task 1: Dealing with the Data

You identify the following important documents that, if used for context, you believe will help people understand what’s happening now:

1. 2022: [Blueprint for an AI Bill of Rights: Making Automated Systems Work for the American People](https://www.whitehouse.gov/wp-content/uploads/2022/10/Blueprint-for-an-AI-Bill-of-Rights.pdf) (PDF)
2. 2024: [National Institute of Standards and Technology (NIST) Artificial Intelligence Risk Management Framework](https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.600-1.pdf) (PDF)

Your boss, the SVP of Technology, green-lighted this project to drive the adoption of AI throughout the enterprise. It will be a nice showpiece for the upcoming conference and the big AI initiative announcement the CEO is planning.

> Task 1: Review the two PDFs and decide how best to chunk up the data with a single strategy to optimally answer the variety of questions you expect to receive from people.
>
> *Hint: Create a list of potential questions that people are likely to ask!*

✅Deliverables:

1. Describe the default chunking strategy that you will use.
2. Articulate a chunking strategy that you would also like to test out.
3. Describe how and why you made these decisions.

## Task 2: Building a Quick End-to-End Prototype

**You are an AI Systems Engineer.** The SVP of Technology has tasked you with spinning up a quick RAG prototype for answering questions that internal stakeholders have about AI, using the data provided in Task 1.

> Task 2: Build an end-to-end RAG application using an industry-standard open-source stack and your choice of commercial off-the-shelf models.

✅Deliverables:

1. Build a prototype and deploy it to a Hugging Face Space, and create a short (< 2 min) Loom video demonstrating some initial testing inputs and outputs.
2. How did you choose your stack, and why did you select each tool the way you did?
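
The commit's `app.py` is not rendered in this view, so the actual stack isn't visible here. As a hedged, dependency-free sketch of the retrieve-then-prompt flow that Task 2 describes, the block below ranks chunks by cosine similarity to the question and assembles a grounded prompt. The bag-of-words `embed` function and the prompt wording are hypothetical stand-ins for a real embedding model and the app's actual prompt.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a real embedding model: bag-of-words counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: List[str], k: int = 3) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


# Hypothetical prompt template; the deployed app may word this differently.
PROMPT = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say you don't know.\n\n"
    "Context:\n{context}\n\nQuestion: {question}"
)


def build_prompt(question: str, chunks: List[str], k: int = 3) -> str:
    """Retrieve the top-k chunks and format them into the prompt template."""
    context = "\n---\n".join(retrieve(question, chunks, k))
    return PROMPT.format(context=context, question=question)
```

In a production stack, `embed` would call an embedding model, the top-k search would run in a vector store (the commit includes `Tasks/Task 2/Qdrant_cloud.png`, which suggests Qdrant), and the prompt would be sent to an LLM.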

## Task 3: Creating a Golden Test Data Set

**You are an AI Evaluation & Performance Engineer.** The AI Systems Engineer who built the initial RAG system has asked for your help and expertise in creating a "Golden Data Set."

> Task 3: Generate a synthetic test data set and baseline an initial evaluation.

✅Deliverables:

1. Assess your pipeline using the RAGAS framework, including the key metrics faithfulness, answer relevancy, context precision, and context recall. Provide a table of your output results.
2. What conclusions can you draw about the performance and effectiveness of your pipeline with this information?
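
RAGAS scores are produced with an LLM judge, so they can't be reproduced offline. To illustrate what one of them rewards, the sketch below applies the Context Precision@K definition from the RAGAS documentation (the sum over ranks k of precision@k multiplied by a 0/1 relevance flag v_k, divided by the number of relevant chunks in the top K) to hand-labelled relevance flags. The flags stand in for the judge's verdicts and are an assumption of this sketch.

```python
from typing import Sequence


def context_precision(relevant: Sequence[bool]) -> float:
    """Context Precision@K for a single question.

    relevant[i] is True if the (i + 1)-th retrieved chunk is relevant. In RAGAS
    these verdicts come from an LLM judge; here they are supplied directly.
    """
    hits = 0
    score = 0.0
    for k, is_relevant in enumerate(relevant, start=1):
        if is_relevant:
            hits += 1
            score += hits / k  # precision@k, counted only at relevant ranks (v_k = 1)
    return score / hits if hits else 0.0


# Relevant chunks ranked first score higher than the same chunks ranked last.
print(context_precision([True, True, False]))  # 1.0
print(context_precision([False, True, True]))  # (1/2 + 2/3) / 2, about 0.583
```

A score close to 1 means the retriever tends to rank relevant chunks ahead of irrelevant ones, which is one way to read the context precision column of the results table.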

## Task 4: Fine-Tuning Open-Source Embeddings

**You are a Machine Learning Engineer.** The AI Evaluation and Performance Engineer has asked for your help in fine-tuning the embedding model used in their recent RAG application build.

> Task 4: Generate synthetic fine-tuning data and complete fine-tuning of the open-source embedding model.

✅Deliverables:

1. Swap out your existing embedding model for the new fine-tuned version. Provide a link to your fine-tuned embedding model on the Hugging Face Hub.
2. How did you choose the embedding model for this application?
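
The fine-tuning notebooks are not rendered in this view, so the loss they use isn't visible. Files such as `training_questions.json` and `training_dataset (2).jsonl` suggest question-to-chunk training pairs, though their format isn't shown. A common objective for such pairs is the in-batch-negatives loss behind sentence-transformers' `MultipleNegativesRankingLoss`: each question should score its own chunk higher than every other chunk in the batch, with cosine similarities multiplied by a scale (20 by default in that library). The pure-Python sketch below computes this loss for one batch of precomputed embeddings; it illustrates that assumption and is not the notebook's training code.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cos_sim(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def in_batch_negatives_loss(questions: List[Vector], chunks: List[Vector],
                            scale: float = 20.0) -> float:
    """Mean cross-entropy where chunks[i] is the positive for questions[i]
    and every other chunk in the batch serves as a negative."""
    losses = []
    for i, q in enumerate(questions):
        logits = [scale * cos_sim(q, c) for c in chunks]
        top = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)
```

Training lowers this loss by pulling each question's embedding toward its own chunk and away from the other chunks in the batch; the resulting model would then replace the base model in the retriever.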

## Task 5: Assessing Performance

**You are the AI Evaluation & Performance Engineer.** It's time to assess all options for this product.

> Task 5: Assess the performance of 1) the fine-tuned model, and 2) the two proposed chunking strategies.

✅Deliverables:

1. Test the fine-tuned embedding model using the RAGAS framework to quantify any improvements. Provide results in a table.
2. Test the two chunking strategies using the RAGAS framework to quantify any improvements. Provide results in a table.
3. The AI Solutions Engineer asks you: “Which one is the best to test with internal stakeholders next week, and why?”
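
For the side-by-side tables these deliverables ask for, a small helper can render two sets of aggregate RAGAS scores as a Markdown table with absolute and relative changes. The metric keys below assume RAGAS's usual result names for the four metrics listed in Task 3; no scores from this project are included.

```python
import math
from typing import Dict, List, Tuple

# Assumed result keys for the four RAGAS metrics named in Task 3.
METRICS: List[str] = ["faithfulness", "answer_relevancy", "context_precision", "context_recall"]


def comparison_table(base: Dict[str, float], candidate: Dict[str, float],
                     labels: Tuple[str, str] = ("Base", "Candidate")) -> str:
    """Render base vs. candidate scores, the absolute change, and the relative change."""
    rows = [
        f"| Metric | {labels[0]} | {labels[1]} | Change |",
        "|---|---|---|---|",
    ]
    for metric in METRICS:
        b, c = base[metric], candidate[metric]
        rel = (c - b) / b * 100 if b else math.nan  # relative change is undefined for a zero base
        rows.append(f"| {metric} | {b:.3f} | {c:.3f} | {c - b:+.3f} ({rel:+.1f}%) |")
    return "\n".join(rows)
```

It can be called once with the base and fine-tuned embedding results and once with the two chunking strategies' results, e.g. `comparison_table(base_scores, ft_scores, labels=("Base", "Fine-tuned"))`, where `base_scores` and `ft_scores` are hypothetical dictionaries of averaged metric values.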

## Task 6: Managing Your Boss and User Expectations

**You are the SVP of Technology.** Given the work done by your team so far, you're now sitting down with the AI Solutions Engineer. You have tasked the solutions engineer with testing the new application with at least 50 different internal stakeholders over the next month.

1. What is the story that you will give to the CEO to tell the whole company at the launch next month?
2. There appears to be important information not included in our build, for instance, the [270-day update](https://www.whitehouse.gov/briefing-room/statements-releases/2024/07/26/fact-sheet-biden-harris-administration-announces-new-ai-actions-and-receives-additional-major-voluntary-commitment-on-ai/) on the 2023 executive order on [Safe, Secure, and Trustworthy AI](https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/). How might you incorporate relevant White House briefing information into future versions?

## Your Final Submission

Please include the following in your final submission:

1. A public link to a **written report** addressing each deliverable and answering each question.
2. A public link to any relevant **GitHub repo**.
3. A public link to the **final version of your application** on Hugging Face.
4. A public link to your **fine-tuned embedding model** on Hugging Face.

`Roles.md` (ADDED, @@ -0,0 +1,16 @@)

# Roles

My Role - AI Solutions Engineer

Here are the condensed roles and responsibilities of an AI Solutions Engineer:

1. **Design and Develop AI Models**: Analyze business needs, develop, and fine-tune machine learning and AI models.
2. **System Integration**: Architect AI solutions that integrate with existing IT systems and platforms.
3. **Data Management**: Preprocess, clean, and manage data for training AI models; perform feature engineering.
4. **Deployment and Automation**: Implement CI/CD pipelines for deploying AI models and maintain automation.
5. **Monitor and Optimize Models**: Continuously evaluate, monitor, and retrain models to ensure optimal performance.
6. **Collaborate Cross-Functionally**: Work with data scientists, software engineers, and business teams for seamless integration.
7. **Ensure Scalability and Performance**: Optimize AI solutions for scalability, efficiency, and low latency.
8. **Security and Compliance**: Implement data privacy and security measures for AI solutions.
9. **Stay Updated on AI Trends**: Continuously research and apply the latest AI technologies and methodologies.
10. **User Training and Support**: Provide training and ongoing support to stakeholders using AI systems.

`Tasks/Task 1/Task1.md` (ADDED, @@ -0,0 +1,243 @@)

### Deliverable 1: Describe the Default Chunking Strategy You Will Use

The default chunking strategy that I will use is based on LangChain's **`RecursiveCharacterTextSplitter`**. This splitter divides text into manageable chunks while trying to keep related text together: it splits on the coarsest separator first (paragraph breaks) and only falls back to finer ones (line breaks, then spaces) when a piece is still too long, so chunks rarely break in the middle of a thought or sentence. This lets the chunking adapt to the structure of each document.

#### Key Details of the Default Strategy:

- **Adaptive Chunk Sizes**: The splitter first attempts to split the text into large sections (e.g., paragraphs). If a section still exceeds the size limit, it recursively breaks it down into smaller units (lines, then words), so that each chunk stays within the target size for embedding (e.g., 1,000 tokens if a token-counting length function is supplied; by default LangChain measures `chunk_size` with `len`, i.e. in characters).
- **Flexibility**: It works well for both structured and unstructured documents, making it suitable for a variety of AI-related documents like the *AI Bill of Rights* and *NIST RMF*.
- **Context Preservation**: Because it only falls back to finer separators when a piece is too long, the splitter minimizes the risk of breaking meaningful content, preserving important relationships between concepts.
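
The recursive fallback can be illustrated with a short, dependency-free sketch. It mirrors the idea and LangChain's default separator order (paragraph break, line break, space, then single characters) but is not LangChain's implementation: it measures length in characters, omits `chunk_overlap`, and `recursive_split` is a hypothetical name.

```python
from typing import List, Sequence

# Same separator order as LangChain's RecursiveCharacterTextSplitter default:
# paragraphs, then lines, then words, then single characters.
DEFAULT_SEPARATORS = ("\n\n", "\n", " ", "")


def recursive_split(text: str, chunk_size: int = 1000,
                    separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split text into chunks of at most chunk_size characters (no overlap)."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    # Use the coarsest separator that actually occurs in the text.
    sep = next((s for s in separators if s and s in text), "")
    if not sep:
        # No usable separator left: fall back to a hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    finer = separators[separators.index(sep) + 1:]
    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = current + sep + piece if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces up to the limit
            continue
        if current:
            chunks.append(current)
        if len(piece) > chunk_size:
            # This piece alone is too long: recurse with the finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return [c for c in chunks if c.strip()]
```

For example, `recursive_split(document_text, chunk_size=1000)` returns paragraph-aligned chunks where possible; LangChain's own class additionally supports `chunk_overlap` and a custom `length_function`.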

### Deliverable 2: Articulate a Chunking Strategy You Would Also Like to Test Out

In addition to the default strategy, I would like to test out a **Section- and Topic-Based Chunking Strategy** combined with **SemanticChunker**. This strategy would involve splitting the documents based on predefined sections or topics, allowing the chunking process to align more closely with the structure and meaning of each document.

#### Key Details of the Alternative Strategy:

- **Section-Based Chunking**: This strategy would first divide the document into sections or sub-sections based on headers, topics, or principles (e.g., the five principles in the *AI Bill of Rights* or the different sections of the *NIST AI RMF* profile). This ensures that each chunk retains a logical structure.
- **SemanticChunker Integration**: The SemanticChunker further refines chunking by embedding consecutive sentences and starting a new chunk where the meaning shifts (where the embedding distance between neighboring sentences is unusually large), rather than simply splitting based on length. This would work particularly well for documents like the *AI Bill of Rights*, where each principle is discussed with examples and cases.
- **Adaptability**: The strategy allows adaptation based on the specific document, improving retrieval for highly structured documents while maintaining the flexibility to handle less-structured ones.
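
Section-based chunking can be sketched as cutting the extracted text at known heading lines and tagging each chunk with its section name, which can then be stored as metadata. The heading list and the assumption that each heading sits on its own line in the extracted PDF text are illustrative; `split_by_sections` is a hypothetical helper, and the NIST profile would need its own heading list.

```python
import re
from typing import Dict, List

# Hypothetical heading list: the five principle titles of the AI Bill of Rights,
# assumed to appear in upper case on their own lines in the extracted text.
PRINCIPLES = [
    "SAFE AND EFFECTIVE SYSTEMS",
    "ALGORITHMIC DISCRIMINATION PROTECTIONS",
    "DATA PRIVACY",
    "NOTICE AND EXPLANATION",
    "HUMAN ALTERNATIVES, CONSIDERATION, AND FALLBACK",
]


def split_by_sections(text: str, headings: List[str]) -> List[Dict[str, str]]:
    """Cut text at each heading line and tag every chunk with its section.

    Text before the first heading (e.g. a preamble) is ignored in this sketch.
    """
    pattern = re.compile(
        r"^(" + "|".join(re.escape(h) for h in headings) + r")[ \t]*$",
        re.MULTILINE,
    )
    matches = list(pattern.finditer(text))
    sections = []
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections.append({"section": match.group(1), "text": text[match.end():end].strip()})
    return sections
```

Sections that are still too long for the embedding model could then be passed to a length-based or semantic splitter, keeping the `section` tag on every resulting chunk.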

### Deliverable 3: Describe How and Why You Made These Decisions

#### 1. **Default Chunking Strategy**:

- **Rationale**: The decision to use `RecursiveCharacterTextSplitter` as the default is driven by its versatility and efficiency. It balances chunk size and coherence without relying on predefined structures, which makes it robust across various document types, including both structured documents (like the AI Bill of Rights) and unstructured ones (user-uploaded PDFs). It is particularly useful for retrieval systems, where chunk size affects the performance of embedding models.
- **Why It Works**: This strategy handles document diversity well and keeps chunks contextually rich, which is crucial for accurate retrieval in a conversational AI system.

#### 2. **Alternative Section-Based Chunking**:

- **Rationale**: The section-based chunking strategy is more targeted toward highly structured documents. For documents like the *NIST AI RMF*, which have clear sections and subsections, breaking the text down by these categories ensures that the system can retrieve contextually related chunks for more precise answers.
- **Why It’s Worth Testing**: This strategy enhances retrieval relevance by aligning chunks with specific sections and principles, making it easier to answer detailed or multi-part questions. In combination with the SemanticChunker, it provides the benefit of preserving meaning across larger contexts.
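
LangChain's experimental `SemanticChunker` works along these lines: it embeds sentences, measures the distance between neighbors, and by default starts a new chunk where that distance exceeds a high percentile of all neighbor distances. The sketch below is a simplified, dependency-free illustration rather than the library's code: `toy_embed` is a hypothetical bag-of-words stand-in for the embedding model, and the library's sentence buffering is omitted.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Sequence


def toy_embed(sentence: str) -> Counter:
    """Hypothetical stand-in for a real embedding model: bag-of-words counts."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def percentile(values: Sequence[float], q: float) -> float:
    """Linearly interpolated percentile of values, for q in [0, 100]."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def semantic_chunks(text: str, embed: Callable[[str], Counter] = toy_embed,
                    threshold_pct: float = 95.0) -> List[str]:
    """Start a new chunk wherever the distance between neighboring sentences
    exceeds the given percentile of all neighboring distances."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if len(sentences) < 2:
        return sentences
    vectors = [embed(s) for s in sentences]
    distances = [1 - cosine(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    cutoff = percentile(distances, threshold_pct)
    chunks, current = [], [sentences[0]]
    for distance, sentence in zip(distances, sentences[1:]):
        if distance > cutoff:  # large jump in meaning: close the current chunk
            chunks.append(" ".join(current))
            current = [sentence]
        else:
            current.append(sentence)
    chunks.append(" ".join(current))
    return chunks
```

Running `semantic_chunks` on the text of each section produced by a section-based split would combine the two ideas in the alternative strategy.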

|

| 28 |

+

|

| 29 |

+

#### 3. **Combining Performance and Coherence**:

|

| 30 |

+

- **Decisions**: I made these decisions to ensure that both performance and coherence are maximized. The default method is fast, flexible, and works well across a variety of documents, while the section-based strategy is designed to improve the quality of responses in documents with clearly defined structures.

|

| 31 |

+

- **Efficiency Consideration**: By choosing a performant embedding model and efficient chunking strategies, I aimed to balance speed and relevance in the retrieval process, ensuring that the system remains scalable and responsive.

|

| 32 |

+

|

| 33 |

+

### Summary:

|

| 34 |

+

- **Default Strategy**: RecursiveCharacterTextSplitter for its adaptability across document types.

|

| 35 |

+

- **Test Strategy**: Section-based chunking with SemanticChunker for enhancing the accuracy of retrieval from structured documents.

|

| 36 |

+

- **Decision Rationale**: Both strategies were chosen to provide a balance between flexibility, coherence, and performance, ensuring that the system can effectively handle diverse document structures and retrieval needs.

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

# Problem Statement

|

| 43 |

+

|

| 44 |

+

People are concerned about the implications of AI, and no one seems to understand the right way to think about building ethical and useful AI applications for enterprises.

|

| 45 |

+

|

| 46 |

+

# Understanding the Data

|

| 47 |

+

|

| 48 |

+

## Blueprint for an AI Bill of rights

|

| 49 |

+

|

| 50 |

+

The "Blueprint for an AI Bill of Rights," published by the White House Office of Science and Technology Policy in October 2022, outlines a framework to ensure that automated systems, including those powered by AI, respect civil rights, privacy, and democratic values. The document is structured around five core principles:

|

| 51 |

+

|

| 52 |

+

Safe and Effective Systems: Automated systems should be designed with input from diverse communities and experts, undergo rigorous pre-deployment testing, and be monitored to ensure safety and effectiveness. This includes protecting users from foreseeable harm and ensuring that systems are not based on inappropriate or irrelevant data.

|

| 53 |

+

|

| 54 |

+

Algorithmic Discrimination Protections: Automated systems must be designed and used in ways that prevent discrimination based on race, gender, religion, and other legally protected categories. This principle includes proactive testing and continuous monitoring to prevent algorithmic bias and discrimination.

|

| 55 |

+

|

| 56 |

+

Data Privacy: Individuals should have control over how their data is collected and used, with automated systems adhering to privacy safeguards by default. This principle emphasizes informed consent, minimizing unnecessary data collection, and prohibiting the misuse of sensitive data, such as in areas of health or finance.

|

| 57 |

+

|

| 58 |

+

Notice and Explanation: People should be aware when automated systems are affecting their rights, opportunities, or access to services, and should be provided with understandable explanations of how these systems operate and influence outcomes.

|

| 59 |

+

|

| 60 |

+

Human Alternatives, Consideration, and Fallback: Users should have the ability to opt out of automated systems in favor of human alternatives where appropriate. There should be mechanisms for people to contest and resolve issues arising from decisions made by automated systems, especially in high-stakes areas like healthcare, education, and criminal justice.

|

| 61 |

+

|

| 62 |

+

The framework aims to protect the public from harmful outcomes of AI while allowing for innovation, recommending transparency, accountability, and fairness across sectors that deploy automated systems. However, the Blueprint is non-binding, meaning it does not constitute enforceable U.S. government policy but instead serves as a guide for best practices

|

| 63 |

+

|

| 64 |

+

## NIST AI Risk Management Framework

|

| 65 |

+

|

| 66 |

+

The document titled **NIST AI 600-1** outlines the **Artificial Intelligence Risk Management Framework (AI RMF)**, with a specific focus on managing risks related to **Generative Artificial Intelligence (GAI)**. Published by the **National Institute of Standards and Technology (NIST)** in July 2024, this framework provides a profile for organizations to manage the risks associated with GAI, consistent with President Biden's Executive Order (EO) 14110 on "Safe, Secure, and Trustworthy AI."

|

| 67 |

+

|

| 68 |

+

### Key aspects of the document include:

|

| 69 |

+

|

| 70 |

+

1. **AI Risk Management Framework (AI RMF)**: This framework offers organizations a voluntary guideline to integrate trustworthiness into AI systems. It addresses the unique risks associated with GAI, such as confabulation (AI hallucinations), bias, privacy, security, and misuse for malicious activities.

|

| 71 |

+

|

| 72 |

+

2. **Suggested Risk Management Actions**: The document provides detailed actions across various phases of AI development and deployment, such as governance, testing, monitoring, and decommissioning, to mitigate risks from GAI.

|

| 73 |

+

|

| 74 |

+

3. **Generative AI-Specific Risks**: The document discusses risks unique to GAI, including:

|

| 75 |

+

- **Data privacy risks** (e.g., personal data leakage, sensitive information memorization)

|

| 76 |

+

- **Environmental impacts** (e.g., high energy consumption during model training)

|

| 77 |

+

- **Harmful content generation** (e.g., violent or misleading content)

|

| 78 |

+

- **Bias amplification and model homogenization**

|

| 79 |

+

- **Security risks**, such as prompt injection and data poisoning

|

| 80 |

+

|

| 81 |

+

4. **Recommendations for Organizations**: It emphasizes proactive governance, transparency, human oversight, and tailored policies to manage AI risks throughout the entire lifecycle of AI systems.

|

| 82 |

+

|

| 83 |

+

This framework aims to ensure that organizations can deploy GAI systems in a responsible and secure manner while balancing innovation with potential societal impacts.

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

## Sample Questions from Internet

|

| 88 |

+

|

| 89 |

+

Here is the consolidated set of real user questions regarding AI, ethics, privacy, and risk management with source URLs:

|

| 90 |

+

|

| 91 |

+

1. **How can companies ensure AI does not violate data privacy laws?**

   Users are concerned about how AI handles personal data, especially with incidents like data spillovers where information leaks unintentionally across systems.

   Source: [Stanford HAI](https://hai.stanford.edu/news/privacy-ai-era-how-do-we-protect-our-personal-information), [Transcend](https://transcend.io/blog/ai-and-your-privacy-understanding-the-concerns).

2. **What steps can organizations take to minimize bias in AI models?**

   Concerns about fairness in AI applications, particularly in hiring, lending, and law enforcement.

   Source: [ISC2](https://www.isc2.org/Articles/AI-Ethics-Dilemmas-in-Cybersecurity), [JDSupra](https://www.jdsupra.com/legalnews/five-ethics-questions-to-ask-about-your-5303517/).

3. **How do we balance AI-driven cybersecurity with privacy?**

   Striking a balance between enhancing security and avoiding over-collection of personal data.

   Source: [ISC2](https://www.isc2.org/Articles/AI-Ethics-Dilemmas-in-Cybersecurity), [HBS Working Knowledge](https://hbswk.hbs.edu/item/navigating-consumer-data-privacy-in-an-ai-world).

4. **What are the legal consequences if an AI system makes an unethical decision?**

   Understanding liability and compliance when AI systems cause ethical or legal violations.

   Source: [JDSupra](https://www.jdsupra.com/legalnews/five-ethics-questions-to-ask-about-your-5303517/), [Transcend](https://transcend.io/blog/ai-and-your-privacy-understanding-the-concerns).

5. **How can organizations ensure transparency in AI decision-making?**

   Ensuring explainability and transparency, especially in high-stakes applications like healthcare and criminal justice.

   Source: [ISC2](https://www.isc2.org/Articles/AI-Ethics-Dilemmas-in-Cybersecurity), [HBS Working Knowledge](https://hbswk.hbs.edu/item/navigating-consumer-data-privacy-in-an-ai-world).

6. **How can we design AI systems to be ethics and compliance-oriented from the start?**

   Building AI systems with ethical oversight and controls from the beginning.

   Source: [JDSupra](https://www.jdsupra.com/legalnews/five-ethics-questions-to-ask-about-your-5303517/).

7. **What are the security risks posed by AI systems?**

   Addressing the growing risks of security breaches and data leaks with AI technologies.

   Source: [Transcend](https://transcend.io/blog/ai-and-your-privacy-understanding-the-concerns), [ISC2](https://www.isc2.org/Articles/AI-Ethics-Dilemmas-in-Cybersecurity).

8. **How can AI's impact on job displacement be managed ethically?**

   Addressing ethical concerns around job displacement due to AI automation.

   Source: [ISC2](https://www.isc2.org/Articles/AI-Ethics-Dilemmas-in-Cybersecurity).

9. **What measures should be in place to ensure AI systems are transparent and explainable?**

   Ensuring that AI decisions are explainable, particularly in critical areas like healthcare and finance.

   Source: [ISC2](https://www.isc2.org/Articles/AI-Ethics-Dilemmas-in-Cybersecurity), [HBS Working Knowledge](https://hbswk.hbs.edu/item/navigating-consumer-data-privacy-in-an-ai-world).

10. **How do companies comply with different AI regulations across regions like the EU and US?**

    Navigating the differences between GDPR in Europe and US privacy laws.

    Source: [Transcend](https://transcend.io/blog/ai-and-your-privacy-understanding-the-concerns), [HBS Working Knowledge](https://hbswk.hbs.edu/item/navigating-consumer-data-privacy-in-an-ai-world).


- Do the organization's personnel and partners receive AI risk management training to enable them to perform their duties and responsibilities consistent with related policies, procedures, and agreements?

- Will customer data be used to train artificial intelligence, machine learning, automation, or deep learning?

- Does the organization have an AI Development and Management Policy?

- Does the organization have policies and procedures in place to define and differentiate roles and responsibilities for human-AI configurations and oversight of AI systems?

- Who is the third-party AI technology behind your product/service?

- Has the third-party AI processor been appropriately vetted for risk? If so, what certifications have they obtained?

- Does the organization implement post-deployment AI system monitoring, including mechanisms for capturing and evaluating user input and other relevant AI actors, appeal and override, decommissioning, incident response, recovery, and change management?

- Does the organization communicate incidents and errors to relevant AI actors and affected communities and follow documented processes for tracking, responding to, and recovering from incidents and errors?

- Does your company engage with generative AI/AGI tools internally or throughout your company's product line?

- If generative AI/AGI is incorporated into the product, please describe any governance policies or procedures.

- Describe the controls in place to ensure our data is transmitted securely and is logically and/or physically segmented from those of other customers.


These links provide direct access to discussions about AI ethics, privacy, and risk management.


## Document Structure


- Both documents follow a clear structure, which would make them easier to chunk. However, implementing such a section/topic-based strategy is complex and time-consuming, because it needs to adapt dynamically to whichever document is uploaded.

- We could chunk each PDF by section, then sub-section, then page, and finally by sentence or paragraph. This would break the documents down neatly while preserving their structure.

- A user may upload a document that is not structured, so assuming that every document will be in a structured format will not work.
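The section-first idea with a generic fallback can be sketched as follows. This is a minimal illustration under stated assumptions, not the approach used in the notebooks. `HEADING_RE` is a hypothetical pattern for numbered headings, and the fallback is a plain fixed-size cut that stands in for a real text splitter.

```python
import re

# Hypothetical heading pattern: a numbered heading such as "1. Introduction" or
# "2.3 Risk Overview" on its own line. Real documents would need their own pattern.
HEADING_RE = re.compile(r"^\d+(?:\.\d+)*\.?[ \t]+[A-Z].*$", re.MULTILINE)


def fixed_size_chunks(text: str, size: int) -> list[str]:
    """Generic fallback: cut text into fixed-size character windows."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def chunk_by_sections(text: str, max_chars: int = 1000) -> list[dict]:
    """Chunk by detected headings, falling back to generic chunking when none exist."""
    matches = list(HEADING_RE.finditer(text))
    if not matches:
        # Unstructured document: no headings found, so use the generic splitter.
        return [{"section": None, "text": c} for c in fixed_size_chunks(text, max_chars)]

    chunks = []
    # Text before the first heading becomes an untitled chunk.
    preamble = text[: matches[0].start()].strip()
    if preamble:
        chunks.append({"section": None, "text": preamble})
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        title = match.group(0).strip()
        body = text[match.end():end].strip()
        # Long sections are sub-split, but every piece keeps its section title as metadata.
        for piece in fixed_size_chunks(body, max_chars):
            chunks.append({"section": title, "text": piece})
    return chunks
```

PDF text extraction does not always keep headings on their own lines, so a pattern like this would need tuning for each document. That is the fragility described above, and it is why the fallback path matters.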


# Dealing with the data


Considering all of the above, a generic approach that covers all use cases is the sensible choice. To begin with, we load the PDFs with `PyMuPDFLoader` and chunk the documents with `RecursiveCharacterTextSplitter`.
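LangChain's `RecursiveCharacterTextSplitter` tries a list of separators in order: by default paragraph breaks, line breaks, spaces, and finally individual characters. It falls back to a finer separator only when a piece is still too large. The sketch below is a simplified, pure-Python illustration of that idea and not LangChain's implementation. It omits `chunk_overlap` and measures length in characters.

```python
def recursive_split(text: str, chunk_size: int,
                    separators: tuple[str, ...] = ("\n\n", "\n", " ", "")) -> list[str]:
    """Simplified recursive character splitting (no overlap)."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, rest = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    if sep not in text:
        # This separator does not occur, so try the next (finer) one.
        return recursive_split(text, chunk_size, rest)

    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging pieces while they fit
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # This piece alone is too big: split it with the finer separators.
            chunks.extend(recursive_split(piece, chunk_size, rest))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

In the actual pipeline, the LangChain class would be applied to the output of `PyMuPDFLoader(...).load()` through its `split_documents` method. The chunk size and overlap used in the notebooks are not stated in this section.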


We would have liked to use `PyPDFium2Loader`, but it is very slow compared to `PyMuPDFLoader`: it took 2 min 30 s to load these two PDFs. It would only be an option if our use case allowed populating the vector store ahead of time. Comparing the outputs, there is not much difference in quality between the two, so we will use `PyMuPDFLoader`.
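A loader comparison like this can be reproduced by wrapping each loader in a zero-argument callable and timing it. The helper names below are hypothetical. In LangChain, the callables would be the `load` methods of loader instances such as `PyMuPDFLoader(path)` and `PyPDFium2Loader(path)`, where `path` is a placeholder.

```python
import time
from typing import Callable


def time_loader(load: Callable[[], list]) -> tuple[float, int]:
    """Run a zero-argument loader once; return (elapsed seconds, number of documents)."""
    start = time.perf_counter()
    docs = load()
    elapsed = time.perf_counter() - start
    return elapsed, len(docs)


def compare_loaders(loaders: dict[str, Callable[[], list]]) -> dict[str, tuple[float, int]]:
    """Time each named loader so speed and output size can be compared side by side."""
    return {name: time_loader(load) for name, load in loaders.items()}
```

Timing covers speed only. Output quality still has to be compared by inspecting the extracted text, as was done above.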


To improve retrieval quality, we can group document content by similar context, so that each retrieved chunk carries more coherent context.


## Chunking strategy

- `RecursiveCharacterTextSplitter`
- `SemanticChunker`
  - **Improved coherence:** Chunks are more likely to contain complete thoughts or ideas.
  - **Better retrieval relevance:** By preserving context, retrieval accuracy may be enhanced.
  - **Adaptability:** The chunking method can be adjusted based on the nature of the documents and retrieval needs.
  - **Potential for better understanding:** LLMs or downstream tasks may perform better with more coherent text segments.
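`SemanticChunker` (from `langchain_experimental`) works differently from fixed-size splitting. It embeds sentences and starts a new chunk wherever the embedding distance between adjacent sentences is unusually large, by default above a percentile threshold. The sketch below illustrates only that idea and is not the library's code. `embed` is a toy bag-of-words stand-in for a real embedding model, the percentile uses the nearest-rank method, and several details of the real class are omitted.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, percentile: float = 95.0) -> list[str]:
    """Start a new chunk where adjacent sentences are unusually far apart."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if len(sentences) < 2:
        return sentences
    vectors = [embed(s) for s in sentences]
    distances = [1 - cosine(vectors[i], vectors[i + 1]) for i in range(len(vectors) - 1)]
    # Breakpoint threshold: nearest-rank percentile of the adjacent-sentence distances.
    ranked = sorted(distances)
    threshold = ranked[max(0, math.ceil(percentile / 100 * len(ranked)) - 1)]

    chunks, current = [], [sentences[0]]
    for sentence, dist in zip(sentences[1:], distances):
        if dist > threshold:  # topic shift: close the current chunk
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
    chunks.append(" ".join(current))
    return chunks
```

Each chunk ends at a topic shift rather than at a character count. This is the coherence benefit listed above, traded against having to embed every sentence at ingestion time.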


## Advanced retrieval techniques tried

1. **Context enrichment**: creates some duplicates; the cause still needs to be investigated.
2. **Contextual compression**: produces better responses but takes longer. We still need to check whether streaming helps.
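The duplicates seen with context enrichment may come from neighbouring hits whose context windows overlap, so that the same chunk is returned twice. This is a hypothesis and has not been confirmed. The sketch below assumes chunks are stored in document order and that retrieval returns their indices (`hit_ids` is a hypothetical name). It expands each hit by `window` neighbours and merges overlapping windows into contiguous passages, so no chunk appears twice.

```python
def enrich_with_neighbours(hit_ids: list[int], chunks: list[str], window: int = 1) -> list[str]:
    """Expand each retrieved chunk with its neighbours, merging overlapping windows."""
    keep: set[int] = set()
    for i in hit_ids:
        lo, hi = max(0, i - window), min(len(chunks) - 1, i + window)
        keep.update(range(lo, hi + 1))

    # Group consecutive indices into contiguous passages so no chunk is emitted twice.
    passages, run = [], []
    for i in sorted(keep):
        if run and i != run[-1] + 1:
            passages.append(" ".join(chunks[j] for j in run))
            run = []
        run.append(i)
    if run:
        passages.append(" ".join(chunks[j] for j in run))
    return passages
```

Without the merge step, hits 1 and 2 with `window=1` would yield "c0 c1 c2" and "c1 c2 c3", which repeats two chunks.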


More techniques will be explored later.


After experimenting with the chunking strategies above, we found that `RecursiveCharacterTextSplitter` combined with contextual compression gave better results.
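In LangChain, contextual compression is provided by `ContextualCompressionRetriever`. It wraps a base retriever and passes each retrieved document through a compressor such as `LLMChainExtractor`. When the compressor calls an LLM for every document, that extra step is a likely source of the added latency mentioned earlier. The sketch below shows only the shape of the idea, with keyword overlap standing in for the LLM. `STOPWORDS`, `compress`, and `min_overlap` are illustrative names, not library API.

```python
import re

# Illustrative stopword list so that filler words do not count as relevance.
STOPWORDS = {"a", "an", "and", "are", "do", "does", "for", "how", "in", "is",
             "of", "on", "the", "to", "what"}


def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def compress(query: str, documents: list[str], min_overlap: int = 1) -> list[str]:
    """Keep only the sentences of each retrieved document that share terms with the query."""
    query_terms = terms(query)
    compressed = []
    for doc in documents:
        sentences = re.split(r"(?<=[.!?])\s+", doc.strip())
        relevant = [s for s in sentences if len(query_terms & terms(s)) >= min_overlap]
        if relevant:  # drop documents with nothing relevant left
            compressed.append(" ".join(relevant))
    return compressed
```

The generator then sees less but more relevant context. That is consistent with the better responses noted above, while the cost moves to the compression step.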


# Choice of embedding model


The quality of generation depends directly on the quality of retrieval, and at the same time we wanted a smaller model that still performs well. I chose the `snowflake-arctic-embed-l` embedding model because it is small, with 334 million parameters, and produces 1024-dimensional embeddings. It currently ranks 27th on the MTEB leaderboard, which suggests it competes efficiently with much larger models.
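With `snowflake-arctic-embed-l`, every chunk and query is mapped to a 1024-dimensional vector. The vector store then returns the chunks whose vectors are most similar to the query vector, commonly by cosine similarity. The sketch below shows that ranking step on tiny hand-made 3-dimensional vectors. In the real pipeline, the vectors would come from the embedding model and the search would run inside the vector database.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 3) -> list[int]:
    """Return indices of the k chunk vectors most similar to the query vector."""
    if any(len(v) != len(query_vec) for v in chunk_vecs):
        # Query and chunks must be embedded by the same model (e.g. all 1024-d).
        raise ValueError("query and chunk embeddings must have the same dimension")
    scores = [(cosine(query_vec, v), i) for i, v in enumerate(chunk_vecs)]
    return [i for _, i in sorted(scores, key=lambda s: s[0], reverse=True)[:k]]
```

The dimension check reflects a practical constraint. Switching embedding models, for example to a fine-tuned variant, means re-embedding the stored chunks as well.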


---


Your response effectively breaks down the documents and uses a clear, methodical approach to answering the task. However, to enhance the response further, consider the following improvements:


### 1. **Aligning Chunking Strategy with Context**


- **Current Strategy**: You mention using `RecursiveCharacterTextSplitter` and `SemanticChunker`, which is a good start.


- **Improvement**: Since both documents have well-defined sections and are structured (NIST RMF includes clear sections, and AI Bill of Rights is principles-based), it would be beneficial to first chunk based on sections and subsections, while combining it with context-based chunking. Instead of focusing on one chunking method, you can adapt based on the structure of each document.


- **Dynamic Chunking**: Also, mention how the method would dynamically adapt to less-structured documents if uploaded in the future, ensuring scalability.


### 2. **Specific Chunking Examples**


- **Blueprint for AI Bill of Rights**:
  - Principles can form separate chunks (e.g., *Safe and Effective Systems*, *Algorithmic Discrimination Protections*).
  - Subsections can further break down the examples or cases cited under each principle.
- **NIST AI RMF**:
  - As each section (such as "Suggested Actions to Manage Risks" or "GAI Risk Overview") has detailed subcategories, chunk them accordingly.
  - Include how you will preserve context when chunking specific actions.


### 3. **Incorporating Expected Questions**


- You have already listed good examples of user questions. However, for the purpose of improving retrieval:
  - **Enhance Contextual Retrieval**: Suggest tailoring your vector store to group similar questions by topic, such as data privacy, bias prevention, and AI safety. This allows better retrieval of relevant chunks across both documents when users ask questions.
  - **Example**: A question about "data privacy" should retrieve answers both from the *Data Privacy* section of the AI Bill of Rights and the *Data Privacy Risks* section of the NIST RMF, creating a more comprehensive answer.
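One way to act on the "data privacy" example is to rank chunks within each source document separately and then merge the results. This prevents a high-scoring document from crowding the other one out of a global top-k. This is a sketch under assumptions and not part of the current pipeline. Each chunk is represented as a hypothetical `(score, source, text)` tuple, where `source` could come from the document metadata recorded at load time.

```python
def retrieve_from_each_source(scored_chunks: list[tuple[float, str, str]],
                              k_per_source: int = 2) -> list[tuple[float, str, str]]:
    """Take the top-k chunks from each source document, then merge them by score.

    Each item is (similarity score, source name, chunk text).
    """
    by_source: dict[str, list[tuple[float, str, str]]] = {}
    for item in scored_chunks:
        by_source.setdefault(item[1], []).append(item)

    merged: list[tuple[float, str, str]] = []
    for items in by_source.values():
        # Best k chunks from this source only.
        merged.extend(sorted(items, key=lambda t: t[0], reverse=True)[:k_per_source])
    return sorted(merged, key=lambda t: t[0], reverse=True)
```

With the sample scores in the test below, a plain global top-2 would return only NIST chunks, whereas the per-source version guarantees one chunk from each document.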


### 4. **Document Summarization in the Vector Store**


- If possible, create summaries of sections and topics within both documents and store them in your vector database. Summaries allow quick lookup without scanning every chunk.


### 5. **Advanced Techniques**


- **Context Enrichment**: Mention that it needs further investigation but is a promising avenue. Focus on eliminating duplication by refining preprocessing or filtering steps when enriching.


- **Contextual Compression**: Explain how you might use this to trim retrieved chunks down to the passages relevant to the query, which could be useful for long or dense document sections.


### 6. **Handling Duplicate Content**


- Add a comment about how duplicate information across different sections can be handled by maintaining a cache or reference of repeated content in different chunks to avoid redundancies.
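As suggested above, exact and near-exact duplicates can be handled with a cache keyed on a hash of the normalised chunk text. The sketch below is illustrative. `normalise` only lower-cases text and collapses whitespace, so paraphrased duplicates would still need an embedding-similarity check.

```python
import hashlib
import re


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(chunks: list[str]) -> tuple[list[str], dict[str, int]]:
    """Drop repeated chunks; return kept chunks and a hash -> index-in-kept reference map."""
    seen: dict[str, int] = {}
    kept: list[str] = []
    for chunk in chunks:
        key = hashlib.sha256(normalise(chunk).encode("utf-8")).hexdigest()
        if key not in seen:
            seen[key] = len(kept)  # reference to the first stored copy
            kept.append(chunk)
    return kept, seen
```

The returned map acts as the "reference of repeated content". A later chunk whose hash is already present can point to the stored copy instead of being embedded again.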


### 7. **Performance and Efficiency**


- Since `PyPDFium2Loader` is slower, clarify that you will use it only if high-quality, OCR-accurate extraction is critical, but `PyMuPDFLoader` is your preferred option for efficiency and initial loading. This could be useful for streaming applications.


### Enhanced Structure for Response:


1. **Problem Statement**
   - Continue with the problem definition, but expand on the real-world implications of ethics and risk management in AI.

2. **Understanding the Data**
   - Break the two documents down clearly into sections and discuss specific strategies for how chunking can preserve meaning within these sections.

3. **Advanced Retrieval & Chunking**
   - Expand this section to include the chunking methods you've outlined, and specify the improvements you will explore (e.g., dynamic chunking, context-based grouping).

4. **Performance Considerations**
   - Detail how you will balance quality and performance based on user needs and document types.


This would strengthen your approach, improving both the technical accuracy and user experience.


Tasks/Task 1/pre-processing.ipynb

ADDED
