Spaces:

Runtime error

Runtime error

Matthijs Hollemans

commited on

Commit

•

0ecd9fb

1

Parent(s):

e2a288e

add app

Browse files- .gitattributes +1 -0

- .gitignore +4 -0

- LICENSE +21 -0

- app.py +261 -0

- checkpoints/image2reverb_f22.ckpt +3 -0

- checkpoints/mono_odom_640x192/depth.pth +3 -0

- checkpoints/mono_odom_640x192/encoder.pth +3 -0

- checkpoints/mono_odom_640x192/pose.pth +3 -0

- checkpoints/mono_odom_640x192/pose_encoder.pth +3 -0

- checkpoints/mono_odom_640x192/poses.npy +3 -0

- examples/input.0c3f5013.png +0 -0

- examples/input.2238dc21.png +0 -0

- examples/input.321eef38.png +0 -0

- examples/input.4d280b40.png +0 -0

- examples/input.4e2f71f6.png +0 -0

- examples/input.5416407f.png +0 -0

- examples/input.67bc502e.png +0 -0

- examples/input.98773b90.png +0 -0

- examples/input.ac61500f.png +0 -0

- examples/input.c9ee9d49.png +0 -0

- image2reverb/dataset.py +96 -0

- image2reverb/layers.py +88 -0

- image2reverb/mel.py +20 -0

- image2reverb/model.py +207 -0

- image2reverb/networks.py +344 -0

- image2reverb/stft.py +23 -0

- image2reverb/util.py +167 -0

- model.jpg +0 -0

- requirements.txt +6 -0

.gitattributes

CHANGED

|

@@ -28,6 +28,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 28 |

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 29 |

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.wasm filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 31 |

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 28 |

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 29 |

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.wav filter=lfs diff=lfs merge=lfs -text

|

| 32 |

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.pyc

|

| 2 |

+

__pycache__/

|

| 3 |

+

.DS_Store

|

| 4 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2020

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

app.py

ADDED

|

@@ -0,0 +1,261 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Hacked together using the code from https://github.com/nikhilsinghmus/image2reverb

|

| 2 |

+

|

| 3 |

+

import os, types

|

| 4 |

+

import numpy as np

|

| 5 |

+

import gradio as gr

|

| 6 |

+

import soundfile as sf

|

| 7 |

+

import scipy

|

| 8 |

+

import librosa.display

|

| 9 |

+

from PIL import Image

|

| 10 |

+

|

| 11 |

+

import matplotlib

|

| 12 |

+

matplotlib.use("Agg")

|

| 13 |

+

import matplotlib.pyplot as plt

|

| 14 |

+

|

| 15 |

+

import torch

|

| 16 |

+

from torch.utils.data import Dataset

|

| 17 |

+

import torchvision.transforms as transforms

|

| 18 |

+

from pytorch_lightning import Trainer

|

| 19 |

+

|

| 20 |

+

from image2reverb.model import Image2Reverb

|

| 21 |

+

from image2reverb.stft import STFT

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

predicted_ir = None

|

| 25 |

+

predicted_spectrogram = None

|

| 26 |

+

predicted_depthmap = None

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

def test_step(self, batch, batch_idx):

|

| 30 |

+

spec, label, paths = batch

|

| 31 |

+

examples = [os.path.splitext(os.path.basename(s))[0] for _, s in zip(*paths)]

|

| 32 |

+

|

| 33 |

+

f, img = self.enc.forward(label)

|

| 34 |

+

|

| 35 |

+

shape = (

|

| 36 |

+

f.shape[0],

|

| 37 |

+

(self._latent_dimension - f.shape[1]) if f.shape[1] < self._latent_dimension else f.shape[1],

|

| 38 |

+

f.shape[2],

|

| 39 |

+

f.shape[3]

|

| 40 |

+

)

|

| 41 |

+

z = torch.cat((f, torch.randn(shape, device=model.device)), 1)

|

| 42 |

+

|

| 43 |

+

fake_spec = self.g(z)

|

| 44 |

+

|

| 45 |

+

stft = STFT()

|

| 46 |

+

y_f = [stft.inverse(s.squeeze()) for s in fake_spec]

|

| 47 |

+

|

| 48 |

+

# TODO: bit hacky

|

| 49 |

+

global predicted_ir, predicted_spectrogram, predicted_depthmap

|

| 50 |

+

predicted_ir = y_f[0]

|

| 51 |

+

|

| 52 |

+

s = fake_spec.squeeze().cpu().numpy()

|

| 53 |

+

predicted_spectrogram = np.exp((((s + 1) * 0.5) * 19.5) - 17.5) - 1e-8

|

| 54 |

+

|

| 55 |

+

img = (img + 1) * 0.5

|

| 56 |

+

predicted_depthmap = img.cpu().squeeze().permute(1, 2, 0)[:,:,-1].squeeze().numpy()

|

| 57 |

+

|

| 58 |

+

return {"test_audio": y_f, "test_examples": examples}

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

def test_epoch_end(self, outputs):

|

| 62 |

+

if not self.test_callback:

|

| 63 |

+

return

|

| 64 |

+

|

| 65 |

+

examples = []

|

| 66 |

+

audio = []

|

| 67 |

+

|

| 68 |

+

for output in outputs:

|

| 69 |

+

for i in range(len(output["test_examples"])):

|

| 70 |

+

audio.append(output["test_audio"][i])

|

| 71 |

+

examples.append(output["test_examples"][i])

|

| 72 |

+

|

| 73 |

+

self.test_callback(examples, audio)

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

checkpoint_path = "./checkpoints/image2reverb_f22.ckpt"

|

| 77 |

+

encoder_path = None

|

| 78 |

+

depthmodel_path = "./checkpoints/mono_odom_640x192"

|

| 79 |

+

constant_depth = None

|

| 80 |

+

latent_dimension = 512

|

| 81 |

+

|

| 82 |

+

model = Image2Reverb(encoder_path, depthmodel_path)

|

| 83 |

+

m = torch.load(checkpoint_path, map_location=model.device)

|

| 84 |

+

model.load_state_dict(m["state_dict"])

|

| 85 |

+

|

| 86 |

+

model.test_step = types.MethodType(test_step, model)

|

| 87 |

+

model.test_epoch_end = types.MethodType(test_epoch_end, model)

|

| 88 |

+

|

| 89 |

+

image_transforms = transforms.Compose([

|

| 90 |

+

transforms.Resize([224, 224], transforms.functional.InterpolationMode.BICUBIC),

|

| 91 |

+

transforms.ToTensor(),

|

| 92 |

+

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

|

| 93 |

+

])

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

class Image2ReverbDemoDataset(Dataset):

|

| 97 |

+

def __init__(self, image):

|

| 98 |

+

self.image = Image.fromarray(image)

|

| 99 |

+

self.stft = STFT()

|

| 100 |

+

|

| 101 |

+

def __getitem__(self, index):

|

| 102 |

+

img_tensor = image_transforms(self.image.convert("RGB"))

|

| 103 |

+

return torch.zeros(1, int(5.94 * 22050)), img_tensor, ("", "")

|

| 104 |

+

|

| 105 |

+

def __len__(self):

|

| 106 |

+

return 1

|

| 107 |

+

|

| 108 |

+

def name(self):

|

| 109 |

+

return "Image2ReverbDemo"

|

| 110 |

+

|

| 111 |

+

|

| 112 |

+

def convolve(audio, reverb):

|

| 113 |

+

# convolve audio with reverb

|

| 114 |

+

wet_audio = np.concatenate((audio, np.zeros(reverb.shape)))

|

| 115 |

+

wet_audio = scipy.signal.oaconvolve(wet_audio, reverb, "full")[:len(wet_audio)]

|

| 116 |

+

|

| 117 |

+

# normalize audio to roughly -1 dB peak and remove DC offset

|

| 118 |

+

wet_audio /= np.max(np.abs(wet_audio))

|

| 119 |

+

wet_audio -= np.mean(wet_audio)

|

| 120 |

+

wet_audio *= 0.9

|

| 121 |

+

return wet_audio

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

def predict(image, audio):

|

| 125 |

+

# image = numpy (height, width, channels)

|

| 126 |

+

# audio = tuple (sample_rate, frames) or (sample_rate, (frames, channels))

|

| 127 |

+

|

| 128 |

+

test_set = Image2ReverbDemoDataset(image)

|

| 129 |

+

test_loader = torch.utils.data.DataLoader(test_set, num_workers=0, batch_size=1)

|

| 130 |

+

trainer = Trainer(limit_test_batches=1)

|

| 131 |

+

trainer.test(model, test_loader, verbose=True)

|

| 132 |

+

|

| 133 |

+

# depthmap output

|

| 134 |

+

depthmap_fig = plt.figure()

|

| 135 |

+

plt.imshow(predicted_depthmap)

|

| 136 |

+

plt.close()

|

| 137 |

+

|

| 138 |

+

# spectrogram output

|

| 139 |

+

spectrogram_fig = plt.figure()

|

| 140 |

+

librosa.display.specshow(predicted_spectrogram, sr=22050, x_axis="time", y_axis="hz")

|

| 141 |

+

plt.close()

|

| 142 |

+

|

| 143 |

+

# plot the IR as a waveform

|

| 144 |

+

waveform_fig = plt.figure()

|

| 145 |

+

librosa.display.waveshow(predicted_ir, sr=22050, alpha=0.5)

|

| 146 |

+

plt.close()

|

| 147 |

+

|

| 148 |

+

# output audio as 16-bit signed integer

|

| 149 |

+

ir = (22050, (predicted_ir * 32767).astype(np.int16))

|

| 150 |

+

|

| 151 |

+

sample_rate, original_audio = audio

|

| 152 |

+

|

| 153 |

+

# incoming audio is 16-bit signed integer, convert to float and normalize

|

| 154 |

+

original_audio = original_audio.astype(np.float32) / 32768.0

|

| 155 |

+

original_audio /= np.max(np.abs(original_audio))

|

| 156 |

+

|

| 157 |

+

# resample reverb to sample_rate first, also normalize

|

| 158 |

+

reverb = predicted_ir.copy()

|

| 159 |

+

reverb = scipy.signal.resample_poly(reverb, up=sample_rate, down=22050)

|

| 160 |

+

reverb /= np.max(np.abs(reverb))

|

| 161 |

+

|

| 162 |

+

# stereo?

|

| 163 |

+

if len(original_audio.shape) > 1:

|

| 164 |

+

wet_left = convolve(original_audio[:, 0], reverb)

|

| 165 |

+

wet_right = convolve(original_audio[:, 1], reverb)

|

| 166 |

+

wet_audio = np.concatenate([wet_left[:, None], wet_right[:, None]], axis=1)

|

| 167 |

+

else:

|

| 168 |

+

wet_audio = convolve(original_audio, reverb)

|

| 169 |

+

|

| 170 |

+

# 50% dry-wet mix

|

| 171 |

+

mixed_audio = wet_audio * 0.5

|

| 172 |

+

mixed_audio[:len(original_audio), ...] += original_audio * 0.9 * 0.5

|

| 173 |

+

|

| 174 |

+

# convert back to 16-bit signed integer

|

| 175 |

+

wet_audio = (wet_audio * 32767).astype(np.int16)

|

| 176 |

+

mixed_audio = (mixed_audio * 32767).astype(np.int16)

|

| 177 |

+

|

| 178 |

+

convolved_audio_100 = (sample_rate, wet_audio)

|

| 179 |

+

convolved_audio_50 = (sample_rate, mixed_audio)

|

| 180 |

+

|

| 181 |

+

return depthmap_fig, spectrogram_fig, waveform_fig, ir, convolved_audio_100, convolved_audio_50

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

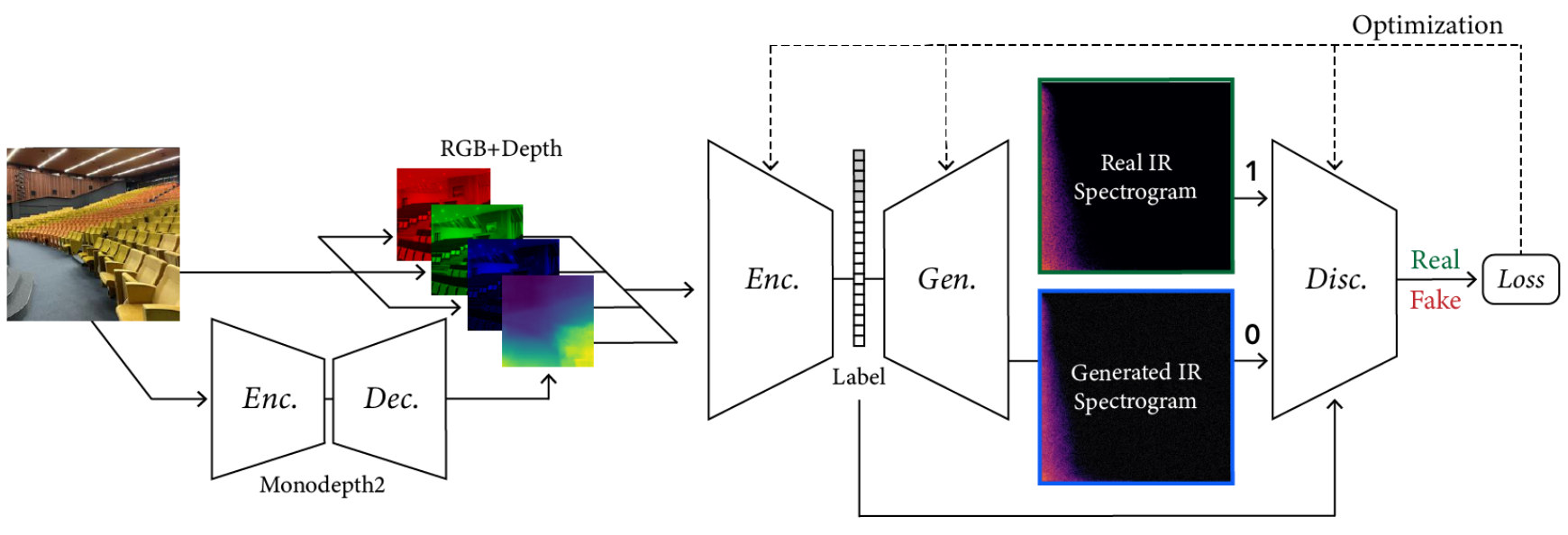

title = "Image2Reverb: Cross-Modal Reverb Impulse Response Synthesis"

|

| 185 |

+

|

| 186 |

+

description = """

|

| 187 |

+

<b>Image2Reverb</b> predicts the acoustic reverberation of a given environment from a 2D image. <a href="https://arxiv.org/abs/2103.14201">Read the paper</a>

|

| 188 |

+

|

| 189 |

+

How to use: Choose an image of a room or other environment and an audio file.

|

| 190 |

+

The model will predict what the reverb of the room sounds like and applies this to the audio file.

|

| 191 |

+

|

| 192 |

+

First, the image is resized to 224×224. The monodepth model is used to predict a depthmap, which is added as an

|

| 193 |

+

additional channel to the image input. A ResNet-based encoder then converts the image into features, and

|

| 194 |

+

finally a GAN predicts the spectrogram of the reverb's impulse response.

|

| 195 |

+

|

| 196 |

+

<center><img src="file/model.jpg" width="870" height="297" alt="model architecture"></center>

|

| 197 |

+

|

| 198 |

+

The predicted impulse response is mono 22050 kHz. It is upsampled to the sampling rate of the audio

|

| 199 |

+

file and applied to both channels if the audio is stereo.

|

| 200 |

+

Generating the impulse response involves a certain amount of randomness, making it sound a little

|

| 201 |

+

different every time you try it.

|

| 202 |

+

"""

|

| 203 |

+

|

| 204 |

+

article = """

|

| 205 |

+

<div style='margin:20px auto;'>

|

| 206 |

+

|

| 207 |

+

<p>Based on original work by Nikhil Singh, Jeff Mentch, Jerry Ng, Matthew Beveridge, Iddo Drori.

|

| 208 |

+

<a href="https://web.media.mit.edu/~nsingh1/image2reverb/">Project Page</a> |

|

| 209 |

+

<a href="https://arxiv.org/abs/2103.14201">Paper</a> |

|

| 210 |

+

<a href="https://github.com/nikhilsinghmus/image2reverb">GitHub</a></p>

|

| 211 |

+

|

| 212 |

+

<pre>

|

| 213 |

+

@InProceedings{Singh_2021_ICCV,

|

| 214 |

+

author = {Singh, Nikhil and Mentch, Jeff and Ng, Jerry and Beveridge, Matthew and Drori, Iddo},

|

| 215 |

+

title = {Image2Reverb: Cross-Modal Reverb Impulse Response Synthesis},

|

| 216 |

+

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

|

| 217 |

+

month = {October},

|

| 218 |

+

year = {2021},

|

| 219 |

+

pages = {286-295}

|

| 220 |

+

}

|

| 221 |

+

</pre>

|

| 222 |

+

|

| 223 |

+

<p>🌠 Example images from <a href="https://web.media.mit.edu/~nsingh1/image2reverb/">the original project page</a>.</p>

|

| 224 |

+

|

| 225 |

+

<p>🎶 Example sound from <a href="https://freesound.org/people/ashesanddreams/sounds/610414/">Ashes and Dreams @ freesound.org</a> (CC BY 4.0 license). This is a mono 48 kHz recording that has no reverb on it.</p>

|

| 226 |

+

|

| 227 |

+

</div>

|

| 228 |

+

"""

|

| 229 |

+

|

| 230 |

+

audio_example = "examples/ashesanddreams.wav"

|

| 231 |

+

|

| 232 |

+

examples = [

|

| 233 |

+

["examples/input.4e2f71f6.png", audio_example],

|

| 234 |

+

["examples/input.321eef38.png", audio_example],

|

| 235 |

+

["examples/input.2238dc21.png", audio_example],

|

| 236 |

+

["examples/input.4d280b40.png", audio_example],

|

| 237 |

+

["examples/input.0c3f5013.png", audio_example],

|

| 238 |

+

["examples/input.98773b90.png", audio_example],

|

| 239 |

+

["examples/input.ac61500f.png", audio_example],

|

| 240 |

+

["examples/input.5416407f.png", audio_example],

|

| 241 |

+

]

|

| 242 |

+

|

| 243 |

+

gr.Interface(

|

| 244 |

+

fn=predict,

|

| 245 |

+

inputs=[

|

| 246 |

+

gr.inputs.Image(label="Upload Image"),

|

| 247 |

+

gr.inputs.Audio(label="Upload Audio", source="upload"),

|

| 248 |

+

],

|

| 249 |

+

outputs=[

|

| 250 |

+

gr.Plot(label="Depthmap"),

|

| 251 |

+

gr.Plot(label="Impulse Response Spectrogram"),

|

| 252 |

+

gr.Plot(label="Impulse Response Waveform"),

|

| 253 |

+

gr.outputs.Audio(label="Impulse Response"),

|

| 254 |

+

gr.outputs.Audio(label="Output Audio (100% Wet)"),

|

| 255 |

+

gr.outputs.Audio(label="Output Audio (50% Dry, 50% Wet)"),

|

| 256 |

+

],

|

| 257 |

+

title=title,

|

| 258 |

+

description=description,

|

| 259 |

+

article=article,

|

| 260 |

+

examples=examples,

|

| 261 |

+

).launch()

|

checkpoints/image2reverb_f22.ckpt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d61422e95dc963e258b68536dc8135633a999c3a85a5a80925878ff75ca092e3

|

| 3 |

+

size 687498725

|

checkpoints/mono_odom_640x192/depth.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3a2f542e274a5b0567e3118bc16aea4c2f44ba09df4a08a6c3a47d6d98285b72

|

| 3 |

+

size 12617260

|

checkpoints/mono_odom_640x192/encoder.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:acbf2534608f06be40eecd5026c505ebd0c1d9442fe5864abba1b5d90bff2e3e

|

| 3 |

+

size 46819013

|

checkpoints/mono_odom_640x192/pose.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b4da0fe66fc1f781a05d8c4778f33ffa1851c219cb7fd561328479f5b439707e

|

| 3 |

+

size 5259718

|

checkpoints/mono_odom_640x192/pose_encoder.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:df8659ecf4363335c13ffc4510ff34556715c7f6435707622c3641a7fe055eb2

|

| 3 |

+

size 46856589

|

checkpoints/mono_odom_640x192/poses.npy

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:71a413ff381d4a58345e9152e0ca8d0b45a71e550df7730633a8cf7693edcced

|

| 3 |

+

size 76928

|

examples/input.0c3f5013.png

ADDED

|

examples/input.2238dc21.png

ADDED

|

examples/input.321eef38.png

ADDED

|

examples/input.4d280b40.png

ADDED

|

examples/input.4e2f71f6.png

ADDED

|

examples/input.5416407f.png

ADDED

|

examples/input.67bc502e.png

ADDED

|

examples/input.98773b90.png

ADDED

|

examples/input.ac61500f.png

ADDED

|

examples/input.c9ee9d49.png

ADDED

|

image2reverb/dataset.py

ADDED

|

@@ -0,0 +1,96 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import soundfile

|

| 3 |

+

import torch

|

| 4 |

+

import torchvision.transforms as transforms

|

| 5 |

+

from torch.utils.data import Dataset

|

| 6 |

+

from PIL import Image

|

| 7 |

+

from .stft import STFT

|

| 8 |

+

from .mel import LogMel

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

F_EXTENSIONS = [

|

| 12 |

+

".jpg", ".JPG", ".jpeg", ".JPEG",

|

| 13 |

+

".png", ".PNG", ".ppm", ".PPM", ".bmp", ".BMP", ".tiff", ".wav", ".WAV", ".aif", ".aiff", ".AIF", ".AIFF"

|

| 14 |

+

]

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

def is_image_audio_file(filename):

|

| 18 |

+

return any(filename.endswith(extension) for extension in F_EXTENSIONS)

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

def make_dataset(dir, extensions=F_EXTENSIONS):

|

| 22 |

+

images = []

|

| 23 |

+

assert os.path.isdir(dir), "%s is not a valid directory." % dir

|

| 24 |

+

|

| 25 |

+

for root, _, fnames in sorted(os.walk(dir)):

|

| 26 |

+

for fname in fnames:

|

| 27 |

+

if is_image_audio_file(fname):

|

| 28 |

+

path = os.path.join(root, fname)

|

| 29 |

+

images.append(path)

|

| 30 |

+

|

| 31 |

+

return images

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

class Image2ReverbDataset(Dataset):

|

| 35 |

+

def __init__(self, dataroot, phase="train", spec="stft"):

|

| 36 |

+

self.root = dataroot

|

| 37 |

+

self.stft = LogMel() if spec == "mel" else STFT()

|

| 38 |

+

|

| 39 |

+

### input A (images)

|

| 40 |

+

dir_A = "_A"

|

| 41 |

+

self.dir_A = os.path.join(self.root, phase + dir_A)

|

| 42 |

+

self.A_paths = sorted(make_dataset(self.dir_A))

|

| 43 |

+

|

| 44 |

+

### input B (audio)

|

| 45 |

+

dir_B = "_B"

|

| 46 |

+

self.dir_B = os.path.join(self.root, phase + dir_B)

|

| 47 |

+

self.B_paths = sorted(make_dataset(self.dir_B))

|

| 48 |

+

|

| 49 |

+

def __getitem__(self, index):

|

| 50 |

+

if index > len(self):

|

| 51 |

+

return None

|

| 52 |

+

### input A (images)

|

| 53 |

+

A_path = self.A_paths[index]

|

| 54 |

+

A = Image.open(A_path)

|

| 55 |

+

t = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

|

| 56 |

+

A_tensor = t(A.convert("RGB"))

|

| 57 |

+

|

| 58 |

+

### input B (audio)

|

| 59 |

+

B_path = self.B_paths[index]

|

| 60 |

+

B, _ = soundfile.read(B_path)

|

| 61 |

+

B_spec = self.stft.transform(B)

|

| 62 |

+

|

| 63 |

+

return B_spec, A_tensor, (B_path, A_path)

|

| 64 |

+

|

| 65 |

+

def __len__(self):

|

| 66 |

+

return len(self.A_paths)

|

| 67 |

+

|

| 68 |

+

def name(self):

|

| 69 |

+

return "Image2Reverb"

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

class Image2ReverbDemoDataset(Dataset):

|

| 73 |

+

def __init__(self, image_paths):

|

| 74 |

+

if isinstance(image_paths, str) and os.path.isdir(image_paths):

|

| 75 |

+

self.paths = sorted(make_dataset(image_paths, [".jpg", ".JPG", ".jpeg", ".JPEG", ".png", ".PNG", ".ppm", ".PPM", ".bmp", ".BMP", ".tiff"]))

|

| 76 |

+

else:

|

| 77 |

+

self.paths = sorted(image_paths)

|

| 78 |

+

|

| 79 |

+

self.stft = STFT()

|

| 80 |

+

|

| 81 |

+

def __getitem__(self, index):

|

| 82 |

+

if index > len(self):

|

| 83 |

+

return None

|

| 84 |

+

### input A (images)

|

| 85 |

+

path = self.paths[index]

|

| 86 |

+

img = Image.open(path)

|

| 87 |

+

t = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

|

| 88 |

+

img_tensor = t(img.convert("RGB"))

|

| 89 |

+

|

| 90 |

+

return torch.zeros(1, int(5.94 * 22050)), img_tensor, ("", path)

|

| 91 |

+

|

| 92 |

+

def __len__(self):

|

| 93 |

+

return len(self.paths)

|

| 94 |

+

|

| 95 |

+

def name(self):

|

| 96 |

+

return "Image2ReverbDemo"

|

image2reverb/layers.py

ADDED

|

@@ -0,0 +1,88 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

import torch.nn as nn

|

| 3 |

+

import torch.nn.functional as F

|

| 4 |

+

from torch.nn.init import kaiming_normal_, calculate_gain

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

class PixelWiseNormLayer(nn.Module):

|

| 8 |

+

"""PixelNorm layer. Implementation is from https://github.com/shanexn/pytorch-pggan."""

|

| 9 |

+

def __init__(self):

|

| 10 |

+

super().__init__()

|

| 11 |

+

|

| 12 |

+

def forward(self, x):

|

| 13 |

+

return x/torch.sqrt(torch.mean(x ** 2, dim=1, keepdim=True) + 1e-8)

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

class MiniBatchAverageLayer(nn.Module):

|

| 17 |

+

"""Minibatch stat concatenation layer. Implementation is from https://github.com/shanexn/pytorch-pggan."""

|

| 18 |

+

def __init__(self, offset=1e-8):

|

| 19 |

+

super().__init__()

|

| 20 |

+

self.offset = offset

|

| 21 |

+

|

| 22 |

+

def forward(self, x):

|

| 23 |

+

stddev = torch.sqrt(torch.mean((x - torch.mean(x, dim=0, keepdim=True))**2, dim=0, keepdim=True) + self.offset)

|

| 24 |

+

inject_shape = list(x.size())[:]

|

| 25 |

+

inject_shape[1] = 1

|

| 26 |

+

inject = torch.mean(stddev, dim=1, keepdim=True)

|

| 27 |

+

inject = inject.expand(inject_shape)

|

| 28 |

+

return torch.cat((x, inject), dim=1)

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

class EqualizedLearningRateLayer(nn.Module):

|

| 32 |

+

"""Applies equalized learning rate to the preceding layer. Implementation is from https://github.com/shanexn/pytorch-pggan."""

|

| 33 |

+

def __init__(self, layer):

|

| 34 |

+

super().__init__()

|

| 35 |

+

self.layer_ = layer

|

| 36 |

+

|

| 37 |

+

kaiming_normal_(self.layer_.weight, a=calculate_gain("conv2d"))

|

| 38 |

+

self.layer_norm_constant_ = (torch.mean(self.layer_.weight.data ** 2)) ** 0.5

|

| 39 |

+

self.layer_.weight.data.copy_(self.layer_.weight.data / self.layer_norm_constant_)

|

| 40 |

+

|

| 41 |

+

self.bias_ = self.layer_.bias if self.layer_.bias else None

|

| 42 |

+

self.layer_.bias = None

|

| 43 |

+

|

| 44 |

+

def forward(self, x):

|

| 45 |

+

self.layer_norm_constant_ = self.layer_norm_constant_.type(torch.cuda.FloatTensor if torch.cuda.is_available() else torch.Tensor)

|

| 46 |

+

x = self.layer_norm_constant_ * x

|

| 47 |

+

if self.bias_ is not None:

|

| 48 |

+

x += self.bias.view(1, self.bias.size()[0], 1, 1)

|

| 49 |

+

return x

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

class ConvBlock(nn.Module):

|

| 53 |

+

"""Layer to perform a convolution followed by ELU

|

| 54 |

+

"""

|

| 55 |

+

def __init__(self, in_channels, out_channels):

|

| 56 |

+

super(ConvBlock, self).__init__()

|

| 57 |

+

|

| 58 |

+

self.conv = Conv3x3(in_channels, out_channels)

|

| 59 |

+

self.nonlin = nn.ELU(inplace=True)

|

| 60 |

+

|

| 61 |

+

def forward(self, x):

|

| 62 |

+

out = self.conv(x)

|

| 63 |

+

out = self.nonlin(out)

|

| 64 |

+

return out

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

class Conv3x3(nn.Module):

|

| 68 |

+

"""Layer to pad and convolve input

|

| 69 |

+

"""

|

| 70 |

+

def __init__(self, in_channels, out_channels, use_refl=True):

|

| 71 |

+

super(Conv3x3, self).__init__()

|

| 72 |

+

|

| 73 |

+

if use_refl:

|

| 74 |

+

self.pad = nn.ReflectionPad2d(1)

|

| 75 |

+

else:

|

| 76 |

+

self.pad = nn.ZeroPad2d(1)

|

| 77 |

+

self.conv = nn.Conv2d(int(in_channels), int(out_channels), 3)

|

| 78 |

+

|

| 79 |

+

def forward(self, x):

|

| 80 |

+

out = self.pad(x)

|

| 81 |

+

out = self.conv(out)

|

| 82 |

+

return out

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def upsample(x):

|

| 86 |

+

"""Upsample input tensor by a factor of 2

|

| 87 |

+

"""

|

| 88 |

+

return F.interpolate(x, scale_factor=2, mode="nearest")

|

image2reverb/mel.py

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import numpy

|

| 2 |

+

import torch

|

| 3 |

+

import librosa

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

class LogMel(torch.nn.Module):

|

| 7 |

+

def __init__(self):

|

| 8 |

+

super().__init__()

|

| 9 |

+

self._eps = 1e-8

|

| 10 |

+

|

| 11 |

+

def transform(self, audio):

|

| 12 |

+

m = librosa.feature.melspectrogram(audio/numpy.abs(audio).max())

|

| 13 |

+

m = numpy.log(m + self._eps)

|

| 14 |

+

return torch.Tensor(((m - m.mean()) / m.std()) * 0.8).unsqueeze(0)

|

| 15 |

+

|

| 16 |

+

def inverse(self, spec):

|

| 17 |

+

s = spec.cpu().detach().numpy()

|

| 18 |

+

s = numpy.exp((s * 5) - 15.96) - self._eps # Empirical mean and standard deviation over test set

|

| 19 |

+

y = librosa.feature.inverse.mel_to_audio(s) # Reconstruct audio

|

| 20 |

+

return y/numpy.abs(y).max()

|

image2reverb/model.py

ADDED

|

@@ -0,0 +1,207 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import json

|

| 3 |

+

import numpy

|

| 4 |

+

import torch

|

| 5 |

+

from torch import nn

|

| 6 |

+

import torch.nn.functional as F

|

| 7 |

+

import pytorch_lightning as pl

|

| 8 |

+

import torchvision

|

| 9 |

+

import pyroomacoustics

|

| 10 |

+

from .networks import Encoder, Generator, Discriminator

|

| 11 |

+

from .stft import STFT

|

| 12 |

+

from .mel import LogMel

|

| 13 |

+

from .util import compare_t60

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

# Hyperparameters

|

| 17 |

+

G_LR = 4e-4

|

| 18 |

+

D_LR = 2e-4

|

| 19 |

+

ENC_LR = 1e-5

|

| 20 |

+

ADAM_BETA = (0.0, 0.99)

|

| 21 |

+

ADAM_EPS = 1e-8

|

| 22 |

+

LAMBDA = 100

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

class Image2Reverb(pl.LightningModule):

|

| 26 |

+

def __init__(self, encoder_path, depthmodel_path, latent_dimension=512, spec="stft", d_threshold=0.2, t60p=True, constant_depth = None, test_callback=None):

|

| 27 |

+

super().__init__()

|

| 28 |

+

self._latent_dimension = latent_dimension

|

| 29 |

+

self._d_threshold = d_threshold

|

| 30 |

+

self.constant_depth = constant_depth

|

| 31 |

+

self.t60p = t60p

|

| 32 |

+

self.confidence = {}

|

| 33 |

+

self.tau = 50

|

| 34 |

+

self.test_callback = test_callback

|

| 35 |

+

self._opt = (d_threshold != None) and (d_threshold > 0) and (d_threshold < 1)

|

| 36 |

+

self.enc = Encoder(encoder_path, depthmodel_path, constant_depth=self.constant_depth, device=self.device)

|

| 37 |

+

self.g = Generator(latent_dimension, spec == "mel")

|

| 38 |

+

self.d = Discriminator(365, spec == "mel")

|

| 39 |

+

self.validation_inputs = []

|

| 40 |

+

self.stft_type = spec

|

| 41 |

+

|

| 42 |

+

def forward(self, x):

|

| 43 |

+

f = self.enc.forward(x)[0]

|

| 44 |

+

z = torch.cat((f, torch.randn((f.shape[0], (self._latent_dimension - f.shape[1]) if f.shape[1] < self._latent_dimension else f.shape[1], f.shape[2], f.shape[3]), device=self.device)), 1)

|

| 45 |

+

return self.g(z)

|

| 46 |

+

|

| 47 |

+

def training_step(self, batch, batch_idx, optimizer_idx):

|

| 48 |

+

opts = None

|

| 49 |

+

if self._opt:

|

| 50 |

+

opts = self.optimizers()

|

| 51 |

+

|

| 52 |

+

spec, label, p = batch

|

| 53 |

+

spec.requires_grad = True # For the backward pass, seems necessary for now

|

| 54 |

+

|

| 55 |

+

# Forward passes through models

|

| 56 |

+

f = self.enc.forward(label)[0]

|

| 57 |

+

z = torch.cat((f, torch.randn((f.shape[0], (self._latent_dimension - f.shape[1]) if f.shape[1] < self._latent_dimension else f.shape[1], f.shape[2], f.shape[3]), device=self.device)), 1)

|

| 58 |

+

fake_spec = self.g(z)

|

| 59 |

+

d_fake = self.d(fake_spec.detach(), f)

|

| 60 |

+

d_real = self.d(spec, f)

|

| 61 |

+

|

| 62 |

+

# Train Generator or Encoder

|

| 63 |

+

if optimizer_idx == 0 or optimizer_idx == 1:

|

| 64 |

+

d_fake2 = self.d(fake_spec.detach(), f)

|

| 65 |

+

G_loss1 = F.mse_loss(d_fake2, torch.ones(d_fake2.shape, device=self.device))

|

| 66 |

+

G_loss2 = F.l1_loss(fake_spec, spec)

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

G_loss = G_loss1 + (LAMBDA * G_loss2)

|

| 70 |

+

if self.t60p:

|

| 71 |

+

t60_err = torch.Tensor([compare_t60(torch.exp(a).sum(-2).squeeze(), torch.exp(b).sum(-2).squeeze()) for a, b in zip(spec, fake_spec)]).to(self.device).mean()

|

| 72 |

+

G_loss += t60_err

|

| 73 |

+

self.log("t60", t60_err, on_step=True, on_epoch=True, prog_bar=True)

|

| 74 |

+

|

| 75 |

+

if self._opt:

|

| 76 |

+

self.manual_backward(G_loss, self.opts[optimizer_idx])

|

| 77 |

+

opts[optimizer_idx].step()

|

| 78 |

+

opts[optimizer_idx].zero_grad()

|

| 79 |

+

|

| 80 |

+

self.log("G", G_loss, on_step=True, on_epoch=True, prog_bar=True)

|

| 81 |

+

|

| 82 |

+

return G_loss

|

| 83 |

+

else: # Train Discriminator

|

| 84 |

+

l_fakeD = F.mse_loss(d_fake, torch.zeros(d_fake.shape, device=self.device))

|

| 85 |

+

l_realD = F.mse_loss(d_real, torch.ones(d_real.shape, device=self.device))

|

| 86 |

+

D_loss = (l_realD + l_fakeD)

|

| 87 |

+

|

| 88 |

+

if self._opt and (D_loss > self._d_threshold):

|

| 89 |

+

self.manual_backward(D_loss, self.opts[optimizer_idx])

|

| 90 |

+

opts[optimizer_idx].step()

|

| 91 |

+

opts[optimizer_idx].zero_grad()

|

| 92 |

+

|

| 93 |

+

self.log("D", D_loss, on_step=True, on_epoch=True, prog_bar=True)

|

| 94 |

+

|

| 95 |

+

return D_loss

|

| 96 |

+

|

| 97 |

+

def configure_optimizers(self):

|

| 98 |

+

g_optim = torch.optim.Adam(self.g.parameters(), lr=G_LR, betas=ADAM_BETA, eps=ADAM_EPS)

|

| 99 |

+

d_optim = torch.optim.Adam(self.d.parameters(), lr=D_LR, betas=ADAM_BETA, eps=ADAM_EPS)

|

| 100 |

+

enc_optim = torch.optim.Adam(self.enc.parameters(), lr=ENC_LR, betas=ADAM_BETA, eps=ADAM_EPS)

|

| 101 |

+

return [enc_optim, g_optim, d_optim], []

|

| 102 |

+

|

| 103 |

+

def validation_step(self, batch, batch_idx):

|

| 104 |

+

spec, label, paths = batch

|

| 105 |

+

examples = [os.path.basename(s[:s.rfind("_")]) for s, _ in zip(*paths)]

|

| 106 |

+

|

| 107 |

+

# Forward passes through models

|

| 108 |

+

f = self.enc.forward(label)[0]

|

| 109 |

+

z = torch.cat((f, torch.randn((f.shape[0], (self._latent_dimension - f.shape[1]) if f.shape[1] < self._latent_dimension else f.shape[1], f.shape[2], f.shape[3]), device=self.device)), 1)

|

| 110 |

+

fake_spec = self.g(z)

|

| 111 |

+

|

| 112 |

+

# Get audio

|

| 113 |

+

stft = LogMel() if self.stft_type == "mel" else STFT()

|

| 114 |

+

y_r = [stft.inverse(s.squeeze()) for s in spec]

|

| 115 |

+

y_f = [stft.inverse(s.squeeze()) for s in fake_spec]

|

| 116 |

+

|

| 117 |

+

# RT60 error (in percentages)

|

| 118 |

+

val_pct = 1

|

| 119 |

+

try:

|

| 120 |

+

f = lambda x : pyroomacoustics.experimental.rt60.measure_rt60(x, 22050)

|

| 121 |

+

t60_r = [f(y) for y in y_r if len(y)]

|

| 122 |

+

t60_f = [f(y) for y in y_f if len(y)]

|

| 123 |

+

val_pct = numpy.mean([((t_b - t_a)/t_a) for t_a, t_b in zip(t60_r, t60_f)])

|

| 124 |

+

except:

|

| 125 |

+

pass

|

| 126 |

+

|

| 127 |

+

return {"val_t60err": val_pct, "val_spec": fake_spec, "val_audio": torch.Tensor(y_f), "val_img": label, "val_examples": examples}

|

| 128 |

+

|

| 129 |

+

def validation_epoch_end(self, outputs):

|

| 130 |

+

if not len(outputs):

|

| 131 |

+

return

|

| 132 |

+

# Log mean T60 errors (in percentages)

|

| 133 |

+

val_t60errmean = torch.Tensor(numpy.array([output["val_t60err"] for output in outputs])).mean()

|

| 134 |

+

self.log("val_t60err", val_t60errmean, on_epoch=True, prog_bar=True)

|

| 135 |

+

|

| 136 |

+

# Log generated spectrogram images

|

| 137 |

+

grid = torchvision.utils.make_grid([torch.flip(x, [0]) for y in [output["val_spec"] for output in outputs] for x in y])

|

| 138 |

+

self.logger.experiment.add_image("generated_spectrograms", grid, self.current_epoch)

|

| 139 |

+

|

| 140 |

+

# Log model input images

|

| 141 |

+

grid = torchvision.utils.make_grid([x for y in [output["val_img"] for output in outputs] for x in y])

|

| 142 |

+

self.logger.experiment.add_image("input_images_with_depthmaps", grid, self.current_epoch)

|

| 143 |

+

|

| 144 |

+

# Log generated audio examples

|

| 145 |

+

for output in outputs:

|

| 146 |

+

for example, audio in zip(output["val_examples"], output["val_audio"]):

|

| 147 |

+

y = audio

|

| 148 |

+

self.logger.experiment.add_audio("generated_audio_%s" % example, y, self.current_epoch, sample_rate=22050)

|

| 149 |

+

|

| 150 |

+

def test_step(self, batch, batch_idx):

|

| 151 |

+

spec, label, paths = batch

|

| 152 |

+

examples = [os.path.basename(s[:s.rfind("_")]) for s, _ in zip(*paths)]

|

| 153 |

+

|

| 154 |

+

# Forward passes through models

|

| 155 |

+

f, img = self.enc.forward(label)

|

| 156 |

+

img = (img + 1) * 0.5

|

| 157 |

+

z = torch.cat((f, torch.randn((f.shape[0], (self._latent_dimension - f.shape[1]) if f.shape[1] < self._latent_dimension else f.shape[1], f.shape[2], f.shape[3]), device=self.device)), 1)

|

| 158 |

+

fake_spec = self.g(z)

|

| 159 |

+

|

| 160 |

+

# Get audio

|

| 161 |

+

stft = LogMel() if self.stft_type == "mel" else STFT()

|

| 162 |

+

y_r = [stft.inverse(s.squeeze()) for s in spec]

|

| 163 |

+

y_f = [stft.inverse(s.squeeze()) for s in fake_spec]

|

| 164 |

+

|

| 165 |

+

# RT60 error (in percentages)

|

| 166 |

+

val_pct = 1

|

| 167 |

+

f = lambda x : pyroomacoustics.experimental.rt60.measure_rt60(x, 22050)

|

| 168 |

+

val_pct = []

|

| 169 |

+

for y_real, y_fake in zip(y_r, y_f):

|

| 170 |

+

try:

|

| 171 |

+

t_a = f(y_real)

|

| 172 |

+

t_b = f(y_fake)

|

| 173 |

+

val_pct.append((t_b - t_a)/t_a)

|

| 174 |

+

except:

|

| 175 |

+

val_pct.append(numpy.nan)

|

| 176 |

+

|

| 177 |

+

return {"test_t60err": val_pct, "test_spec": fake_spec, "test_audio": y_f, "test_img": img, "test_examples": examples}

|

| 178 |

+

|

| 179 |

+

def test_epoch_end(self, outputs):

|

| 180 |

+

if not self.test_callback:

|

| 181 |

+

return

|

| 182 |

+

|

| 183 |

+

examples = []

|

| 184 |

+

t60 = []

|

| 185 |

+

spec_images = []

|

| 186 |

+

audio = []

|

| 187 |

+

input_images = []

|

| 188 |

+

input_depthmaps = []

|

| 189 |

+

|

| 190 |

+

for output in outputs:

|

| 191 |

+

for i in range(len(output["test_examples"])):

|

| 192 |

+

img = output["test_img"][i]

|

| 193 |

+

if img.shape[0] == 3:

|

| 194 |

+

rgb = img

|

| 195 |

+

img = torch.cat((rgb, torch.zeros((1, rgb.shape[1], rgb.shape[2]), device=self.device)), 0)

|

| 196 |

+

t60.append(output["test_t60err"][i])

|

| 197 |

+

spec_images.append(output["test_spec"][i].cpu().squeeze().detach().numpy())

|

| 198 |

+

audio.append(output["test_audio"][i])

|

| 199 |

+

input_images.append(img.cpu().squeeze().permute(1, 2, 0)[:,:,:-1].detach().numpy())

|

| 200 |

+

input_depthmaps.append(img.cpu().squeeze().permute(1, 2, 0)[:,:,-1].squeeze().detach().numpy())

|

| 201 |

+

examples.append(output["test_examples"][i])

|

| 202 |

+

|

| 203 |

+

self.test_callback(examples, t60, spec_images, audio, input_images, input_depthmaps)

|

| 204 |

+

|

| 205 |

+

@property

|

| 206 |

+

def automatic_optimization(self) -> bool:

|

| 207 |

+

return not self._opt

|

image2reverb/networks.py

ADDED

|

@@ -0,0 +1,344 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|