Upload 68 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +5 -0

- LICENSE +212 -0

- README.md +316 -0

- data/__init__.py +98 -0

- data/__pycache__/__init__.cpython-310.pyc +0 -0

- data/__pycache__/base_dataset.cpython-310.pyc +0 -0

- data/__pycache__/image_folder.cpython-310.pyc +0 -0

- data/__pycache__/unaligned_dataset.cpython-310.pyc +0 -0

- data/base_dataset.py +230 -0

- data/image_folder.py +66 -0

- data/single_dataset.py +40 -0

- data/singleimage_dataset.py +108 -0

- data/template_dataset.py +75 -0

- data/unaligned_dataset.py +78 -0

- docs/datasets.md +45 -0

- environment.yml +16 -0

- experiments/__init__.py +54 -0

- experiments/__main__.py +87 -0

- experiments/grumpifycat_launcher.py +28 -0

- experiments/placeholder_launcher.py +81 -0

- experiments/pretrained_launcher.py +61 -0

- experiments/singleimage_launcher.py +18 -0

- experiments/tmux_launcher.py +215 -0

- imgs/gif_cut.gif +3 -0

- imgs/grumpycat.jpg +0 -0

- imgs/horse2zebra_comparison.jpg +3 -0

- imgs/paris.jpg +0 -0

- imgs/patchnce.gif +3 -0

- imgs/results.gif +3 -0

- imgs/singleimage.gif +3 -0

- models/__init__.py +67 -0

- models/__pycache__/__init__.cpython-310.pyc +0 -0

- models/__pycache__/base_model.cpython-310.pyc +0 -0

- models/__pycache__/cut_model.cpython-310.pyc +0 -0

- models/__pycache__/cycle_gan_model.cpython-310.pyc +0 -0

- models/__pycache__/networks.cpython-310.pyc +0 -0

- models/__pycache__/patchnce.cpython-310.pyc +0 -0

- models/__pycache__/stylegan_networks.cpython-310.pyc +0 -0

- models/base_model.py +258 -0

- models/cut_model.py +214 -0

- models/cycle_gan_model.py +325 -0

- models/networks.py +1403 -0

- models/patchnce.py +55 -0

- models/sincut_model.py +79 -0

- models/stylegan_networks.py +914 -0

- models/template_model.py +99 -0

- options/__init__.py +1 -0

- options/__pycache__/__init__.cpython-310.pyc +0 -0

- options/__pycache__/base_options.cpython-310.pyc +0 -0

- options/__pycache__/train_options.cpython-310.pyc +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

imgs/gif_cut.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

imgs/horse2zebra_comparison.jpg filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

imgs/patchnce.gif filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

imgs/results.gif filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

imgs/singleimage.gif filter=lfs diff=lfs merge=lfs -text

|

LICENSE

ADDED

|

@@ -0,0 +1,212 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright (c) 2020, Taesung Park and Jun-Yan Zhu

|

| 2 |

+

All rights reserved.

|

| 3 |

+

|

| 4 |

+

Redistribution and use in source and binary forms, with or without

|

| 5 |

+

modification, are permitted provided that the following conditions are met:

|

| 6 |

+

|

| 7 |

+

* Redistributions of source code must retain the above copyright notice, this

|

| 8 |

+

list of conditions and the following disclaimer.

|

| 9 |

+

|

| 10 |

+

* Redistributions in binary form must reproduce the above copyright notice,

|

| 11 |

+

this list of conditions and the following disclaimer in the documentation

|

| 12 |

+

and/or other materials provided with the distribution.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 15 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 16 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

| 17 |

+

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

| 18 |

+

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

| 19 |

+

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

| 20 |

+

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

| 21 |

+

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

| 22 |

+

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 23 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

| 24 |

+

|

| 25 |

+

--------------------------- LICENSE FOR CycleGAN -------------------------------

|

| 26 |

+

-------------------https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix------

|

| 27 |

+

Copyright (c) 2017, Jun-Yan Zhu and Taesung Park

|

| 28 |

+

All rights reserved.

|

| 29 |

+

|

| 30 |

+

Redistribution and use in source and binary forms, with or without

|

| 31 |

+

modification, are permitted provided that the following conditions are met:

|

| 32 |

+

|

| 33 |

+

* Redistributions of source code must retain the above copyright notice, this

|

| 34 |

+

list of conditions and the following disclaimer.

|

| 35 |

+

|

| 36 |

+

* Redistributions in binary form must reproduce the above copyright notice,

|

| 37 |

+

this list of conditions and the following disclaimer in the documentation

|

| 38 |

+

and/or other materials provided with the distribution.

|

| 39 |

+

|

| 40 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 41 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 42 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

| 43 |

+

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

| 44 |

+

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

| 45 |

+

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

| 46 |

+

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

| 47 |

+

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

| 48 |

+

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 49 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

| 50 |

+

|

| 51 |

+

--------------------------- LICENSE FOR stylegan2-pytorch ----------------------

|

| 52 |

+

----------------https://github.com/rosinality/stylegan2-pytorch/----------------

|

| 53 |

+

MIT License

|

| 54 |

+

|

| 55 |

+

Copyright (c) 2019 Kim Seonghyeon

|

| 56 |

+

|

| 57 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 58 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 59 |

+

in the Software without restriction, including without limitation the rights

|

| 60 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 61 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 62 |

+

furnished to do so, subject to the following conditions:

|

| 63 |

+

|

| 64 |

+

The above copyright notice and this permission notice shall be included in all

|

| 65 |

+

copies or substantial portions of the Software.

|

| 66 |

+

|

| 67 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 68 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 69 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 70 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 71 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 72 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 73 |

+

SOFTWARE.

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

--------------------------- LICENSE FOR pix2pix --------------------------------

|

| 77 |

+

BSD License

|

| 78 |

+

|

| 79 |

+

For pix2pix software

|

| 80 |

+

Copyright (c) 2016, Phillip Isola and Jun-Yan Zhu

|

| 81 |

+

All rights reserved.

|

| 82 |

+

|

| 83 |

+

Redistribution and use in source and binary forms, with or without

|

| 84 |

+

modification, are permitted provided that the following conditions are met:

|

| 85 |

+

|

| 86 |

+

* Redistributions of source code must retain the above copyright notice, this

|

| 87 |

+

list of conditions and the following disclaimer.

|

| 88 |

+

|

| 89 |

+

* Redistributions in binary form must reproduce the above copyright notice,

|

| 90 |

+

this list of conditions and the following disclaimer in the documentation

|

| 91 |

+

and/or other materials provided with the distribution.

|

| 92 |

+

|

| 93 |

+

----------------------------- LICENSE FOR DCGAN --------------------------------

|

| 94 |

+

BSD License

|

| 95 |

+

|

| 96 |

+

For dcgan.torch software

|

| 97 |

+

|

| 98 |

+

Copyright (c) 2015, Facebook, Inc. All rights reserved.

|

| 99 |

+

|

| 100 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 101 |

+

|

| 102 |

+

Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 103 |

+

|

| 104 |

+

Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 105 |

+

|

| 106 |

+

Neither the name Facebook nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 107 |

+

|

| 108 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

| 109 |

+

|

| 110 |

+

--------------------------- LICENSE FOR StyleGAN2 ------------------------------

|

| 111 |

+

--------------------------- Inherited from stylegan2-pytorch -------------------

|

| 112 |

+

Copyright (c) 2019, NVIDIA Corporation. All rights reserved.

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

Nvidia Source Code License-NC

|

| 116 |

+

|

| 117 |

+

=======================================================================

|

| 118 |

+

|

| 119 |

+

1. Definitions

|

| 120 |

+

|

| 121 |

+

"Licensor" means any person or entity that distributes its Work.

|

| 122 |

+

|

| 123 |

+

"Software" means the original work of authorship made available under

|

| 124 |

+

this License.

|

| 125 |

+

|

| 126 |

+

"Work" means the Software and any additions to or derivative works of

|

| 127 |

+

the Software that are made available under this License.

|

| 128 |

+

|

| 129 |

+

"Nvidia Processors" means any central processing unit (CPU), graphics

|

| 130 |

+

processing unit (GPU), field-programmable gate array (FPGA),

|

| 131 |

+

application-specific integrated circuit (ASIC) or any combination

|

| 132 |

+

thereof designed, made, sold, or provided by Nvidia or its affiliates.

|

| 133 |

+

|

| 134 |

+

The terms "reproduce," "reproduction," "derivative works," and

|

| 135 |

+

"distribution" have the meaning as provided under U.S. copyright law;

|

| 136 |

+

provided, however, that for the purposes of this License, derivative

|

| 137 |

+

works shall not include works that remain separable from, or merely

|

| 138 |

+

link (or bind by name) to the interfaces of, the Work.

|

| 139 |

+

|

| 140 |

+

Works, including the Software, are "made available" under this License

|

| 141 |

+

by including in or with the Work either (a) a copyright notice

|

| 142 |

+

referencing the applicability of this License to the Work, or (b) a

|

| 143 |

+

copy of this License.

|

| 144 |

+

|

| 145 |

+

2. License Grants

|

| 146 |

+

|

| 147 |

+

2.1 Copyright Grant. Subject to the terms and conditions of this

|

| 148 |

+

License, each Licensor grants to you a perpetual, worldwide,

|

| 149 |

+

non-exclusive, royalty-free, copyright license to reproduce,

|

| 150 |

+

prepare derivative works of, publicly display, publicly perform,

|

| 151 |

+

sublicense and distribute its Work and any resulting derivative

|

| 152 |

+

works in any form.

|

| 153 |

+

|

| 154 |

+

3. Limitations

|

| 155 |

+

|

| 156 |

+

3.1 Redistribution. You may reproduce or distribute the Work only

|

| 157 |

+

if (a) you do so under this License, (b) you include a complete

|

| 158 |

+

copy of this License with your distribution, and (c) you retain

|

| 159 |

+

without modification any copyright, patent, trademark, or

|

| 160 |

+

attribution notices that are present in the Work.

|

| 161 |

+

|

| 162 |

+

3.2 Derivative Works. You may specify that additional or different

|

| 163 |

+

terms apply to the use, reproduction, and distribution of your

|

| 164 |

+

derivative works of the Work ("Your Terms") only if (a) Your Terms

|

| 165 |

+

provide that the use limitation in Section 3.3 applies to your

|

| 166 |

+

derivative works, and (b) you identify the specific derivative

|

| 167 |

+

works that are subject to Your Terms. Notwithstanding Your Terms,

|

| 168 |

+

this License (including the redistribution requirements in Section

|

| 169 |

+

3.1) will continue to apply to the Work itself.

|

| 170 |

+

|

| 171 |

+

3.3 Use Limitation. The Work and any derivative works thereof only

|

| 172 |

+

may be used or intended for use non-commercially. The Work or

|

| 173 |

+

derivative works thereof may be used or intended for use by Nvidia

|

| 174 |

+

or its affiliates commercially or non-commercially. As used herein,

|

| 175 |

+

"non-commercially" means for research or evaluation purposes only.

|

| 176 |

+

|

| 177 |

+

3.4 Patent Claims. If you bring or threaten to bring a patent claim

|

| 178 |

+

against any Licensor (including any claim, cross-claim or

|

| 179 |

+

counterclaim in a lawsuit) to enforce any patents that you allege

|

| 180 |

+

are infringed by any Work, then your rights under this License from

|

| 181 |

+

such Licensor (including the grants in Sections 2.1 and 2.2) will

|

| 182 |

+

terminate immediately.

|

| 183 |

+

|

| 184 |

+

3.5 Trademarks. This License does not grant any rights to use any

|

| 185 |

+

Licensor's or its affiliates' names, logos, or trademarks, except

|

| 186 |

+

as necessary to reproduce the notices described in this License.

|

| 187 |

+

|

| 188 |

+

3.6 Termination. If you violate any term of this License, then your

|

| 189 |

+

rights under this License (including the grants in Sections 2.1 and

|

| 190 |

+

2.2) will terminate immediately.

|

| 191 |

+

|

| 192 |

+

4. Disclaimer of Warranty.

|

| 193 |

+

|

| 194 |

+

THE WORK IS PROVIDED "AS IS" WITHOUT WARRANTIES OR CONDITIONS OF ANY

|

| 195 |

+

KIND, EITHER EXPRESS OR IMPLIED, INCLUDING WARRANTIES OR CONDITIONS OF

|

| 196 |

+

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR

|

| 197 |

+

NON-INFRINGEMENT. YOU BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES UNDER

|

| 198 |

+

THIS LICENSE.

|

| 199 |

+

|

| 200 |

+

5. Limitation of Liability.

|

| 201 |

+

|

| 202 |

+

EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL

|

| 203 |

+

THEORY, WHETHER IN TORT (INCLUDING NEGLIGENCE), CONTRACT, OR OTHERWISE

|

| 204 |

+

SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT,

|

| 205 |

+

INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF

|

| 206 |

+

OR RELATED TO THIS LICENSE, THE USE OR INABILITY TO USE THE WORK

|

| 207 |

+

(INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION,

|

| 208 |

+

LOST PROFITS OR DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER

|

| 209 |

+

COMMERCIAL DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN ADVISED OF

|

| 210 |

+

THE POSSIBILITY OF SUCH DAMAGES.

|

| 211 |

+

|

| 212 |

+

=======================================================================

|

README.md

ADDED

|

@@ -0,0 +1,316 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

|

| 3 |

+

# Contrastive Unpaired Translation (CUT)

|

| 4 |

+

|

| 5 |

+

### [video (1m)](https://youtu.be/Llg0vE_MVgk) | [video (10m)](https://youtu.be/jSGOzjmN8q0) | [website](http://taesung.me/ContrastiveUnpairedTranslation/) | [paper](https://arxiv.org/pdf/2007.15651)

|

| 6 |

+

<br>

|

| 7 |

+

|

| 8 |

+

<img src='imgs/gif_cut.gif' align="right" width=960>

|

| 9 |

+

|

| 10 |

+

<br><br><br>

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

We provide our PyTorch implementation of unpaired image-to-image translation based on patchwise contrastive learning and adversarial learning. No hand-crafted loss and inverse network is used. Compared to [CycleGAN](https://github.com/junyanz/CycleGAN), our model training is faster and less memory-intensive. In addition, our method can be extended to single image training, where each “domain” is only a *single* image.

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

[Contrastive Learning for Unpaired Image-to-Image Translation](http://taesung.me/ContrastiveUnpairedTranslation/)

|

| 20 |

+

[Taesung Park](https://taesung.me/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros/), [Richard Zhang](https://richzhang.github.io/), [Jun-Yan Zhu](https://www.cs.cmu.edu/~junyanz/)<br>

|

| 21 |

+

UC Berkeley and Adobe Research<br>

|

| 22 |

+

In ECCV 2020

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

<img src='imgs/patchnce.gif' align="right" width=960>

|

| 26 |

+

|

| 27 |

+

<br><br><br>

|

| 28 |

+

|

| 29 |

+

### Pseudo code

|

| 30 |

+

```python

|

| 31 |

+

import torch

|

| 32 |

+

cross_entropy_loss = torch.nn.CrossEntropyLoss()

|

| 33 |

+

|

| 34 |

+

# Input: f_q (BxCxS) and sampled features from H(G_enc(x))

|

| 35 |

+

# Input: f_k (BxCxS) are sampled features from H(G_enc(G(x))

|

| 36 |

+

# Input: tau is the temperature used in PatchNCE loss.

|

| 37 |

+

# Output: PatchNCE loss

|

| 38 |

+

def PatchNCELoss(f_q, f_k, tau=0.07):

|

| 39 |

+

# batch size, channel size, and number of sample locations

|

| 40 |

+

B, C, S = f_q.shape

|

| 41 |

+

|

| 42 |

+

# calculate v * v+: BxSx1

|

| 43 |

+

l_pos = (f_k * f_q).sum(dim=1)[:, :, None]

|

| 44 |

+

|

| 45 |

+

# calculate v * v-: BxSxS

|

| 46 |

+

l_neg = torch.bmm(f_q.transpose(1, 2), f_k)

|

| 47 |

+

|

| 48 |

+

# The diagonal entries are not negatives. Remove them.

|

| 49 |

+

identity_matrix = torch.eye(S)[None, :, :]

|

| 50 |

+

l_neg.masked_fill_(identity_matrix, -float('inf'))

|

| 51 |

+

|

| 52 |

+

# calculate logits: (B)x(S)x(S+1)

|

| 53 |

+

logits = torch.cat((l_pos, l_neg), dim=2) / tau

|

| 54 |

+

|

| 55 |

+

# return PatchNCE loss

|

| 56 |

+

predictions = logits.flatten(0, 1)

|

| 57 |

+

targets = torch.zeros(B * S, dtype=torch.long)

|

| 58 |

+

return cross_entropy_loss(predictions, targets)

|

| 59 |

+

```

|

| 60 |

+

## Example Results

|

| 61 |

+

|

| 62 |

+

### Unpaired Image-to-Image Translation

|

| 63 |

+

<img src="imgs/results.gif" width="800px"/>

|

| 64 |

+

|

| 65 |

+

### Single Image Unpaired Translation

|

| 66 |

+

<img src="imgs/singleimage.gif" width="800px"/>

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

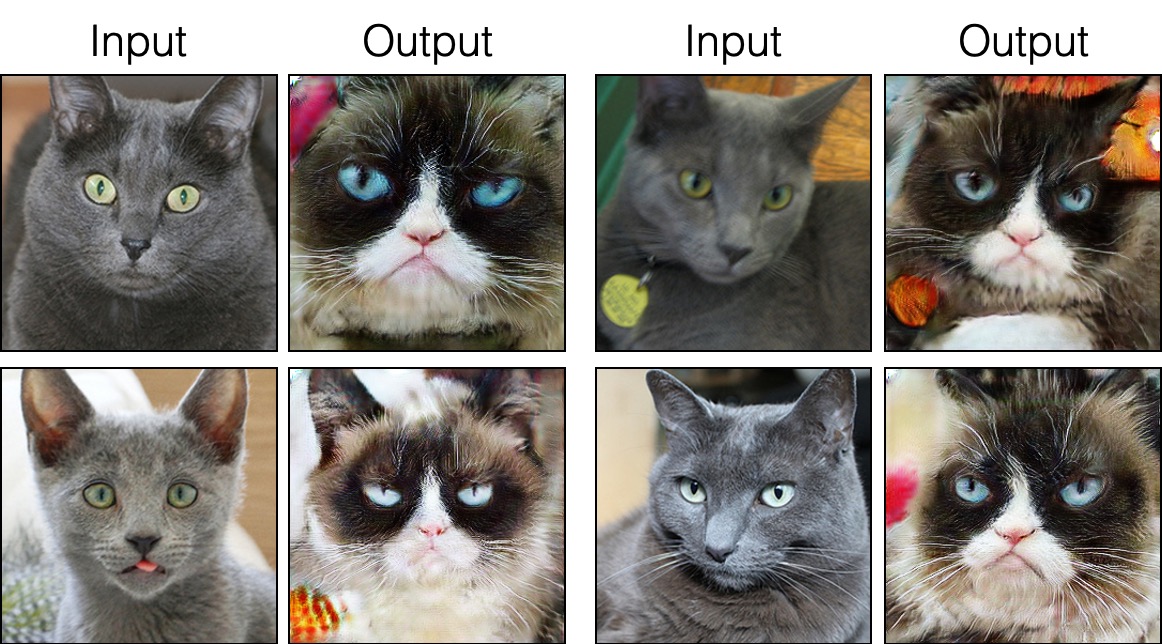

### Russian Blue Cat to Grumpy Cat

|

| 70 |

+

<img src="imgs/grumpycat.jpg" width="800px"/>

|

| 71 |

+

|

| 72 |

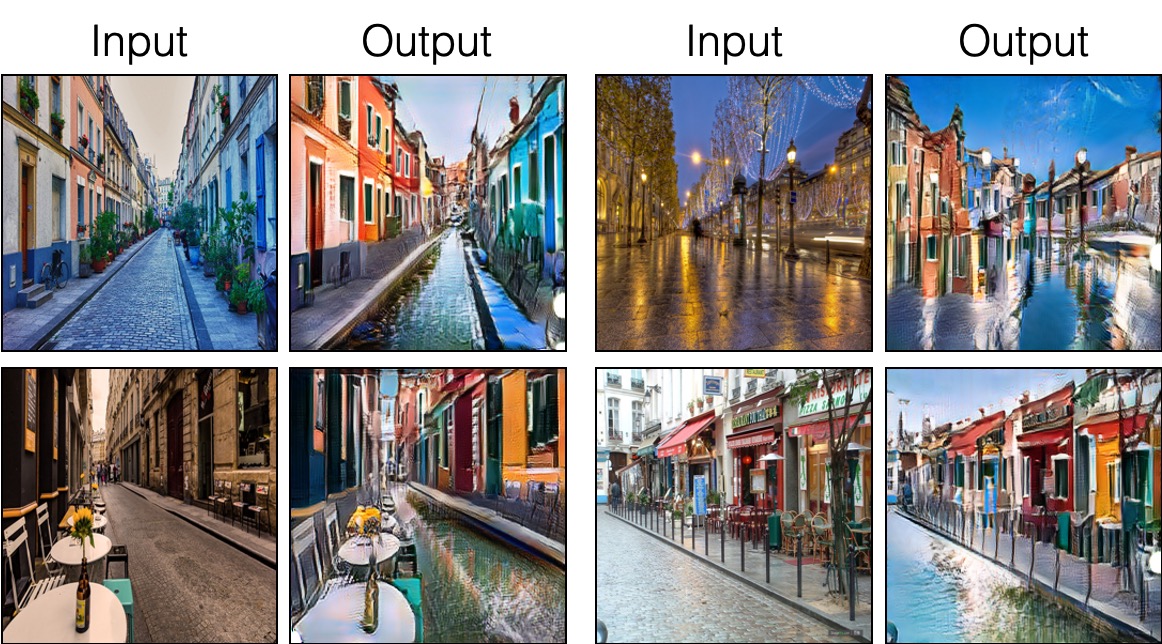

+

### Parisian Street to Burano's painted houses

|

| 73 |

+

<img src="imgs/paris.jpg" width="800px"/>

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

## Prerequisites

|

| 78 |

+

- Linux or macOS

|

| 79 |

+

- Python 3

|

| 80 |

+

- CPU or NVIDIA GPU + CUDA CuDNN

|

| 81 |

+

|

| 82 |

+

### Update log

|

| 83 |

+

|

| 84 |

+

9/12/2020: Added single-image translation.

|

| 85 |

+

|

| 86 |

+

### Getting started

|

| 87 |

+

|

| 88 |

+

- Clone this repo:

|

| 89 |

+

```bash

|

| 90 |

+

git clone https://github.com/taesungp/contrastive-unpaired-translation CUT

|

| 91 |

+

cd CUT

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

- Install PyTorch 1.1 and other dependencies (e.g., torchvision, visdom, dominate, gputil).

|

| 95 |

+

|

| 96 |

+

For pip users, please type the command `pip install -r requirements.txt`.

|

| 97 |

+

|

| 98 |

+

For Conda users, you can create a new Conda environment using `conda env create -f environment.yml`.

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

### CUT and FastCUT Training and Test

|

| 102 |

+

|

| 103 |

+

- Download the `grumpifycat` dataset (Fig 8 of the paper. Russian Blue -> Grumpy Cats)

|

| 104 |

+

```bash

|

| 105 |

+

bash ./datasets/download_cut_dataset.sh grumpifycat

|

| 106 |

+

```

|

| 107 |

+

The dataset is downloaded and unzipped at `./datasets/grumpifycat/`.

|

| 108 |

+

|

| 109 |

+

- To view training results and loss plots, run `python -m visdom.server` and click the URL http://localhost:8097.

|

| 110 |

+

|

| 111 |

+

- Train the CUT model:

|

| 112 |

+

```bash

|

| 113 |

+

python train.py --dataroot ./datasets/grumpifycat --name grumpycat_CUT --CUT_mode CUT

|

| 114 |

+

```

|

| 115 |

+

Or train the FastCUT model

|

| 116 |

+

```bash

|

| 117 |

+

python train.py --dataroot ./datasets/grumpifycat --name grumpycat_FastCUT --CUT_mode FastCUT

|

| 118 |

+

```

|

| 119 |

+

The checkpoints will be stored at `./checkpoints/grumpycat_*/web`.

|

| 120 |

+

|

| 121 |

+

- Test the CUT model:

|

| 122 |

+

```bash

|

| 123 |

+

python test.py --dataroot ./datasets/grumpifycat --name grumpycat_CUT --CUT_mode CUT --phase train

|

| 124 |

+

```

|

| 125 |

+

|

| 126 |

+

The test results will be saved to a html file here: `./results/grumpifycat/latest_train/index.html`.

|

| 127 |

+

|

| 128 |

+

### CUT, FastCUT, and CycleGAN

|

| 129 |

+

<img src="imgs/horse2zebra_comparison.jpg" width="800px"/><br>

|

| 130 |

+

|

| 131 |

+

CUT is trained with the identity preservation loss and with `lambda_NCE=1`, while FastCUT is trained without the identity loss but with higher `lambda_NCE=10.0`. Compared to CycleGAN, CUT learns to perform more powerful distribution matching, while FastCUT is designed as a lighter (half the GPU memory, can fit a larger image), and faster (twice faster to train) alternative to CycleGAN. Please refer to the [paper](https://arxiv.org/abs/2007.15651) for more details.

|

| 132 |

+

|

| 133 |

+

In the above figure, we measure the percentage of pixels belonging to the horse/zebra bodies, using a pre-trained semantic segmentation model. We find a distribution mismatch between sizes of horses and zebras images -- zebras usually appear larger (36.8\% vs. 17.9\%). Our full method CUT has the flexibility to enlarge the horses, as a means of better matching of the training statistics than CycleGAN. FastCUT behaves more conservatively like CycleGAN.

|

| 134 |

+

|

| 135 |

+

### Training using our launcher scripts

|

| 136 |

+

|

| 137 |

+

Please see `experiments/grumpifycat_launcher.py` that generates the above command line arguments. The launcher scripts are useful for configuring rather complicated command-line arguments of training and testing.

|

| 138 |

+

|

| 139 |

+

Using the launcher, the command below generates the training command of CUT and FastCUT.

|

| 140 |

+

```bash

|

| 141 |

+

python -m experiments grumpifycat train 0 # CUT

|

| 142 |

+

python -m experiments grumpifycat train 1 # FastCUT

|

| 143 |

+

```

|

| 144 |

+

|

| 145 |

+

To test using the launcher,

|

| 146 |

+

```bash

|

| 147 |

+

python -m experiments grumpifycat test 0 # CUT

|

| 148 |

+

python -m experiments grumpifycat test 1 # FastCUT

|

| 149 |

+

```

|

| 150 |

+

|

| 151 |

+

Possible commands are run, run_test, launch, close, and so on. Please see `experiments/__main__.py` for all commands. Launcher is easy and quick to define and use. For example, the grumpifycat launcher is defined in a few lines:

|

| 152 |

+

```python

|

| 153 |

+

from .tmux_launcher import Options, TmuxLauncher

|

| 154 |

+

|

| 155 |

+

|

| 156 |

+

class Launcher(TmuxLauncher):

|

| 157 |

+

def common_options(self):

|

| 158 |

+

return [

|

| 159 |

+

Options( # Command 0

|

| 160 |

+

dataroot="./datasets/grumpifycat",

|

| 161 |

+

name="grumpifycat_CUT",

|

| 162 |

+

CUT_mode="CUT"

|

| 163 |

+

),

|

| 164 |

+

|

| 165 |

+

Options( # Command 1

|

| 166 |

+

dataroot="./datasets/grumpifycat",

|

| 167 |

+

name="grumpifycat_FastCUT",

|

| 168 |

+

CUT_mode="FastCUT",

|

| 169 |

+

)

|

| 170 |

+

]

|

| 171 |

+

|

| 172 |

+

def commands(self):

|

| 173 |

+

return ["python train.py " + str(opt) for opt in self.common_options()]

|

| 174 |

+

|

| 175 |

+

def test_commands(self):

|

| 176 |

+

# Russian Blue -> Grumpy Cats dataset does not have test split.

|

| 177 |

+

# Therefore, let's set the test split to be the "train" set.

|

| 178 |

+

return ["python test.py " + str(opt.set(phase='train')) for opt in self.common_options()]

|

| 179 |

+

|

| 180 |

+

```

|

| 181 |

+

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

### Apply a pre-trained CUT model and evaluate FID

|

| 185 |

+

|

| 186 |

+

To run the pretrained models, run the following.

|

| 187 |

+

|

| 188 |

+

```bash

|

| 189 |

+

|

| 190 |

+

# Download and unzip the pretrained models. The weights should be located at

|

| 191 |

+

# checkpoints/horse2zebra_cut_pretrained/latest_net_G.pth, for example.

|

| 192 |

+

wget http://efrosgans.eecs.berkeley.edu/CUT/pretrained_models.tar

|

| 193 |

+

tar -xf pretrained_models.tar

|

| 194 |

+

|

| 195 |

+

# Generate outputs. The dataset paths might need to be adjusted.

|

| 196 |

+

# To do this, modify the lines of experiments/pretrained_launcher.py

|

| 197 |

+

# [id] corresponds to the respective commands defined in pretrained_launcher.py

|

| 198 |

+

# 0 - CUT on Cityscapes

|

| 199 |

+

# 1 - FastCUT on Cityscapes

|

| 200 |

+

# 2 - CUT on Horse2Zebra

|

| 201 |

+

# 3 - FastCUT on Horse2Zebra

|

| 202 |

+

# 4 - CUT on Cat2Dog

|

| 203 |

+

# 5 - FastCUT on Cat2Dog

|

| 204 |

+

python -m experiments pretrained run_test [id]

|

| 205 |

+

|

| 206 |

+

# Evaluate FID. To do this, first install pytorch-fid of https://github.com/mseitzer/pytorch-fid

|

| 207 |

+

# pip install pytorch-fid

|

| 208 |

+

# For example, to evaluate horse2zebra FID of CUT,

|

| 209 |

+

# python -m pytorch_fid ./datasets/horse2zebra/testB/ results/horse2zebra_cut_pretrained/test_latest/images/fake_B/

|

| 210 |

+

# To evaluate Cityscapes FID of FastCUT,

|

| 211 |

+

# python -m pytorch_fid ./datasets/cityscapes/valA/ ~/projects/contrastive-unpaired-translation/results/cityscapes_fastcut_pretrained/test_latest/images/fake_B/

|

| 212 |

+

# Note that a special dataset needs to be used for the Cityscapes model. Please read below.

|

| 213 |

+

python -m pytorch_fid [path to real test images] [path to generated images]

|

| 214 |

+

|

| 215 |

+

```

|

| 216 |

+

|

| 217 |

+

Note: the Cityscapes pretrained model was trained and evaluated on a resized and JPEG-compressed version of the original Cityscapes dataset. To perform evaluation, please download [this](http://efrosgans.eecs.berkeley.edu/CUT/datasets/cityscapes_val_for_CUT.tar) validation set and perform evaluation.

|

| 218 |

+

|

| 219 |

+

|

| 220 |

+

### SinCUT Single Image Unpaired Training

|

| 221 |

+

|

| 222 |

+

To train SinCUT (single-image translation, shown in Fig 9, 13 and 14 of the paper), you need to

|

| 223 |

+

|

| 224 |

+

1. set the `--model` option as `--model sincut`, which invokes the configuration and codes at `./models/sincut_model.py`, and

|

| 225 |

+

2. specify the dataset directory of one image in each domain, such as the example dataset included in this repo at `./datasets/single_image_monet_etretat/`.

|

| 226 |

+

|

| 227 |

+

For example, to train a model for the [Etretat cliff (first image of Figure 13)](https://github.com/taesungp/contrastive-unpaired-translation/blob/master/imgs/singleimage.gif), please use the following command.

|

| 228 |

+

|

| 229 |

+

```bash

|

| 230 |

+

python train.py --model sincut --name singleimage_monet_etretat --dataroot ./datasets/single_image_monet_etretat

|

| 231 |

+

```

|

| 232 |

+

|

| 233 |

+

or by using the experiment launcher script,

|

| 234 |

+

```bash

|

| 235 |

+

python -m experiments singleimage run 0

|

| 236 |

+

```

|

| 237 |

+

|

| 238 |

+

For single-image translation, we adopt network architectural components of [StyleGAN2](https://github.com/NVlabs/stylegan2), as well as the pixel identity preservation loss used in [DTN](https://arxiv.org/abs/1611.02200) and [CycleGAN](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/models/cycle_gan_model.py#L160). In particular, we adopted the code of [rosinality](https://github.com/rosinality/stylegan2-pytorch), which exists at `models/stylegan_networks.py`.

|

| 239 |

+

|

| 240 |

+

The training takes several hours. To generate the final image using the checkpoint,

|

| 241 |

+

|

| 242 |

+

```bash

|

| 243 |

+

python test.py --model sincut --name singleimage_monet_etretat --dataroot ./datasets/single_image_monet_etretat

|

| 244 |

+

```

|

| 245 |

+

|

| 246 |

+

or simply

|

| 247 |

+

|

| 248 |

+

```bash

|

| 249 |

+

python -m experiments singleimage run_test 0

|

| 250 |

+

```

|

| 251 |

+

|

| 252 |

+

### [Datasets](./docs/datasets.md)

|

| 253 |

+

Download CUT/CycleGAN/pix2pix datasets. For example,

|

| 254 |

+

|

| 255 |

+

```bash

|

| 256 |

+

bash datasets/download_cut_datasets.sh horse2zebra

|

| 257 |

+

```

|

| 258 |

+

|

| 259 |

+

The Cat2Dog dataset is prepared from the AFHQ dataset. Please visit https://github.com/clovaai/stargan-v2 and download the AFHQ dataset by `bash download.sh afhq-dataset` of the github repo. Then reorganize directories as follows.

|

| 260 |

+

```bash

|

| 261 |

+

mkdir datasets/cat2dog

|

| 262 |

+

ln -s datasets/cat2dog/trainA [path_to_afhq]/train/cat

|

| 263 |

+

ln -s datasets/cat2dog/trainB [path_to_afhq]/train/dog

|

| 264 |

+

ln -s datasets/cat2dog/testA [path_to_afhq]/test/cat

|

| 265 |

+

ln -s datasets/cat2dog/testB [path_to_afhq]/test/dog

|

| 266 |

+

```

|

| 267 |

+

|

| 268 |

+

The Cityscapes dataset can be downloaded from https://cityscapes-dataset.com.

|

| 269 |

+

After that, use the script `./datasets/prepare_cityscapes_dataset.py` to prepare the dataset.

|

| 270 |

+

|

| 271 |

+

|

| 272 |

+

#### Preprocessing of input images

|

| 273 |

+

|

| 274 |

+

The preprocessing of the input images, such as resizing or random cropping, is controlled by the option `--preprocess`, `--load_size`, and `--crop_size`. The usage follows the [CycleGAN/pix2pix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix) repo.

|

| 275 |

+

|

| 276 |

+

For example, the default setting `--preprocess resize_and_crop --load_size 286 --crop_size 256` resizes the input image to `286x286`, and then makes a random crop of size `256x256` as a way to perform data augmentation. There are other preprocessing options that can be specified, and they are specified in [base_dataset.py](https://github.com/taesungp/contrastive-unpaired-translation/blob/master/data/base_dataset.py#L82). Below are some example options.

|

| 277 |

+

|

| 278 |

+

- `--preprocess none`: does not perform any preprocessing. Note that the image size is still scaled to be a closest multiple of 4, because the convolutional generator cannot maintain the same image size otherwise.

|

| 279 |

+

- `--preprocess scale_width --load_size 768`: scales the width of the image to be of size 768.

|

| 280 |

+

- `--preprocess scale_shortside_and_crop`: scales the image preserving aspect ratio so that the short side is `load_size`, and then performs random cropping of window size `crop_size`.

|

| 281 |

+

|

| 282 |

+

More preprocessing options can be added by modifying [`get_transform()`](https://github.com/taesungp/contrastive-unpaired-translation/blob/master/data/base_dataset.py#L82) of `base_dataset.py`.

|

| 283 |

+

|

| 284 |

+

|

| 285 |

+

### Citation

|

| 286 |

+

If you use this code for your research, please cite our [paper](https://arxiv.org/pdf/2007.15651).

|

| 287 |

+

```

|

| 288 |

+

@inproceedings{park2020cut,

|

| 289 |

+

title={Contrastive Learning for Unpaired Image-to-Image Translation},

|

| 290 |

+

author={Taesung Park and Alexei A. Efros and Richard Zhang and Jun-Yan Zhu},

|

| 291 |

+

booktitle={European Conference on Computer Vision},

|

| 292 |

+

year={2020}

|

| 293 |

+

}

|

| 294 |

+

```

|

| 295 |

+

|

| 296 |

+

If you use the original [pix2pix](https://phillipi.github.io/pix2pix/) and [CycleGAN](https://junyanz.github.io/CycleGAN/) model included in this repo, please cite the following papers

|

| 297 |

+

```

|

| 298 |

+

@inproceedings{CycleGAN2017,

|

| 299 |

+

title={Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},

|

| 300 |

+

author={Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},

|

| 301 |

+

booktitle={IEEE International Conference on Computer Vision (ICCV)},

|

| 302 |

+

year={2017}

|

| 303 |

+

}

|

| 304 |

+

|

| 305 |

+

|

| 306 |

+

@inproceedings{isola2017image,

|

| 307 |

+

title={Image-to-Image Translation with Conditional Adversarial Networks},

|

| 308 |

+

author={Isola, Phillip and Zhu, Jun-Yan and Zhou, Tinghui and Efros, Alexei A},

|

| 309 |

+

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

|

| 310 |

+

year={2017}

|

| 311 |

+

}

|

| 312 |

+

```

|

| 313 |

+

|

| 314 |

+

|

| 315 |

+

### Acknowledgments

|

| 316 |

+

We thank Allan Jabri and Phillip Isola for helpful discussion and feedback. Our code is developed based on [pytorch-CycleGAN-and-pix2pix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix). We also thank [pytorch-fid](https://github.com/mseitzer/pytorch-fid) for FID computation, [drn](https://github.com/fyu/drn) for mIoU computation, and [stylegan2-pytorch](https://github.com/rosinality/stylegan2-pytorch/) for the PyTorch implementation of StyleGAN2 used in our single-image translation setting.

|

data/__init__.py

ADDED

|

@@ -0,0 +1,98 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""This package includes all the modules related to data loading and preprocessing

|

| 2 |

+

|

| 3 |

+

To add a custom dataset class called 'dummy', you need to add a file called 'dummy_dataset.py' and define a subclass 'DummyDataset' inherited from BaseDataset.

|

| 4 |

+

You need to implement four functions:

|

| 5 |

+

-- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).

|

| 6 |

+

-- <__len__>: return the size of dataset.

|

| 7 |

+

-- <__getitem__>: get a data point from data loader.

|

| 8 |

+

-- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.

|

| 9 |

+

|

| 10 |

+

Now you can use the dataset class by specifying flag '--dataset_mode dummy'.

|

| 11 |

+

See our template dataset class 'template_dataset.py' for more details.

|

| 12 |

+

"""

|

| 13 |

+

import importlib

|

| 14 |

+

import torch.utils.data

|

| 15 |

+

from data.base_dataset import BaseDataset

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def find_dataset_using_name(dataset_name):

|

| 19 |

+

"""Import the module "data/[dataset_name]_dataset.py".

|

| 20 |

+

|

| 21 |

+

In the file, the class called DatasetNameDataset() will

|

| 22 |

+

be instantiated. It has to be a subclass of BaseDataset,

|

| 23 |

+

and it is case-insensitive.

|

| 24 |

+

"""

|

| 25 |

+

dataset_filename = "data." + dataset_name + "_dataset"

|

| 26 |

+

datasetlib = importlib.import_module(dataset_filename)

|

| 27 |

+

|

| 28 |

+

dataset = None

|

| 29 |

+

target_dataset_name = dataset_name.replace('_', '') + 'dataset'

|

| 30 |

+

for name, cls in datasetlib.__dict__.items():

|

| 31 |

+

if name.lower() == target_dataset_name.lower() \

|

| 32 |

+

and issubclass(cls, BaseDataset):

|

| 33 |

+

dataset = cls

|

| 34 |

+

|

| 35 |

+

if dataset is None:

|

| 36 |

+

raise NotImplementedError("In %s.py, there should be a subclass of BaseDataset with class name that matches %s in lowercase." % (dataset_filename, target_dataset_name))

|

| 37 |

+

|

| 38 |

+

return dataset

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

def get_option_setter(dataset_name):

|

| 42 |

+

"""Return the static method <modify_commandline_options> of the dataset class."""

|

| 43 |

+

dataset_class = find_dataset_using_name(dataset_name)

|

| 44 |

+

return dataset_class.modify_commandline_options

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

def create_dataset(opt):

|

| 48 |

+

"""Create a dataset given the option.

|

| 49 |

+

|

| 50 |

+

This function wraps the class CustomDatasetDataLoader.

|

| 51 |

+

This is the main interface between this package and 'train.py'/'test.py'

|

| 52 |

+

|

| 53 |

+

Example:

|

| 54 |

+

>>> from data import create_dataset

|

| 55 |

+

>>> dataset = create_dataset(opt)

|

| 56 |

+

"""

|

| 57 |

+

data_loader = CustomDatasetDataLoader(opt)

|

| 58 |

+

dataset = data_loader.load_data()

|

| 59 |

+

return dataset

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

class CustomDatasetDataLoader():

|

| 63 |

+

"""Wrapper class of Dataset class that performs multi-threaded data loading"""

|

| 64 |

+

|

| 65 |

+

def __init__(self, opt):

|

| 66 |

+

"""Initialize this class

|

| 67 |

+

|

| 68 |

+

Step 1: create a dataset instance given the name [dataset_mode]

|

| 69 |

+

Step 2: create a multi-threaded data loader.

|

| 70 |

+

"""

|

| 71 |

+

self.opt = opt

|

| 72 |

+

dataset_class = find_dataset_using_name(opt.dataset_mode)

|

| 73 |

+

self.dataset = dataset_class(opt)

|

| 74 |

+

print("dataset [%s] was created" % type(self.dataset).__name__)

|

| 75 |

+

self.dataloader = torch.utils.data.DataLoader(

|

| 76 |

+

self.dataset,

|

| 77 |

+

batch_size=opt.batch_size,

|

| 78 |

+

shuffle=not opt.serial_batches,

|

| 79 |

+

num_workers=int(opt.num_threads),

|

| 80 |

+

drop_last=True if opt.isTrain else False,

|

| 81 |

+

)

|

| 82 |

+

|

| 83 |

+

def set_epoch(self, epoch):

|

| 84 |

+

self.dataset.current_epoch = epoch

|

| 85 |

+

|

| 86 |

+

def load_data(self):

|

| 87 |

+

return self

|

| 88 |

+

|

| 89 |

+

def __len__(self):

|

| 90 |

+

"""Return the number of data in the dataset"""

|

| 91 |

+

return min(len(self.dataset), self.opt.max_dataset_size)

|

| 92 |

+

|

| 93 |

+

def __iter__(self):

|

| 94 |

+

"""Return a batch of data"""

|

| 95 |

+

for i, data in enumerate(self.dataloader):

|

| 96 |

+

if i * self.opt.batch_size >= self.opt.max_dataset_size:

|

| 97 |

+

break

|

| 98 |

+

yield data

|

data/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (4.18 kB). View file

|

|

|

data/__pycache__/base_dataset.cpython-310.pyc

ADDED

|

Binary file (8.06 kB). View file

|

|

|

data/__pycache__/image_folder.cpython-310.pyc

ADDED

|

Binary file (2.46 kB). View file

|

|

|

data/__pycache__/unaligned_dataset.cpython-310.pyc

ADDED

|

Binary file (3.07 kB). View file

|

|

|

data/base_dataset.py

ADDED

|

@@ -0,0 +1,230 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""This module implements an abstract base class (ABC) 'BaseDataset' for datasets.

|

| 2 |

+

|

| 3 |

+

It also includes common transformation functions (e.g., get_transform, __scale_width), which can be later used in subclasses.

|

| 4 |

+

"""

|

| 5 |

+

import random

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch.utils.data as data

|

| 8 |

+

from PIL import Image

|

| 9 |

+

import torchvision.transforms as transforms

|

| 10 |

+

from abc import ABC, abstractmethod

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

class BaseDataset(data.Dataset, ABC):

|

| 14 |

+

"""This class is an abstract base class (ABC) for datasets.

|

| 15 |

+

|

| 16 |

+

To create a subclass, you need to implement the following four functions:

|

| 17 |

+

-- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).

|

| 18 |

+

-- <__len__>: return the size of dataset.

|

| 19 |

+

-- <__getitem__>: get a data point.

|

| 20 |

+

-- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.

|

| 21 |

+

"""

|

| 22 |

+

|

| 23 |

+

def __init__(self, opt):

|

| 24 |

+

"""Initialize the class; save the options in the class

|

| 25 |

+

|

| 26 |

+

Parameters:

|

| 27 |

+

opt (Option class)-- stores all the experiment flags; needs to be a subclass of BaseOptions

|

| 28 |

+

"""

|

| 29 |

+

self.opt = opt

|

| 30 |

+

self.root = opt.dataroot

|

| 31 |