Update README.md

Fixed "apre-training" to "a pre-training".

README.md

CHANGED

|

@@ -9,7 +9,7 @@ license: apache-2.0

|

|

| 9 |

|

| 10 |

# Introduction

|

| 11 |

|

| 12 |

-

UL2 is a unified framework for pretraining models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD),

|

| 13 |

|

| 14 |

|

| 15 |

|

|

|

|

| 9 |

|

| 10 |

# Introduction

|

| 11 |

|

| 12 |

+

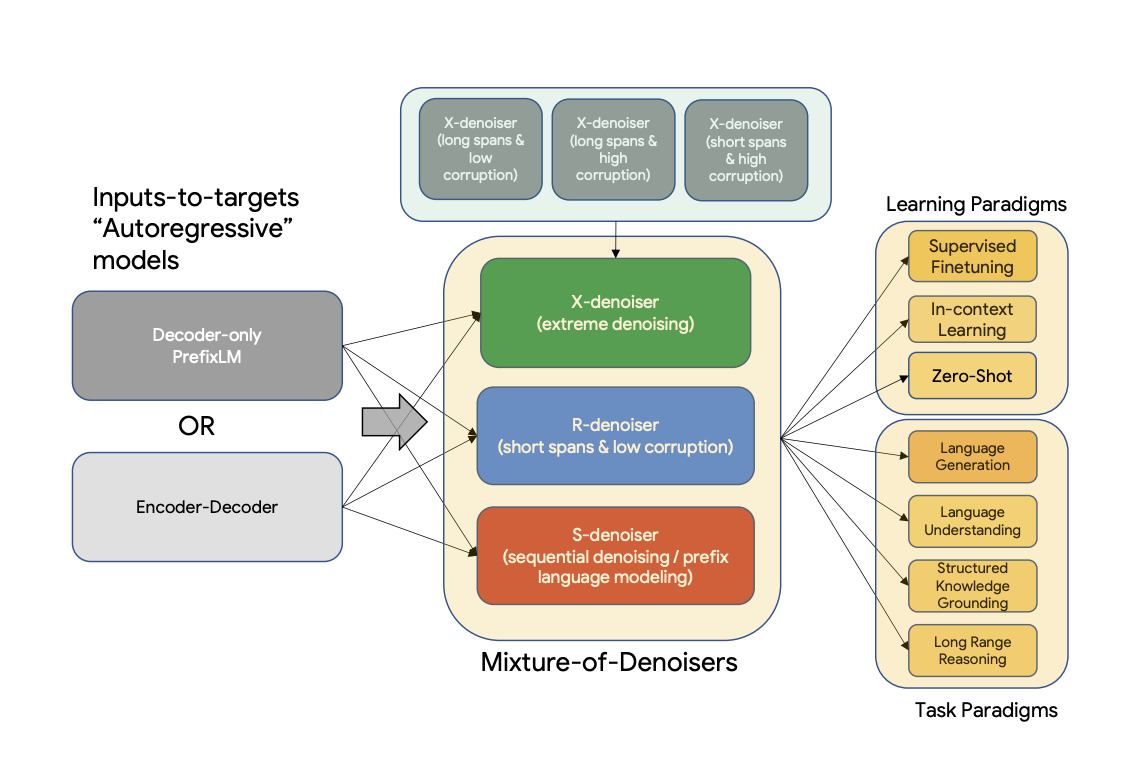

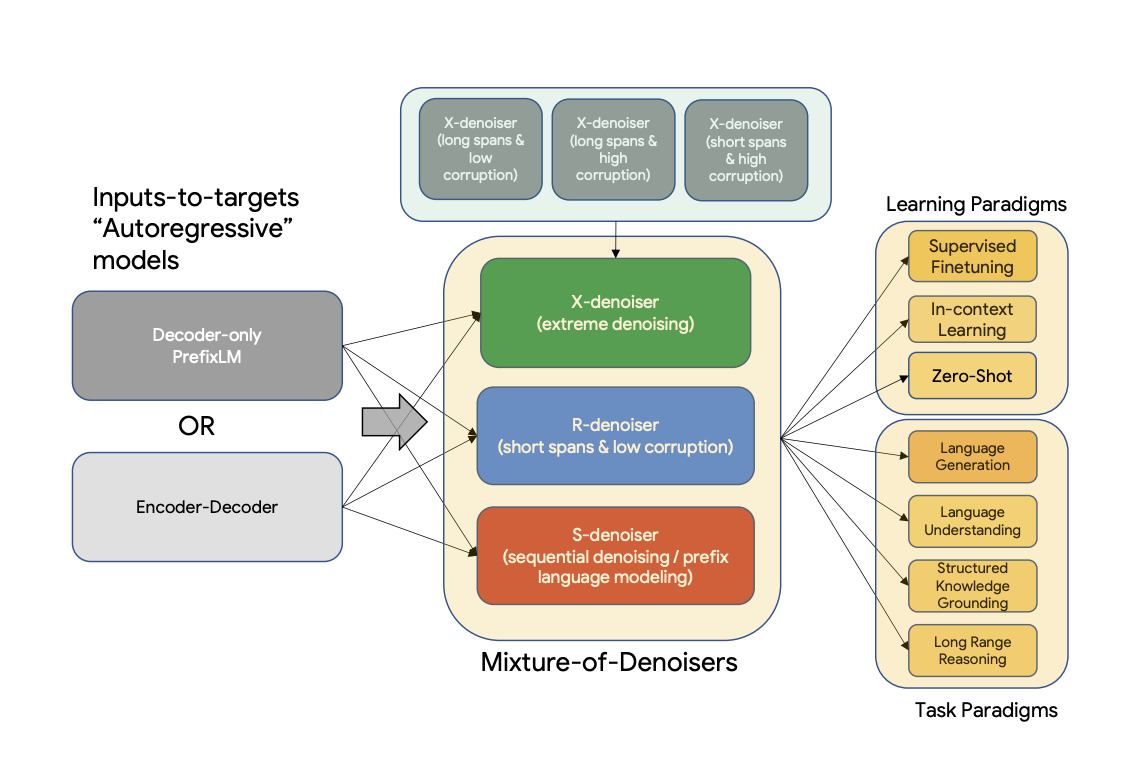

UL2 is a unified framework for pretraining models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms together. UL2 introduces a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes.

|

| 13 |

|

| 14 |

|

| 15 |

|