---

language:

- multilingual

- af

- am

- ar

- az

- be

- bg

- bn

- ca

- ceb

- co

- cs

- cy

- da

- de

- el

- en

- eo

- es

- et

- eu

- fa

- fi

- fil

- fr

- fy

- ga

- gd

- gl

- gu

- ha

- haw

- hi

- hmn

- ht

- hu

- hy

- ig

- is

- it

- iw

- ja

- jv

- ka

- kk

- km

- kn

- ko

- ku

- ky

- la

- lb

- lo

- lt

- lv

- mg

- mi

- mk

- ml

- mn

- mr

- ms

- mt

- my

- ne

- nl

- no

- ny

- pa

- pl

- ps

- pt

- ro

- ru

- sd

- si

- sk

- sl

- sm

- sn

- so

- sq

- sr

- st

- su

- sv

- sw

- ta

- te

- tg

- th

- tr

- uk

- und

- ur

- uz

- vi

- xh

- yi

- yo

- zh

- zu

datasets:

- mc4

license: apache-2.0

---

# ByT5 - xxl

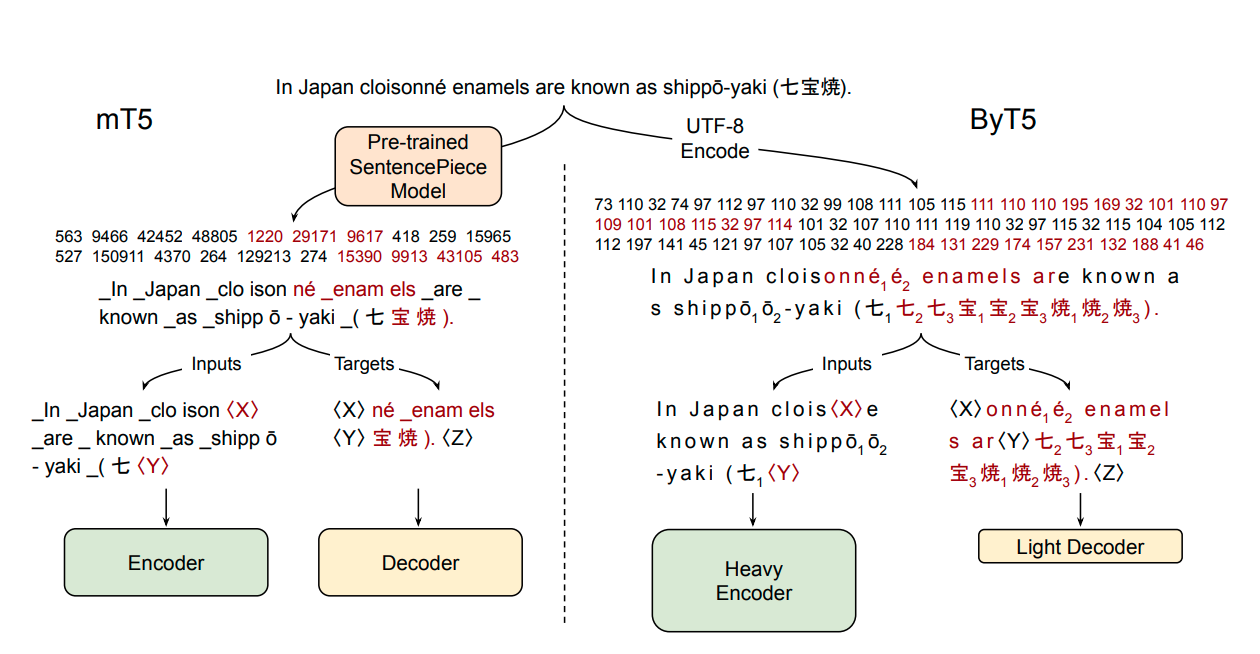

ByT5 is a tokenizer-free version of [Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) and generally follows the architecture of [MT5](https://huggingface.co/google/mt5-xxl).

ByT5 was pre-trained only on [mC4](https://www.tensorflow.org/datasets/catalog/c4#c4multilingual), without any supervised training, using an average span mask of 20 UTF-8 characters. Therefore, this model has to be fine-tuned before it is usable on a downstream task.

ByT5 works especially well on noisy text data, *e.g.*, `google/byt5-xxl` significantly outperforms [mt5-xxl](https://huggingface.co/google/mt5-xxl) on [TweetQA](https://arxiv.org/abs/1907.06292).

Paper: [ByT5: Towards a token-free future with pre-trained byte-to-byte models](https://arxiv.org/abs/2105.13626)

Authors: *Linting Xue, Aditya Barua, Noah Constant, Rami Al-Rfou, Sharan Narang, Mihir Kale, Adam Roberts, Colin Raffel*

## Example Inference

ByT5 works on raw UTF-8 bytes and can be used without a tokenizer:

```python
from transformers import T5ForConditionalGeneration
import torch

model = T5ForConditionalGeneration.from_pretrained('google/byt5-xxl')

input_ids = torch.tensor([list("Life is like a box of chocolates.".encode("utf-8"))]) + 3  # add 3 for special tokens
labels = torch.tensor([list("La vie est comme une boîte de chocolat.".encode("utf-8"))]) + 3  # add 3 for special tokens

loss = model(input_ids, labels=labels).loss  # forward pass
```
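The byte-to-id mapping used above can be looked at in isolation, without loading the model. The following is a minimal sketch in plain Python, assuming that ids 0 to 2 are reserved for special tokens (which is why the example adds 3) and that the 256 byte values follow directly after them. The helper names `encode_bytes` and `decode_ids` are hypothetical and not part of any library.

```python
from typing import List

# Assumption: the first three ids are reserved for special tokens,
# so byte value b is represented by id b + 3.
NUM_SPECIAL_TOKENS = 3


def encode_bytes(text: str) -> List[int]:
    """Map a string to ids: one id per UTF-8 byte, shifted by the offset."""
    return [b + NUM_SPECIAL_TOKENS for b in text.encode("utf-8")]


def decode_ids(ids: List[int]) -> str:
    """Invert encode_bytes, skipping ids outside the byte range."""
    lo, hi = NUM_SPECIAL_TOKENS, NUM_SPECIAL_TOKENS + 256
    raw = bytes(i - NUM_SPECIAL_TOKENS for i in ids if lo <= i < hi)
    # Ignore incomplete multi-byte sequences instead of raising.
    return raw.decode("utf-8", errors="ignore")


ids = encode_bytes("boîte")
print(len("boîte"), len(ids))  # "î" takes two bytes, so there are more ids than characters
print(decode_ids(ids))
```

Because non-ASCII characters occupy several bytes, the id sequence is generally longer than the character count, which is worth keeping in mind when choosing maximum sequence lengths.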

For batched inference and training, however, it is recommended to use a tokenizer class for padding:

```python
from transformers import T5ForConditionalGeneration, AutoTokenizer

model = T5ForConditionalGeneration.from_pretrained('google/byt5-xxl')
tokenizer = AutoTokenizer.from_pretrained('google/byt5-xxl')

model_inputs = tokenizer(["Life is like a box of chocolates.", "Today is Monday."], padding="longest", return_tensors="pt")
labels = tokenizer(["La vie est comme une boîte de chocolat.", "Aujourd'hui c'est lundi."], padding="longest", return_tensors="pt").input_ids

loss = model(**model_inputs, labels=labels).loss  # forward pass
```
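To make the role of padding concrete, here is a rough sketch in plain Python of what padding a batch to its longest sequence involves. It is an illustration only, not the tokenizer's actual implementation. It assumes a padding id of 0, an end-of-sequence id of 1 appended to every sequence, and the byte offset of 3 from the example above. The function name `pad_batch` is hypothetical.

```python
from typing import List, Tuple

PAD_ID = 0  # assumption: id used for padding
EOS_ID = 1  # assumption: id appended at the end of each sequence
OFFSET = 3  # byte values are shifted past the reserved special-token ids


def pad_batch(texts: List[str]) -> Tuple[List[List[int]], List[List[int]]]:
    """Encode each text as shifted UTF-8 bytes and pad to the longest sequence."""
    encoded = [[b + OFFSET for b in t.encode("utf-8")] + [EOS_ID] for t in texts]
    longest = max(len(ids) for ids in encoded)
    # Right-pad shorter sequences so the batch forms a rectangular array.
    input_ids = [ids + [PAD_ID] * (longest - len(ids)) for ids in encoded]
    # 1 marks real positions, 0 marks padding the model should not attend to.
    attention_mask = [[1] * len(ids) + [0] * (longest - len(ids)) for ids in encoded]
    return input_ids, attention_mask


input_ids, attention_mask = pad_batch(["Life is like a box of chocolates.", "Today is Monday."])
print([len(row) for row in input_ids])  # both rows now have the same length
print(attention_mask[1])
```

The attention mask is what lets the model distinguish real bytes from padding. Without it, sequences of different lengths could not share a batch without the padding influencing the result.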

## Abstract

Most widely-used pre-trained language models operate on sequences of tokens corresponding to word or subword units. Encoding text as a sequence of tokens requires a tokenizer, which is typically created as an independent artifact from the model. Token-free models that instead operate directly on raw text (bytes or characters) have many benefits: they can process text in any language out of the box, they are more robust to noise, and they minimize technical debt by removing complex and error-prone text preprocessing pipelines. Since byte or character sequences are longer than token sequences, past work on token-free models has often introduced new model architectures designed to amortize the cost of operating directly on raw text. In this paper, we show that a standard Transformer architecture can be used with minimal modifications to process byte sequences. We carefully characterize the trade-offs in terms of parameter count, training FLOPs, and inference speed, and show that byte-level models are competitive with their token-level counterparts. We also demonstrate that byte-level models are significantly more robust to noise and perform better on tasks that are sensitive to spelling and pronunciation. As part of our contribution, we release a new set of pre-trained byte-level Transformer models based on the T5 architecture, as well as all code and data used in our experiments.
