readme: add initial version

- README.md +280 -0

- stats/figures/all_corpus_stats.png +0 -0

- stats/figures/bl_corpus_stats.png +0 -0

- stats/figures/finnish_europeana_corpus_stats.png +0 -0

- stats/figures/french_europeana_corpus_stats.png +0 -0

- stats/figures/german_europeana_corpus_stats.png +0 -0

- stats/figures/pretraining_loss_finnish_europeana.png +0 -0

- stats/figures/pretraining_loss_historic-multilingual.png +0 -0

- stats/figures/pretraining_loss_historic_english.png +0 -0

- stats/figures/pretraining_loss_swedish_europeana.png +0 -0

- stats/figures/swedish_europeana_corpus_stats.png +0 -0

README.md

ADDED

|

@@ -0,0 +1,280 @@

| 1 |

+

---
language: english
license: mit
widget:
- text: "and I cannot conceive the reafon why [MASK] hath"
---

|

| 7 |

+

|

| 8 |

+

# Historic Language Models (HLMs)

|

| 9 |

+

|

| 10 |

+

## Languages

|

| 11 |

+

|

| 12 |

+

Our Historic Language Models Zoo supports the following languages, listed with their training data sources:

|

| 13 |

+

|

| 14 |

+

| Language | Training data | Size
| -------- | ------------- | ----
| German   | [Europeana](http://www.europeana-newspapers.eu/) | 13-28GB (filtered)
| French   | [Europeana](http://www.europeana-newspapers.eu/) | 11-31GB (filtered)
| English  | [British Library](https://data.bl.uk/digbks/db14.html) | 24GB (year filtered)
| Finnish  | [Europeana](http://www.europeana-newspapers.eu/) | 1.2GB
| Swedish  | [Europeana](http://www.europeana-newspapers.eu/) | 1.1GB

|

| 21 |

+

|

| 22 |

+

## Models

|

| 23 |

+

|

| 24 |

+

At the moment, the following models are available on the model hub:

|

| 25 |

+

|

| 26 |

+

| Model identifier                              | Model Hub link
| --------------------------------------------- | --------------------------------------------------------------------------
| `dbmdz/bert-base-historic-multilingual-cased` | [here](https://huggingface.co/dbmdz/bert-base-historic-multilingual-cased)
| `dbmdz/bert-base-historic-english-cased`      | [here](https://huggingface.co/dbmdz/bert-base-historic-english-cased)
| `dbmdz/bert-base-finnish-europeana-cased`     | [here](https://huggingface.co/dbmdz/bert-base-finnish-europeana-cased)
| `dbmdz/bert-base-swedish-europeana-cased`     | [here](https://huggingface.co/dbmdz/bert-base-swedish-europeana-cased)
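As a rough illustration of how one of the models listed above might be queried, the following sketch wraps the `fill-mask` pipeline from the `transformers` library. The helper name `top_predictions` and the `main` function are illustrative and not part of this repository; the example sentence is the widget text from the model card header. Running `main()` assumes `transformers` is installed and the model can be downloaded.

```python
from typing import Callable, Dict, List

MODEL_ID = "dbmdz/bert-base-historic-english-cased"
EXAMPLE = "and I cannot conceive the reafon why [MASK] hath"


def top_predictions(fill_mask: Callable[..., List[Dict]], text: str, k: int = 5) -> List[str]:
    """Return the k most likely fillers for the single [MASK] token in text.

    fill_mask is any callable that behaves like a transformers fill-mask
    pipeline: it takes the text plus a top_k argument and returns a list of
    dicts that each contain a "token_str" entry.
    """
    results = fill_mask(text, top_k=k)
    return [result["token_str"] for result in results]


def main() -> None:
    # Imported lazily so the helper above works without transformers installed.
    from transformers import pipeline

    fill_mask = pipeline("fill-mask", model=MODEL_ID)
    print(top_predictions(fill_mask, EXAMPLE))
```

Calling `main()` prints the top candidate tokens; the same helper should work with the other model identifiers in the table by changing `MODEL_ID`.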

|

| 32 |

+

|

| 33 |

+

# Corpora Stats

|

| 34 |

+

|

| 35 |

+

## German Europeana Corpus

|

| 36 |

+

|

| 37 |

+

We provide statistics for different OCR confidence thresholds, which we use to shrink the corpus and keep less noisy data:

|

| 39 |

+

|

| 40 |

+

| OCR confidence | Size
| -------------- | ----
| **0.60**       | 28GB
| 0.65           | 18GB
| 0.70           | 13GB
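The thresholding in the table above can be pictured as a simple filter. This is only a sketch: the README does not describe the data layout, so the `(text, confidence)` pairs, the function name `filter_by_ocr_confidence`, and the choice of an inclusive comparison are all assumptions made for illustration.

```python
from typing import Iterable, Iterator, Tuple


def filter_by_ocr_confidence(
    pages: Iterable[Tuple[str, float]], threshold: float
) -> Iterator[str]:
    """Yield the text of every page whose OCR confidence reaches the threshold.

    pages is a hypothetical stream of (text, confidence) pairs; the comparison
    is inclusive, which is an assumption rather than documented behaviour.
    """
    for text, confidence in pages:
        if confidence >= threshold:
            yield text


# Toy data: raising the threshold keeps fewer (but cleaner) pages.
sample = [("noisy page", 0.59), ("usable page", 0.60), ("clean page", 0.72)]
kept_at_060 = list(filter_by_ocr_confidence(sample, 0.60))
kept_at_070 = list(filter_by_ocr_confidence(sample, 0.70))
```

A higher threshold trades corpus size for text quality, which matches the shrinking sizes in the table.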

|

| 45 |

+

|

| 46 |

+

For the final corpus we use an OCR confidence of 0.6 (28GB). The following plot shows the distribution of tokens per year:

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

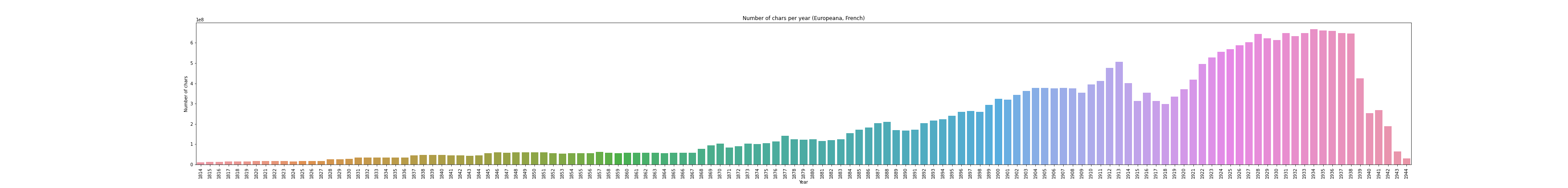

## French Europeana Corpus

|

| 51 |

+

|

| 52 |

+

As with German, we use different OCR confidence thresholds:

|

| 53 |

+

|

| 54 |

+

| OCR confidence | Size
| -------------- | ----
| 0.60           | 31GB
| 0.65           | 27GB
| **0.70**       | 27GB
| 0.75           | 23GB
| 0.80           | 11GB

|

| 61 |

+

|

| 62 |

+

For the final corpus we use an OCR confidence of 0.7 (27GB). The following plot shows the distribution of tokens per year:

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

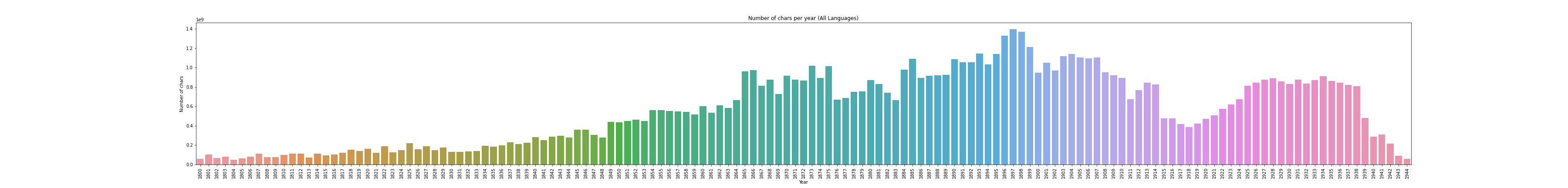

| 66 |

+

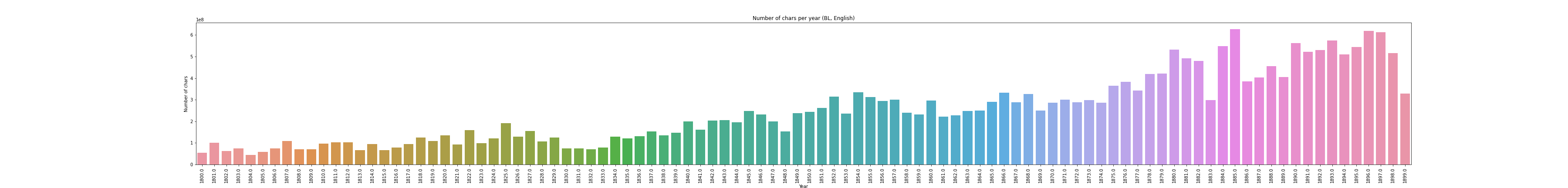

## British Library Corpus

|

| 67 |

+

|

| 68 |

+

Metadata is taken from [here](https://data.bl.uk/digbks/DB21.html). Stats including year filtering:

|

| 69 |

+

|

| 70 |

+

| Years             | Size
| ----------------- | ----
| ALL               | 24GB
| >= 1800 && < 1900 | 24GB

|

| 74 |

+

|

| 75 |

+

We use the year-filtered variant. The following plot shows the distribution of tokens per year:

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

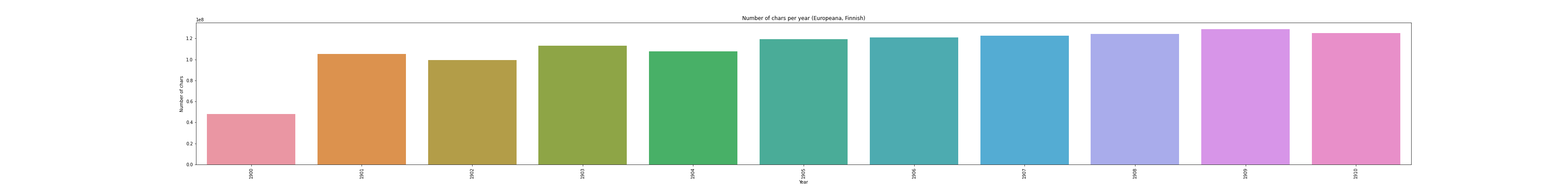

## Finnish Europeana Corpus

|

| 80 |

+

|

| 81 |

+

| OCR confidence | Size
| -------------- | ----
| 0.60           | 1.2GB

|

| 84 |

+

|

| 85 |

+

The following plot shows the distribution of tokens per year:

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

## Swedish Europeana Corpus

|

| 90 |

+

|

| 91 |

+

| OCR confidence | Size
| -------------- | ----
| 0.60           | 1.1GB

|

| 94 |

+

|

| 95 |

+

The following plot shows the distribution of tokens per year:

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

## All Corpora

|

| 100 |

+

|

| 101 |

+

The following plot shows the distribution of tokens per year for the complete training corpus:

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

# Multilingual Vocab generation

|

| 106 |

+

|

| 107 |

+

For the first attempt, we use the first 10GB of each pretraining corpus. We upsample both Finnish and Swedish to ~10GB.

|

| 108 |

+

The following table shows the exact sizes used for generating the 32k and 64k subword vocabs:

|

| 109 |

+

|

| 110 |

+

| Language | Size
| -------- | ----
| German   | 10GB
| French   | 10GB
| English  | 10GB
| Finnish  | 9.5GB
| Swedish  | 9.7GB

|

| 117 |

+

|

| 118 |

+

We then calculate the subword fertility rate and the proportion of `[UNK]` tokens on the following NER corpora:

|

| 119 |

+

|

| 120 |

+

| Language | NER corpora
| -------- | ------------------
| German   | CLEF-HIPE, NewsEye
| French   | CLEF-HIPE, NewsEye
| English  | CLEF-HIPE
| Finnish  | NewsEye
| Swedish  | NewsEye

|

| 127 |

+

|

| 128 |

+

Breakdown of subword fertility rate and unknown portion per language for the 32k vocab:

|

| 129 |

+

|

| 130 |

+

| Language | Subword fertility  | Unknown portion
| -------- | ------------------ | ---------------
| German   | 1.43               | 0.0004
| French   | 1.25               | 0.0001
| English  | 1.25               | 0.0
| Finnish  | 1.69               | 0.0007
| Swedish  | 1.43               | 0.0

|

| 137 |

+

|

| 138 |

+

Breakdown of subword fertility rate and unknown portion per language for the 64k vocab:

|

| 139 |

+

|

| 140 |

+

| Language | Subword fertility  | Unknown portion
| -------- | ------------------ | ---------------
| German   | 1.31               | 0.0004
| French   | 1.16               | 0.0001
| English  | 1.17               | 0.0
| Finnish  | 1.54               | 0.0007
| Swedish  | 1.32               | 0.0
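For readers unfamiliar with the two metrics reported in this section, here is a minimal sketch of how they could be computed. It assumes subword fertility means the average number of subwords per word and that the unknown portion is the share of produced subwords equal to `[UNK]`; the README does not spell out either definition, and the function name `fertility_and_unk` and the toy tokenizer are illustrative only.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def fertility_and_unk(
    words: Iterable[str],
    tokenize: Callable[[str], List[str]],
    unk_token: str = "[UNK]",
) -> Tuple[float, float]:
    """Return (subwords per word, fraction of subwords that are unk_token)."""
    n_words = n_subwords = n_unk = 0
    for word in words:
        pieces = tokenize(word)
        n_words += 1
        n_subwords += len(pieces)
        n_unk += sum(1 for piece in pieces if piece == unk_token)
    if n_words == 0 or n_subwords == 0:
        return 0.0, 0.0
    return n_subwords / n_words, n_unk / n_subwords


# Toy stand-in for a WordPiece tokenizer, used only to make the sketch runnable.
TOY_VOCAB: Dict[str, List[str]] = {
    "the": ["the"],
    "reafon": ["rea", "##fon"],
}


def toy_tokenize(word: str) -> List[str]:
    return TOY_VOCAB.get(word, ["[UNK]"])


fertility, unk_portion = fertility_and_unk(["the", "reafon", "§"], toy_tokenize)
```

With a real tokenizer, the `tokenize` method of a loaded `transformers` tokenizer could be passed in place of `toy_tokenize`. A lower fertility means words are split into fewer pieces, which is why the 64k vocab scores lower than the 32k vocab in the tables above.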

|

| 147 |

+

|

| 148 |

+

# Final pretraining corpora

|

| 149 |

+

|

| 150 |

+

We upsample Swedish and Finnish to ~27GB. The final stats for all pretraining corpora can be seen here:

|

| 151 |

+

|

| 152 |

+

| Language | Size
| -------- | ----
| German   | 28GB
| French   | 27GB
| English  | 24GB
| Finnish  | 27GB
| Swedish  | 27GB

|

| 159 |

+

|

| 160 |

+

Total size is approximately 130GB.
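The upsampling step can be read as a simple ratio between target and original size. The sketch below uses only the rounded sizes from the tables in this README; the function name `upsampling_factor` is illustrative, and how the corpora were actually duplicated is not stated here.

```python
# Rounded corpus sizes in GB, copied from the tables in this README.
ORIGINAL_GB = {"Finnish": 1.2, "Swedish": 1.1}
FINAL_GB = {"German": 28, "French": 27, "English": 24, "Finnish": 27, "Swedish": 27}
TARGET_GB = 27


def upsampling_factor(original_gb: float, target_gb: float) -> float:
    """How many times larger the target size is than the original corpus."""
    if original_gb <= 0:
        raise ValueError("original_gb must be positive")
    return target_gb / original_gb


factors = {lang: upsampling_factor(size, TARGET_GB) for lang, size in ORIGINAL_GB.items()}
total_gb = sum(FINAL_GB.values())

for lang, factor in factors.items():
    print(f"{lang}: about {factor:.1f}x")
print(f"Sum of rounded per-language sizes: {total_gb}GB")
```

Both small corpora end up repeated more than twenty times. The rounded per-language sizes sum to 133GB, slightly above the stated total, which is expected when each figure is itself rounded.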

|

| 161 |

+

|

| 162 |

+

# Pretraining

|

| 163 |

+

|

| 164 |

+

## Multilingual model

|

| 165 |

+

|

| 166 |

+

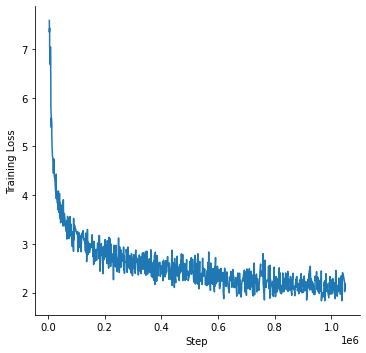

We train a multilingual BERT model using the 32k vocab with the official BERT implementation

|

| 167 |

+

on a v3-32 TPU using the following parameters:

|

| 168 |

+

|

| 169 |

+

```bash
python3 run_pretraining.py --input_file gs://histolectra/historic-multilingual-tfrecords/*.tfrecord \
  --output_dir gs://histolectra/bert-base-historic-multilingual-cased \
  --bert_config_file ./config.json \
  --max_seq_length=512 \
  --max_predictions_per_seq=75 \
  --do_train=True \
  --train_batch_size=128 \
  --num_train_steps=3000000 \
  --learning_rate=1e-4 \
  --save_checkpoints_steps=100000 \
  --keep_checkpoint_max=20 \
  --use_tpu=True \
  --tpu_name=electra-2 \
  --num_tpu_cores=32
```
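To put the parameters above into perspective, the following back-of-the-envelope calculation derives the training volume they imply. It assumes that `train_batch_size` is the global batch size across all TPU cores and that checkpoints are written exactly every `save_checkpoints_steps` steps with only the most recent ones kept; the variable names are illustrative.

```python
# Values copied from the run_pretraining.py command above.
TRAIN_BATCH_SIZE = 128
NUM_TRAIN_STEPS = 3_000_000
MAX_SEQ_LENGTH = 512
SAVE_CHECKPOINTS_STEPS = 100_000
KEEP_CHECKPOINT_MAX = 20

# Number of training sequences processed over the whole run.
sequences_seen = TRAIN_BATCH_SIZE * NUM_TRAIN_STEPS

# Upper bound on token positions, since padded sequences contain fewer real tokens.
max_token_positions = sequences_seen * MAX_SEQ_LENGTH

# Periodic checkpoints written versus retained at the end of training.
checkpoints_written = NUM_TRAIN_STEPS // SAVE_CHECKPOINTS_STEPS
checkpoints_kept = min(checkpoints_written, KEEP_CHECKPOINT_MAX)
first_retained_step = (checkpoints_written - checkpoints_kept + 1) * SAVE_CHECKPOINTS_STEPS

print(f"sequences seen:        {sequences_seen:,}")
print(f"max token positions:   {max_token_positions:,}")
print(f"checkpoints retained:  {checkpoints_kept} (from step {first_retained_step:,})")
```

Under these assumptions the run processes 384 million sequences, and only checkpoints from step 1,100,000 onwards remain available once training finishes.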

|

| 185 |

+

|

| 186 |

+

The following plot shows the pretraining loss curve:

|

| 187 |

+

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

## English model

|

| 191 |

+

|

| 192 |

+

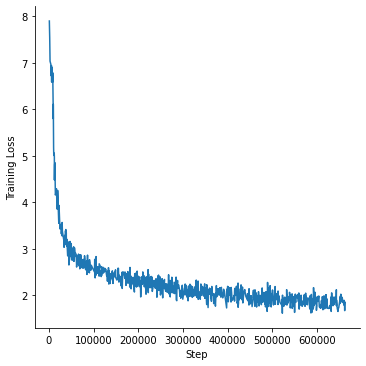

The English BERT model was trained on texts from the British Library corpus with the Hugging Face
JAX/FLAX implementation for 10 epochs (approx. 1M steps) on a v3-8 TPU, using the following command:

|

| 194 |

+

|

| 195 |

+

```bash
python3 run_mlm_flax.py --model_type bert \
  --config_name /mnt/datasets/bert-base-historic-english-cased/ \
  --tokenizer_name /mnt/datasets/bert-base-historic-english-cased/ \
  --train_file /mnt/datasets/bl-corpus/bl_1800-1900_extracted.txt \
  --validation_file /mnt/datasets/bl-corpus/english_validation.txt \
  --max_seq_length 512 \
  --per_device_train_batch_size 16 \
  --learning_rate 1e-4 \
  --num_train_epochs 10 \
  --preprocessing_num_workers 96 \
  --output_dir /mnt/datasets/bert-base-historic-english-cased-512-noadafactor-10e \
  --save_steps 2500 \
  --eval_steps 2500 \
  --warmup_steps 10000 \
  --line_by_line \
  --pad_to_max_length
```

|

| 213 |

+

|

| 214 |

+

The following plot shows the pretraining loss curve:

|

| 215 |

+

|

| 216 |

+

|

| 217 |

+

|

| 218 |

+

## Finnish model

|

| 219 |

+

|

| 220 |

+

The Finnish BERT model was trained on texts from the Finnish part of Europeana with the Hugging Face
JAX/FLAX implementation for 40 epochs (approx. 1M steps) on a v3-8 TPU, using the following command:

|

| 222 |

+

|

| 223 |

+

```bash
python3 run_mlm_flax.py --model_type bert \
  --config_name /mnt/datasets/bert-base-finnish-europeana-cased/ \
  --tokenizer_name /mnt/datasets/bert-base-finnish-europeana-cased/ \
  --train_file /mnt/datasets/hlms/extracted_content_Finnish_0.6.txt \
  --validation_file /mnt/datasets/hlms/finnish_validation.txt \
  --max_seq_length 512 \
  --per_device_train_batch_size 16 \
  --learning_rate 1e-4 \
  --num_train_epochs 40 \
  --preprocessing_num_workers 96 \
  --output_dir /mnt/datasets/bert-base-finnish-europeana-cased-512-dupe1-noadafactor-40e \
  --save_steps 2500 \
  --eval_steps 2500 \
  --warmup_steps 10000 \
  --line_by_line \
  --pad_to_max_length
```

|

| 241 |

+

|

| 242 |

+

The following plot shows the pretraining loss curve:

|

| 243 |

+

|

| 244 |

+

|

| 245 |

+

|

| 246 |

+

## Swedish model

|

| 247 |

+

|

| 248 |

+

The Swedish BERT model was trained on texts from the Swedish part of Europeana with the Hugging Face
JAX/FLAX implementation for 40 epochs (approx. 660K steps) on a v3-8 TPU, using the following command:

|

| 250 |

+

|

| 251 |

+

```bash
python3 run_mlm_flax.py --model_type bert \
  --config_name /mnt/datasets/bert-base-swedish-europeana-cased/ \
  --tokenizer_name /mnt/datasets/bert-base-swedish-europeana-cased/ \
  --train_file /mnt/datasets/hlms/extracted_content_Swedish_0.6.txt \
  --validation_file /mnt/datasets/hlms/swedish_validation.txt \
  --max_seq_length 512 \
  --per_device_train_batch_size 16 \
  --learning_rate 1e-4 \
  --num_train_epochs 40 \
  --preprocessing_num_workers 96 \
  --output_dir /mnt/datasets/bert-base-swedish-europeana-cased-512-dupe1-noadafactor-40e \
  --save_steps 2500 \
  --eval_steps 2500 \
  --warmup_steps 10000 \
  --line_by_line \
  --pad_to_max_length
```
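The step counts quoted for the three JAX/FLAX runs can be related to the batch settings in their commands. The sketch below assumes that a v3-8 TPU exposes 8 devices, so that a per-device batch size of 16 gives a global batch of 128, and it treats the quoted step counts as exact even though the README gives them as approximations. The names `RUNS` and `per_epoch` are illustrative.

```python
from typing import Dict, Tuple

NUM_DEVICES = 8        # assumption: one device per core of a v3-8 TPU
PER_DEVICE_BATCH = 16  # --per_device_train_batch_size in the commands above
GLOBAL_BATCH = NUM_DEVICES * PER_DEVICE_BATCH

# language -> (epochs, approximate total steps), as quoted in this README.
RUNS: Dict[str, Tuple[int, int]] = {
    "English": (10, 1_000_000),
    "Finnish": (40, 1_000_000),
    "Swedish": (40, 660_000),
}


def per_epoch(epochs: int, total_steps: int, global_batch: int = GLOBAL_BATCH) -> Tuple[int, int]:
    """Return (steps per epoch, training examples per epoch) implied by a run."""
    steps = total_steps // epochs
    return steps, steps * global_batch


summary = {lang: per_epoch(epochs, steps) for lang, (epochs, steps) in RUNS.items()}

for lang, (steps, examples) in summary.items():
    print(f"{lang}: ~{steps:,} steps/epoch, ~{examples:,} examples/epoch")
```

Because the commands use `--line_by_line`, each training example corresponds to one line of the training file, so these figures give a rough idea of how many lines each corpus contains.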

|

| 269 |

+

|

| 270 |

+

The following plot shows the pretraining loss curve:

|

| 271 |

+

|

| 272 |

+

|

| 273 |

+

|

| 274 |

+

# Acknowledgments

|

| 275 |

+

|

| 276 |

+

Research supported with Cloud TPUs from Google's TPU Research Cloud (TRC) program, previously known as

|

| 277 |

+

TensorFlow Research Cloud (TFRC). Many thanks for providing access to the TRC ❤️

|

| 278 |

+

|

| 279 |

+

Thanks to the generous support from the [Hugging Face](https://huggingface.co/) team,

|

| 280 |

+

it is possible to download both cased and uncased models from their S3 storage 🤗

|

stats/figures/all_corpus_stats.png

ADDED

|

stats/figures/bl_corpus_stats.png

ADDED

|

stats/figures/finnish_europeana_corpus_stats.png

ADDED

|

stats/figures/french_europeana_corpus_stats.png

ADDED

|

stats/figures/german_europeana_corpus_stats.png

ADDED

|

stats/figures/pretraining_loss_finnish_europeana.png

ADDED

|

stats/figures/pretraining_loss_historic-multilingual.png

ADDED

|

stats/figures/pretraining_loss_historic_english.png

ADDED

|

stats/figures/pretraining_loss_swedish_europeana.png

ADDED

|

stats/figures/swedish_europeana_corpus_stats.png

ADDED

|