Upload 19 files

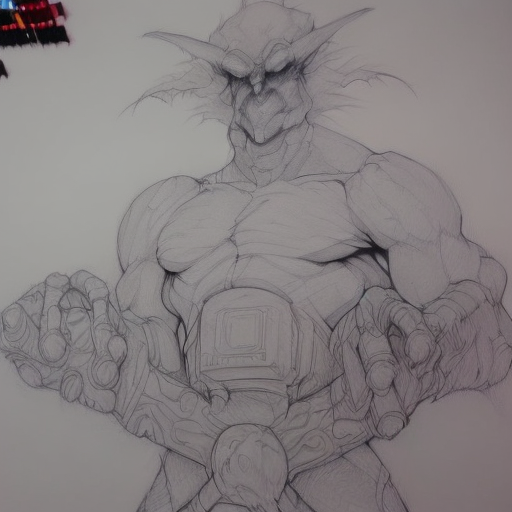

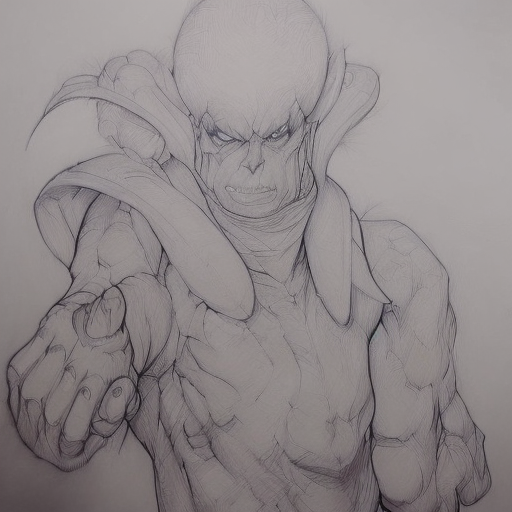

This model is based on SFW detailed pencil drawings.

Instance prompt is evang

You are not allowed to host, fine-tune, or run inference with the model or its derivatives on websites/apps/etc. If you want to, please email us at theprotoartent@gmail

You are free to use the outputs (images) of the model for commercial purposes in teams of 10 or fewer.

You can't use the model to deliberately produce or share illegal or harmful outputs or content.

The authors claim no rights on the outputs you generate. You are free to use them and are accountable for their use, which must not go against the provisions set in the license.
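A minimal local-inference sketch, subject to the license terms above. It assumes a complete diffusers-format checkpoint directory on disk (the `checkpoint_dir` argument is hypothetical; this commit only shows part of each checkpoint folder) and a CUDA GPU. Putting the `evang` token in front of a subject is an assumed prompt convention, not something the card specifies. The step count and guidance scale mirror `save_infer_steps` and `save_guidance_scale` from `args.json`.

```python
from pathlib import Path

INSTANCE_TOKEN = "evang"  # instance prompt stated in the model card


def build_prompt(subject: str, token: str = INSTANCE_TOKEN) -> str:
    """Prefix a plain-language subject with the instance token (assumed convention)."""
    subject = subject.strip()
    return f"{token}, {subject}" if subject else token


def generate(checkpoint_dir: str, subject: str, out_path: str = "out.png") -> Path:
    """Run one local generation; torch and diffusers are imported lazily."""
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(
        checkpoint_dir,              # hypothetical local path to a full checkpoint
        torch_dtype=torch.float16,
        safety_checker=None,         # model_index.json lists no safety checker
    ).to("cuda")
    image = pipe(
        build_prompt(subject),
        num_inference_steps=20,      # save_infer_steps in args.json
        guidance_scale=7.5,          # save_guidance_scale in args.json
    ).images[0]
    image.save(out_path)
    return Path(out_path)


print(build_prompt("portrait of an old sailor"))
```

`generate` is not called here, so the snippet runs without the heavy dependencies installed.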

- args.json +60 -0

- model.ckpt +3 -0

- pencil/._1300 +0 -0

- pencil/._5200 +0 -0

- pencil/._6500 +0 -0

- pencil/1300/._feature_extractor +0 -0

- pencil/1300/args.json +60 -0

- pencil/1300/feature_extractor/preprocessor_config.json +28 -0

- pencil/1300/model_index.json +33 -0

- pencil/5200/._samples +0 -0

- pencil/5200/._text_encoder +0 -0

- pencil/5200/args.json +60 -0

- pencil/5200/model_index.json +33 -0

- pencil/5200/samples/0.png +0 -0

- pencil/5200/samples/1.png +0 -0

- pencil/5200/samples/2.png +0 -0

- pencil/5200/samples/3.png +0 -0

- pencil/5200/text_encoder/config.json +25 -0

- pencil/6500/args.json +60 -0

@@ -0,0 +1,60 @@
+{
+  "pretrained_model_name_or_path": "runwayml/stable-diffusion-v1-5",
+  "pretrained_vae_name_or_path": "stabilityai/sd-vae-ft-mse",
+  "revision": "fp16",
+  "tokenizer_name": null,
+  "instance_data_dir": null,
+  "class_data_dir": null,
+  "instance_prompt": null,
+  "class_prompt": null,
+  "save_sample_prompt": "evang",
+  "save_sample_negative_prompt": null,
+  "n_save_sample": 4,
+  "save_guidance_scale": 7.5,
+  "save_infer_steps": 20,
+  "pad_tokens": false,
+  "with_prior_preservation": true,
+  "prior_loss_weight": 1.0,
+  "num_class_images": 50,
+  "output_dir": "/content/drive/MyDrive/stable_diffusion_weights/pencil",
+  "seed": 1337,
+  "resolution": 512,
+  "center_crop": false,
+  "train_text_encoder": true,
+  "train_batch_size": 1,
+  "sample_batch_size": 4,
+  "num_train_epochs": 83,
+  "max_train_steps": 6500,
+  "gradient_accumulation_steps": 1,
+  "gradient_checkpointing": false,
+  "learning_rate": 1e-06,
+  "scale_lr": false,
+  "lr_scheduler": "constant",
+  "lr_warmup_steps": 0,
+  "use_8bit_adam": true,
+  "adam_beta1": 0.9,
+  "adam_beta2": 0.999,
+  "adam_weight_decay": 0.01,
+  "adam_epsilon": 1e-08,
+  "max_grad_norm": 1.0,
+  "push_to_hub": false,
+  "hub_token": null,
+  "hub_model_id": null,
+  "logging_dir": "logs",
+  "log_interval": 10,
+  "save_interval": 1300,
+  "save_min_steps": 0,
+  "mixed_precision": "fp16",
+  "not_cache_latents": false,
+  "hflip": false,
+  "local_rank": -1,
+  "concepts_list": [
+    {
+      "instance_prompt": "evang",
+      "class_prompt": "photo of a illustration style",
+      "instance_data_dir": "/content/data/zwx",
+      "class_data_dir": "/content/data/style"
+    }
+  ],
+  "read_prompts_from_txts": false
+}
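The `save_interval` and `max_train_steps` values in the hunk above suggest where checkpoints were written. The sketch below assumes the training script saves whenever the step count is a positive multiple of `save_interval` (and at least `save_min_steps`), plus once at the final step; that rule is an assumption about the script, not something this commit shows.

```python
import json

# Only the three fields needed here, copied from the args.json hunk.
ARGS_JSON = '{"max_train_steps": 6500, "save_interval": 1300, "save_min_steps": 0}'


def checkpoint_steps(args: dict) -> list:
    """Steps at which a checkpoint would be saved under the assumed rule."""
    interval = args["save_interval"]
    last = args["max_train_steps"]
    steps = [
        step
        for step in range(interval, last + 1, interval)
        if step >= args["save_min_steps"]
    ]
    if not steps or steps[-1] != last:
        steps.append(last)  # assumed final save at the end of training
    return steps


steps = checkpoint_steps(json.loads(ARGS_JSON))
print(steps)
```

Under that assumption the run would produce five checkpoints; the `pencil/1300`, `pencil/5200`, and `pencil/6500` folders in the file list are a subset of them.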

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:115faf88cdb30e432b435210481c2243e13715d6601f2c8c9ab41ccb53475b21
+size 2132791380
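The three lines above are a Git LFS pointer: the repository stores this small text stub, while the real checkpoint lives in LFS storage. A sketch of parsing the pointer and checking a downloaded file against it follows; the function names are illustrative, and the local path passed to `matches_pointer` would be whatever the reader downloaded.

```python
import hashlib
from pathlib import Path

POINTER_TEXT = """version https://git-lfs.github.com/spec/v1
oid sha256:115faf88cdb30e432b435210481c2243e13715d6601f2c8c9ab41ccb53475b21
size 2132791380
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line, then split the oid into algorithm and digest."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


def matches_pointer(path: str, pointer: dict, chunk_size: int = 1 << 20) -> bool:
    """Return True if the file has the pointer's size and hash."""
    file_path = Path(path)
    if file_path.stat().st_size != pointer["size"]:
        return False
    hasher = hashlib.new(pointer["algo"])
    with file_path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)  # hash in chunks to avoid loading ~2 GB at once
    return hasher.hexdigest() == pointer["digest"]


pointer = parse_lfs_pointer(POINTER_TEXT)
print(f"{pointer['size'] / 1e9:.2f} GB, {pointer['algo']}")
```

Checking the size first is a cheap way to reject a truncated download before hashing the whole file.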

Binary file (4.1 kB).

Binary file (4.1 kB).

Binary file (4.1 kB).

Binary file (4.1 kB).

@@ -0,0 +1,60 @@
+{
+  "pretrained_model_name_or_path": "runwayml/stable-diffusion-v1-5",
+  "pretrained_vae_name_or_path": "stabilityai/sd-vae-ft-mse",
+  "revision": "fp16",
+  "tokenizer_name": null,
+  "instance_data_dir": null,
+  "class_data_dir": null,
+  "instance_prompt": null,
+  "class_prompt": null,
+  "save_sample_prompt": "evang",
+  "save_sample_negative_prompt": null,
+  "n_save_sample": 4,
+  "save_guidance_scale": 7.5,
+  "save_infer_steps": 20,
+  "pad_tokens": false,
+  "with_prior_preservation": true,
+  "prior_loss_weight": 1.0,
+  "num_class_images": 50,
+  "output_dir": "/content/drive/MyDrive/stable_diffusion_weights/pencil",
+  "seed": 1337,
+  "resolution": 512,
+  "center_crop": false,
+  "train_text_encoder": true,
+  "train_batch_size": 1,
+  "sample_batch_size": 4,
+  "num_train_epochs": 83,
+  "max_train_steps": 6500,
+  "gradient_accumulation_steps": 1,
+  "gradient_checkpointing": false,
+  "learning_rate": 1e-06,
+  "scale_lr": false,
+  "lr_scheduler": "constant",
+  "lr_warmup_steps": 0,
+  "use_8bit_adam": true,
+  "adam_beta1": 0.9,
+  "adam_beta2": 0.999,
+  "adam_weight_decay": 0.01,
+  "adam_epsilon": 1e-08,
+  "max_grad_norm": 1.0,
+  "push_to_hub": false,
+  "hub_token": null,
+  "hub_model_id": null,
+  "logging_dir": "logs",
+  "log_interval": 10,
+  "save_interval": 1300,
+  "save_min_steps": 0,
+  "mixed_precision": "fp16",
+  "not_cache_latents": false,
+  "hflip": false,
+  "local_rank": -1,
+  "concepts_list": [
+    {
+      "instance_prompt": "evang",
+      "class_prompt": "photo of a illustration style",
+      "instance_data_dir": "/content/data/zwx",
+      "class_data_dir": "/content/data/style"
+    }
+  ],
+  "read_prompts_from_txts": false
+}
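The `with_prior_preservation` and `prior_loss_weight` fields point to a DreamBooth-style objective. As a hedged reading (the training script itself is not part of this commit, and all symbols below are introduced here for illustration), the loss would combine a denoising term on the instance images with a weighted denoising term on the class images:

```latex
\mathcal{L}
  = \mathbb{E}_{z,\,\epsilon,\,t}\!\left[
      \lVert \epsilon - \epsilon_\theta(z_t, t, c_{\text{inst}}) \rVert_2^2
    \right]
  + \lambda\,
    \mathbb{E}_{z',\,\epsilon',\,t'}\!\left[
      \lVert \epsilon' - \epsilon_\theta(z'_{t'}, t', c_{\text{class}}) \rVert_2^2
    \right],
\qquad \lambda = \texttt{prior\_loss\_weight} = 1.0
```

Here $c_{\text{inst}}$ would be the encoding of the instance prompt (`evang`), $c_{\text{class}}$ the encoding of the class prompt, and the second expectation would run over the `num_class_images` = 50 class images.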

@@ -0,0 +1,28 @@
+{
+  "crop_size": {
+    "height": 224,
+    "width": 224
+  },
+  "do_center_crop": true,
+  "do_convert_rgb": true,
+  "do_normalize": true,
+  "do_rescale": true,
+  "do_resize": true,
+  "feature_extractor_type": "CLIPFeatureExtractor",
+  "image_mean": [
+    0.48145466,
+    0.4578275,
+    0.40821073
+  ],
+  "image_processor_type": "CLIPFeatureExtractor",
+  "image_std": [
+    0.26862954,
+    0.26130258,
+    0.27577711
+  ],
+  "resample": 3,
+  "rescale_factor": 0.00392156862745098,
+  "size": {
+    "shortest_edge": 224
+  }
+}
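The 28-line hunk above appears to be the CLIP feature-extractor configuration. A pure-Python sketch of the two per-pixel steps it describes (`do_rescale`, then `do_normalize`) is given below; resizing and center-cropping to 224 pixels are left out, and `normalize_pixel` is an illustrative name, not a library function.

```python
# Values copied from the preprocessor configuration hunk.
IMAGE_MEAN = (0.48145466, 0.4578275, 0.40821073)
IMAGE_STD = (0.26862954, 0.26130258, 0.27577711)
RESCALE_FACTOR = 0.00392156862745098  # equals 1 / 255


def normalize_pixel(rgb):
    """Map an 8-bit RGB triple to the normalized values the config describes."""
    return tuple(
        (channel * RESCALE_FACTOR - mean) / std
        for channel, mean, std in zip(rgb, IMAGE_MEAN, IMAGE_STD)
    )


for name, pixel in {"black": (0, 0, 0), "white": (255, 255, 255)}.items():
    print(name, [round(value, 4) for value in normalize_pixel(pixel)])
```

The `resample` value of 3 corresponds to bicubic interpolation in Pillow's numbering.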

@@ -0,0 +1,33 @@
+{
+  "_class_name": "StableDiffusionPipeline",
+  "_diffusers_version": "0.14.0.dev0",
+  "feature_extractor": [
+    "transformers",
+    "CLIPFeatureExtractor"
+  ],
+  "requires_safety_checker": true,
+  "safety_checker": [
+    null,
+    null
+  ],
+  "scheduler": [
+    "diffusers",
+    "PNDMScheduler"
+  ],
+  "text_encoder": [
+    "transformers",
+    "CLIPTextModel"
+  ],
+  "tokenizer": [
+    "transformers",
+    "CLIPTokenizer"
+  ],
+  "unet": [
+    "diffusers",
+    "UNet2DConditionModel"
+  ],
+  "vae": [
+    "diffusers",
+    "AutoencoderKL"
+  ]
+}
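The 33-line hunk above has the shape of a diffusers `model_index.json`: each component maps to a `[library, class]` pair. The sketch below lists those pairs and flags components set to `[null, null]`; `list_components` is an illustrative helper, not part of diffusers.

```python
import json

# Content of the model_index.json hunk, compacted.
MODEL_INDEX = """
{
  "_class_name": "StableDiffusionPipeline",
  "_diffusers_version": "0.14.0.dev0",
  "feature_extractor": ["transformers", "CLIPFeatureExtractor"],
  "requires_safety_checker": true,
  "safety_checker": [null, null],
  "scheduler": ["diffusers", "PNDMScheduler"],
  "text_encoder": ["transformers", "CLIPTextModel"],
  "tokenizer": ["transformers", "CLIPTokenizer"],
  "unet": ["diffusers", "UNet2DConditionModel"],
  "vae": ["diffusers", "AutoencoderKL"]
}
"""


def list_components(index: dict) -> dict:
    """Map component name to 'library.Class', or None when the entry is [null, null]."""
    components = {}
    for name, value in index.items():
        if name.startswith("_") or not isinstance(value, list):
            continue  # skip metadata and plain flags such as requires_safety_checker
        library, class_name = value
        components[name] = None if class_name is None else f"{library}.{class_name}"
    return components


components = list_components(json.loads(MODEL_INDEX))
for name, target in components.items():
    print(f"{name:18s} {target or '(not included)'}")
```

Note that `safety_checker` is empty even though `requires_safety_checker` is `true`, so a loader would likely warn that the checker is disabled.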

Binary file (4.1 kB).

Binary file (4.1 kB).

@@ -0,0 +1,60 @@
+{
+  "pretrained_model_name_or_path": "runwayml/stable-diffusion-v1-5",
+  "pretrained_vae_name_or_path": "stabilityai/sd-vae-ft-mse",
+  "revision": "fp16",
+  "tokenizer_name": null,
+  "instance_data_dir": null,
+  "class_data_dir": null,
+  "instance_prompt": null,
+  "class_prompt": null,
+  "save_sample_prompt": "evang",
+  "save_sample_negative_prompt": null,
+  "n_save_sample": 4,
+  "save_guidance_scale": 7.5,
+  "save_infer_steps": 20,
+  "pad_tokens": false,
+  "with_prior_preservation": true,
+  "prior_loss_weight": 1.0,
+  "num_class_images": 50,
+  "output_dir": "/content/drive/MyDrive/stable_diffusion_weights/pencil",
+  "seed": 1337,
+  "resolution": 512,
+  "center_crop": false,
+  "train_text_encoder": true,
+  "train_batch_size": 1,
+  "sample_batch_size": 4,
+  "num_train_epochs": 83,
+  "max_train_steps": 6500,
+  "gradient_accumulation_steps": 1,
+  "gradient_checkpointing": false,
+  "learning_rate": 1e-06,
+  "scale_lr": false,
+  "lr_scheduler": "constant",
+  "lr_warmup_steps": 0,
+  "use_8bit_adam": true,
+  "adam_beta1": 0.9,
+  "adam_beta2": 0.999,
+  "adam_weight_decay": 0.01,
+  "adam_epsilon": 1e-08,
+  "max_grad_norm": 1.0,
+  "push_to_hub": false,
+  "hub_token": null,
+  "hub_model_id": null,
+  "logging_dir": "logs",
+  "log_interval": 10,
+  "save_interval": 1300,
+  "save_min_steps": 0,
+  "mixed_precision": "fp16",
+  "not_cache_latents": false,
+  "hflip": false,
+  "local_rank": -1,
+  "concepts_list": [
+    {
+      "instance_prompt": "evang",
+      "class_prompt": "photo of a illustration style",
+      "instance_data_dir": "/content/data/zwx",
+      "class_data_dir": "/content/data/style"
+    }
+  ],
+  "read_prompts_from_txts": false
+}

@@ -0,0 +1,33 @@
+{
+  "_class_name": "StableDiffusionPipeline",
+  "_diffusers_version": "0.14.0.dev0",
+  "feature_extractor": [
+    "transformers",
+    "CLIPFeatureExtractor"
+  ],
+  "requires_safety_checker": true,
+  "safety_checker": [
+    null,
+    null
+  ],
+  "scheduler": [
+    "diffusers",
+    "PNDMScheduler"
+  ],
+  "text_encoder": [
+    "transformers",
+    "CLIPTextModel"
+  ],
+  "tokenizer": [
+    "transformers",
+    "CLIPTokenizer"
+  ],
+  "unet": [
+    "diffusers",
+    "UNet2DConditionModel"
+  ],
+  "vae": [
+    "diffusers",
+    "AutoencoderKL"
+  ]
+}

@@ -0,0 +1,25 @@
+{
+  "_name_or_path": "runwayml/stable-diffusion-v1-5",
+  "architectures": [
+    "CLIPTextModel"
+  ],
+  "attention_dropout": 0.0,
+  "bos_token_id": 0,
+  "dropout": 0.0,
+  "eos_token_id": 2,
+  "hidden_act": "quick_gelu",
+  "hidden_size": 768,
+  "initializer_factor": 1.0,
+  "initializer_range": 0.02,
+  "intermediate_size": 3072,
+  "layer_norm_eps": 1e-05,
+  "max_position_embeddings": 77,
+  "model_type": "clip_text_model",
+  "num_attention_heads": 12,
+  "num_hidden_layers": 12,
+  "pad_token_id": 1,
+  "projection_dim": 768,
+  "torch_dtype": "float32",
+  "transformers_version": "4.26.1",
+  "vocab_size": 49408
+}
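As a rough sanity check on the text-encoder configuration above, the parameter count can be estimated from the config alone. The sketch assumes the usual CLIP text-transformer layout (token and position embeddings, then per layer four attention projections with bias, a two-layer MLP with bias, and two layer norms, plus one final layer norm) and no projection head; the helper names are illustrative.

```python
# Fields copied from the text_encoder config hunk.
CONFIG = {
    "vocab_size": 49408,
    "hidden_size": 768,
    "intermediate_size": 3072,
    "max_position_embeddings": 77,
    "num_hidden_layers": 12,
}


def linear_params(n_in: int, n_out: int) -> int:
    """Weights plus bias of a dense layer."""
    return n_in * n_out + n_out


def layer_norm_params(width: int) -> int:
    """Scale plus shift of a layer norm."""
    return 2 * width


def clip_text_param_count(cfg: dict) -> int:
    d, ff = cfg["hidden_size"], cfg["intermediate_size"]
    embeddings = cfg["vocab_size"] * d + cfg["max_position_embeddings"] * d
    attention = 4 * linear_params(d, d)               # q, k, v, output projections
    mlp = linear_params(d, ff) + linear_params(ff, d)
    layer = attention + mlp + 2 * layer_norm_params(d)
    return embeddings + cfg["num_hidden_layers"] * layer + layer_norm_params(d)


param_count = clip_text_param_count(CONFIG)
print(f"{param_count:,} parameters, about {param_count * 4 / 1e6:.0f} MB in float32")
```

Since `train_text_encoder` is `true` in `args.json`, these weights would have been updated during fine-tuning along with the UNet.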

@@ -0,0 +1,60 @@
+{
+  "pretrained_model_name_or_path": "runwayml/stable-diffusion-v1-5",
+  "pretrained_vae_name_or_path": "stabilityai/sd-vae-ft-mse",
+  "revision": "fp16",
+  "tokenizer_name": null,
+  "instance_data_dir": null,
+  "class_data_dir": null,
+  "instance_prompt": null,
+  "class_prompt": null,
+  "save_sample_prompt": "evang",
+  "save_sample_negative_prompt": null,
+  "n_save_sample": 4,
+  "save_guidance_scale": 7.5,
+  "save_infer_steps": 20,
+  "pad_tokens": false,
+  "with_prior_preservation": true,
+  "prior_loss_weight": 1.0,
+  "num_class_images": 50,
+  "output_dir": "/content/drive/MyDrive/stable_diffusion_weights/pencil",
+  "seed": 1337,
+  "resolution": 512,
+  "center_crop": false,
+  "train_text_encoder": true,
+  "train_batch_size": 1,
+  "sample_batch_size": 4,
+  "num_train_epochs": 83,
+  "max_train_steps": 6500,
+  "gradient_accumulation_steps": 1,
+  "gradient_checkpointing": false,
+  "learning_rate": 1e-06,
+  "scale_lr": false,
+  "lr_scheduler": "constant",
+  "lr_warmup_steps": 0,
+  "use_8bit_adam": true,
+  "adam_beta1": 0.9,
+  "adam_beta2": 0.999,
+  "adam_weight_decay": 0.01,
+  "adam_epsilon": 1e-08,
+  "max_grad_norm": 1.0,
+  "push_to_hub": false,
+  "hub_token": null,
+  "hub_model_id": null,
+  "logging_dir": "logs",
+  "log_interval": 10,
+  "save_interval": 1300,
+  "save_min_steps": 0,
+  "mixed_precision": "fp16",
+  "not_cache_latents": false,
+  "hflip": false,
+  "local_rank": -1,
+  "concepts_list": [
+    {
+      "instance_prompt": "evang",
+      "class_prompt": "photo of a illustration style",
+      "instance_data_dir": "/content/data/zwx",
+      "class_data_dir": "/content/data/style"
+    }
+  ],
+  "read_prompts_from_txts": false
+}