GPTree: Towards Explainable Decision-Making via LLM-powered Decision Trees

Overview

- The paper proposes a novel approach called GPTree that combines large language models (LLMs) and decision trees to enable explainable decision-making.

- GPTree uses LLMs to generate natural language explanations for the decisions made by the decision tree model.

- The authors evaluate GPTree on a dataset related to founder success, demonstrating its ability to provide interpretable and faithful explanations for its predictions.

Plain English Explanation

The paper introduces a new technique called GPTree that aims to make automated decision-making more understandable and transparent. Decision trees are a type of machine learning model that makes predictions by applying a sequence of simple tests to the input. Their structure is open to inspection, but a chain of numeric thresholds does not say in words why each test matters, and large trees can be hard for people to follow.

GPTree combines decision trees with large language models (LLMs), which are powerful AI systems that can understand and generate human-like text. The idea behind GPTree is to use the LLM to write natural-language explanations for the decisions made by the decision tree, so the system can not only make predictions but also give clear, understandable reasons for them.

The authors tested GPTree on a dataset about the success of startup founders and found that it provided interpretable and faithful explanations for its predictions. This suggests that GPTree could be a useful tool for explainable AI in domains where people need to understand the reasoning behind automated decisions.

Key Findings

- GPTree combines decision trees and large language models to enable explainable decision-making.

- The authors evaluated GPTree on a dataset related to founder success, and found that it could provide interpretable and faithful explanations for its predictions.

- This suggests that GPTree could be a useful tool for explainable AI in a variety of domains where it's important for humans to understand the reasoning behind automated decisions.

Technical Explanation

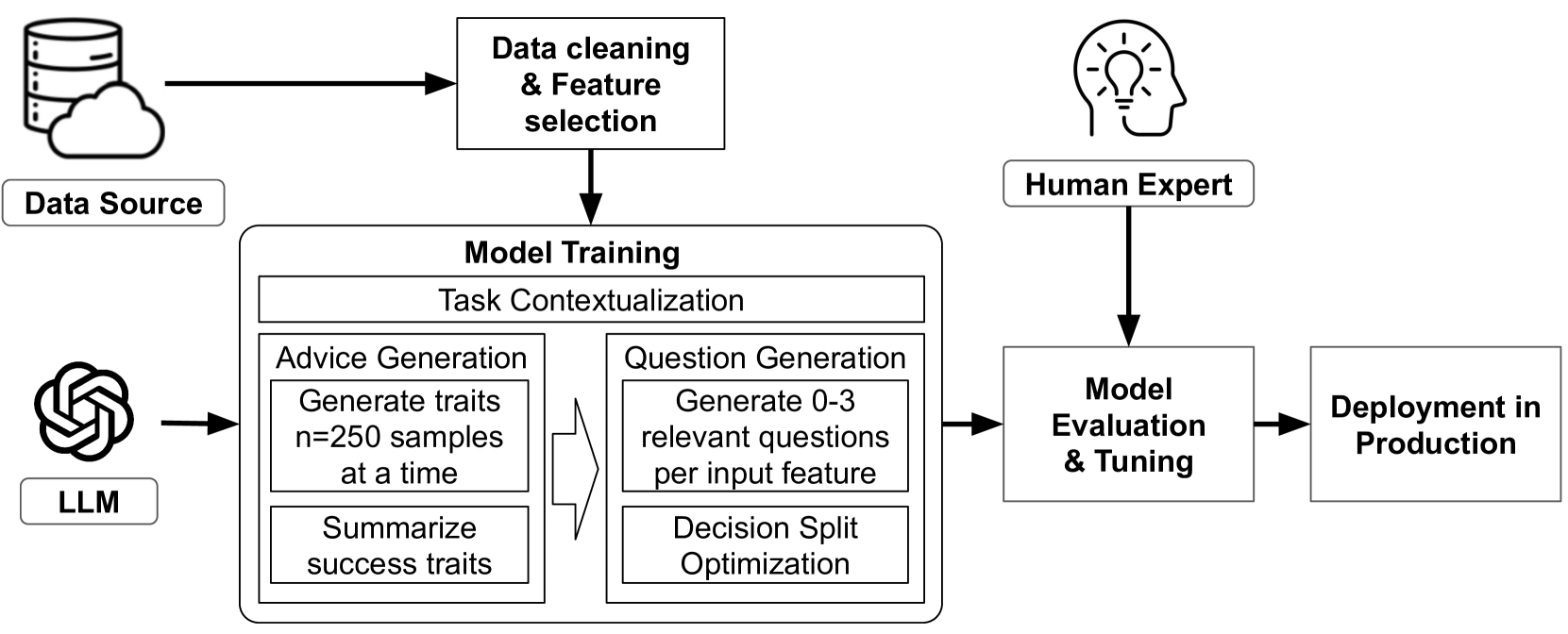

The core idea behind GPTree is to use a large language model (LLM) to generate natural-language explanations for the decisions made by a decision tree model. The authors first train a decision tree on a given dataset and then use the LLM to generate an explanation for each decision node in the tree.
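The first stage, training a tree and then visiting each decision node that will need an explanation, can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes a binary target, a tiny CART-style learner using Gini impurity, and made-up toy data with hypothetical feature names (`prior_exits`, `years_experience`). A real pipeline would more likely use an existing library learner.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List, Optional, Tuple

Row = Dict[str, float]


@dataclass
class Node:
    prediction: int                    # majority class (0 or 1) at this node
    feature: Optional[str] = None      # split feature; None means leaf
    threshold: Optional[float] = None  # go left if value <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def gini(labels: List[int]) -> float:
    """Gini impurity for binary 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 1.0 - p * p - (1.0 - p) * (1.0 - p)


def best_split(rows: List[Row], labels: List[int], features: List[str]):
    """Try midpoints between distinct values; return (weighted_gini, feature, threshold)."""
    best = None
    for f in features:
        values = sorted({r[f] for r in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, features, depth=0, max_depth=2) -> Node:
    node = Node(prediction=int(2 * sum(labels) >= len(labels)))
    if depth >= max_depth or gini(labels) == 0.0:
        return node  # depth limit reached or node is already pure
    split = best_split(rows, labels, features)
    if split is None or split[0] >= gini(labels):
        return node  # no split reduces impurity
    _, f, t = split
    node.feature, node.threshold = f, t
    go_left = [r[f] <= t for r in rows]
    node.left = build_tree([r for r, g in zip(rows, go_left) if g],
                           [y for y, g in zip(labels, go_left) if g],
                           features, depth + 1, max_depth)
    node.right = build_tree([r for r, g in zip(rows, go_left) if not g],
                            [y for y, g in zip(labels, go_left) if not g],
                            features, depth + 1, max_depth)
    return node


def decision_nodes(node: Node, path: str = "root") -> Iterator[Tuple[str, str, float]]:
    """Yield (path, feature, threshold) for each internal node, i.e. each node to explain."""
    if node.feature is None:
        return
    yield path, node.feature, node.threshold
    yield from decision_nodes(node.left, path + "/left")
    yield from decision_nodes(node.right, path + "/right")


# Made-up toy data for illustration only (not from the paper).
rows = [
    {"prior_exits": 0, "years_experience": 2},
    {"prior_exits": 0, "years_experience": 8},
    {"prior_exits": 1, "years_experience": 3},
    {"prior_exits": 2, "years_experience": 10},
]
labels = [0, 0, 1, 1]
tree = build_tree(rows, labels, ["prior_exits", "years_experience"])
for path, feature, threshold in decision_nodes(tree):
    print(path, feature, threshold)  # prints: root prior_exits 0.5
```

Each `(feature, threshold)` pair yielded by `decision_nodes` is the kind of node-level information that would then be handed to the LLM.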

The LLM is pretrained on a large text corpus, which lets it understand and generate human-like language. To explain the tree, the authors give the LLM information about the current decision node: the feature used to make the decision, the threshold value, and the resulting predicted class. The LLM then produces a natural-language account of the reasoning behind that decision.
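The per-node prompting step can be sketched as a template filled with the three inputs named above. The template wording, the `goes_right` flag (which branch of the split is being explained), and the `fake_llm` stand-in are all assumptions for illustration; the summary does not give the paper's actual prompt, and any text-in/text-out LLM client could be passed as `llm`.

```python
from typing import Callable

# Hypothetical template: the summary lists the inputs (feature, threshold,
# predicted class) but not the paper's actual prompt wording.
PROMPT_TEMPLATE = (
    "You are explaining one step of a decision tree.\n"
    "Split feature: {feature}\n"
    "Condition on this branch: {feature} {op} {threshold:g}\n"
    "Predicted class on this branch: {predicted_class}\n"
    "In one or two sentences, explain in plain English why this condition "
    "leads to the predicted class, referring only to the information above."
)


def build_node_prompt(feature: str, threshold: float,
                      predicted_class: str, goes_right: bool) -> str:
    """Fill the template for one branch of a split node."""
    op = ">" if goes_right else "<="
    return PROMPT_TEMPLATE.format(feature=feature, op=op, threshold=threshold,
                                  predicted_class=predicted_class)


def explain_node(feature: str, threshold: float, predicted_class: str,
                 goes_right: bool, llm: Callable[[str], str]) -> str:
    """Send the prompt to any text-in/text-out LLM callable and return its answer."""
    return llm(build_node_prompt(feature, threshold, predicted_class, goes_right)).strip()


def fake_llm(prompt: str) -> str:
    # Offline stand-in for a real model call; replace with an actual LLM client.
    return "  [model output would appear here]  "


prompt = build_node_prompt("years_experience", 5.5, "success", goes_right=True)
print(prompt)
print(explain_node("years_experience", 5.5, "success", True, llm=fake_llm))
```

Keeping the LLM behind a plain callable makes the prompt construction testable without network access and lets the model be swapped freely.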

The authors evaluate GPTree on a dataset about the success of startup founders and find that it provides interpretable and faithful explanations for its predictions. This suggests that GPTree could help enable explainable AI in domains where it's important for humans to understand the reasoning behind automated decisions.
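The summary does not spell out how node-level explanations are combined into an explanation for a single prediction. One natural reading, assumed here, is to follow the sample's root-to-leaf path and collect the conditions it satisfies; each condition would then be explained by the LLM. The hand-built tree, feature names, and class labels below are hypothetical and not learned from the paper's data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    prediction: str
    feature: Optional[str] = None      # None means leaf
    threshold: Optional[float] = None
    left: Optional["Node"] = None      # taken when value <= threshold
    right: Optional["Node"] = None     # taken when value > threshold


def decision_path(node: Node, sample: Dict[str, float]) -> Tuple[str, List[str]]:
    """Return the leaf prediction and the conditions satisfied on the way to it."""
    conditions = []
    while node.feature is not None:
        if sample[node.feature] <= node.threshold:
            conditions.append(f"{node.feature} <= {node.threshold:g}")
            node = node.left
        else:
            conditions.append(f"{node.feature} > {node.threshold:g}")
            node = node.right
    return node.prediction, conditions


# Hypothetical hand-built tree for illustration only.
tree = Node(
    prediction="not_success", feature="prior_exits", threshold=0.5,
    left=Node(prediction="not_success"),
    right=Node(
        prediction="success", feature="years_experience", threshold=5.5,
        left=Node(prediction="not_success"),
        right=Node(prediction="success"),
    ),
)

label, path = decision_path(tree, {"prior_exits": 1, "years_experience": 8})
print(label, path)  # prints: success ['prior_exits > 0.5', 'years_experience > 5.5']
```

Because the explanation is tied to the exact conditions the sample passed through, it stays consistent with what the tree actually computed, which is one way to read the "faithful" claim.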

Critical Analysis

The authors acknowledge that GPTree has some limitations. For example, the quality of the generated explanations depends on the LLM itself and on the data it was trained on. If the LLM has biases or inaccuracies in its knowledge, these can carry over into the explanations it produces.

Additionally, the authors note that GPTree may not be suitable for all types of decision problems, particularly those with highly complex or nuanced decision-making processes. In such cases, the decision tree model may not be able to capture the full complexity of the problem, and the explanations generated by the LLM may not be sufficiently detailed or accurate.
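A standard toy example (not from the paper) makes the complexity point concrete: on XOR data the label depends on an interaction between two features, so no single split can do better than chance, while a depth-2 tree fits it exactly at the cost of three decision nodes instead of one, each needing its own explanation.

```python
from itertools import product

# Toy XOR data (illustrative): the label depends on an interaction of two features.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 1, 1, 0]


def accuracy(predict) -> float:
    return sum(predict(x) == label for x, label in zip(X, y)) / len(X)


def stump(feature_idx: int, left_label: int):
    """Depth-1 tree: one split at 0.5 on a single feature, one class per side.
    (Any other threshold sends all points to one side, which also scores 0.5.)"""
    return lambda x: left_label if x[feature_idx] <= 0.5 else 1 - left_label


def depth2_tree(x) -> int:
    """Depth-2 tree: split on feature 0, then on feature 1 in each branch."""
    if x[0] <= 0.5:
        return 0 if x[1] <= 0.5 else 1
    return 1 if x[1] <= 0.5 else 0


best_stump = max(accuracy(stump(f, c)) for f, c in product(range(2), (0, 1)))
print(best_stump, accuracy(depth2_tree))  # prints: 0.5 1.0
```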

Further research could explore ways to improve the robustness and versatility of GPTree, such as by incorporating additional techniques for interpretable machine learning or by investigating the use of different types of LLMs or decision tree models.

Conclusion

The GPTree approach proposed in this paper represents an interesting step forward in the field of explainable AI. By combining decision trees and large language models, GPTree enables the generation of natural language explanations for automated decision-making, potentially making these systems more transparent and trustworthy for human users. While the approach has some limitations, the authors' evaluation on a dataset related to founder success suggests that GPTree could be a valuable tool for enabling explainable decision-making in a variety of real-world applications.