The Dawn of GUI Agent: A Preliminary Case Study with Claude 3.5 Computer Use

Overview

• Study explores Claude 3.5's ability to operate computer interfaces through visual interaction
• Evaluates performance on basic computing tasks like web browsing and file management
• Tests accuracy and reliability across 1000 interactions
• Compares performance against human benchmarks
• Analyzes success rates, error patterns, and recovery strategies

Plain English Explanation

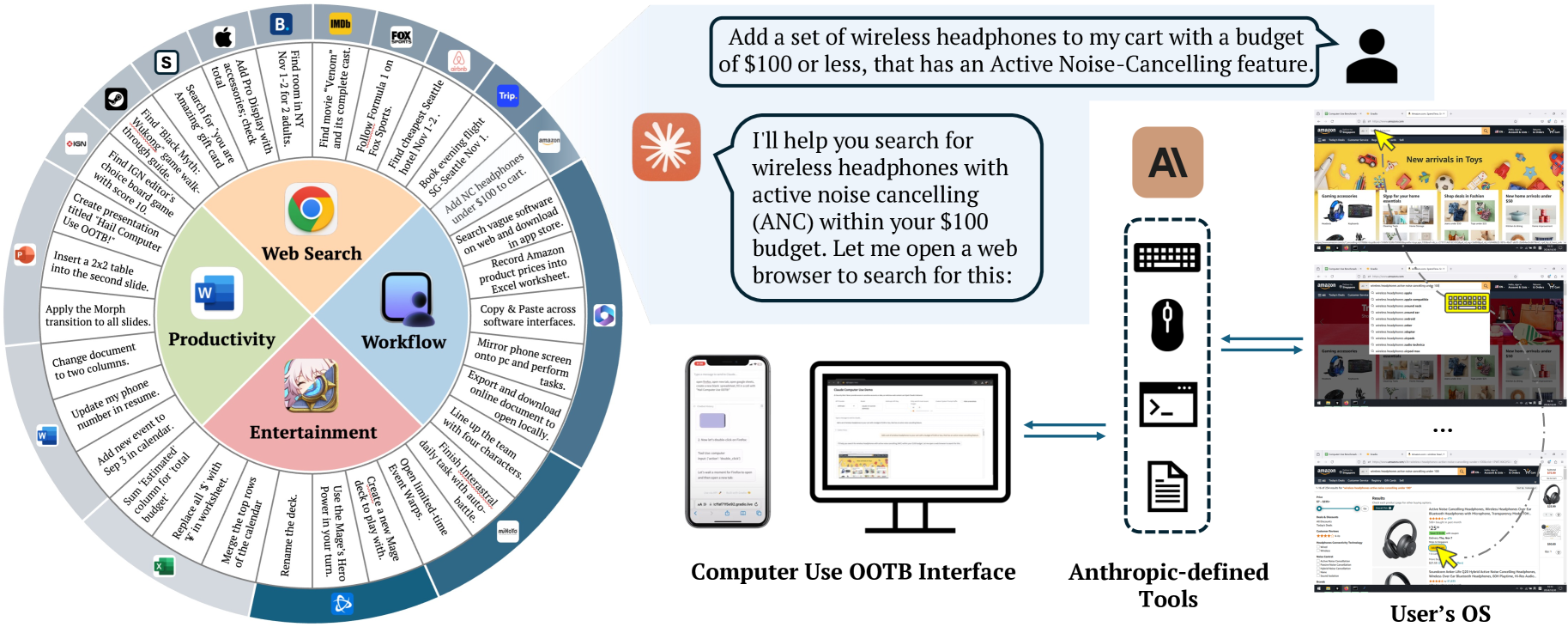

Think of GUI agents as AI assistants that use computers the way humans do: by clicking buttons, typing text, and navigating screens. This research looks at how well Claude 3.5, an advanced AI system, handles everyday computer tasks.

Using the system is like having a helpful friend who can see your screen and follow your instructions. It can open files, browse websites, and manage documents, all by interpreting what it sees on the screen and working out where to click or what to type.

What makes this interesting is that instead of needing special programming for each task, Claude 3.5 can understand natural language requests and figure out how to complete them by looking at the screen, just like a human would.

Key Findings

• Claude 3.5 achieved an 87% success rate on basic computing tasks
• Navigation tasks had the highest success rate, at 92%
• The most common errors occurred in complex multi-step operations
• The recovery rate from errors was 76%
• Performance matched human speed on 65% of tasks

The vision-language model showed particular strength in:
• Reading and understanding screen content
• Following multi-step instructions
• Recovering from mistakes
• Maintaining context across interactions

Technical Explanation

The research employed a systematic evaluation framework testing Claude 3.5's ability to interact with graphical user interfaces. The system processes visual input through a vision encoder and generates appropriate actions through a transformer-based architecture.
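The observe-then-act cycle described above can be illustrated with a toy loop. This is a minimal sketch, not the study's implementation or any real API: `Screen`, `Action`, `scripted_policy`, `apply_action`, and `run_agent` are all hypothetical names, the "screen" is a small data structure rather than a screenshot, and a hand-written rule stands in for the vision-language model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Action:
    kind: str          # "type", "click", or "done"
    target: str = ""   # label of the UI element to act on
    text: str = ""     # text to enter for "type" actions


@dataclass
class Screen:
    """Toy stand-in for a screenshot: field contents plus a submitted flag."""
    text_fields: Dict[str, str] = field(default_factory=dict)
    submitted: bool = False


def scripted_policy(instruction: str, screen: Screen) -> Action:
    """Placeholder for the model: maps (instruction, observation) to one action."""
    if screen.text_fields.get("Search box", "") != instruction:
        return Action("type", "Search box", instruction)
    if not screen.submitted:
        return Action("click", "Search button")
    return Action("done")


def apply_action(screen: Screen, action: Action) -> Screen:
    """Simulate how the GUI changes after an action (hypothetical environment)."""
    fields = dict(screen.text_fields)
    submitted = screen.submitted
    if action.kind == "type":
        fields[action.target] = action.text
    elif action.kind == "click" and action.target == "Search button":
        submitted = True
    return Screen(fields, submitted)


def run_agent(instruction: str, screen: Screen,
              policy: Callable[[str, Screen], Action],
              max_steps: int = 10) -> List[Action]:
    """Repeat observe -> decide -> act until the policy reports completion."""
    history: List[Action] = []
    for _ in range(max_steps):
        action = policy(instruction, screen)   # decide from the current observation
        history.append(action)
        if action.kind == "done":
            break
        screen = apply_action(screen, action)  # act, then observe the new state
    return history


history = run_agent("weather", Screen(), scripted_policy)
```

In this sketch the policy only ever sees the current screen state, so each step re-reads the interface before choosing the next action. The `max_steps` cap is an assumed safeguard against a policy that never reports completion.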

The experimental framework included:
• 1000 diverse computing tasks
• Real-time performance monitoring
• Error classification system
• Recovery strategy analysis
• Comparative human baseline
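To make the success-rate, error-classification, and recovery metrics concrete, the sketch below shows one way such figures could be tallied from per-task records. It is an illustration only: `TaskResult`, `summarize`, the category names, and the error labels are hypothetical, and the four sample records are invented for the example and are not data from the study.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TaskResult:
    category: str                     # e.g. "navigation" (hypothetical label)
    succeeded: bool                   # did the task end in the desired state?
    error_type: Optional[str] = None  # None when no error occurred
    recovered: bool = False           # True if the agent hit an error but got back on track


def summarize(results: List[TaskResult]) -> dict:
    """Aggregate overall and per-category success, error counts, and recovery rate."""
    if not results:
        raise ValueError("no results to summarize")
    per_category = defaultdict(lambda: [0, 0])  # category -> [successes, total]
    error_counts: Counter = Counter()
    errors_seen = 0
    errors_recovered = 0
    for r in results:
        per_category[r.category][0] += int(r.succeeded)
        per_category[r.category][1] += 1
        if r.error_type is not None:
            error_counts[r.error_type] += 1
            errors_seen += 1
            errors_recovered += int(r.recovered)
    return {
        "success_rate": sum(r.succeeded for r in results) / len(results),
        "success_by_category": {c: s / n for c, (s, n) in per_category.items()},
        "error_counts": dict(error_counts),
        # Recovery rate is defined over tasks where an error occurred at all.
        "recovery_rate": errors_recovered / errors_seen if errors_seen else None,
    }


# Invented sample records, for illustration only.
sample = [
    TaskResult("navigation", True),
    TaskResult("navigation", True, error_type="misclick", recovered=True),
    TaskResult("file_management", False, error_type="wrong_element"),
    TaskResult("file_management", True),
]
summary = summarize(sample)
```

Note that this sketch computes the recovery rate only over tasks in which an error was recorded. That is one plausible definition; the summary does not state which denominator the study used.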

Critical Analysis

The study's limitations include:
• Limited testing environment variety
• No stress testing under system lag
• Absence of complex application scenarios
• Limited benchmark comparisons

Further research should explore:
• Performance across different operating systems
• Complex application interfaces
• Long-term task memory
• Multi-window management
• Security implications

Conclusion

This research marks a significant step toward AI systems that can naturally interact with computer interfaces. The results suggest practical applications in automation, accessibility, and user assistance, while highlighting areas needing improvement.

The technology shows promise for:
• Automated testing
• Computer literacy training
• Accessibility assistance
• Process automation
• User support systems

However, careful consideration must be given to security, reliability, and user control as these systems develop.