Upload folder using huggingface_hub (#4)

- aedd4b869b32b7ffee1c85907cc8ec6450d888457a83a42ad0bc821f1a843846 (a0ef2d2d0f73e10070562867ba0df17793d92e68)

- 0b843baa4dada46b4841c000d835849aca95d81f8771a56269909ae346b7f2f0 (c445e710c8f0f01ae0aabc832479dba4fc94d54f)

- README.md +11 -6

- config.json +1 -1

- model/optimized_model.pkl +2 -2

- model/smash_config.json +2 -2

- plots.png +0 -0

README.md

CHANGED

|

@@ -19,27 +19,32 @@ metrics:

|

|

| 19 |

</div>

|

| 20 |

<!-- header end -->

|

| 21 |

|

| 22 |

-

# Simply make AI models cheaper, smaller, faster, and greener!

|

| 23 |

-

|

| 24 |

[](https://twitter.com/PrunaAI)

|

| 25 |

[](https://github.com/PrunaAI)

|

| 26 |

[](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)

|

|

|

|

|

|

|

|

|

|

| 27 |

|

| 28 |

- Give a thumbs up if you like this model!

|

| 29 |

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

|

| 30 |

- Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

| 31 |

- Read the documentations to know more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/)

|

| 32 |

-

-

|

| 33 |

|

| 34 |

## Results

|

| 35 |

|

| 36 |

|

| 37 |

|

| 38 |

-

|

|

|

|

|

|

|

|

|

|

| 39 |

|

| 40 |

## Setup

|

| 41 |

|

| 42 |

You can run the smashed model with these steps:

|

|

|

|

| 43 |

0. Check cuda, torch, packaging requirements are installed. For cuda, check with `nvcc --version` and install with `conda install nvidia/label/cuda-12.1.0::cuda`. For packaging and torch, run `pip install packaging torch`.

|

| 44 |

1. Install the `pruna-engine` available [here](https://pypi.org/project/pruna-engine/) on Pypi. It might take 15 minutes to install.

|

| 45 |

```bash

|

|

@@ -72,9 +77,9 @@ You can run the smashed model with these steps:

|

|

| 72 |

|

| 73 |

The configuration info are in `config.json`.

|

| 74 |

|

| 75 |

-

## License

|

| 76 |

|

| 77 |

-

We follow the same license as the original model. Please check the license of the original model CompVis

|

| 78 |

|

| 79 |

## Want to compress other models?

|

| 80 |

|

|

|

|

| 19 |

</div>

|

| 20 |

<!-- header end -->

|

| 21 |

|

|

|

|

|

|

|

| 22 |

[](https://twitter.com/PrunaAI)

|

| 23 |

[](https://github.com/PrunaAI)

|

| 24 |

[](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)

|

| 25 |

+

[](https://discord.gg/CP4VSgck)

|

| 26 |

+

|

| 27 |

+

# Simply make AI models cheaper, smaller, faster, and greener!

|

| 28 |

|

| 29 |

- Give a thumbs up if you like this model!

|

| 30 |

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

|

| 31 |

- Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

| 32 |

- Read the documentations to know more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/)

|

| 33 |

+

- Join Pruna AI community on Discord [here](https://discord.gg/CP4VSgck) to share feedback/suggestions or get help.

|

| 34 |

|

| 35 |

## Results

|

| 36 |

|

| 37 |

|

| 38 |

|

| 39 |

+

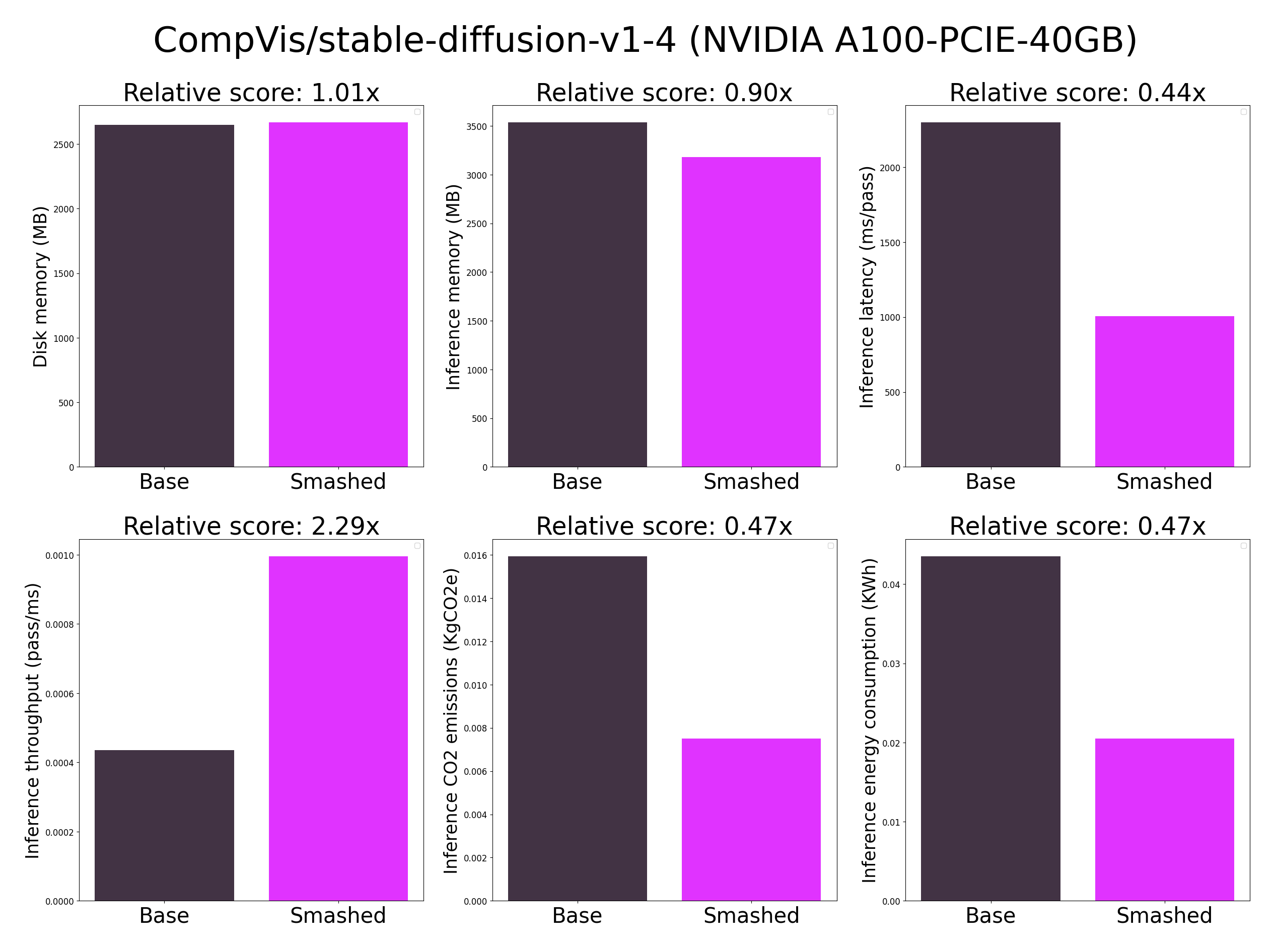

**Important remarks:**

|

| 40 |

+

- The quality of the model output might slightly vary compared to the base model. There might be minimal quality loss.

|

| 41 |

+

- These results were obtained on NVIDIA A100-PCIE-40GB with configuration described in config.json and are obtained after a hardware warmup. Efficiency results may vary in other settings (e.g. other hardware, image size, batch size, ...).

|

| 42 |

+

- You can request premium access to more compression methods and tech support for your specific use-cases [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

| 43 |

|

| 44 |

## Setup

|

| 45 |

|

| 46 |

You can run the smashed model with these steps:

|

| 47 |

+

|

| 48 |

0. Check cuda, torch, packaging requirements are installed. For cuda, check with `nvcc --version` and install with `conda install nvidia/label/cuda-12.1.0::cuda`. For packaging and torch, run `pip install packaging torch`.

|

| 49 |

1. Install the `pruna-engine` available [here](https://pypi.org/project/pruna-engine/) on Pypi. It might take 15 minutes to install.

|

| 50 |

```bash

|

|

|

|

| 77 |

|

| 78 |

The configuration info are in `config.json`.

|

| 79 |

|

| 80 |

+

## Credits & License

|

| 81 |

|

| 82 |

+

We follow the same license as the original model. Please check the license of the original model CompVis/stable-diffusion-v1-4 before using this model which provided the base model.

|

| 83 |

|

| 84 |

## Want to compress other models?

|

| 85 |

|

config.json

CHANGED

@@ -1 +1 @@
-{"pruners": "None", "pruning_ratio":
+{"pruners": "None", "pruning_ratio": "None", "factorizers": "None", "quantizers": "None", "n_quantization_bits": 32, "output_deviation": 0.0, "compilers": "['step_caching', 'tiling', 'diffusers2']", "static_batch": true, "static_shape": false, "controlnet": "None", "unet_dim": 4, "device": "cuda", "batch_size": 1, "max_batch_size": 1, "image_height": 512, "image_width": 512, "version": "1.4", "task": "txt2img", "weight_name": "None", "model_name": "CompVis/stable-diffusion-v1-4", "save_load_fn": "stable_fast"}

|
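The new `config.json` line stores several values as strings rather than native JSON types: unset options appear as the string `"None"`, and `compilers` is a string holding a Python-style list. The following is a minimal sketch of one way to read it back into native Python values. The helper name `load_smash_config` is hypothetical, and treating `"None"` as a null value is an assumption about how the file was written, not something the commit states.

```python
import ast
import json

# The added config.json line from this commit, copied verbatim.
CONFIG_LINE = '''{"pruners": "None", "pruning_ratio": "None", "factorizers": "None", "quantizers": "None", "n_quantization_bits": 32, "output_deviation": 0.0, "compilers": "['step_caching', 'tiling', 'diffusers2']", "static_batch": true, "static_shape": false, "controlnet": "None", "unet_dim": 4, "device": "cuda", "batch_size": 1, "max_batch_size": 1, "image_height": 512, "image_width": 512, "version": "1.4", "task": "txt2img", "weight_name": "None", "model_name": "CompVis/stable-diffusion-v1-4", "save_load_fn": "stable_fast"}'''


def load_smash_config(text):
    """Parse the config and decode string-encoded values (hypothetical helper)."""
    raw = json.loads(text)
    config = {}
    for key, value in raw.items():
        if value == "None":
            # Assumption: the string "None" stands for an unset option.
            config[key] = None
        elif isinstance(value, str) and value.startswith("["):
            # List stored as a Python literal inside a JSON string.
            config[key] = ast.literal_eval(value)
        else:
            config[key] = value
    return config


config = load_smash_config(CONFIG_LINE)
print(config["compilers"])
print(config["image_height"], config["image_width"])
```

`ast.literal_eval` is used instead of `eval` so that only plain literals are accepted from the file.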

model/optimized_model.pkl

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:9287aed9a24b58b9a5d619feebdb3567110d29741c66aa8af937f880704ffc43
+size 2743426179

|
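The diff for `model/optimized_model.pkl` changes a Git LFS pointer file, not the binary itself: the pointer records the spec version, the SHA-256 object ID, and the size in bytes. As a rough sketch, the pointer can be parsed and a downloaded file checked against it as shown here. The function names `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers written for illustration; they are not part of Git LFS or `huggingface_hub`.

```python
import hashlib

# The new pointer content from this commit, copied verbatim.
POINTER_TEXT = """\
version https://git-lfs.github.com/spec/v1
oid sha256:9287aed9a24b58b9a5d619feebdb3567110d29741c66aa8af937f880704ffc43
size 2743426179
"""


def parse_lfs_pointer(text):
    """Split a pointer file into its version, hash algorithm, digest, and size."""
    fields = {}
    for line in text.splitlines():
        if line.strip():
            key, _, value = line.partition(" ")
            fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


def matches_pointer(path, pointer, chunk_size=1 << 20):
    """Return True if the file at `path` has the pointer's size and SHA-256 digest."""
    hasher = hashlib.sha256()
    total = 0
    with open(path, "rb") as handle:
        # Read in chunks so a multi-gigabyte file is never held in memory at once.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
            total += len(chunk)
    return total == pointer["size"] and hasher.hexdigest() == pointer["digest"]


pointer = parse_lfs_pointer(POINTER_TEXT)
print(pointer["hash_algo"], pointer["size"])
```

Calling `matches_pointer("model/optimized_model.pkl", pointer)` on a local copy would then confirm that the download corresponds to this commit, assuming the file has been fetched through LFS and is not itself still a pointer.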

model/smash_config.json

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:3d8870f8c3dad4ed45677b4707444380dd1a84e0b05451a9f34bcc79048f9a67
+size 683

plots.png

CHANGED
